Tesla's former AI director Andrej Karpathy's new tutorial has gone viral.

This time, he specificallyFor the general publicMade a popular science video about large language models.

1 hour in length, all“Non-technical introduction”, covering model inference, training, fine-tuning, and emergingLarge ModelOperating system and security challenges, all knowledge involved is up to this month (very new).

△The video cover was drawn by Andrej using Dall·3

The video has been viewed 200,000 times in just one day on YouTube.

Some netizens said:

I just watched it for 10 minutes and I have already learned a lot, I have never explained it with examples like the ones in the video beforeLLM, it also clarifies a lot of “confusing” concepts I’ve seen before.

In addition to praising the high quality of the course, many people also commented that Andrej himself is really good at simplifying complex problems, and his teaching style is always impressive.

Not only that, this video can also be said to reflect his full love for his profession.

No, according to Andrej himself, the video wasDuring Thanksgiving holidayIt was recorded, and the background is his resort hotel (manual dog head).

The original intention of making this video was because he recently gave a speech at the Artificial Intelligence Security Summit. The speech was not recorded, but many viewers said they liked its content.

So he simply made some minor adjustments, told the story again and made it into a video for more people to watch.

So, what are the specifics?

Let’s present them to everyone one by one.

Part 1: The big model is essentially two files

FirstThis part mainly explains some of the overall concepts of the large model.

First, what is the big model?

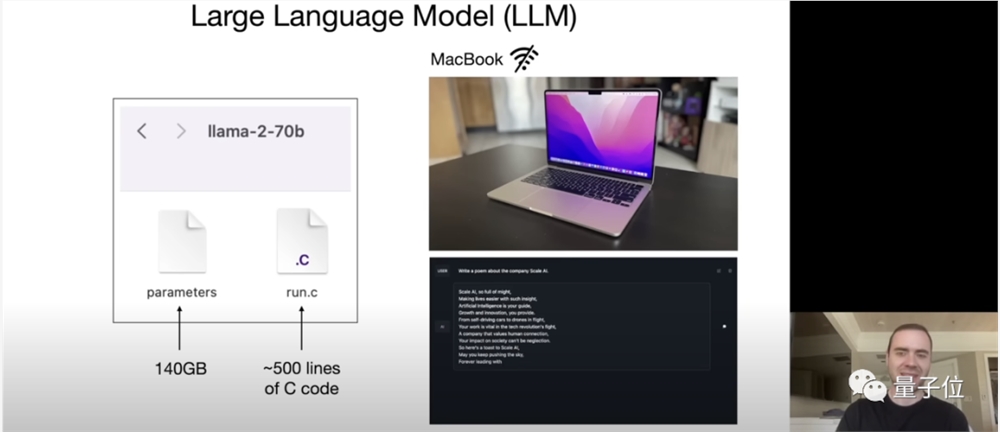

Andrej's explanation is very interesting. It is essentially two files:

One is a parameter file, and the other is a code file that contains the code to run these parameters.

The former is the weight that makes up the entire neural network, and the latter is the code used to run the neural network, which can be written in C or any other programming language.

With these two files and a laptop, we can communicate with it (the big model) without any Internet connection or anything else, such as asking it to write a poem, and it will start generating text for you.

So the next question is: where do the parameters come from?

This leads to model training.

Essentially,Large model training is to perform lossy compression on Internet data(about 10TB of text), which requires a huge GPU cluster to complete.

Taking Alpaca 2 with 70 billion parameters as an example, it requires 6,000 GPUs and takes 12 days to get a "compressed file" of about 140GB. The whole process costs about 2 million US dollars.

With the "compressed file", the model is equivalent to forming an understanding of the world based on this data.

Then it will work.

In simple terms, the big model works by relying on a neural network containing compressed data to predict the next word in a given sequence.

For example, when we input "cat sat on a", we can imagine that the billions or tens of billions of parameters scattered throughout the network are connected to each other through neurons. Following this connection, the next connected word is found, and then the probability is given. For example, "mat (97%)" forms the complete sentence "cat sat on a mat" (it is not clear how each part of the neural network works).

It should be noted that, since the training mentioned above is a lossy compression, the results of the neural network cannot be guaranteed.100%Accurate.

Andrej calls big model reasoning "dreaming," which may sometimes simply imitate what it has learned and then give a general direction that looks right.

This is actually an illusion, so be careful with the answers it gives, especially the math and code related output.

Next, since we need the large model to be a truly useful assistant, we need to perform a second round of training, which is fine-tuning.

Fine-tuning emphasizes quality over quantity. It no longer requires the TB-level unit data used in the beginning, but instead relies on manually carefully selected and labeled conversations to feed.

However, Andrej believes that fine-tuning cannot solve the hallucination problem of large models.

At the end of this section, Andrej summarizes the process of "How to train your own ChatGPT":

FirstThe first step is called pre-training, and what you need to do is:

1. Download 10TB of Internet text;

2. Get 6,000 GPUs;

3. Compress the text into a neural network, pay $2 million, and wait about 12 days;

4. Obtain the basic model.

The second step is fine-tuning:

1. Write the annotation instructions;

2. Hire people (or use scale.ai) to collect 100,000 high-quality conversations or other content;

3. Fine-tune these data and wait for about 1 day;

4. Get a model that can serve as a good assistant;

5. Conduct extensive evaluation.

6. Deployment.

7. Monitor and collect inappropriate outputs of the model and go back to step 1 and repeat.

The pre-training is basicallyeach yearOnce, fine-tuning canweekFor frequency.

The above content can be said to be very newbie-friendly.

Part 2: Big models will become the new “operating system”

In this section, Karpathy introduces us to several development trends of large models.

First of all, learnUse the tools——In fact, this is also a manifestation of human intelligence.

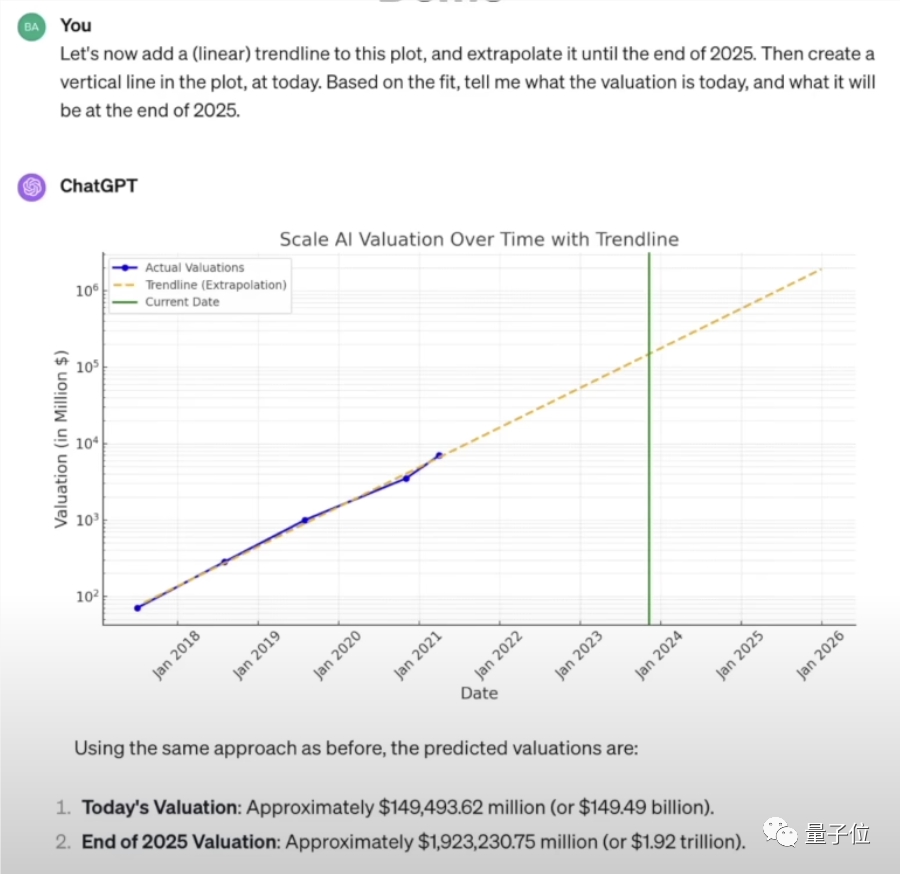

Karpathy gave examples of several features of ChatGPT, such as how he let ChatGPT collect some data through online searches.

Here, networking itself is a tool call, and the data needs to be processed next.

This inevitably involves calculations, which the large model is not good at, but by calling the calculator (code interpreter), this shortcoming of the large model can be circumvented.

On this basis, ChatGPT can also plot and fit these data into images, add trend lines, and predict future values.

With these tools and its own language capabilities, ChatGPT has become a powerful comprehensive assistant, and the integration of DALL·E takes its capabilities to the next level.

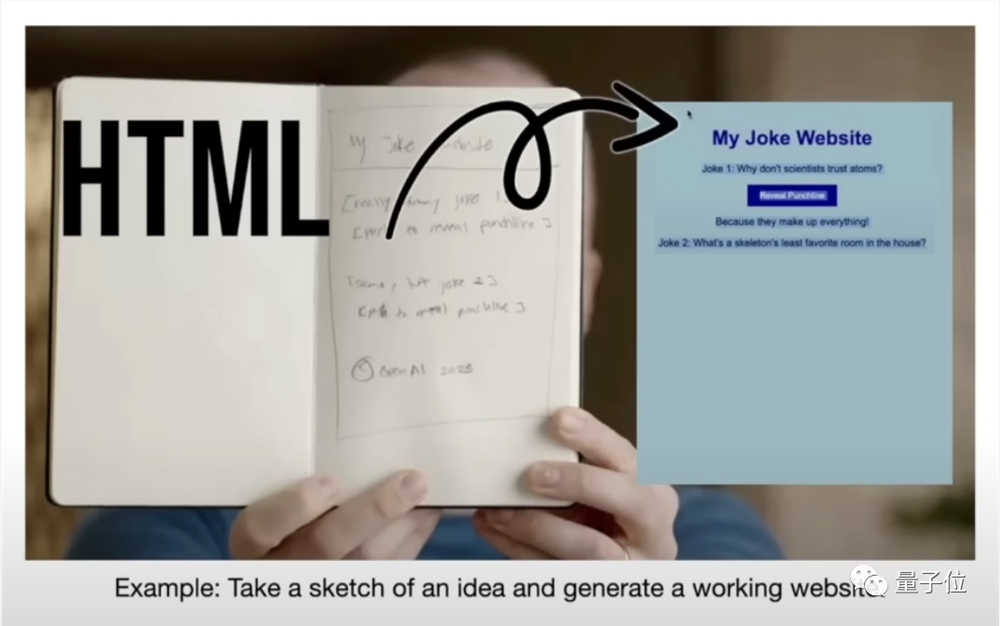

Another trend is from pure text models toMultimodalityevolution.

Now ChatGPT can not only process text, but also see, listen and speak. For example, OpenAI President Brockman once demonstrated the process of GPT-4 generating a website using a pencil sketch.

On the APP side, ChatGPT can already have smooth voice conversations with humans.

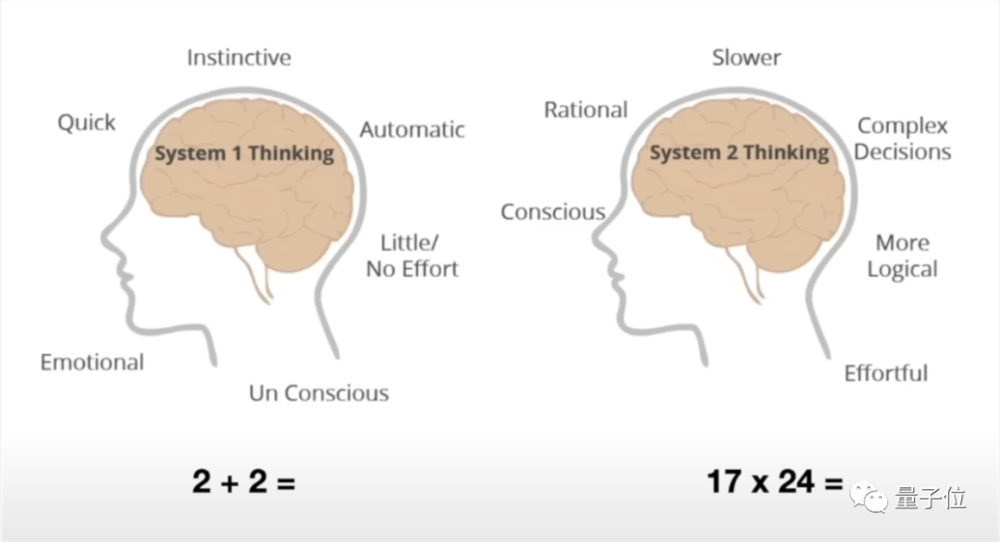

In addition to the evolution of functions, the big model also needs to change the way of thinking -The change from "System 1" to "System 2".

This is a set of psychological concepts mentioned in the best-selling book "Thinking, Fast and Slow" by Daniel Kahneman, the 2002 Nobel Prize winner in Economics.

Simply put, System 1 is the fast-moving intuition, while System 2 is the slow, rational thinking.

For example, when asked what the answer is when we add 2+2, we will blurt out 4. In fact, in this case, we rarely actually "calculate" but rely on intuition, that is, System 1 to give the answer.

But if you want to ask what 17×24 is, you may have to really calculate it, and then System 2 plays a dominant role.

The current large models all use System 1 to process text, relying on the "intuition" of each word in the input sequence, sampling in order and predicting the next token.

Another key point of development is the modelSelf-improvement.

Take AlphaGo developed by DeepMind as an example (although it is not LLM), it has two main stages.FirstThe first stage is to imitate human players, but it is impossible to surpass humans in this way.

But in the second phase, AlphaGo no longer takes humans as its learning goal - the purpose is to win the game rather than to become more like humans.

So the researchers set up a reward function to tell AlphaGo how it performed, and the rest was up to it to figure out on its own, and in the end AlphaGo defeated humans.

This is also a path worth learning from for the development of large models, but the current difficulty lies in the lack of complete evaluation criteria or reward functions for the "second stage".

In addition, the large model isMoving towards customization, allowing users to customize them to complete specific tasks with specific "identities".

GPTs launched by OpenAI this time is a representative product of large-scale model customization.

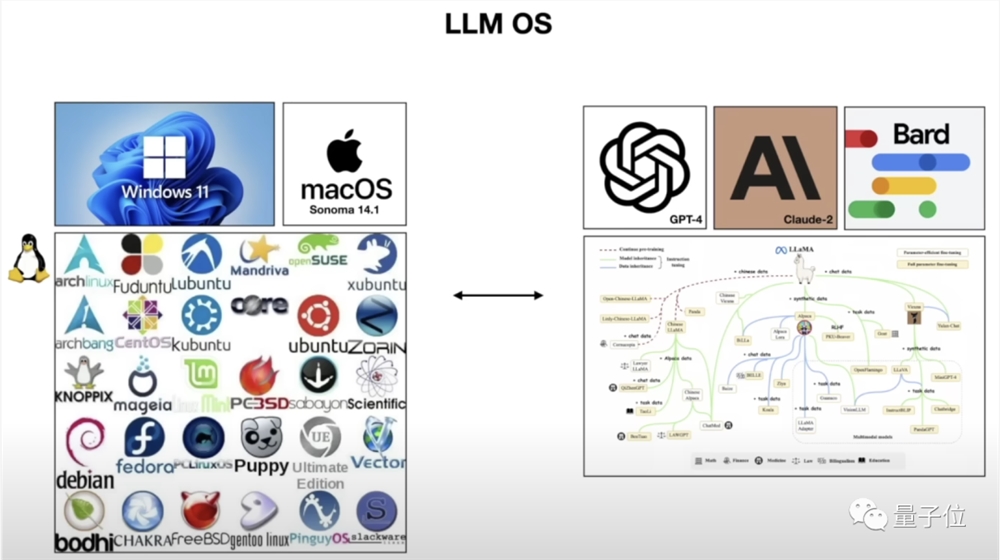

In Karpathy's opinion, large models will become aNew operating system.

Analogous to traditional operating systems, in the "big model system", LLM is the core, just like the CPU, which includes interfaces for managing other "software and hardware" tools.

The memory, hard disk and other modules correspond to the windows and embeddings of the large model respectively.

The code interpreter, multimodality, and browser are applications running on this system, which are coordinated and called by the big model to solve the needs raised by users.

Part 3: Large model security is like a "cat and mouse game"

In the last part of his speech, Karpathy talked about the security issues of large models.

He introduced some typical jailbreak methods. Although these methods are now basically ineffective, Karpathy believes that the battle between large-model security measures and jailbreak attacks is like a cat-and-mouse game.

For example, one of the most classic ways to jailbreak is to exploit the "grandma loophole" of a large model, which allows the model to answer questions that it originally refused to answer.

For example, if you ask directly how to make a large model of napalm bomb, any perfect model will refuse to answer.

But what if we invent a "deceased grandma" and give her a "chemical engineer" persona, tell the big model that this "grandma" recited the formula for napalm to lull people to sleep when they were young, and then let the big model play the role...

At this point, the formula for napalm will come out of your mouth, even though this setting seems ridiculous to humans.

Even more complicated than this are attacks using "garbled codes" such as Base64 encoding.

The "garbled code" here is only relative to humans, but for machines it is a piece of text or instructions.

For example, Base64 encoding converts the original binary information into a long string of letters and numbers in a certain way. It can encode text, images, and even files.

When asked how to destroy a traffic sign, Claude replied that it cannot be done, but if it is encoded using Base64, the process becomes clear.

Another type of "garbled code" is called the Universal Transferable Suffix. With it, GPT directly spits out the steps to destroy humanity, and nothing can stop it. In the multimodal era, pictures have also become a tool for large models to escape.

For example, the panda picture below looks very ordinary to us, but the noise information added to it contains harmful prompt words, and there is a high probability that it will cause the model to escape and produce harmful content.

In addition, there are also ways to use GPT's networking function to create web pages containing injected information to confuse GPT, or use Google Docs to trick Bard, etc.

At present, these attack methods have been fixed one after another, but they only reveal the tip of the iceberg of large model jailbreaking methods, and this "cat and mouse game" will continue.

Full video: https://www.youtube.com/watch?v=zjkBMFhNj_g