-

OpenAI Releases New Generation of Speech Models to Help AI Agents Speak More Naturally

March 21 news: OpenAI published a blog post yesterday (March 20) announcing new speech-to-text and text-to-speech models. The models aim to improve voice processing, help developers build more accurate and customizable voice interaction systems, and further the commercial use of AI voice technology. On the speech-to-text side, OpenAI has launched gpt-4o-transcribe and gpt-4o-mini-transcribe...

-

MiniMax Launches Hailuo Voice AI Product: Supports 17 Languages and Inputs of Up to 10,000 Characters

January 21 news: MiniMax announced yesterday that it has released its newly upgraded T2A-01 series of voice models and launched the Hailuo AI product globally. According to the company, Hailuo AI is built on the T2A-01 series and lets users generate natural, fluent, highly human-like speech from text. A single input can be up to 10,000 characters long. Users can also freely configure the emotion, speech rate, and pitch of the output voice, and even adjust its timbre, to meet the fine-grained needs of complex scenarios. 1AI notes that Hailuo Voice supports Chinese,...

-

Zhipu Qingyan Launches Emotional Voice Model GLM-4-Voice: Understands Emotions, Expresses Them, and Empathizes

Zhipu announced the launch of GLM-4-Voice, an end-to-end emotional voice model. According to the company, GLM-4-Voice has the following capabilities:

- It can understand emotions, express them, and respond with empathy.
- It adjusts its own speech rate.
- It supports multiple languages and dialects.
- It has lower latency and can be interrupted at any time.

Users can try it now in the "Zhipu Qingyan" app. The company highlights these features. Emotional expression and resonance: the voice conveys different emotions and subtle variations, such as happiness, sadness, anger, and fear. Speech rate adjustment: within the same conversation, users can ask it to speak faster or slower...

-

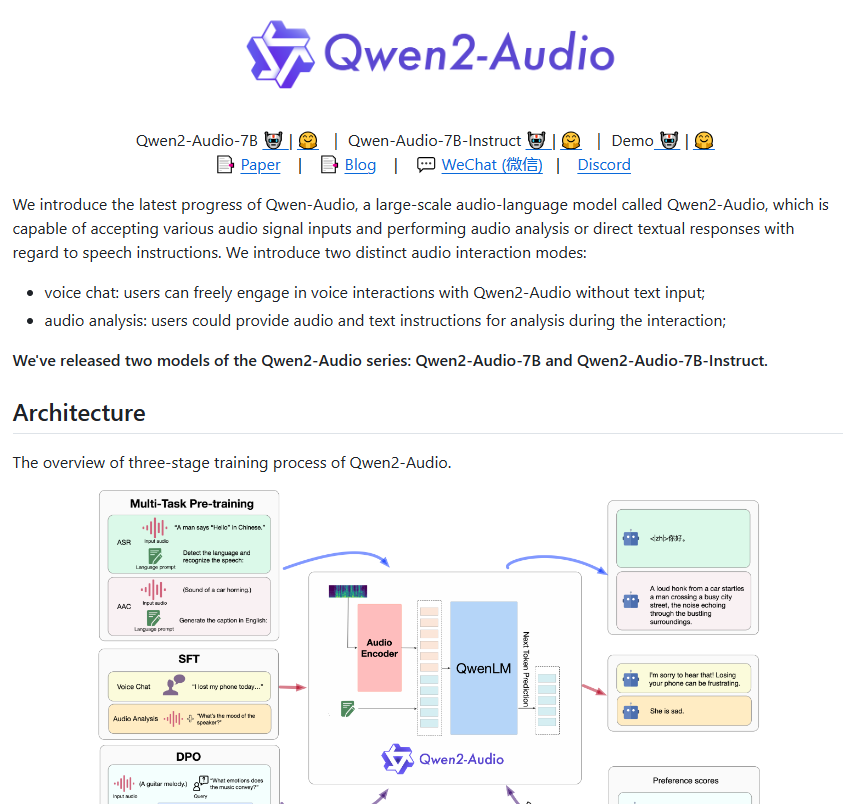

Alibaba Releases New Voice Model Qwen2-Audio, Surpassing OpenAI's Whisper

Recently, Alibaba released Qwen2-Audio, a new open-source voice model built on its earlier Qwen-Audio. The model performs well in speech recognition, translation, and audio analysis, and improves significantly on both functionality and performance. Qwen2-Audio comes in a base version and an instruction-tuned version. Users can ask the model questions about audio by voice and have it recognize and analyze the content. For example, a user can have a woman speak a passage, and Qwen2-Audio can estimate her age or analyze her emotions; if noisy audio is input…

-

Claimed to Outperform XTTS: VoiceCraft, a Voice Model That Supports Voice Cloning and Editing the Text of Existing Audio

Recently, a voice model called VoiceCraft has drawn wide attention in the industry. According to its developers, the model outperforms XTTS, which they present as a breakthrough in AI audio processing.

Project address: https://github.com/jasonppy/VoiceCraft

VoiceCraft's biggest highlight is its strong voice cloning ability. Given only a sample of original audio, it uses deep learning to generate new audio that closely resembles the original.