-

OpenAI Says It Has No Plans Yet to Launch an API for Its Video Generation Model Sora

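December 18 news: OpenAI said today that it currently has no plans to launch an application programming interface (API) for Sora, its video generation model, which can generate video from text and images. During an online Q&A session with members of OpenAI's developer team, Romain Huet, OpenAI's head of developer experience, stated plainly: "We currently have no plans to launch a Sora API." Previously, because traffic far exceeded expectations, OpenAI had to urgently shut down the Sora-based video…- 558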

-

ByteDance's Doubao for PC launches video generation feature; internal test users can generate ten videos per day for free

ByteDance's video generation model PixelDance has officially opened internal testing in the desktop version of Doubao, and some users already have access to the feature. The internal test page shows that users can generate ten videos per day for free. According to 1AI's previous report, the PixelDance video generation model was first released at the end of September and was initially offered through Jimeng AI and Volcano Engine as an invitation-only, small-scale test for creators and enterprise customers. According to early internal test creators, when PixelDance generates a 10-second video, the best results come from switching the camera 3-5 times, and the scenes and characters can maintain a good...- 718

-

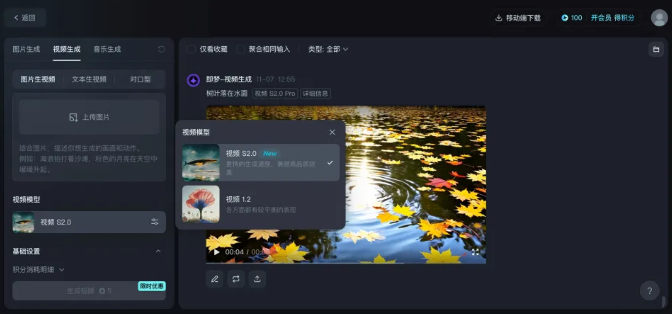

Jimeng AI Announces Open Access to Seaweed Video Generation Model

Jimeng AI recently announced that Seaweed, ByteDance's self-developed video generation model, is now officially open to platform users. After logging in, users can try the model by selecting "Video S2.0" under the "Video Generation" function. The Seaweed video generation model is part of the Doubao model family; with professional-grade lighting, composition, and color grading, its output is visually polished and realistic. Built on the DiT architecture, Seaweed can also render smooth, natural large-scale motion. Tests show that the model needs only 60s to generate a high-quality 5s AI video...- 3.1k

-

Alibaba's Tongyi Wanxiang video generation model officially launches its "AI video generation" feature: a large model that better understands Chinese style

At the 2024 Alibaba Cloud Apsara (Yunqi) Conference, Alibaba Cloud CTO Zhou Jingren announced that the company's newly developed AI video generation model, Tongyi Wanxiang AI video generation, is officially online and can be tried immediately on the official website and app. Alibaba has now joined China's AI video race as well. The Tongyi Wanxiang AI video generation model offers strong visual and motion generation capabilities, supporting a variety of artistic styles and film-grade video content. The model is optimized for Chinese elements, supports multilingual input and variable-resolution generation, suits a wide range of application scenarios, and provides free service...- 7.1k

-

ByteDance's Doubao Large Model to Release Video Generation Model on September 24

ByteDance's Volcano Engine announced that the Doubao large model will release a video generation model on September 24, along with further capability upgrades across the model family. The Doubao large model was officially released on May 15, 2024, at the Volcano Engine Force Conference. It comes in multiple versions, including professional and lightweight, to suit different scenarios. The professional version supports 128K long-text processing, with strong comprehension, generation, and logic capabilities for scenarios such as Q&A, summarization, creation, and classification. The lightweight version offers lower token cost and latency, providing enterprises with flexible...- 7.3k

-

Adobe Premiere Pro to Integrate Video Generation by Year-End

Adobe has announced that it will launch video generation capabilities powered by the Adobe Firefly Video model by the end of this year, which will be available in the Premiere Pro beta app and on a standalone Web site. Adobe says it is currently testing three features internally: Generative Extend, Text to Video, and Image to Video, and will be opening them up for public testing in the near future. Generative Extend...- 1.3k

-

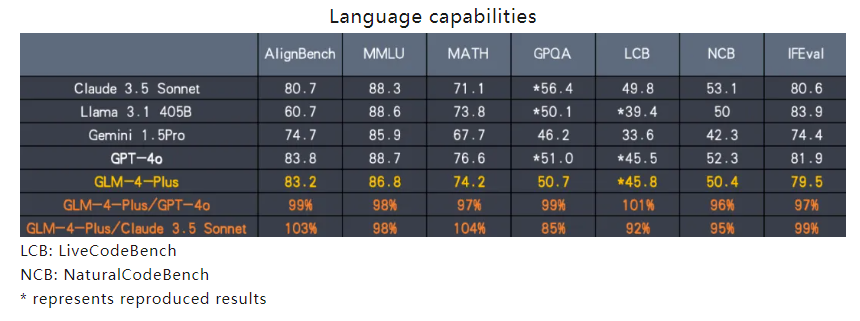

Zhipu AI releases GLM-4-Plus: comparable to GPT-4, with the first consumer-facing video call feature

Zhipu AI recently released its latest large model, GLM-4-Plus, which demonstrates powerful visual capabilities comparable to OpenAI's GPT-4, and announced that it will be open for use on August 30. Major update highlights: Language base model GLM-4-Plus: a qualitative leap in language understanding, instruction following, and long-text processing, keeping it in a leading position internationally. Text-to-image model CogView-3-Plus: performance comparable to the industry's top models, MJ-V6 and FLUX. Image/video understanding model GLM-4V-Plus...- 12k

-

Zhipu AI open-sources CogVideoX-5B video generation model, which can run on an RTX 3060 graphics card

On August 28, Zhipu AI open-sourced the CogVideoX-5B video generation model. Compared with the previously open-sourced CogVideoX-2B, Zhipu says its video generation quality is higher and its visual results are better. Zhipu also says that the model's inference performance has been greatly optimized and the hardware threshold for inference greatly lowered: CogVideoX-2B can run on older graphics cards such as the GTX 1080Ti, and CogVideoX-5B can run on mainstream desktop "sweet-spot" cards such as the RTX 3060. CogVideoX is a big...- 8.2k

-

Zhipu AI announces the open-sourcing of CogVideoX, a video generation model from the same lineage as "Qingying"

Zhipu AI announced today that it is open-sourcing CogVideoX, a video generation model with the same origin as "Qingying". According to reports, the CogVideoX open-source family includes multiple models of different sizes. For now, CogVideoX-2B is being open-sourced. It requires 18GB of video memory for inference at FP16 precision and 40GB of video memory for fine-tuning. This means that a single 4090 graphics card can perform inference, and a single A6000 graphics card can complete fine-tuning. The upper limit of the prompt of CogVideoX-2B is 226...- 5.9k

-

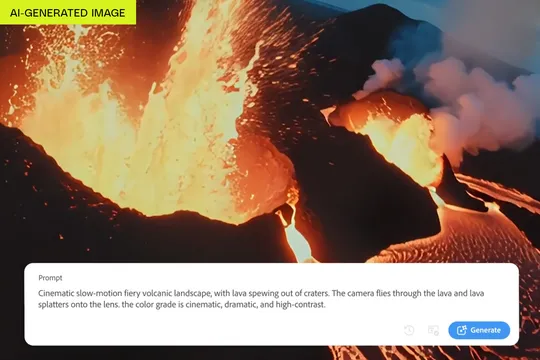

Runway releases third-generation video generation model, generating 10-second clips in 90 seconds

Runway, a company that builds generative AI tools for film and image content creators, has released its Gen-3 Alpha video generation model. Official Gen-3 Alpha announcement: https://runwayml.com/blog/introducing-gen-3-alpha/ Runway says that compared to its previous flagship video model, Gen-2, the model delivers a "major" improvement in generation speed and fidelity, as well as control over the structure, style, and motion of the generated video...- 5.6k

-

Musk comments on OpenAI's video generation model: "AI-enhanced humans will create the best works"

Earlier in the day, OpenAI released its latest video generation model, Sora, which generates a video based on a text description entered by the user. At the same time, OpenAI also released several demo videos, such as a stylish woman walking through the streets of Tokyo and woolly mammoths walking in the snow, which 1AI has reported on in detail. Elon Musk has since commented on OpenAI's new model several times. Musk replied to a Sora demo video posted by Twitter user "Bev Jassos" with the caption "gg Pixar," and then tweeted again this afternoon...- 2.9k

-

AI startup Runway launches "Motion Brush" feature to make your pictures move!

Recently, AI startup Runway announced that its video generation model Gen-2 has gained a "Motion Brush" feature, which the company describes as an important milestone in the controllability of the model. Users only need to paint over an area or subject in the image, then choose a direction and motion intensity for it, and Motion Brush adds controlled motion to the generated result. The feature is currently available as a free trial: users typically get 125 seconds of free credits, and each generation consumes about 4 seconds. Users can provide their own pictures or directly use pictures generated by Runway. The launch of this feature is for users...- 2.3k

-

Stability AI launches Stable Video Diffusion, a video generation model

Stability AI recently launched a video generation model called Stable Video Diffusion, which is based on the company's existing Stable Diffusion text-to-image model and can generate videos by animating existing images. Unlike most offerings from other AI companies, Stable Video Diffusion is one of the few video generation models available as open source. However, it should be noted that the model is currently in a "research preview" phase, and users must agree to specific terms of use that specify...- 1.7k
