-

Infinigence AI Open-Sources Megrez-3B-Omni, the World's First On-Device Omnimodal Understanding Model, Supporting Image, Audio, and Text Understanding

December 16 news: Infinigence AI today announced that it has open-sourced Megrez-3B-Omni, a small omnimodal understanding model from its on-device solution, together with its language-only version, Megrez-3B-Instruct. According to the company, Megrez-3B-Omni is an on-device omnimodal understanding model that can process image, audio, and text data at the same time. In terms of image understanding, Megrez-3B-Omni is currently one of the most accurate models on OpenCompass, MME, MMMU, O...

-

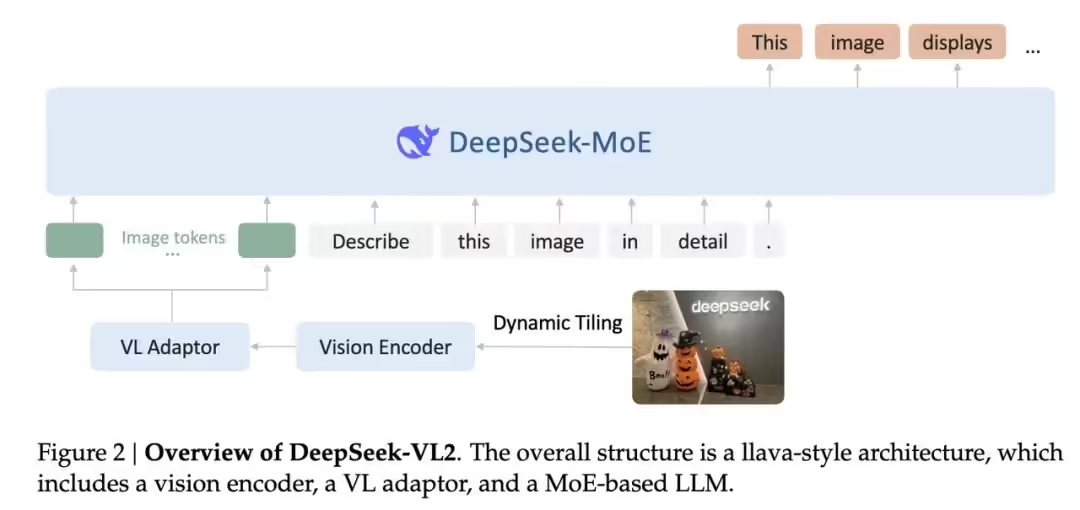

DeepSeek-VL2 AI Vision Model Open-Sourced: Supports Dynamic Resolution, Scientific Charts, and Parsing All Kinds of Memes

DeepSeek's official WeChat account published a blog post yesterday (December 13) announcing the open-source DeepSeek-VL2 model, which achieves highly competitive results across evaluation benchmarks; the company said its vision models have now entered the Mixture of Experts (MoE) era. Citing the official announcement, 1AI summarizes the highlights of DeepSeek-VL2 as follows: Data: twice as much high-quality training data as the first-generation DeepSeek-VL, plus new capabilities such as meme understanding, visual grounding, and visual storytelling...

-

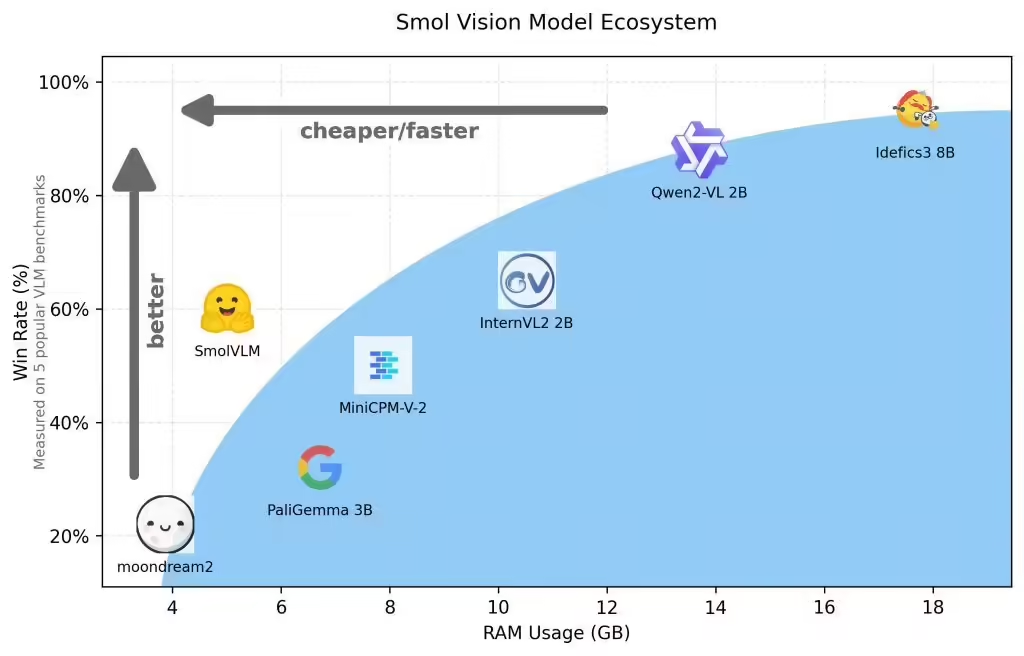

Hugging Face Releases SmolVLM Open-Source AI Model: 2 Billion Parameters for On-Device Inference, Small and Fast

Hugging Face published a blog post yesterday (November 26) announcing SmolVLM, an AI vision language model (VLM) with just 2 billion parameters for on-device inference that stands out from its peers thanks to its extremely low memory footprint. According to Hugging Face, SmolVLM is small, fast, memory-efficient, and fully open source, with all model checkpoints, VLM datasets, training recipes, and tools released under the Apache 2.0 license. SmolVLM AI ...

-

Alibaba's Tongyi Qianwen Releases Qwen2.5-Turbo AI Model: Supports 1-Million-Token Context, Processing Time Cut to 68 Seconds

November 19 news: Alibaba's Tongyi Qianwen team published a blog post yesterday (November 18) announcing the Qwen2.5-Turbo open-source AI model, the result of months of optimization and polishing in response to community requests for a longer context length. Qwen2.5-Turbo extends the context length from 128,000 to 1,000,000 tokens, roughly equivalent to 1 million English words or 1.5 million Chinese characters, enough to hold 10 complete novels,...

-

A Look at AI Virtual Digital Humans: An Inventory of Current Open-Source Digital Human Projects

Lately in the AI world, digital humans keep getting more lifelike, and every company is launching its own "strongest open-source" digital human. But with so many choices, how do you know which one suits you? You can't just say "me + difficulty = give up", right? I can't! As an enthusiast, I won't leave you facing that dilemma, so I've made a decisive move: I'm sharing, all at once, the digital-human integration packages I've posted before, with an inventory covering the results each achieves, the hardware it requires, generation time, and more, so you can see at a glance which current open-source digital human is strongest and pick the best one for you! Digital humans are hot, hot, hot! To say A...

-

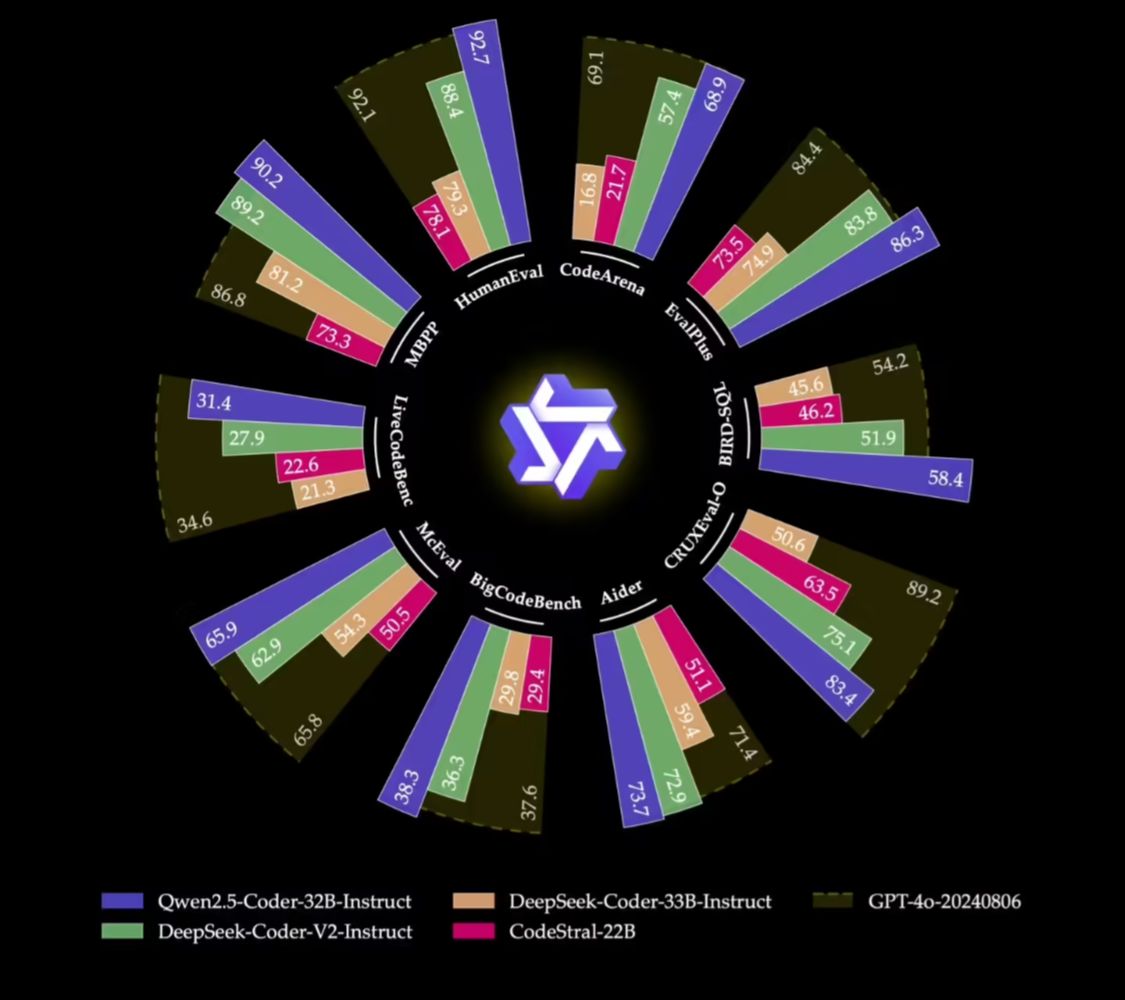

Alibaba's Tongyi Qianwen Open-Sources the Full Qwen2.5-Coder Family, Claiming Coding Ability on Par with GPT-4o

November 12 news: Alibaba's Tongyi Qianwen has open-sourced the full Qwen2.5-Coder series, in which Qwen2.5-Coder-32B-Instruct has become the current SOTA open-source model; the team claims its coding ability matches GPT-4o. As the open-source flagship, Qwen2.5-Coder-32B-Instruct, on several popular code generation benchmarks (such as EvalPlus, LiveCodeBench, and BigCodeBench)...

-

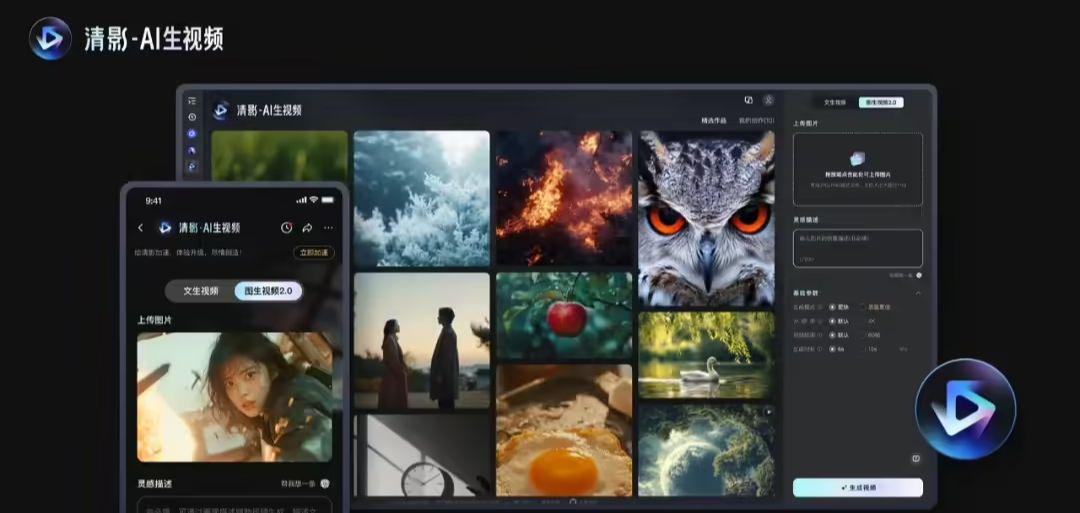

Say Goodbye to Silent Movies: Zhipu Launches the New Qingying, Generating 10-Second 4K 60fps Videos with Sound

Zhipu's technical team today released and open-sourced CogVideoX v1.5, the latest version of its video model. Compared with the original model, CogVideoX v1.5 adds 5- and 10-second, 768P, 16 fps video generation, and its I2V (image-to-video) model supports any aspect ratio, with significant improvements in image-to-video quality and complex semantic understanding. According to Zhipu, CogVideoX v1.5 will also be rolled out to the Qingying platform, and combined with the newly launched CogSound audio model, the "new Qingying" will offer the following features: Quality Improvement: In the ...

-

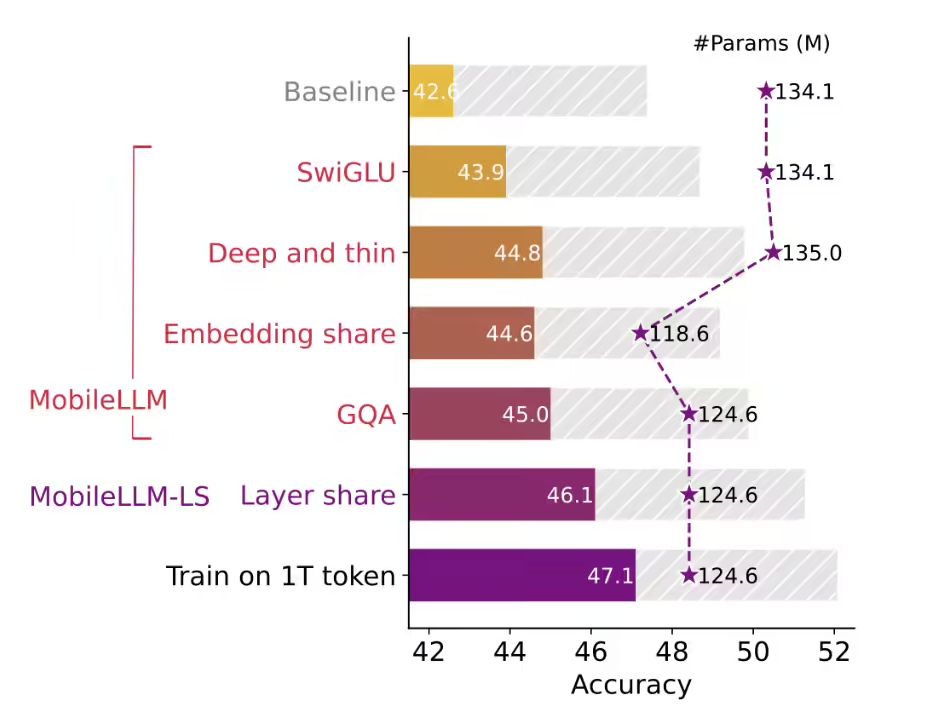

Meta Open-Sources the MobileLLM Family of Small Language Models: Smartphone-Friendly, 125M to 1B Versions Available

In a press release last week, Meta announced that it has officially open-sourced the MobileLLM family of small language models designed to run on smartphones, and has added three new parameter sizes to the family, 600M, 1B, and 1.5B, on the project's GitHub page. According to Meta researchers, the MobileLLM models, built for smartphones, feature a streamlined architecture and introduce "SwiGLU activation functions", "grouped-query attention", and ...

-

Tencent Launches Hunyuan-Large: 389B Total Parameters, the Industry's Largest Open-Source Transformer-Based MoE Model

Tencent announced the launch of the Hunyuan-Large model, the largest open-source Transformer-based MoE model in the industry, with 389 billion total parameters (389B) and 52 billion activated parameters (52B). Tencent has open-sourced Hunyuan-A52B-Pretrain, Hunyuan-A52B-Instruct, and Hunyuan-A52B-Instruct-FP8 on Hugging Face, and released...

-

ElevenLabs Launches Open-Source Mini-Project X-to-Voice: Turn a Twitter Account into a Personalized Avatar in One Click

AI company ElevenLabs recently released an open-source project called "X-to-Voice", a tool that analyzes Twitter user profiles and automatically generates digital voices and animated avatars that match each user's personality. The project combines several cutting-edge technologies: ElevenLabs' in-house voice design API handles voice generation, while the Hedra tool handles dynamic avatar creation. For technical support, the project uses Apify for profile and image data collection, Hedra for dynamic avatar...

-

World's First Open-Source AI Standard Released, Co-Developed by Microsoft, Google, Amazon, Meta, Intel, Samsung, and Other Giants

At the ALL THINGS OPEN 2024 conference at the end of this month, the Open Source Initiative (OSI) officially released version 1.0 of the Open Source AI Definition (OSAID), marking the birth of the world's first open-source AI standard. Founded in 1998, OSI is a global non-profit organization that aims to define and "steward" all things open source. The OSAID standard was co-designed by more than 25 organizations, including Microsoft, Google, Amazon, Meta, Intel,...

-

OpenAI Open-Sources New SimpleQA Benchmark to Cure Large Models of "Nonsense"

On October 31, OpenAI announced that it is open-sourcing a new benchmark called SimpleQA, which evaluates the factual accuracy of language models by measuring their ability to answer short, fact-seeking questions. One of the open challenges in AI is how to train models to generate factually correct answers. Current language models sometimes produce incorrect output or unsubstantiated answers, a problem known as "hallucination". Language models that generate more accurate answers with fewer hallucinations are more reliable and can be used...

-

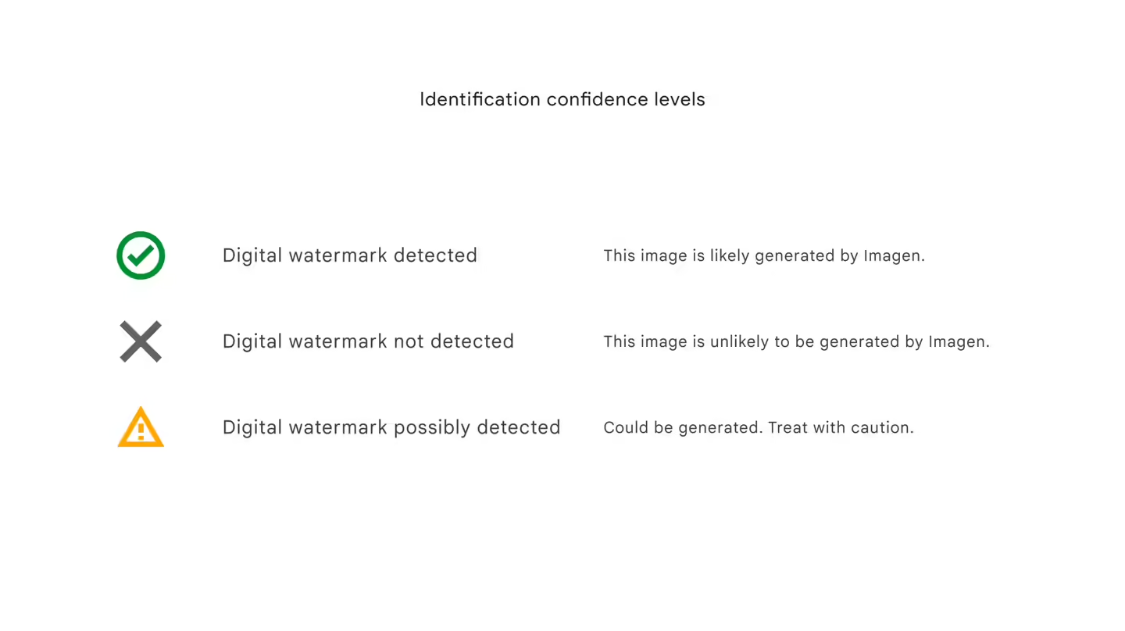

Google DeepMind Open-Sources SynthID Text Tool for Identifying AI-Generated Text

Google DeepMind announced on October 23 that it has officially open-sourced its SynthID Text watermarking tool for free use by developers and businesses. Google launched SynthID in August 2023; the tool can add watermarks to AI content (declaring that the work was created by AI) and identify AI-generated content. It can embed digital watermarks directly into AI-generated images, audio, text, and video without compromising the original content, and can also scan that content for existing digital water...

-

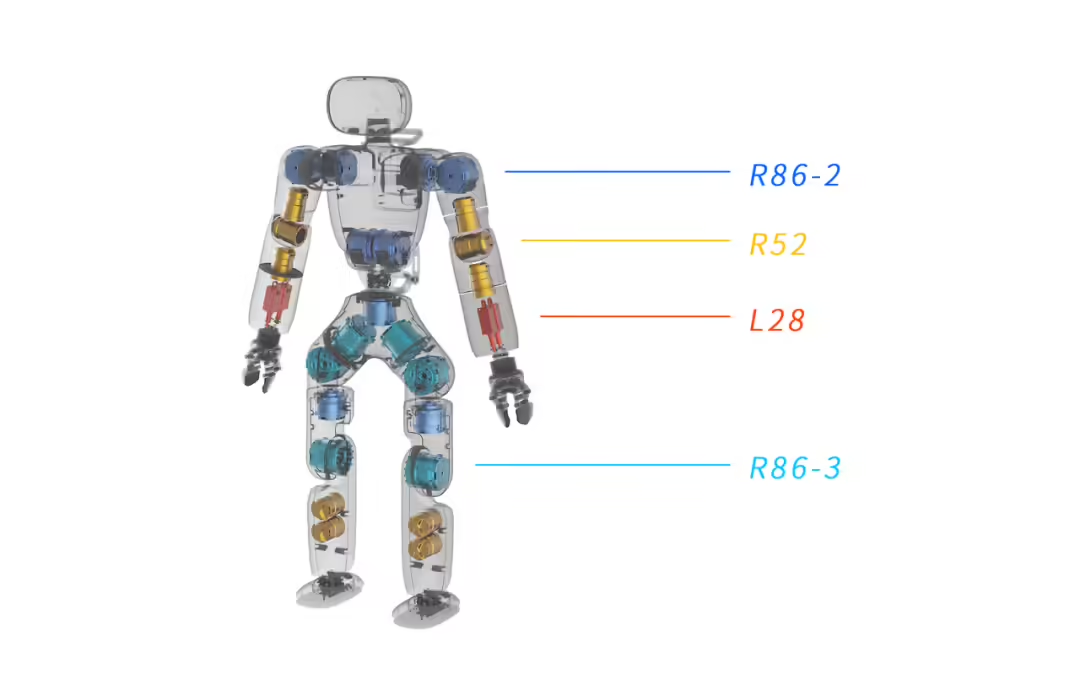

Zhiyuan Robotics Announces Global Open-Sourcing of Lingxi X1, a Project from Zhiyuan's Startup Program

October 24: Zhiyuan Robotics announced today that "Lingxi X1" has been officially open-sourced worldwide, with the complete hardware and software drawings and code published on GitHub and a development guide on the Zhiyuan Robotics website. According to Zhiyuan Robotics, as the industry's first company to open-source the drawings and code of a full-stack humanoid robot, it is providing "one-stop" software and hardware technical resources without reservation, totaling more than 1.2 GB of material. On the mechanical hardware side, the open-source content includes detailed drawings of the complete machine structure, hardware block diagrams, a bill of materials (BOM), and assembly instructions for the machine. ...

-

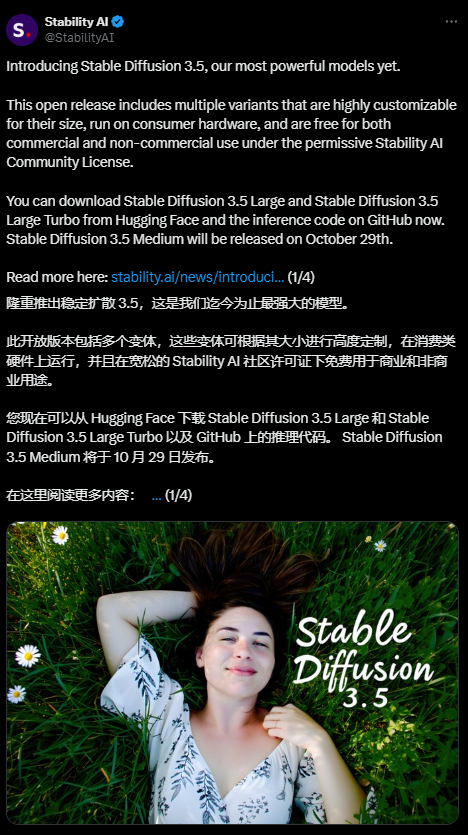

Major Open-Source Text-to-Image AI Release: The Stable Diffusion 3.5 Family Arrives, "Out-of-the-Box" on Consumer-Grade Hardware

In a blog post yesterday (October 22), Stability AI announced the release of Stable Diffusion 3.5, a significant advance in open-source AI image models. Stable Diffusion 3.5 comes in Medium (released on October 29), Large, and Large Turbo sizes, designed to meet the different needs of researchers, hobbyists, startups, and enterprises, as introduced below: Stable Dif...

-

Zhipu Open-Sources CogView3-Plus; Related Features Now Available in the Zhipu Qingyan App

October 14: Zhipu's technical team announced today that it has open-sourced the text-to-image models CogView3 and CogView3-Plus-3B, and the capabilities of this model series are now available in the Zhipu Qingyan app. According to the introduction, CogView3 is a text-to-image model based on cascaded diffusion, consisting of the following three stages. Stage 1: generate a 512x512 low-resolution image using the standard diffusion process. Stage 2: use a relay diffusion process to perform 2x super-resolution generation, from 512x512 ...

-

PearAI, Which Claims to Be the Open-Source Version of Cursor and Just Raised $500,000 (About 3.5 Million Yuan), Accused of Plagiarism

PearAI, an AI programming tool that describes itself as an "open-source version of Cursor", recently announced that it has received $500,000 (about 3.5 million yuan) in funding from Y Combinator. PearAI founder Duke Pan admitted that the product is actually a clone of another AI editor, Continue. While Continue itself is released under the Apache open-source license, PearAI tried to build on it with a homegrown closed-source license called "Pear Ent...

-

China Telecom AI Research Institute Completes China's First Trillion-Parameter Model Training on a Fully Domestic 10,000-Card Cluster, and Open-Sources TeleChat2-115B to the Public

On September 28, the official WeChat account of the China Telecom Artificial Intelligence Research Institute (hereinafter TeleAI) announced that TeleAI has successfully trained China's first trillion-parameter large model on a fully domestic 10,000-card (Wanka) cluster, and has formally open-sourced the Star Semantic Large Model TeleChat2-115B, the first 100-billion-parameter large model trained on a fully domestic 10,000-card cluster and a domestic deep learning framework. According to TeleAI, this achievement signifies that domestic large-model training has truly achieved full localization and formally entered a new stage of domestically produced, independently innovated, secure and controllable...

-

How to Play with Stable Diffusion, FLUX, and Other Open-Source AI Painting Models? Use a Cloud-Based Open-Source Model Painting Platform

AI painting can be said to be quite mature by now. The closed-source model Midjourney is easy to use and can produce photographic-quality work with awesome results. But it costs dozens of dollars a month, which adds up, and its extensibility is poor. If you need consistent characters and scenes, or want to build dedicated painting tools with workflows, open-source models are the undisputed kings (YYDS), such as the Stable Diffusion series and the recently popular Flux, which have been dominating the charts on media platforms. The appeal of open source lies in its extensibility and controllability; it can also be packaged into products with workflows, and its results keep approaching Mid...

-

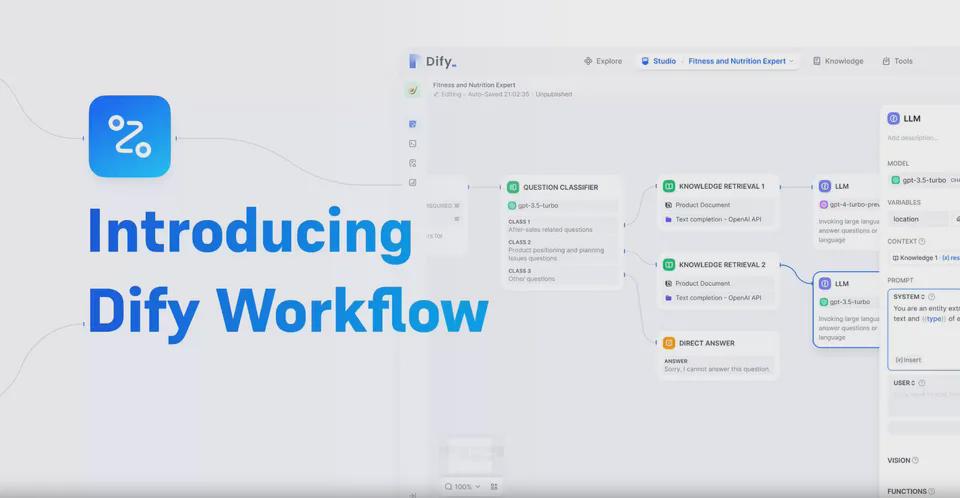

Get Started Easily with Dify, the Open-Source Large Model Development Platform: Combine Agents and RAG to Build Your Own AI Workbench

Dify is an open-source platform for building AI applications. Dify combines the Backend-as-a-Service and LLMOps concepts. It supports a variety of large language models, such as Claude 3 and OpenAI models, and works with multiple model vendors so that developers can choose the model best suited to their needs. Dify greatly reduces the complexity of AI application development by providing powerful dataset management, visual prompt orchestration, and application operations tools. I. What Is Dify ...

-

Llama 3.2, the Strongest Open-Source On-Device AI Model, Released: Runs on Phones, from 1B Text-Only to 90B Multimodal, Challenging OpenAI's GPT-4o mini

In a September 25 blog post, Meta officially launched the Llama 3.2 AI models, which are open and customizable so that developers can tailor them to their needs for edge AI and vision applications. Offering multimodal vision models and lightweight models, Llama 3.2 represents Meta's latest advances in large language models (LLMs), providing more power and broader applicability across a variety of use cases. The lineup includes small and medium-sized vision LLMs (11B and 90B) as well as lightweight text-only models (1B and 3B) suitable for edge and mobile devices...

-

Alibaba's Tongyi Qianwen Open-Sources Qwen2.5 Large Models, Claiming Performance Beyond Llama

At the 2024 Apsara Conference, Alibaba Cloud CTO Zhou Jingren released Qwen2.5, a new generation of open-source models, whose flagship, Qwen2.5-72B, is claimed to outperform Llama 405B. Qwen2.5 covers large language models, multimodal models, math models, and code models in multiple sizes, each with a base version, an instruction-following version, and quantized versions, for a total of more than 100 models released. Qwen2.5 language models: 0.5B, 1.5B, 3B, 7B, 14B, 32B, and ...

-

Mianbi Intelligence Releases the MiniCPM 3.0 On-Device Model: Runs with 2GB of Memory and Outperforms GPT-3.5

The official WeChat account of Mianbi Intelligence published a blog post yesterday (September 5) announcing the open-source MiniCPM3-4B AI model, claiming that "the on-device ChatGPT moment has arrived." The model can run on devices with only 2GB of memory, heralding a new era of on-device AI. MiniCPM 3.0 has 4B parameters, outperforms GPT-3.5, and can deliver GPT-3.5-level AI services on mobile devices. This allows users to enjoy fast, secure, and feature-rich...

-

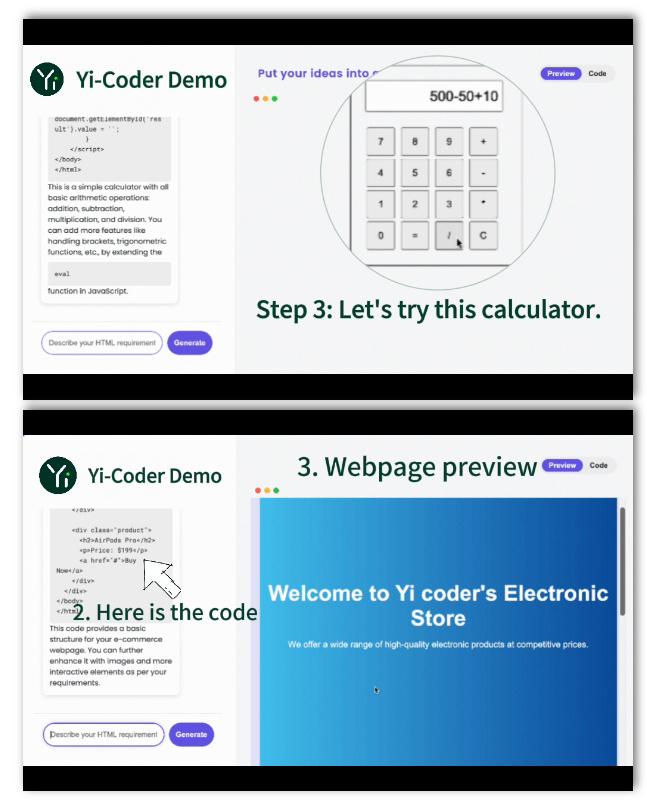

01.AI Open-Sources the Yi-Coder Series of Programming Assistant Models, Supporting 52 Programming Languages

01.AI announced today that it has open-sourced the Yi-Coder series, the programming assistants of the Yi model family. Designed for coding tasks, Yi-Coder comes in two parameter sizes: 1.5B and 9B. Yi-Coder-9B reportedly performs "better than other models under 10B parameters", such as CodeQwen1.5 7B and CodeGeeX4 9B, and is even "comparable to DeepSeek-Coder 33B". According to the introduction,…
