-

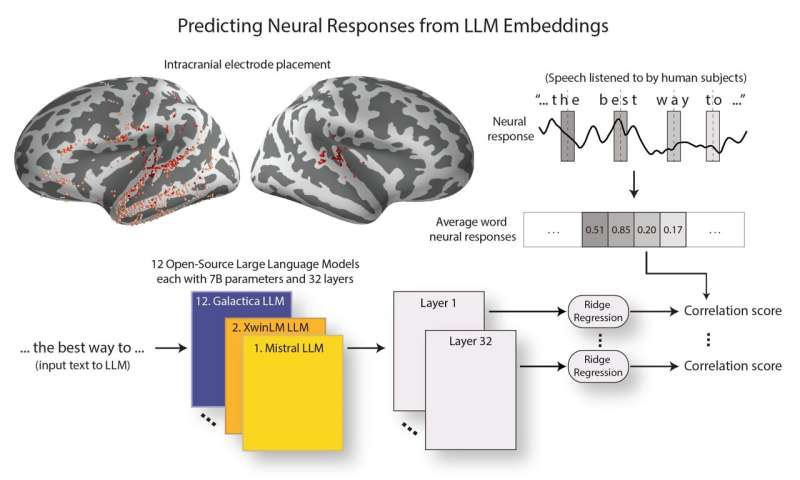

Columbia University Study: Large Language Models Are Becoming More Like the Human Brain

Dec. 20 - Large language models (LLMs) such as ChatGPT have become increasingly adept at processing and generating human language over the past few years, but the extent to which these models mimic the neural processes that support language processing in the human brain remains unclear. As reported by Tech Xplore on the 18th, a team of researchers from Columbia University and the Feinstein Institute for Medical Research recently conducted a study exploring the similarities between LLMs and neural responses in the brain. The study showed that as LLM technology advances, these models not only perform...- 445

-

Salesforce CEO: Large Language Models May Be Approaching a Technical Ceiling, AI's Future Is Agents

As Business Insider reported today, Salesforce CEO Marc Benioff recently said on the Future of Everything podcast that he believes the future of AI lies with autonomous agents (commonly known as "AI agents") rather than the large language models (LLMs) currently used to train chatbots like ChatGPT. "In fact, we may be approaching the technological upper limit of LLMs." Benioff mentioned that in recent years...- 1.2k

-

Honor MagicOS 9.0 Upgrade Supports a 3-Billion-Parameter On-Device Large Language Model: Power Consumption Drops by 80%, Memory Usage Reduced by 1.6GB

October 23 - Honor today officially released MagicOS 9.0, billed as "the industry's first personalized all-scenario AI operating system equipped with an agent". In MagicOS 9.0, the Magic large model family has been upgraded and supports flexible allocation of on-device and cloud resources as well as flexible deployment across different devices. The versions are as follows: a 5-million-parameter image model, deployed on-device, supported across the full range; a 40-million-parameter image model, deployed on-device, for the high-end series; a 3-billion-parameter large language model, deployed on-device, for the high-end series ...- 3.3k

-

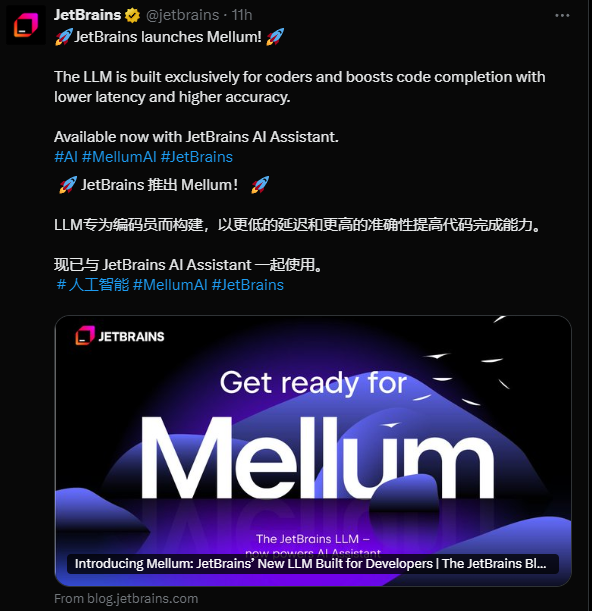

JetBrains Builds Mellum, the Most Powerful AI Assistant for Developers: Built for Programming, Low Latency, Fast Completion, High Accuracy

JetBrains published a blog post yesterday (October 22) announcing Mellum, a new large language model designed to provide software developers with faster, smarter and more context-aware code completion. According to JetBrains, Mellum's biggest highlight compared with other large language models is that it is built specifically for programming, offering low latency, high performance, and comprehensive functionality so that developers get relevant suggestions in the shortest possible time. Mellum supports Java, Kotlin, Python, Go and P...- 3.5k

-

MediaTek's next-generation Dimensity flagship chip optimized for Google Gemini Nano multimodal AI with support for image and audio processing

October 8 - MediaTek today announced that its new-generation Dimensity flagship chip is optimized for Google's large language model Gemini Nano. According to MediaTek, the upcoming Dimensity flagship chip is optimized for Google's Gemini Nano multimodal AI, supporting image and audio processing in addition to text. The new-generation Dimensity flagship chip is equipped with the eighth-generation MediaTek AI processor (NPU), which supports multimodal hardware acceleration for text, image, and voice. MediaTek's new-generation Dimensity flagship chip will be launched on October 9 at 10:30...- 1.6k

-

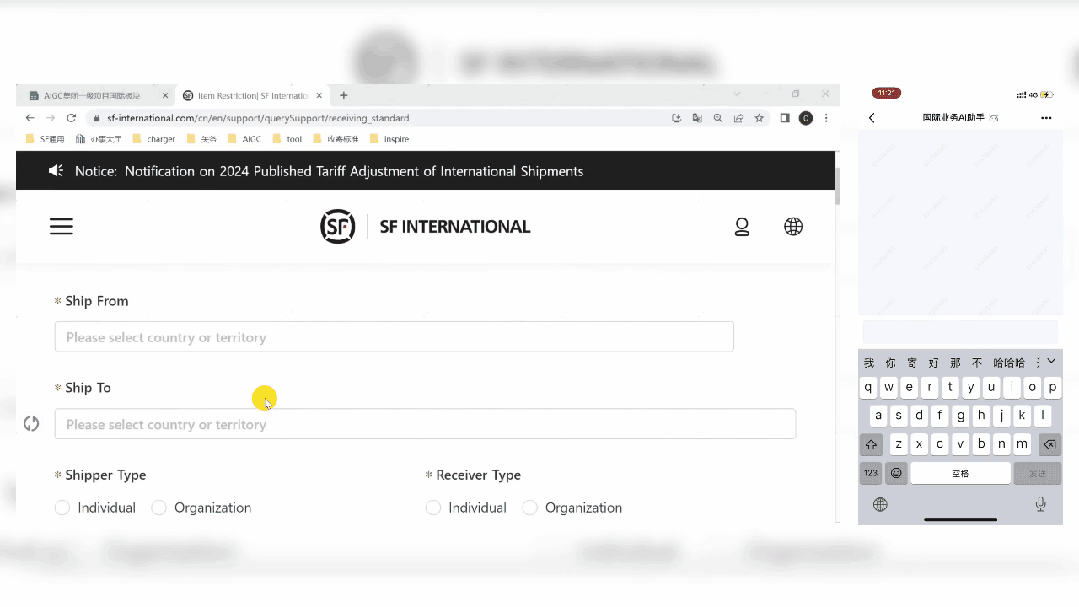

SF (Shunfeng) Releases "Feng Language" Large Language Model: Summary Accuracy Exceeds 95%, Claims Logistics Vertical Domain Capability Beyond General-Purpose Models

SF Technology yesterday released "Feng Language", a large language model for the logistics vertical domain, at the Shenzhen International Artificial Intelligence Exhibition. Jiang Shengpei, technical director for large models at SF Technology, said that SF developed the vertical-domain large language model in-house with the aim of balancing effectiveness against cost of use. About 20% of Feng Language's training data is vertical-domain data related to the logistics supply chain, drawn from SF and the wider industry. At present, the accuracy of summaries generated by the large model has exceeded 95%, and the average time customer service staff spend on processing after a conversation with a customer has been reduced by 30%; the accuracy of pinpointing couriers' problems has exceeded ...- 4.2k

-

Alibaba's Tongyi Qianwen launches the new domain name "tongyi.ai" and adds a deep search function to its web chat

Alibaba's large language model "Tongyi Qianwen" announced the launch of a new domain name, "tongyi.ai", along with a number of new features, summarized as follows: The web version of the chat adds a deep search function: it supports indexing more content sources, search results are more in-depth, professional and structured, and numbered citation markers display the source web page on hover. App image micro-motion effects support multi-size images: go to the Tongyi App channel page, select "Image Micro-motion Effects", and upload a picture to generate sound effects and a micro-motion video that match the picture. App custom singing and acting supports a 3:4 aspect ratio (originally 1:1): audio...- 2.8k

-

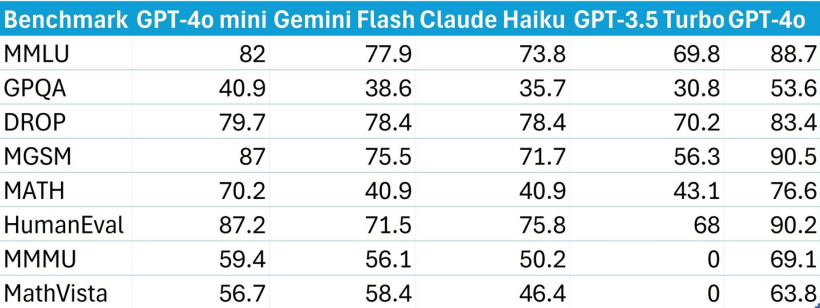

Will the AI large language model price war begin? Google lowers Gemini 1.5 Flash fees this month: the maximum reduction is 78.6%

Is the price war for large language models coming? Google has updated its pricing page, announcing that starting from August 12, 2024, the Gemini 1.5 Flash model will cost $0.075 per million input tokens and $0.3 per million output tokens (currently about RMB 2.2). This makes the cost of using the Gemini 1.5 Flash model nearly 50% cheaper than OpenAI's GPT-4o mini. According to calculations, Gemini …- 5.2k

-

OpenAI CEO admits that the alphanumeric naming of "GPT-4o mini" is problematic

As OpenAI launches its next-generation GPT large language model, GPT-4o Mini, CEO Sam Altman finally acknowledged the problem with its product naming. The GPT-4o Mini is advertised as more cost-effective than the non-Mini version, and is particularly suitable for companies to develop their own chatbots. However, this complex and cumbersome naming has sparked widespread criticism. This time, OpenAI CEO Sam Altman finally responded to the criticism, admitting that the combination of numbers and letters…- 2.4k

-

Cohere and Fujitsu launch Japanese large language model "Takane" to improve enterprise efficiency

Canadian enterprise AI startup Cohere and Japanese information technology giant Fujitsu recently announced a strategic partnership to jointly launch a Japanese large language model (LLM) called "Takane". The partnership aims to provide enterprises with powerful Japanese language model solutions to enhance the experience of customers and employees. According to the statement, Fujitsu has made a "significant investment" in this collaboration. Cohere will contribute the strengths of its AI models, and Fujitsu will contribute its Japanese training and tuning technology...- 1.7k

-

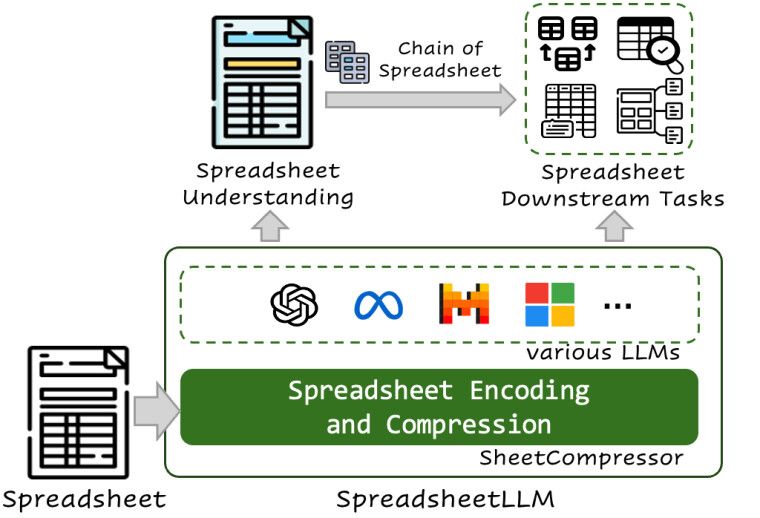

Microsoft develops new AI model for Excel and other applications: performance is 25.6% higher than conventional solutions, and token usage cost is reduced by 96%

According to a recent research paper published by Microsoft, the company plans to develop a new AI large language model, SpreadsheetLLM, for spreadsheet applications such as Excel and Google Sheets. The researchers said that existing spreadsheet applications are feature-rich and give users a large number of layout and formatting options, so traditional AI large language models struggle to handle spreadsheet processing scenarios. SpreadsheetLLM is an AI model designed specifically for spreadsheet applications. Microsoft has also developed SheetCom…- 6.4k

-

SenseTime's large language model application SenseChat is now open to Hong Kong users for free and supports Cantonese chat

SenseTime announced that its SenseChat mobile app and web version are now free for Hong Kong users. The service has already been launched in mainland China. SenseChat is based on the "Shangliang Multimodal Large Model Cantonese Version" launched by SenseTime in May this year. Relying on SenseTime's "daily update" language and multimodal capabilities, as well as its understanding of Cantonese, local culture and hot topics, users can chat with it directly in the Cantonese they know best, entering text or voice to ask questions, search for things, generate pictures, write copy, etc. IT Home attached an example as follows: Hong Kong Apple iPhone uses...- 6.5k

-

Shusheng·Puyu 2.5: InternLM2.5-7B model open-sourced, supports processing of million-word long documents

On July 3, 2024, Shanghai Artificial Intelligence Laboratory and SenseTime Technology, together with the Chinese University of Hong Kong and Fudan University, officially released the next-generation large language model Shusheng·Puyu 2.5 (InternLM2.5). The InternLM2.5-7B model has been open-sourced, and models of other sizes will be open-sourced in due course. Shanghai Artificial Intelligence Laboratory promises to continue to provide free commercial licenses and support community innovation through high-quality open source models. The model has significant improvements in the following aspects: Reasoning ability: InternLM2.5's reasoning ability has been significantly enhanced, and some dimensions have surpassed Llama3...- 11k

-

Bilibili will appear at the 2024 World Artificial Intelligence Conference and exhibit its self-developed large language model for the first time

At the 2024 World Artificial Intelligence Conference (WAIC 2024), Bilibili announced a number of self-developed AI technology achievements and a variety of AIGC creative works, including its latest customized AI voice library, the self-developed audio and video large model Bijian Studio, and self-developed AI dynamic comic technology. In addition, Bilibili's self-developed large language model series was exhibited for the first time at WAIC 2024, including the open source Index-1.9B chat and Index-1.9B chara…- 2.9k

-

Google releases Gemma 2 open source AI model with 9/27 billion parameters: performance is better than its peers, and can be run on a single A100/H100 GPU

Google issued a press release yesterday announcing the release of the Gemma 2 large language model to researchers and developers around the world. It comes in two sizes: 9 billion parameters (9B) and 27 billion parameters (27B). Compared with the first generation, the Gemma 2 large language model offers higher inference performance and higher efficiency, and has made significant progress in safety. Google stated in the press release that the performance of the Gemma 2-27B model is comparable to mainstream models of twice the size, and only requires an NVIDIA H100 Tensor Core GPU or TPU main...- 3.3k

-

Which of the domestic AI models do you think is the best? 12 AI language models that can replace ChatGPT

What are some excellent large language models in China that may replace ChatGPT? While learning about large language models, I compiled study notes on 12 domestic models, including each model's name, R&D team, access link, model list, and introduction. Since each company's model lineup and pricing change over time, please note that this article was recorded from June 5 to June 6, 2024. Moonshot official website https://www.moonshot.cn/ Introduction to the language model used by Kimi smart assistant…- 49.7k

-

Nature magazine study: AI's ability to track other people's mental states is comparable to or even better than humans

A research paper on AI published this month in the latest issue of Nature Human Behaviour reports that, in tasks testing the ability to track the mental states of others, two types of AI large language models perform similarly to or even better than humans in certain situations. The ability to track mental states (also known as theory of mind) is key to human communication and empathy and is very important for human social interaction. The first author of the paper, James WA Str of the University Medical Center Hamburg-Eppendorf in Germany…- 3.2k

-

Tencent plans to invest in Moonshot AI (Dark Side of the Moon), with a valuation of $3 billion

According to foreign media reports, Tencent is in investment negotiations with artificial intelligence developer Moonshot AI (Dark Side of the Moon). The investment may raise Moonshot AI's valuation to $3 billion. With the booming development of China's artificial intelligence industry, Moonshot AI has attracted much attention as a leading startup. Its leading position in the field of large language models has drawn the interest of giants such as Tencent. The start of these investment negotiations means that Tencent will join this fiercely competitive field. The company is reported to have been established for only a little more than a year, but it has already completed...- 2.6k

-

Xiaomi's AI large model MiLM has officially passed the filing and will be gradually applied to products such as automobiles, mobile phones and smart homes

According to the official Weibo account of "Xiaomi Company", Xiaomi's large language model MiLM today officially passed the large model filing. The model is said to be gradually rolling out to Xiaomi cars, mobile phones, smart home devices and other products, and will be "open to more users to experience" in the future. Xiaomi's MiLM large model first appeared on the C-Eval and CMMLU large model evaluation leaderboards in August last year. At that time, the model ranked 10th on the overall C-Eval list and first among models of the same parameter scale. According to the information given on the GitHub project page, MiLM-6B was developed by Xiaomi...- 2.8k

-

DeepL launches Write Pro AI writing assistant based on self-developed large language model

DeepL, a machine learning translation company known for its eponymous translator, recently announced the launch of DeepL Write Pro, an AI writing assistant. The assistant is the first service powered by DeepL's self-developed large language model. DeepL said that unlike traditional generative AI tools and rule-based grammar correction tools, DeepL Write Pro provides creative assistance during the user's drafting process, using AI to provide real-time word choice, wording, style and tone suggestions to improve the quality of the text. Specifically, the tool can…- 1.6k

-

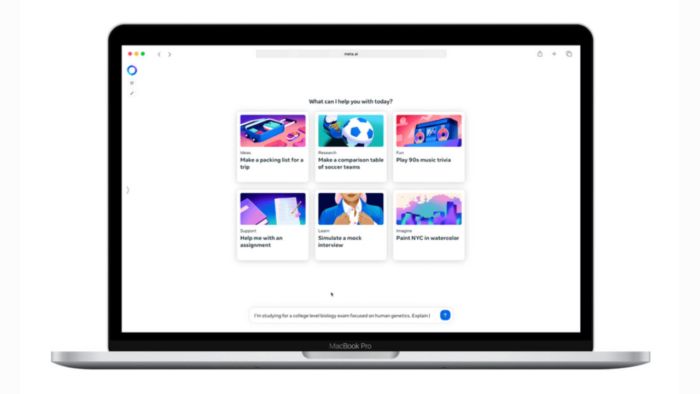

Meta AI expands global market and launches web version meta.ai

Meta recently announced that, in addition to the Llama 3 large language model, Meta AI services have been expanded to 13 countries and regions outside the United States. It also announced the launch of a dedicated chat website: meta.ai. In a press release, Meta stated that it has begun to expand Meta AI in the global market and launched English versions in countries and regions such as Australia, Canada, South Africa and Singapore. The countries and regions that Meta AI has expanded to are as follows: Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore…- 1.6k

-

Meta releases Llama 3, the most powerful open source large language model

Meta released a press release today, announcing the launch of the next generation large language model Llama 3, which has two versions with 8 billion and 70 billion parameters, claiming to be the most powerful open source large language model. Meta claims that Llama 3 outperforms Claude Sonnet, Mistral Medium and GPT-3.5. IT Home attaches the main features of Llama 3 as follows: Open to everyone: Meta open-sources the 8 billion parameter version of Llama 3, allowing everyone to access…- 2.4k

-

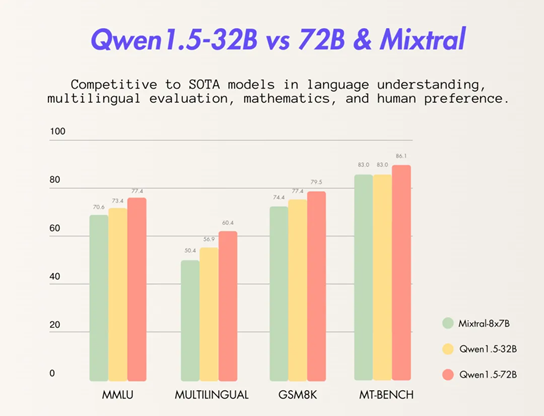

Alibaba Tongyi Qianwen open-sources 32 billion parameter model, bringing its open-source lineup to 7 large language models

On April 7, Alibaba Cloud Tongyi Qianwen open-sourced the 32 billion parameter model Qwen1.5-32B. IT Home noted that Tongyi Qianwen had previously open-sourced 6 large language models with 500 million, 1.8 billion, 4 billion, 7 billion, 14 billion, and 72 billion parameters. The 32 billion parameter model open-sourced this time will achieve a more ideal balance between performance, efficiency, and memory usage. For example, compared with Tongyi Qianwen's 14B open-source model, 32B has stronger capabilities in intelligent agent scenarios; compared with Tongyi Qianwen's 72B open-source model, 32B's reasoning cost is…- 2.3k

-

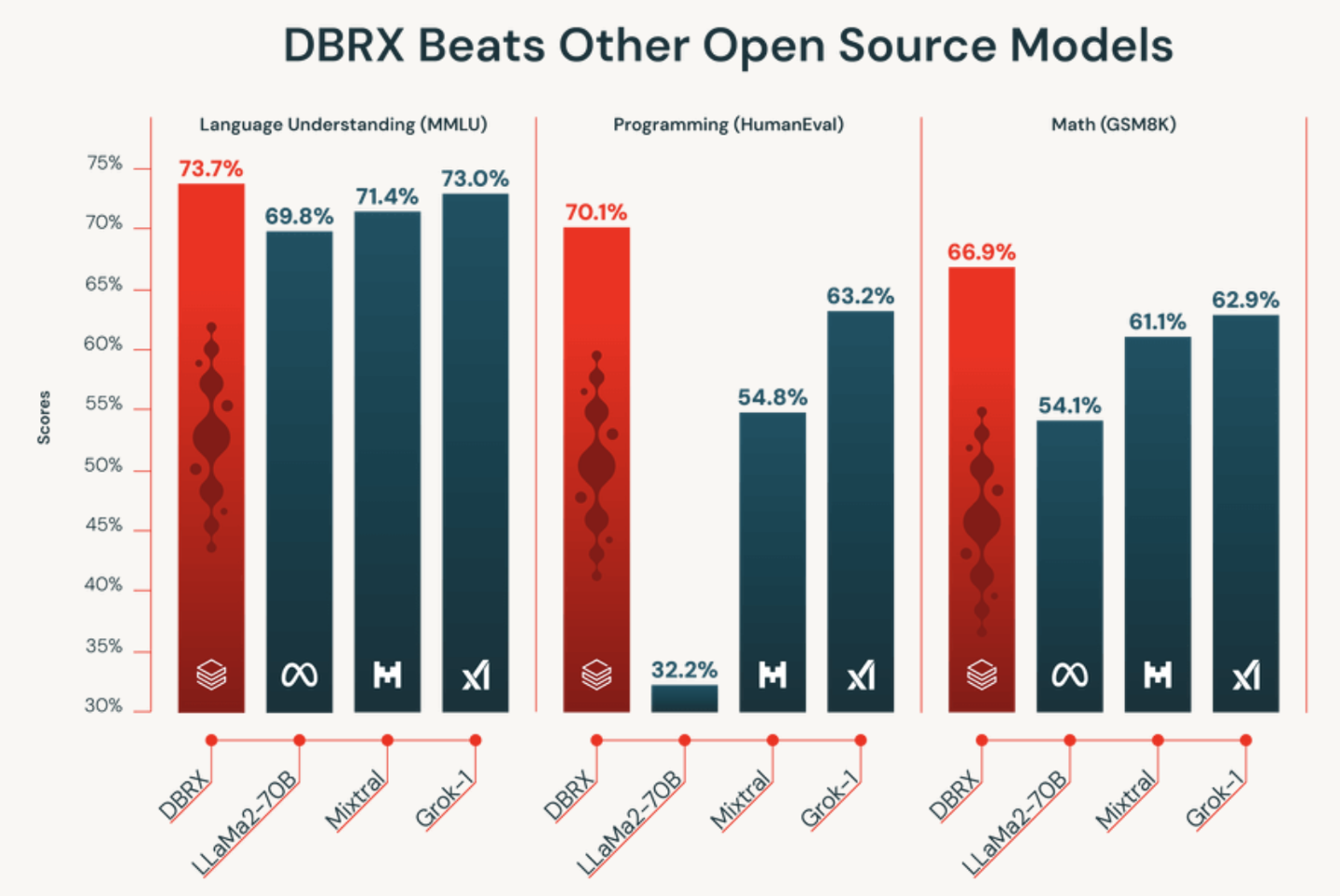

Databricks launches DBRX, a 132 billion parameter large language model, known as "the most powerful open source AI at this stage"

Databricks recently launched DBRX, a general-purpose large language model that it claims is "the most powerful open source AI currently available" and that is said to have surpassed "all open source models on the market" in various benchmarks. According to the official press release, DBRX is a Transformer-based large language model that uses the MoE (Mixture of Experts) architecture, has 132 billion parameters, and was pre-trained on 12T tokens of data. Researchers tested this model and found that compared with the market...- 2.2k
