-

Zhipu AI's First Free Multimodal Model, GLM-4V-Flash, Goes Live with Image Description Generation, Visual Q&A, and More

Following the release of its free language model GLM-4-Flash in August, Zhipu AI today launched its first free multimodal model, GLM-4V-Flash. The model builds on the capabilities of the 4V series and improves image-processing accuracy. According to the announcement, GLM-4V-Flash supports image description generation, image classification, visual reasoning, visual question answering (VQA), and image sentiment analysis, and supports Chinese, English, Japanese, Korean, German...

-

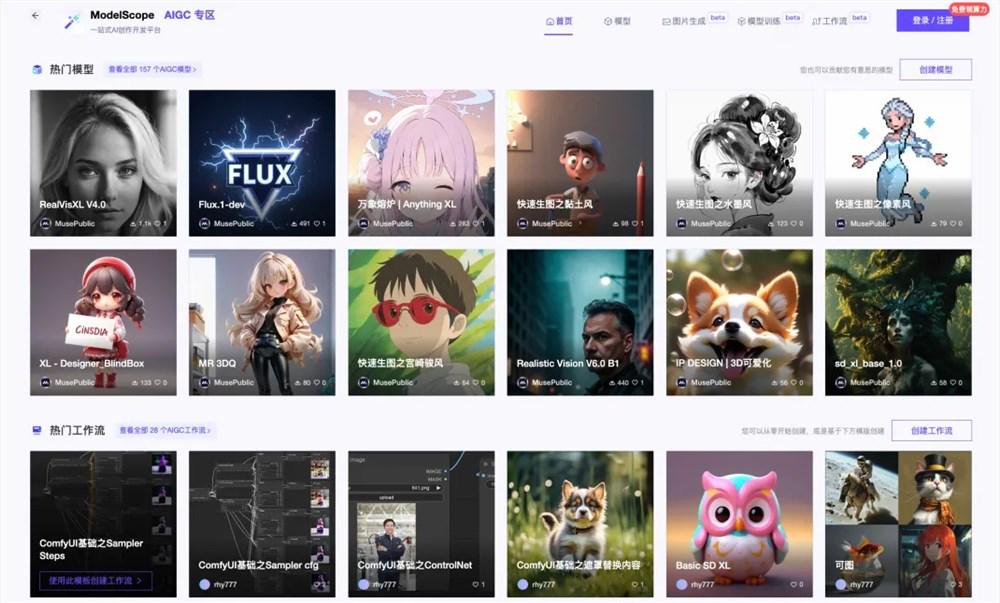

Alibaba Cloud's ModelScope Community Launches AIGC Zone with an Initial 157 Multimodal Models

On September 21, 2024, Alibaba announced a number of technology and business developments at the Apsara Conference (Yunqi Conference) in Hangzhou. Among them, the ModelScope community officially launched its AIGC zone, which aims to give developers a comprehensive platform for AI creation and development. The platform currently offers all of its feature modules and GPU compute for free, and an initial batch of 157 selected multimodal models is available, including popular community models and a variety of stylized LoRA models contributed by designers. On the security side, Alibaba Cloud announced a full upgrade of its cloud-native security capabilities, releasing its cloud-native network detection and response (NDR) product for the first time, and at the same time promising...

-

Google launches Gemini 1.5 Pro, a powerful multimodal model that ranks ahead of GPT-4o and Claude-3.5 Sonnet

Google today launched its latest AI model, Gemini 1.5 Pro, and made an experimental “version 0801” available through Google AI Studio and the Gemini API for early testing and feedback. The new model quickly topped the well-known LMSYS Chatbot Arena leaderboard (built with Gradio) with an Elo score of 1300. This result puts Gemini 1.5 Pro ahead of OpenAI’s GPT…

-

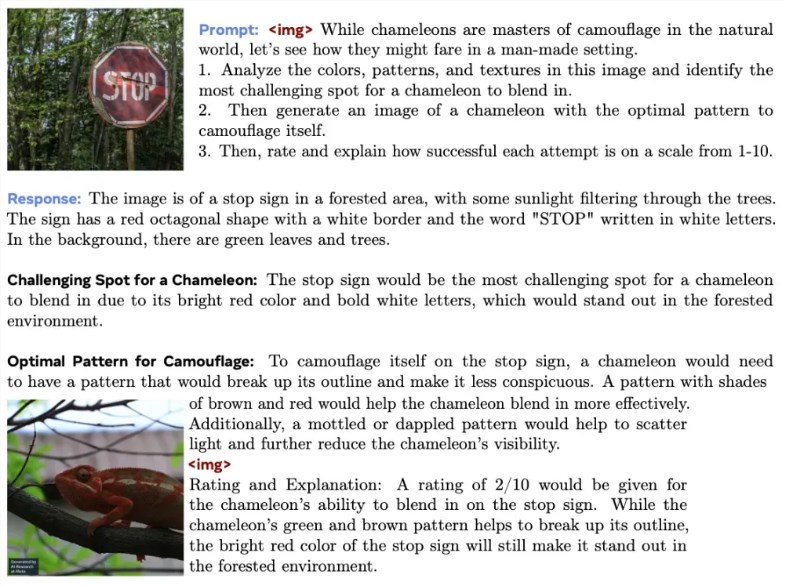

Meta releases Chameleon, a GPT-4o-like multimodal model

Meta recently released Chameleon, a multimodal model that sets a new benchmark for multimodal model development. Chameleon is a family of early-fusion, token-based mixed-modal models that can understand and generate images and text in any order. It uses a unified Transformer architecture trained on mixed-modal data spanning text, images, and code, and it tokenizes images so that it can generate interleaved text and image sequences. Chameleon's key innovation is its early-fusion approach, in which all processing pipelines are synchronized from the beginning...

ModelBest's open-source MiniCPM 2.0 series significantly improves OCR and other capabilities

ModelBest (Mianbi Intelligence) has launched its latest flagship on-device models, the MiniCPM 2.0 series, with the following highlights: 1. MiniCPM-V 2.0 is described as the strongest on-device multimodal model, with powerful OCR capabilities, some of which are comparable to Gemini Pro. Using the company's self-developed high-definition image decoding technology, it can accurately recognize a wide range of complex image content, including street scenes and long images. 2. MiniCPM-1.2B is a base model better suited to on-device scenarios, and its performance exceeds that of many mainstream models,…

-

Musk's xAI releases Grok-1.5 Vision, a multimodal model that can process text and image information

The development of multimodal models has long been a focus of the AI industry. Musk's xAI recently released its latest multimodal model, Grok-1.5 Vision, which can process text and also understand and analyze a variety of visual data, such as documents, charts, screenshots, and photos, marking an important step for the company. Grok-1.5 Vision has performed well on multiple benchmarks. Compared with the industry-leading GPT-4V model, it is not only comparable, but even achieves multiple indicators...

-

Musk's xAI demonstrates its first multimodal model, Grok-1.5V, which can convert flowcharts into Python code

After launching the Grok-1.5 large language model in late March, Musk's artificial intelligence company xAI recently introduced its first multimodal model, Grok-1.5 Vision. xAI said it will soon invite early testers and existing Grok users to try Grok-1.5 Vision (Grok-1.5V). The model can understand text and also process the content of documents, charts, screenshots, and photos. xAI said: "Grok-1.5V has excellent performance in multidisciplinary reasoning, document understanding, scientific charts, table processing...

-

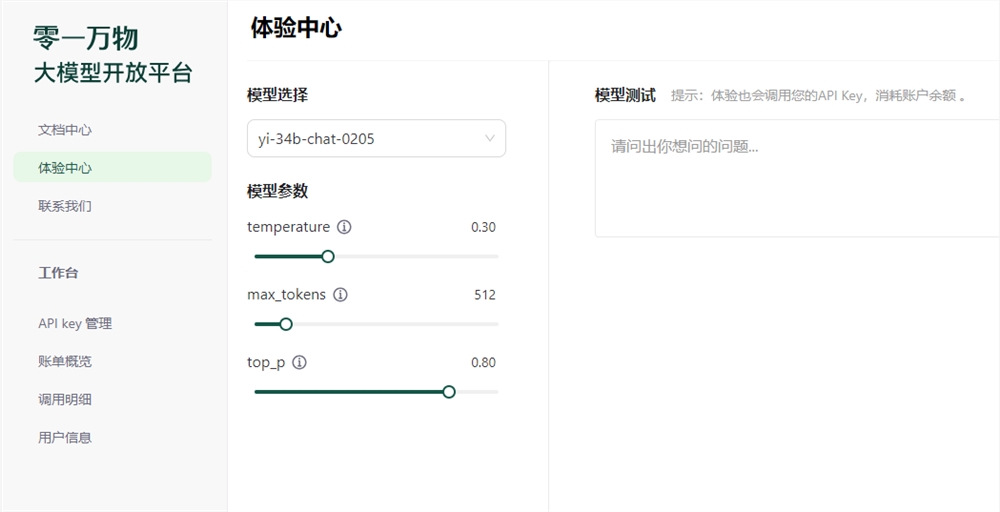

01.AI opens its API, with multimodal Chinese chart understanding that surpasses GPT-4V

01.AI (Zero One Everything) recently opened its API to developers, offering three models. The first is Yi-34B-Chat-0205, which supports general chat, Q&A, dialogue, writing, and translation. The second is Yi-34B-Chat-200K, which can handle multi-document reading comprehension and build very long knowledge bases. The third is the Yi-VL-Plus multimodal model, which accepts text and visual input and, according to the company, surpasses GPT-4V on Chinese chart understanding. Opening these models is expected to support a wider range of application scenarios and a more active ecosystem. Address: https:…

-

Shengshu Technology's "Multimodal Large Model" officially completes regulatory registration

Shengshu Technology's "Multimodal Large Model" recently completed official registration under the national "Interim Measures for the Management of Generative Artificial Intelligence Services". Founded in March 2023, Shengshu Technology describes itself as a world-leading artificial intelligence company that independently develops general-purpose multimodal large models. It offers MaaS (model as a service) and application-level products for art design, game production, film and television animation, social entertainment, and other fields. In March 2023, the team adopted a native multimodal technology route and released and open-sourced UniDiffuser, the world's first multimodal model based on the U-ViT architecture, successfully converting the diffusion model for the first time...

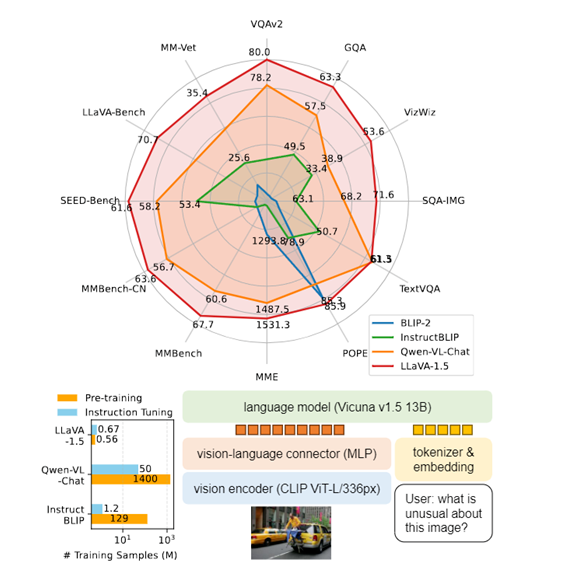

Open-source multimodal model LLaVA-1.5, co-developed by Microsoft Research, is comparable to GPT-4V

Microsoft Research and university collaborators have open-sourced the multimodal model LLaVA-1.5, which inherits the LLaVA architecture and introduces new features. Researchers tested it on visual question answering, natural language processing, image generation, and other tasks, and found that LLaVA-1.5 reaches the highest level among open-source models, with results comparable to GPT-4V. The model consists of three parts: a visual model, a large language model, and a vision-language connector. The visual model is the pre-trained CLIP ViT-L/336px. CLIP encoding yields a fixed-length vector representation, which improves how image semantics are represented. Compared with the previous version…
