-

China Academy of Information and Communications Technology (CAICT) officially launches drafting of technical specifications for multimodal agents

March 10 news: according to People's Financial News, the China Academy of Information and Communications Technology (CAICT) has officially launched the drafting of technical specifications for multimodal agents, aiming to accelerate the use of agents in industrial applications and promote the high-quality development of multimodal agents. To refine the specifications and strengthen industry exchange, CAICT's Artificial Intelligence Research Institute will hold a multimodal agent technology salon and technical specification seminar on March 13, inviting industry experts to discuss development trends and real-world deployment of multimodal agent technology and to introduce the framework of the technical specifications. 1AI noted that in May last year, the...- 1.5k

-

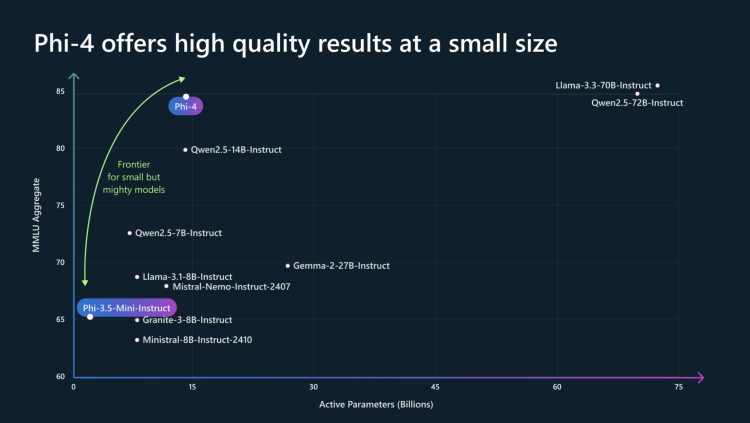

Microsoft Launches Phi-4-multimodal and Phi-4-mini Models, Unifying Speech, Vision, and Text

February 27 news: Microsoft released Phi-4, a small language model (SLM) that excels in its class, in December 2024. Today, Microsoft extends the Phi-4 family with two new models: Phi-4-multimodal and Phi-4-mini. Phi-4-multimodal is Microsoft's first multimodal language model with a unified architecture, integrating speech, vision, and text processing in 5.6 billion parameters. In multiple benchmarks...- 1.8k

-

Microsoft open-sources multimodal AI agent foundation model "Magma": it can place shopping orders automatically and predict the behavior of characters in videos

February 26 news: early this morning Beijing time, Microsoft open-sourced Magma, a multimodal AI agent foundation model, on its official website. Compared with traditional agents, Magma has multimodal capabilities that span the digital and physical worlds and can automatically handle different types of data such as images, video, and text. Magma also has built-in psychological prediction capabilities that enhance its understanding of the spatial and temporal dynamics of future video frames, allowing it to accurately infer the intentions and future behavior of people or objects in a video. Users can use Magma to automatically place e-commerce orders, check sky...- 1.2k

-

Zhipu GLM-PC Opens for Public Trial: An Upgraded Multimodal Agent That Operates Computers Autonomously

January 23 news: Beijing Zhipu Huazhang Technology Co., Ltd. today announced that Zhipu GLM-PC is open for public trial, describing it as an upgrade to its multimodal agent that operates a computer autonomously. According to the announcement, GLM-PC is the world's first computer agent open to the public, built on CogAgent, Zhipu's large multimodal model. GLM-PC v1.0 was released on November 29, 2024 and open to the public...- 2.5k

-

Moonshot AI Releases Kimi k1.5 Multimodal Thinking Model with SOTA-Level Multimodal Reasoning Capabilities

Moonshot AI announced on January 20 the launch of Kimi's new SOTA model, the k1.5 multimodal thinking model, which achieves SOTA (state-of-the-art) level multimodal reasoning and general reasoning capabilities. According to the company, in short-CoT mode, Kimi k1.5's mathematical, coding, visual multimodal, and general capabilities substantially outperform those of the leading short-thinking SOTA models GPT-4o and Claude 3.5 Sonnet, leading by as much as 5...- 6.3k

-

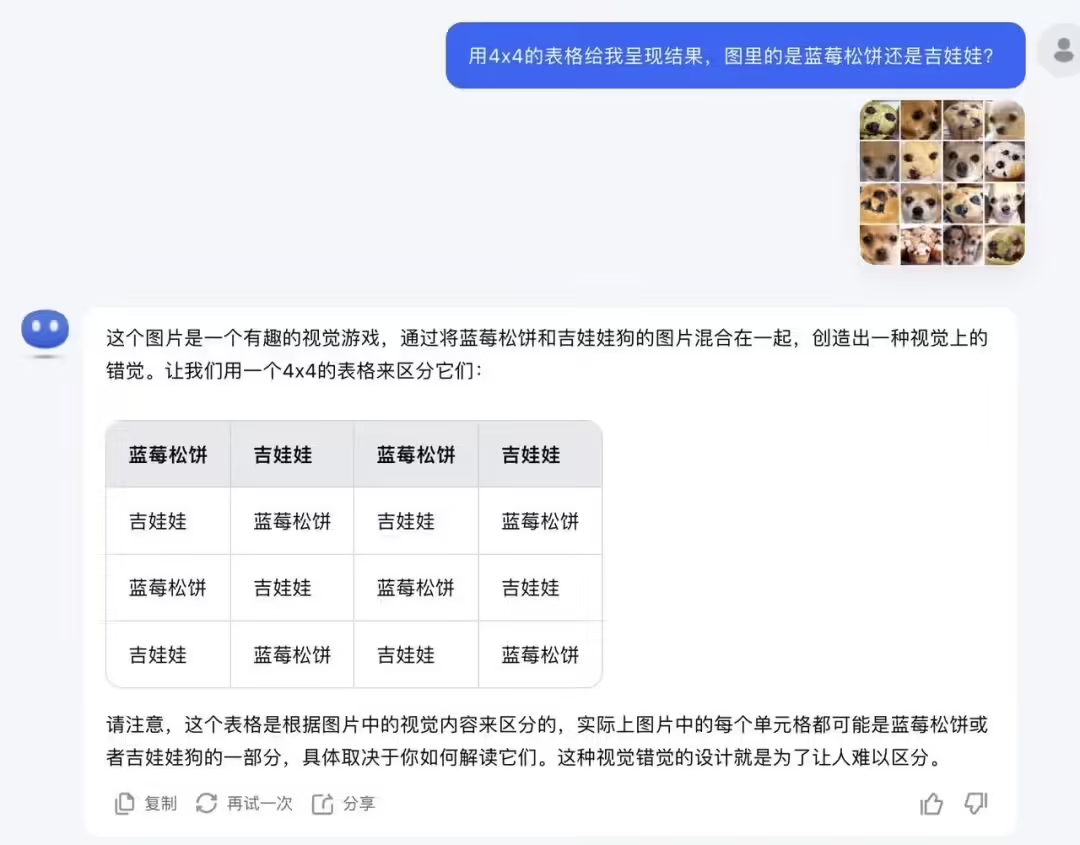

Kimi Multimodal Image Understanding Model API Released, 1M tokens priced from $12

January 15 news: Moonshot AI today released the Kimi multimodal image understanding model API. The new model, moonshot-v1-vision-preview (hereinafter the "Vision model"), rounds out the multimodal capabilities of the moonshot-v1 model series. Model capabilities: Image recognition: the Vision model can recognize complex details and nuances in an image, whether the subject is food or an animal, and can distinguish between objects that are similar but not identical. ...- 3.1k

-

Multimodal AI powers cancer treatment, more accurately predicting cancer recurrence probability, survival rates and more

January 15 news: A research team from Stanford Medical School has developed an AI model called MUSK, which combines medical images and text data to accurately predict the prognosis and treatment response of cancer patients. Note: prognosis is a medical term for the estimated likely outcome after treatment, based on the patient's current condition together with knowledge of the disease (clinical manifestations, laboratory results, imaging, etiology, pathology, and typical course), as well as the timing and method of treatment and any new developments during it. The highlight of the MUSK model is the breakthrough...- 2.7k

-

Google Releases Multimodal Live API: Unlocking Watching, Listening, and Speaking for a New AI Audio and Video Interaction Experience

Google released Gemini 2.0 yesterday along with a new Multimodal Live API to help developers build apps with real-time audio and video streaming capabilities. The API enables low-latency, bi-directional text, audio and video interactions with audio and text output for a more natural, smooth, human-like interactive experience. Users can interrupt the model at any time and interact with it by sharing camera input or screen recordings to ask questions about the content. The model's video comprehension feature extends the communication model...- 3.2k

-

Samsung's multimodal AI model Gauss 2 debuts to empower the Galaxy Intelligent Ecosystem

In a blog post today (October 21), Samsung announced the launch of its second-generation generative AI model, Samsung Gauss2, at a developer conference in South Korea. The multimodal language model can process multiple data types such as text, code, and images simultaneously, providing a significant boost in performance and efficiency. Gauss2 is available in three sizes (Compact, Balanced, and Supreme) to suit different computing environments and application scenarios, which are briefly summarized by IT Home as follows...- 2.8k

-

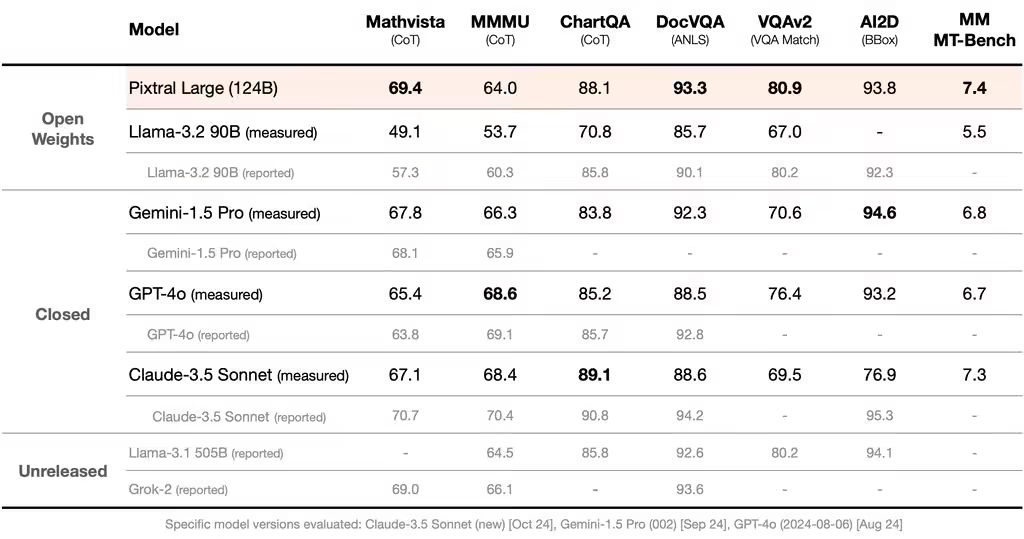

Mistral Releases Pixtral Large Multimodal AI Model: Outperforms GPT-4o on Complex Math Reasoning and Diagram/Document Reasoning

Nov. 19 - Mistral AI announced yesterday, Nov. 18, a new multimodal AI model, Pixtral Large, with 124 billion parameters, based on Mistral Large 2, and designed primarily for processing text and images. Pixtral Large is now available under the Mistral Research License and Commercial License for research, education, and commercial use. Pixtral Large is a Mistral ...- 2.5k

-

The open source multimodal behemoth is here! Meta will launch the Llama 3 405B model on July 23

Meta is about to make a big move again. The company is preparing to release an open source language model called Llama 3 405B, which is not only its largest model to date but also the largest open source language model in history. This behemoth, with a staggering 405 billion parameters, can work across images and text, overturning the old pattern of text-only processing. Key points: Meta will release Llama 3 405B, a multimodal model with 405 billion parameters, on July 23. The decision to open source Llama 3 405B and its weights may completely…- 8.8k

-

Google launches multimodal VLOGGER AI: making static portraits move and "talk"

Google recently published a blog post on its GitHub page introducing the VLOGGER AI model. Users only need to provide a portrait photo and an audio clip, and the model can animate the person in the photo so that they speak the audio content with matching facial expressions. VLOGGER AI is a multimodal diffusion model designed for virtual portraits. It is trained on the MENTOR dataset, which contains more than 800,000 portraits and more than 2,200 hours of video, allowing VLOGGER to generate different...- 3.5k

-

Back in the game! Gemini-Pro's multimodal capabilities are on par with GPT-4V

A recent Gemini-Pro evaluation report shows that the model has made significant progress in the multimodal field, reaching a level comparable to GPT-4V and even surpassing it in some respects. First, on the dedicated multimodal benchmark MME, Gemini-Pro surpassed GPT-4V in overall performance with a score of 1933.4, showing its overall strength in perception and cognition. Across the 37 visual understanding tasks, Gemini-Pro performed especially well on text translation, color/landmark/person recognition, and OCR, showing its strong basic perception abilities. …- 3.6k

-

Gemini: An AI assistant developed by Google for writing, planning, and learning

Gemini is a new generation of artificial intelligence system launched by Google DeepMind. It is capable of multimodal reasoning and supports seamless interaction between text, images, video, audio, and code. Gemini has surpassed the previous state of the art in many areas such as language comprehension, reasoning, math, and programming, making it one of the most powerful AI systems to date. Gemini is available in three sizes to meet needs ranging from edge computing to the cloud, and can be used in applications including creative design, writing assistance, question answering, and code generation. Gemini features: Writing assistant: Ge...- 2.9k