-

How does Stable Diffusion work? Depth and normal constraints in the Stable Diffusion plugin ControlNet

In this section we dive into ControlNet's depth map and normal map constraints. You will learn the basic concepts, roles, processors, and applications of depth maps and normal maps. I. Depth map constraints: exploring the depth information of a 3D scene. 1. What is a depth map? A depth map is a two-dimensional image that encodes the depth information of a 3D scene: the value of each pixel represents the distance between the corresponding scene point and the camera. In Stable Diffusion, white represents near and black represents far. Second, the role of the depth map: the depth map is mainly used to reg...- 2.6k
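The white-is-near, black-is-far convention described above can be illustrated with a minimal sketch. It assumes a tiny scene given as a nested list of camera distances; the function name `distances_to_depth_map` and the linear scaling are illustrative assumptions, not the method used by any ControlNet processor.

```python
def distances_to_depth_map(distances):
    """Map per-pixel camera distances to 8-bit gray values.

    Follows the convention described in the text: the nearest point
    becomes white (255) and the farthest point becomes black (0).
    The linear scaling is an assumption made for illustration.
    """
    flat = [d for row in distances for d in row]
    nearest, farthest = min(flat), max(flat)
    span = farthest - nearest
    if span == 0:
        # A flat scene has no depth variation; treat everything as near.
        return [[255 for _ in row] for row in distances]
    return [
        [round(255 * (farthest - d) / span) for d in row]
        for row in distances
    ]


# Hypothetical 2x2 scene: distances from the camera in meters.
scene = [[1.0, 2.0], [3.0, 5.0]]
depth_map = distances_to_depth_map(scene)
print(depth_map)
```

The closest pixel maps to 255 and the farthest to 0, so brighter areas of the resulting image are closer to the camera.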

-

How does Stable Diffusion work? Scribble and straight-line constraints in the Stable Diffusion plugin ControlNet

In this section we continue to explore ControlNet's line constraint capabilities, including the Lineart, Scribble, and straight-line modes. These modes help us better understand and apply line constraints in image generation. I. Lineart mode: more natural edge extraction. Earlier, we discussed the limitations of the hard edge and soft edge modes: hard edge mode tends to produce doubled lines, while soft edge mode constrains the image only weakly. To address these issues, ControlNet version 1.1 introduces the Lineart mode, which enables more natural...- 3.1k

-

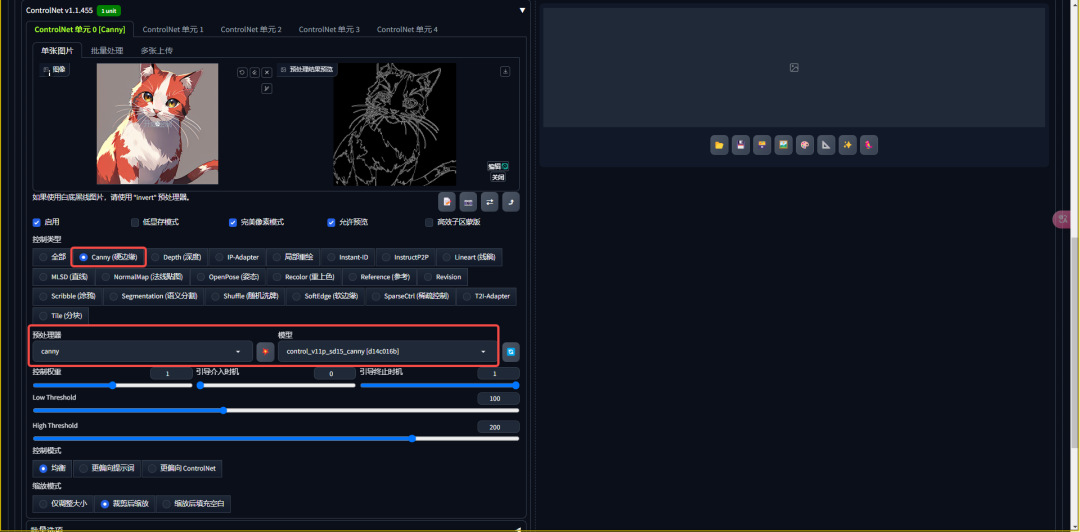

How to use Stable Diffusion: hard edge and soft edge line modes in the Stable Diffusion plugin ControlNet

In this section we take an in-depth look at ControlNet's hard edge and soft edge line modes. You will learn the basic principles of line constraints, how the hard edge and soft edge modes are applied, and why they matter in real projects. I. What are line constraints? Line constraints are a way of guiding Stable Diffusion's output in ControlNet: line features are extracted and used to shape the final image. Line constraints are very important in Stable Diffusion: they allow us to...- 1.6k

-

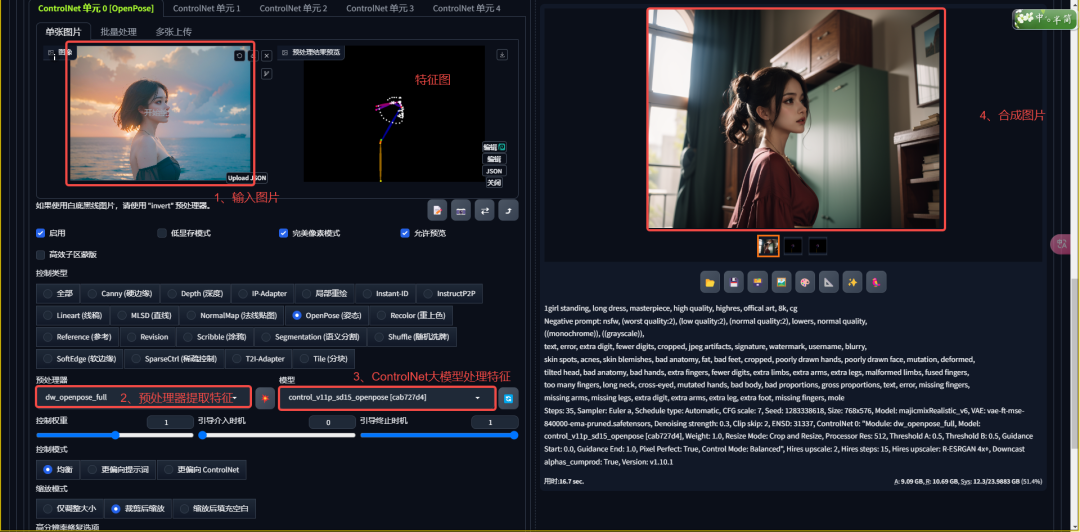

How does Stable Diffusion work? Stable Diffusion plugin ControlNet interface parameters in detail

In this section we take a closer look at the basic parameters of the ControlNet plugin, which is the core of our commercial applications and a cornerstone of working with Stable Diffusion. We will get to know ControlNet as a whole and explain its general parameters in detail. Why do we need ControlNet? Before learning about ControlNet, we need to understand what it solves for Stable Diffusion...- 1.2k

-

How to use Stable Diffusion: a quick tutorial on installing the ControlNet plugin

Installing the ControlNet plugin can be slightly more complex, so this section walks you through the installation to make sure you can follow the rest of the course smoothly. First, the importance of the ControlNet plugin: ControlNet is a key tool in Stable Diffusion that gives us finer control over the generated image. The following sections explore how to use this plugin, so it is critical to install it correctly. Second, the plugin installation steps: 1. Expand the tab page directly...- 2k

-

How to use Stable Diffusion: download, apply, and manage Stable Diffusion plugins

In this section we dive into the world of Stable Diffusion plugins and learn how to download, install, update, and uninstall them, and how to back up and restore plugin state. With this knowledge you will be able to add the features you want. First, the definition and importance of plugins: let's clarify what an extension plugin is. Extension plugins are programs written by third-party developers to extend Stable Diffusion with functions that the base installation cannot perform. This is made possible by the Sta...- 2.3k

-

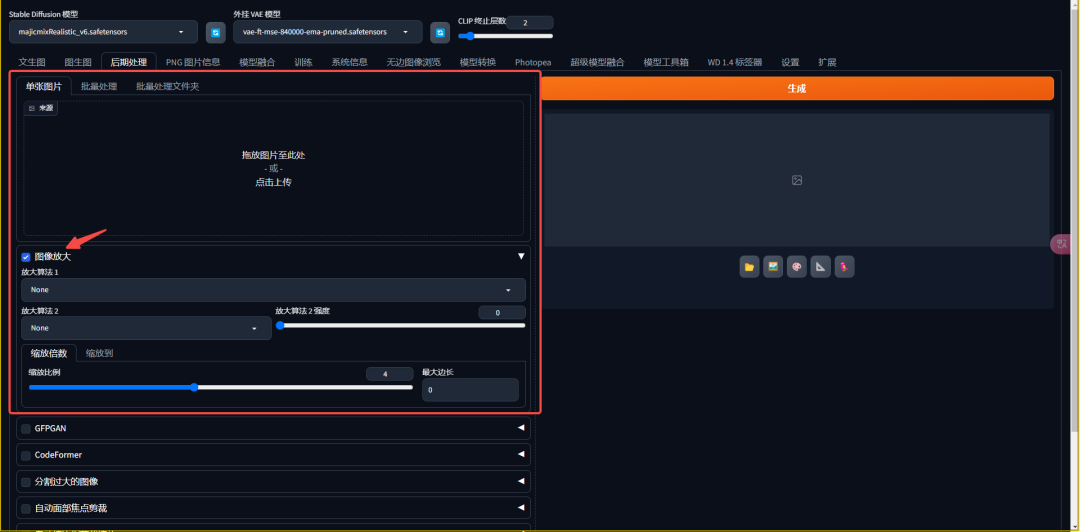

How to use Stable Diffusion: Stable Diffusion post-processing and PNG image information

In this section we take an in-depth look at image upscaling, face restoration, and batch generation in post-processing, as well as reading and erasing PNG image information. Through two hands-on cases, we will learn how to improve the clarity and visual quality of a product image and how to repair a blurred face photo. First, post-processing: image upscaling and repair. 1. Image upscaling: in the early days of Stable Diffusion, generated images were often low-resolution and could not meet commercial needs. The first function of post-processing, image upscaling, can help us enlarge the picture to 2K, 4K or even...- 2.2k

-

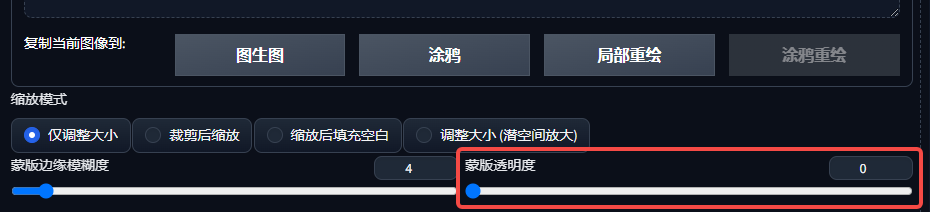

How to use Stable Diffusion: Stable Diffusion graffiti repainting and uploading repaint masks

In this section we cover graffiti repainting and uploading repaint masks, using AI model outfit changes as a case study to help you learn these points thoroughly. First, graffiti repainting: functions and reasons for its decline. Graffiti repainting has parameters similar to local repainting, plus one additional setting for mask transparency. However, this feature has largely fallen out of use in practice because it is inconvenient and its functions conflict. Graffiti repainting is intended to combine local repainting with color change, but this combination means the color must be modified whenever repainting is performed...- 2.1k

-

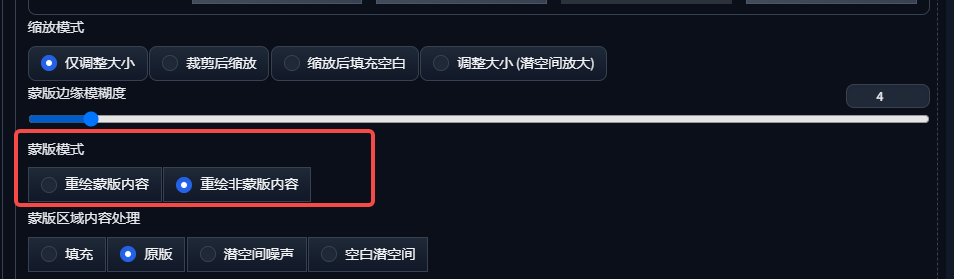

How to use Stable Diffusion: advanced tips for Stable Diffusion local repainting

Today we continue to explore advanced techniques for local repainting, including the mask mode, how masked area content is handled, the repainting area, and some notes on local repainting. We will use a face swap on an AI model as a real-world case to help you understand and master local repainting. I. Mask mode. Mask mode is frequently used in local repainting and offers two options, repainting the masked content and repainting the non-masked content, which suit different scenarios. 1. Repainting masked content: suitable when you need to change specific areas such as the model's background or clothes while keeping the other parts unchanged. 2...- 2.6k

-

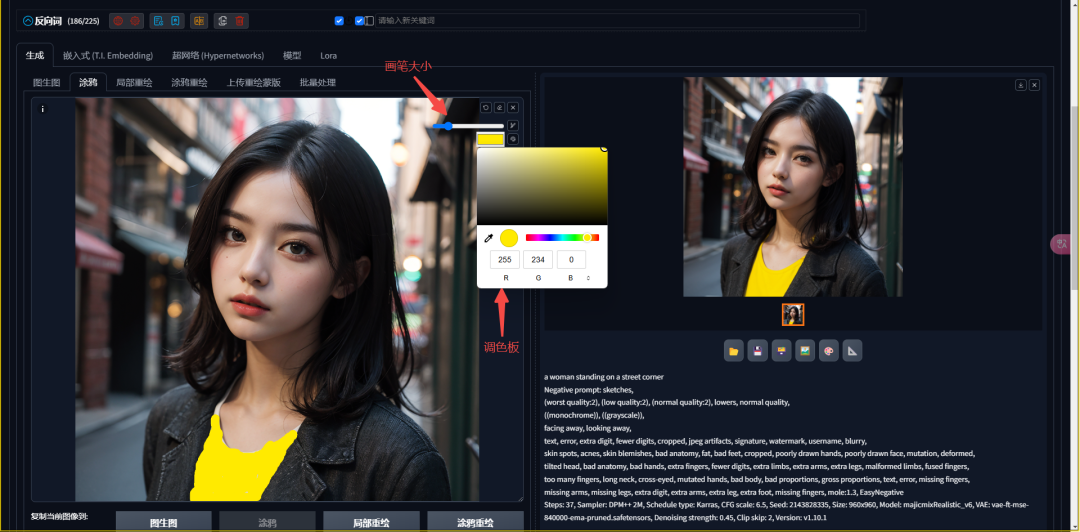

How to use Stable Diffusion: the Stable Diffusion graffiti feature and partial repainting

In this section we explore graffiti and partial repainting, and learn how to use these techniques to change colors in an image, add a logo, and even fix flaws in a picture. I. Graffiti: a new dimension of image editing. When working with image-to-image, we often need to change the color or details of one part of an image precisely. The graffiti function was created for this: it lets us modify the reference image directly and then use image-to-image to change the color of that region. 1. The role and parameters of graffiti: the graffiti interface is similar to image-to-image, but it directly modifies the pixels of the reference image by...- 2.9k

-

How to use Stable Diffusion? AI painting: Stable Diffusion image-to-image in detail

In this section we dive into Stable Diffusion's image-to-image technique and learn how to turn a real-life photo into a 2D anime-style avatar. This is not only a technical challenge but also a creative leap. If you have ever seen a 9.9-yuan avatar conversion listing on Taobao, Pinduoduo, or Xianyu (Idle Fish), today's content will help you understand the workflow behind it and produce a first avatar conversion yourself. First, the origin and principle of image-to-image: before the hands-on part, let's understand where image-to-image comes from and how it works. When we learned text-to-image earlier, we saw that the information text can express is limited...- 3.6k

-

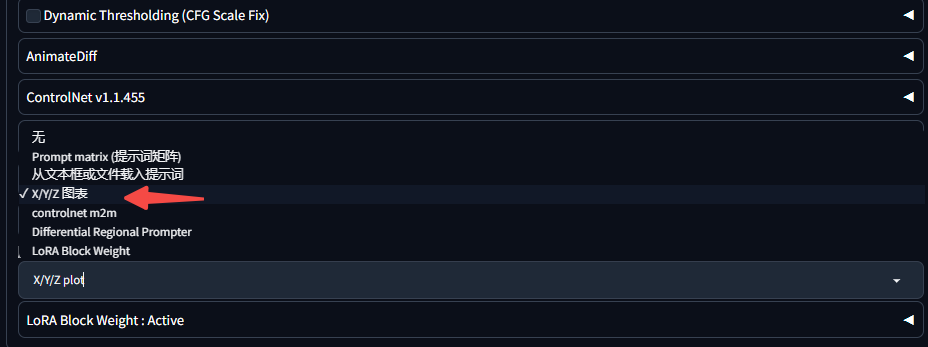

How does Stable Diffusion work, and what are Stable Diffusion's common scripting tools used for?

In this section we dive into two powerful scripting tools: the XYZ comparison tool and the prompt matrix. Through hands-on cases, we explore their uses, parameters, and practical applications to help you understand and apply them. First, the XYZ comparison tool. 1. Uses and parameters: the XYZ comparison tool is a practical script that helps us compare image generation results under different parameters, which is very useful for teaching and for learning what each parameter does. 2. Axis type and axis value: before using the XYZ plug-in, we need to understand its axis types (X-axis, Y-axis...- 1.4k
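The idea of pairing an axis type with a list of axis values can be sketched as a plain parameter grid. This is only an illustration of the comparison concept, not the script's actual implementation; the function name `build_comparison_grid` and the parameter names `steps` and `cfg_scale` are hypothetical choices.

```python
from itertools import product


def build_comparison_grid(x_axis, y_axis):
    """Return one settings dict per grid cell, row by row.

    Each axis is a (name, values) pair: the name plays the role of the
    axis type and the list plays the role of the axis values.
    """
    x_name, x_values = x_axis
    y_name, y_values = y_axis
    # Rows follow the Y axis and columns follow the X axis.
    return [{x_name: x, y_name: y} for y, x in product(y_values, x_values)]


# Hypothetical axes: three step counts compared against two guidance scales.
grid = build_comparison_grid(("steps", [10, 20, 30]), ("cfg_scale", [5, 7]))
for cell in grid:
    print(cell)
```

Every cell of the grid corresponds to one image generated with that combination, which is what makes the effect of each parameter easy to compare side by side.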

-

How does Stable Diffusion work? Tutorial on using Embedding and Hypernetworks

In this section we explore two important small models in Stable Diffusion: Embedding (Textual Inversion) and Hypernetworks. You will learn their definitions, how to download them, where to install them, how to use them, and when to use them. First, Embedding (Textual Inversion) in detail. 1. Definition and importance: Embedding, also known as Textual Inversion, is a way of adjusting a large model's input...- 1.6k

-

How to use Stable Diffusion? How to use Stable Diffusion LoRA models: a LoRA usage guide (part 2)

In the previous section we learned the basics of LoRA, including reading the author's notes and the comment section, understanding the model, opening the additional network interface, and filling in an appropriate weight range. Today we go deeper: how to use trigger words, how to generate with the author's reference parameters, and how to adjust based on the results. First, the detailed steps for using LoRA. Step 1: use the trigger word. The trigger word is key when calling a LoRA. Sometimes calling the LoRA alone does not produce the desired result, and you need to add the correct trigger word to the positive prompt. For example, in the generation of pictures ...- 5.1k

-

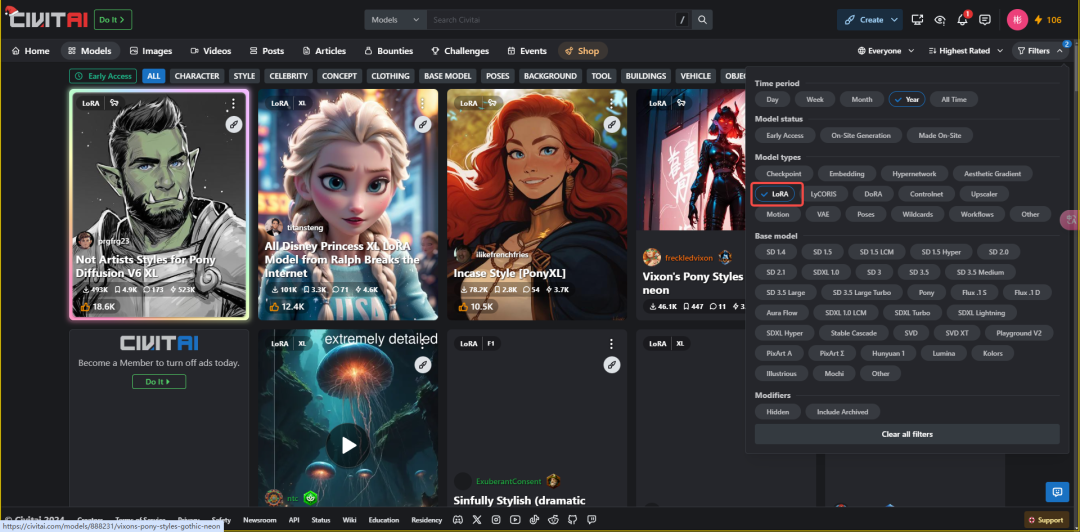

How to use Stable Diffusion? How to use Stable Diffusion LoRA models: a LoRA usage guide

Today we dive into one of the key concepts in AI painting: small models. In this section we learn what small models are, how to download them, where to install them, and how to use them, so that you can get a fuller grasp of working with them. I. The origin of small models. In the early days of AI painting, only large models existed, and their results were not ideal. To improve them, people tried adjusting the large models directly, but this was costly. The small model technique was developed in response, which allows us to make large models without retraining them...- 2.3k

-

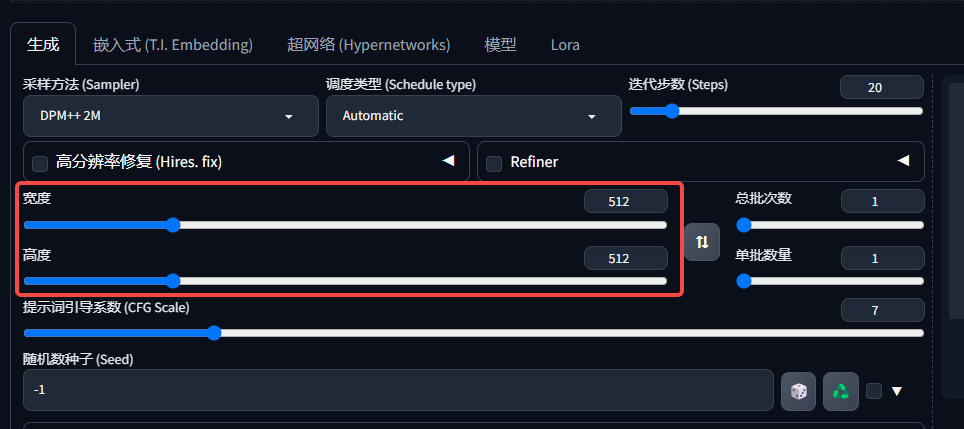

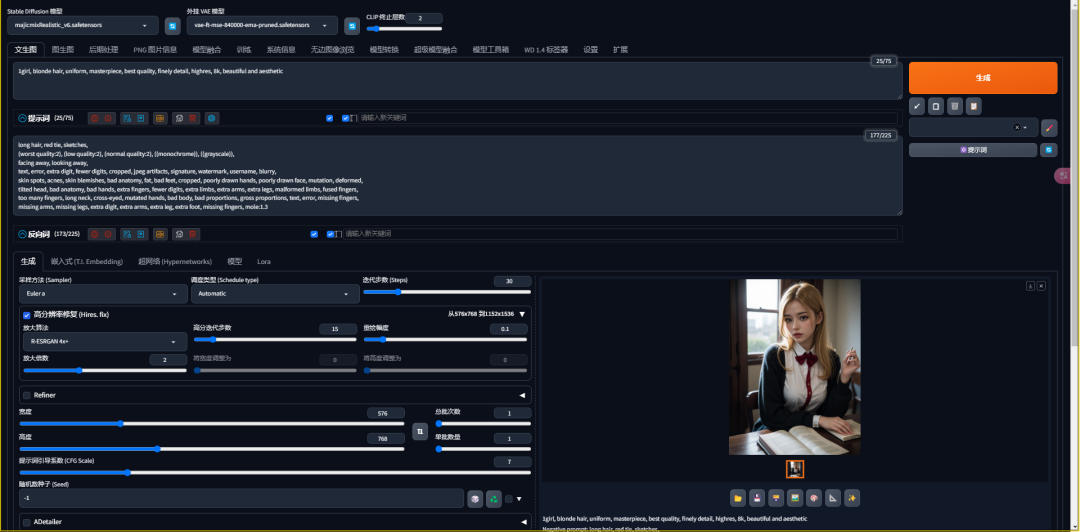

How to use Stable Diffusion: an introduction to Stable Diffusion's advanced text-to-image parameters (part 2)

In the previous section we explored the basic parameters of Stable Diffusion. Today we continue with more advanced parameters that give you finer control over the AI painting process and improve the results. This article explains image size settings, high-resolution fix, multi-image generation, the prompt guidance scale (CFG scale), random seeds, and other key points. First, image size settings: in AI painting, the image size is critical because it directly affects the composition and quality of the picture. Size limit: when Stable Diffusion generates pictures,...- 2.3k

-

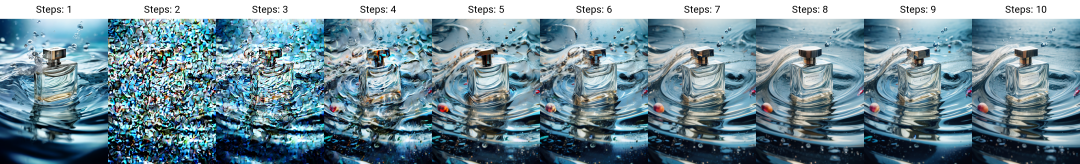

How does Stable Diffusion work? An introduction to Stable Diffusion's basic text-to-image parameters (part 1)

In this section we look at the Stable Diffusion parameters used in AI painting to help you better understand and apply this powerful tool. This article summarizes the core of SD in detail, including the basic principles of Stable Diffusion, iteration steps, sampling methods, face restoration, tiling, and other key points. First, how SD learns and paints. 1. How it learns: Stable Diffusion learns by repeatedly adding noise to images. This process can be seen as the AI gradually "remembering" the image features ...- 2.3k
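The noise-adding process mentioned above can be sketched with a simplified forward diffusion step on a handful of pixel values. This is a toy illustration under stated assumptions: a fixed noise rate `beta` at every step and a flat list of floats standing in for an image. The names `add_noise_step` and `noising_trajectory` are hypothetical, and real training uses a noise schedule and a neural network that learns to reverse these steps.

```python
import math
import random


def add_noise_step(pixels, beta, rng):
    """Apply one forward noising step: shrink the signal and add Gaussian noise."""
    keep = math.sqrt(1.0 - beta)   # how much of the previous image survives
    spread = math.sqrt(beta)       # how much fresh noise is mixed in
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


def noising_trajectory(pixels, steps, beta=0.1, seed=0):
    """Return the image after 0, 1, ..., `steps` noising steps."""
    rng = random.Random(seed)      # seeded so the run is reproducible
    history = [list(pixels)]
    for _ in range(steps):
        history.append(add_noise_step(history[-1], beta, rng))
    return history


# Hypothetical 4-pixel "image" with values in [0, 1].
for step, image in enumerate(noising_trajectory([0.2, 0.4, 0.6, 0.8], steps=3)):
    print(step, [round(v, 3) for v in image])
```

With enough steps the original values are drowned out by noise, which is why generation can later start from pure noise and work backwards.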

-

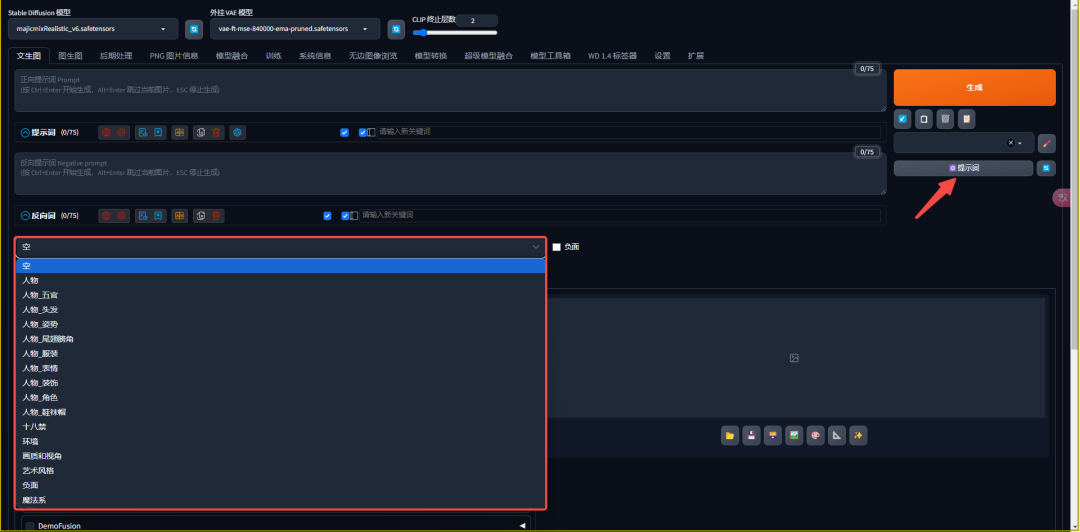

How does Stable Diffusion work? Stable Diffusion WebUI page introduction: the convenience feature bar

In this section we explore the convenience feature bar of the Stable Diffusion WebUI and learn how to use its features to make AI painting more efficient and effective. We cover unlimited generation, automatic reading of image parameters, automatic configuration of parameters, clearing the prompt, preset styles, and the additional network function for small models, and we demonstrate through hands-on cases how to configure parameters automatically and generate images with one click. I. Unlimited generation. In the Stable Diffusion WebUI, we can right-click the Generate button and select the "Unlimited Generation" option, ...- 2.8k

-

How does Stable Diffusion work? Basic Grammar of Prompt Words and AI-Assisted Creation Tips

In this section we dive into the basic syntax of prompts and AI-assisted writing techniques. You will master the six basic syntaxes of Stable Diffusion and learn how to have a large language model (LLM) help write prompts. I. The six basic syntaxes of Stable Diffusion. Earlier, we learned how to download models from Station C and try them out. But sometimes we see prompts that contain many symbols such as parentheses and colons. What exactly are these, and how are they used? Today, we ...- 2k

-

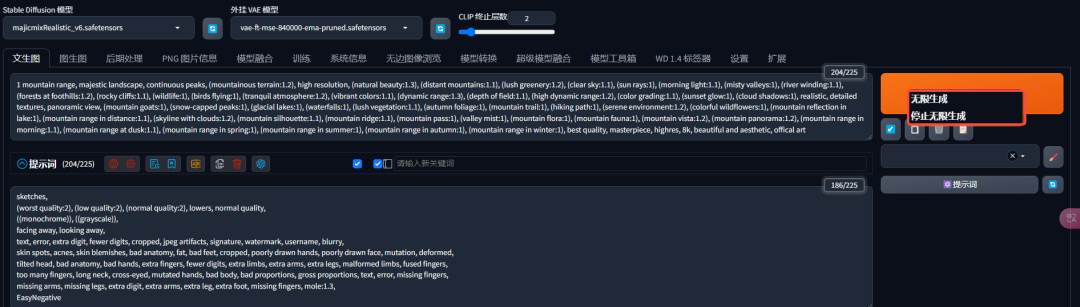

How does Stable Diffusion work? Prompt writing ideas and categories, using prompts to create a girl wallpaper

In this section we look at how to approach writing and categorizing prompts, and how to use the prompt categorization plugin to create a large-scene girl wallpaper. You will combine and apply what you have learned to improve the quality and logic of your work. First, the idea behind prompt categorization: every Stable Diffusion newcomer wants to reproduce the beautiful picture in their mind but often does not know how to describe it with prompts. Writing prompts by category solves this problem. Just as we learned to describe things by category in elementary school essays, we can classify prompts into four main categories: quality words, subject and subject...- 1k

-

How does Stable Diffusion work? Basics of Prompts

In this section we explore the basics of prompts in depth. We learn the basic concepts and functions of prompts and how to write positive and negative prompts, then generate a basic portrait of a girl through hands-on examples to consolidate these skills. I. The importance of prompts. Earlier, we compared Stable Diffusion to an AI painter. If you want this AI painter to create a work for you, you need to give it instructions in language, that is, through prompts. Prompts are the bridge between us and the AI, telling the A...- 7.5k

-

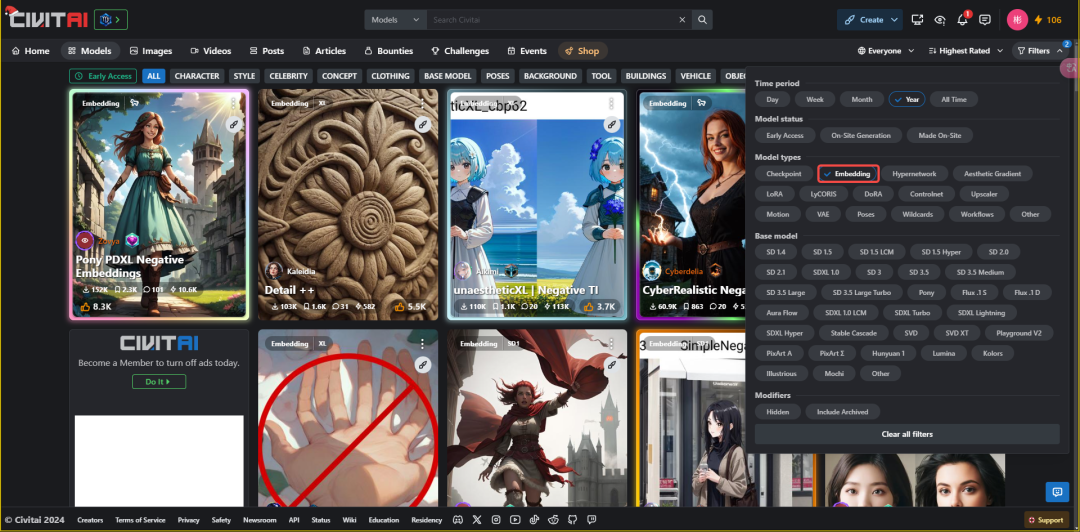

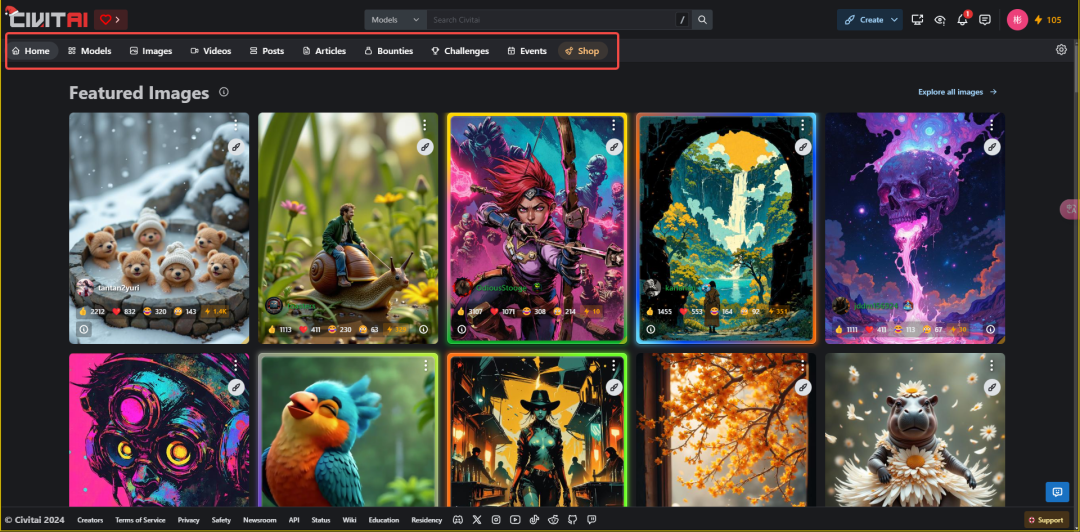

How to use Stable Diffusion: essentials of using Station C, and an analysis of the VAE and CLIP termination layer parameters

In this section we take an in-depth look at using Station C and at the VAE (Variational Autoencoder) and CLIP termination layer parameters. You will get a systematic view of Station C and learn practical skills for the VAE model and the CLIP parameter. I. Station C usage essentials. Station C, i.e. civitai https://www.1ai.net/2868.html, is a comprehensive model website that provides abundant model resources. When using Station C, pay attention to the following points: 1. Content categories and switching: Station C contains models, pictures, portfolios, articles, etc. ...- 3.3k

-

How does Stable Diffusion work? Stable Diffusion large model basics

In this section we dive into the application scenarios of Stable Diffusion large models. You will learn the basics of large models, where to download them, how to install them, and how to use them, and we will recommend a few high-quality large models for beginners. First, the basics of large models: let's clarify what a large model is. A large model is also known as a checkpoint file, abbreviated ckpt. 1. The relationship between the large model and Stable Diffusion: If the Stable ...- 3.3k

-

How does Stable Diffusion work? Stable Diffusion local deployment and configuration

Today we explore how to install Stable Diffusion on a local computer and generate our first image. If you can't wait to try this AI painting tool, follow along! I. Stable Diffusion computer configuration requirements. Before starting the installation, we need to understand Stable Diffusion's hardware requirements. Here is an overview: Memory: at least 8GB required, 16GB or higher recommended. GPU: Must be NVIDIA...- 5.6k