
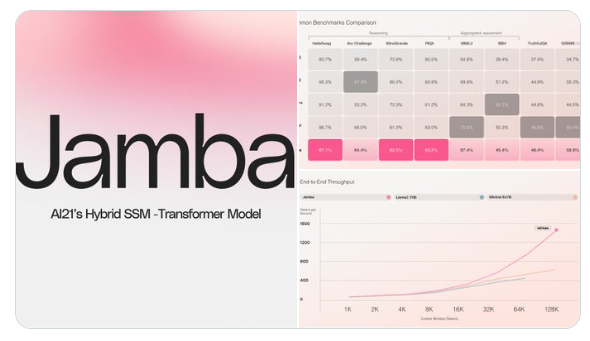

AI21 releases Jamba, the world's first production-grade Mamba-based model, supporting a 256K context length

AI21 has released Jamba, which it describes as the world's first production-grade model based on Mamba. The model uses a hybrid SSM-Transformer architecture with 52B parameters, of which 12B are active at generation time. Jamba (short for Joint Attention and Mamba) combines attention layers with Mamba layers to support a 256K context length, and up to 140K tokens of context fit on a single A100 80GB GPU. Compared with Mixtral 8x7B, Jamba delivers 3x the throughput on long contexts. Model address: https://huggingf…
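To put the quoted figures in proportion, here is a minimal arithmetic sketch. It uses only the numbers stated in the announcement; the variable names and the `percent` helper are illustrative and not part of any AI21 tooling.

```python
# Figures quoted in the announcement (billions of parameters, thousands of tokens).
TOTAL_PARAMS_B = 52
ACTIVE_PARAMS_B = 12
MAX_CONTEXT_K = 256
SINGLE_GPU_CONTEXT_K = 140


def percent(part: float, whole: float) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Share of parameters active at generation time.
active_share = percent(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
# Share of the maximum context that fits on a single A100 80GB.
single_gpu_share = percent(SINGLE_GPU_CONTEXT_K, MAX_CONTEXT_K)

print(f"Active at generation time: {active_share}% of all parameters")      # 23.1%
print(f"Context on one A100 80GB: {single_gpu_share}% of the 256K maximum")  # 54.7%
```

In other words, under a quarter of the parameters are active at generation time, and a single A100 80GB holds a little over half of the maximum context.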
