-

Details of Musk's AI supercomputer revealed: about $400 million invested, but the million-GPU plan faces a big power gap

April 2, 2025 - Elon Musk has said that his artificial intelligence startup xAI will build the world's largest supercomputer in Memphis, Tennessee. Documents seen by Business Insider show that the company is investing hundreds of millions of dollars in the project but faces a large power deficit. Since the project was first announced in June 2024, xAI has submitted 14 building permit applications to the Memphis Planning and Development Agency, with a total estimated cost of $405.9 million ($2.9...)

-

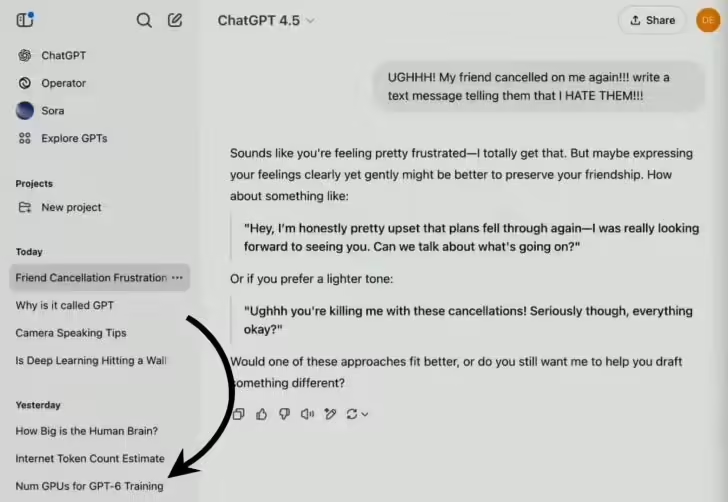

OpenAI GPT-6 Training to Hit Record Scale: 100,000 H100 GPUs Estimated, AI Training Costs Astronomical

March 1, 2025 - Tech media outlet Smartprix published a blog post yesterday (February 28) reporting that OpenAI accidentally leaked the number of GPUs that may be needed to train GPT-6 in a video introducing the GPT-4.5 model, suggesting that the training run will be far larger than any before. Note: At the 2:26 mark of the GPT-4.5 introduction video, the chat transcript from OpenAI's demonstration of GPT-4.5's capabilities includes the phrase "Num GPUs for GPT 6 Training"...

-

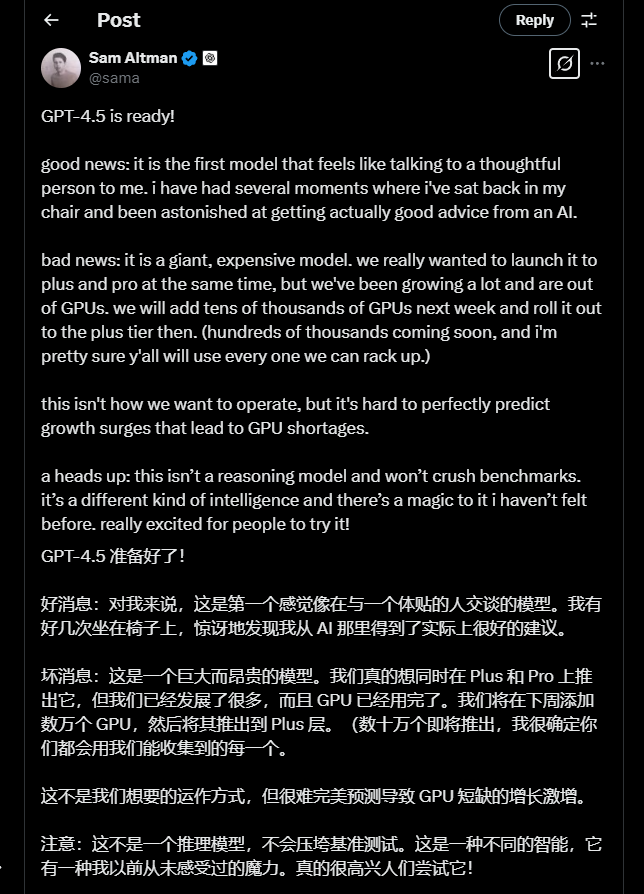

Altman admits OpenAI is short of GPUs, so GPT-4.5 can only be rolled out in phases

Feb. 28, 2025 - OpenAI CEO Sam Altman said today that the company had to roll out its latest GPT-4.5 model in stages due to a "shortage of GPU resources." In a post on X, Altman noted that GPT-4.5 is a "huge" and "expensive" model that requires tens of thousands of additional GPUs before it can be made available to more ChatGPT users. GPT-4.5 will be available to ChatGPT Pro subscribers first, followed by ChatGPT Plus subscribers next week...

-

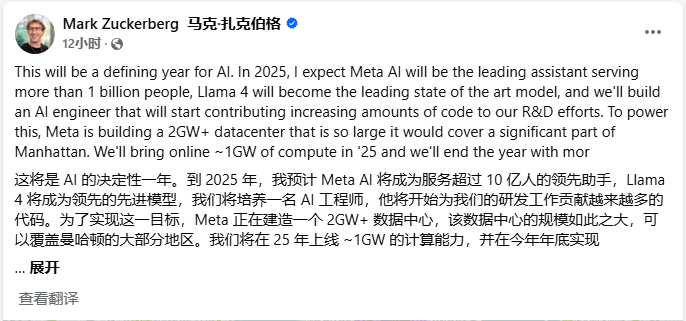

Meta CEO Zuckerberg: AI team to expand dramatically this year, over 1.3 million GPUs by the end of the year

January 24 - Meta CEO Mark Zuckerberg said in a Facebook post on January 24 local time that Meta will bring about 1GW of computing capacity online in 2025 and will have more than 1.3 million GPUs by the end of the year. Zuckerberg said Meta plans to invest between $60 billion and $65 billion in capital expenditures this year (note: currently about RMB 437.178 billion to RMB 473.609 billion), as well as significantly grow its artificial intelligence team. With Meta going ...
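
The RMB figures in the note above imply a single USD/RMB exchange rate. A minimal Python sketch, assuming both ends of the range were converted at one fixed rate (the source gives only the converted figures, not the rate), recovers that rate and checks the conversion:

```python
# Ranges quoted in the Meta item. The single fixed exchange rate is an
# assumption; the source only gives the converted RMB figures.
USD_BILLIONS = (60.0, 65.0)
RMB_BILLIONS = (437.178, 473.609)


def implied_rates(usd, rmb):
    """Return the RMB-per-USD rate implied by each end of the range."""
    return tuple(r / u for u, r in zip(usd, rmb))


def to_rmb(usd_billions, rate):
    """Convert a USD amount in billions to RMB billions at a fixed rate."""
    return usd_billions * rate


if __name__ == "__main__":
    low_end, high_end = implied_rates(USD_BILLIONS, RMB_BILLIONS)
    # Both ends come out at roughly 7.2863 RMB per USD.
    print(f"implied rate at $60B: {low_end:.4f}")
    print(f"implied rate at $65B: {high_end:.4f}")
    print(f"$65B at the $60B-end rate: {to_rmb(65.0, low_end):.2f}B RMB")
```

Both ends agree to within 0.0001, which suggests the note used a rate of about 7.2863 RMB per USD.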

-

UK Government Plans to Procure 100,000 GPUs to Boost Public Sector AI Compute by 20x

January 13, 2025 - British Prime Minister Keir Starmer has pledged that the UK government will purchase up to 100,000 GPUs by 2030, a 20-fold increase in the UK's sovereign AI compute, mainly for AI applications in academia and public services. According to 1AI, the UK already has two advanced supercomputers, Isambard-AI at the University of Bristol and Dawn at the University of Cambridge. Isambard-AI is equipped with around 5,000 GPUs, specialized chips that are at the core of the AI software built...

-

IBM's New Optical Technology Reduces GPU Idle Time, Dramatically Speeds AI Model Training

Dec. 11 (Bloomberg) -- IBM has announced a new optical technology that it says can train AI models at the speed of light while saving significant energy. Applied to data centers, the company says, the breakthrough could save as much energy per AI model trained as 5,000 U.S. homes consume in a year. The company explained that although data centers connect to the outside world through fiber-optic cables, they still use copper wires internally. These copper wires connect GPU accelerators, which spend much of their time idle while waiting for data from other devices...

-

Denmark's First AI Supercomputer, Gefion, Launched, Powered by 1528 NVIDIA H100 GPUs

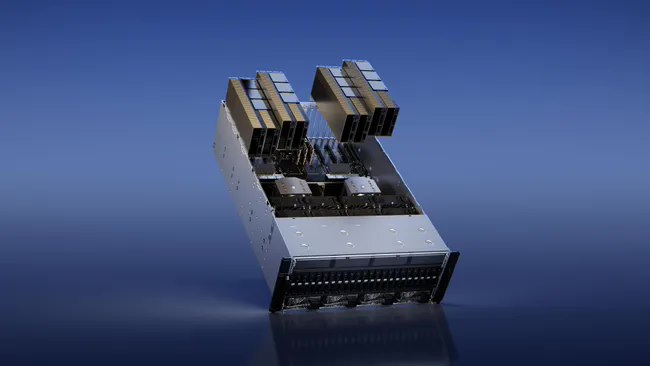

October 27 - Denmark launched its first AI supercomputer, Gefion, named after the goddess of Danish mythology, to drive breakthroughs in quantum computing, clean energy, biotechnology and other fields. NVIDIA CEO Jen-Hsun Huang and the King of Denmark attended the unveiling ceremony. Gefion is an NVIDIA DGX SuperPOD supercomputer powered by 1,528 NVIDIA H100 Tensor Core GPUs using NVIDIA's Quantum-2 InfiniBand net...

-

Larry Ellison and Elon Musk "beg" Nvidia's Jen-Hsun Huang for more GPUs at dinner

At a meeting with analysts last week, billionaire Oracle co-founder and CTO Larry Ellison told the audience that he and Elon Musk, the world's richest man, took NVIDIA CEO Jensen Huang to dinner at Nobu in Palo Alto and "begged" Huang for more GPUs. "I would describe the dinner as Oracle, me and Elon, begging Jensen for GPUs," Ellison recalled. "Please take our money. Please take our money. By the way, I got dinner. No, no, no, take more. We need you to take more...

-

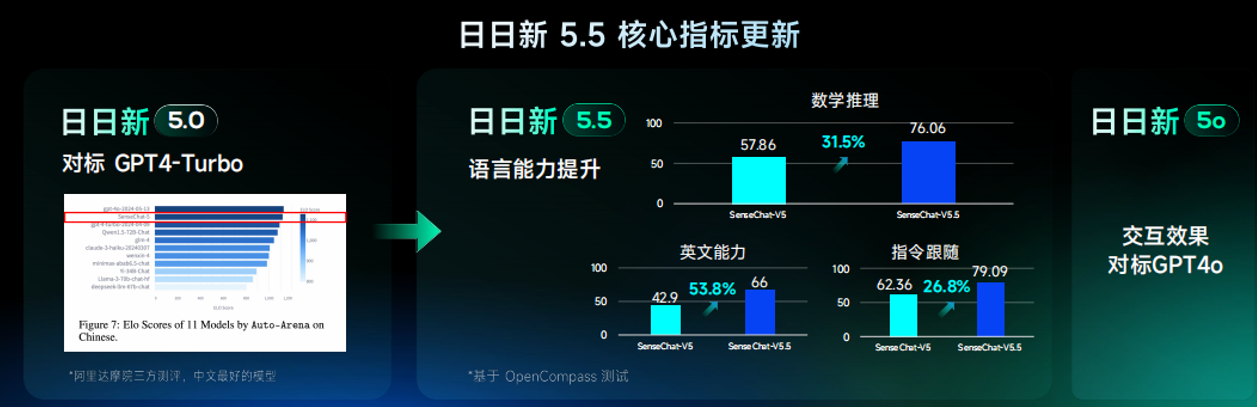

SenseTime: Its AI computing cluster in China currently has 54,000 GPUs, with peak computing power of 20,000 PFLOPS

According to Jiemian News, at the 2024 REAL Technology Conference held today, Luan Qing, general manager of SenseTime's Digital Entertainment Division, said that the AI computing cluster SenseTime has invested in and built in China currently has 54,000 GPUs, with peak computing power of 20,000 PFLOPS. Luan said that SenseTime is building the country's largest AI data center in Lingang, Shanghai, and that its computing nodes are spread across Shanghai, Guangzhou, Chongqing, Shenzhen, Fuzhou and other cities. According to previous reports by IT Home, SenseTime's interim report for the period ended June 30, 2024 showed that in the first half of 2024,…

-

Meta's Llama 3 training hit frequent failures: its 16,384-GPU H100 training cluster "went on strike" every 3 hours

A research report released by Meta shows that the cluster of 16,384 NVIDIA H100 GPUs used to train the 405-billion-parameter Llama 3 model experienced 419 unexpected failures over 54 days, an average of one every three hours. More than half of the failures were caused by the GPUs or their high-bandwidth memory (HBM3). Because of the system's huge scale and the tight synchronization of its tasks, a single GPU failure can interrupt the entire training job and force a restart. Despite this, the Meta team maintained more than 90% of effective...
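
The "one every three hours" figure follows directly from the numbers above, and the same arithmetic shows why a tightly synchronized job suffers: any single failure stops all 16,384 GPUs. A minimal Python sketch, assuming failures arrive at a constant average rate (a Poisson model the report summary does not claim); the helper names are illustrative:

```python
import math

# Figures from the Meta report as summarized above.
GPUS = 16_384
DAYS = 54
FAILURES = 419


def hours_between_failures(days, failures):
    """Average wall-clock hours between interruptions for the whole cluster."""
    return days * 24 / failures


def gpu_hours_per_failure(gpus, days, failures):
    """Total GPU-hours accumulated per interruption across the cluster."""
    return gpus * days * 24 / failures


def p_no_interruption(run_hours, mean_interval_hours):
    """Chance a synchronous job runs run_hours uninterrupted, assuming
    failures arrive as a Poisson process (a simplifying assumption)."""
    return math.exp(-run_hours / mean_interval_hours)


if __name__ == "__main__":
    interval = hours_between_failures(DAYS, FAILURES)
    print(f"mean interval: {interval:.2f} h")  # about 3.09 h
    print(f"GPU-hours per failure: {gpu_hours_per_failure(GPUS, DAYS, FAILURES):,.0f}")
    print(f"P(24 h without interruption): {p_no_interruption(24, interval):.4f}")
```

Under that assumption, a full 24 hours without a single interruption would be very unlikely (well under 1%), even though each individual GPU fails only rarely.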

-

Cloud computing company Lambda launches new cluster service to get Nvidia H100 GPUs on demand

Recently, GPU cloud computing company Lambda launched its new 1-Click Clusters service, through which customers can get Nvidia H100 GPU and Quantum-2 InfiniBand clusters on demand. The service lets enterprises obtain computing power only when they need it, which particularly suits companies that do not need GPUs around the clock. Robert, co-founder and vice president of Lambda, said: ...
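
Why on-demand access suits teams that do not run GPUs around the clock comes down to a break-even calculation. A minimal Python sketch with hypothetical prices; the rates, the `Pricing` class, and the helper names are illustrative assumptions, not Lambda's pricing or API:

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Hypothetical per-GPU-hour rates; not Lambda's actual prices."""
    on_demand: float  # billed only for hours actually used
    reserved: float   # billed for all 24 hours of every day


def daily_cost(p: Pricing, gpus: int, hours_used: float) -> tuple[float, float]:
    """Return (on-demand cost, reserved cost) for one day."""
    on_demand = p.on_demand * gpus * hours_used
    reserved = p.reserved * gpus * 24
    return on_demand, reserved


def break_even_hours(p: Pricing) -> float:
    """Daily usage above which paying for round-the-clock capacity is cheaper."""
    return 24 * p.reserved / p.on_demand


if __name__ == "__main__":
    prices = Pricing(on_demand=4.0, reserved=2.5)  # illustrative numbers only
    print(break_even_hours(prices))  # 15.0
    print(daily_cost(prices, gpus=16, hours_used=8))  # (512.0, 960.0)
```

With these made-up rates, on-demand access is cheaper for any team using the cluster fewer than 15 hours a day; the break-even point shifts with the ratio of the two prices.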

-

With Grok 2 about to launch, xAI accelerates the AI race: 100,000-GPU supercomputer to be delivered by the end of this month

Musk announced on July 9 that his artificial intelligence company xAI is building a supercomputer with 100,000 Nvidia H100 GPUs, which is expected to be delivered and begin training at the end of this month. The move marks the end of xAI's negotiations with Oracle to expand their existing agreement and lease more Nvidia chips. Musk stressed that this will be "the most powerful training cluster in the world, by a large margin." He said that xAI's core competitive advantage is speed, "the only way to close the gap." Prior to this, xA…

-

Hugging Face, the world's largest open source AI community, will provide $10 million in shared GPUs for free to help small businesses compete with large companies

Hugging Face, the world's largest open source AI community, recently announced that it will provide $10 million in free shared GPUs to help developers create new AI technologies. The goal is to help small developers, researchers, and startups compete with large AI companies and to keep AI progress from becoming "centralized." Hugging Face CEO Clem Delangue told The Verge...

-

Intel's Falcon Shores GPU is coming late next year, redesigned for AI workloads

On its first-quarter earnings call at the end of last month, Intel made clear that the Falcon Shores GPU will launch in late 2025. According to HPCwire, the processor is being redesigned to meet the needs of the AI industry. Intel CEO Pat Gelsinger said that Falcon Shores will combine a fully programmable architecture with the strong system performance of the Gaudi 3 accelerator, giving users a smooth, seamless upgrade path between the two hardware generations. Intel said that the AI industry is turning to Python…

-

Beijing: Enterprises that purchase independently controllable GPU chips to provide intelligent computing services will receive support in proportion to their investment

On the 24th, the Beijing Municipal Bureau of Economy and Information Technology and the Beijing Municipal Communications Administration issued the "Beijing Computing Infrastructure Construction Implementation Plan (2024-2027)". The plan proposes that by 2027, the quality and scale of computing power supply across Beijing, Tianjin, Hebei and Inner Mongolia will be optimized, that independently controllable computing power will meet the needs of large model training, and that energy consumption standards for computing power will reach a leading domestic level. Key tasks include promoting independent innovation in the computing power industry, building an efficient computing power supply system, advancing the integrated construction of computing power across Beijing, Tianjin, Hebei and Inner Mongolia, making intelligent computing centers greener and lower-carbon, deepening industry applications enabled by computing power, and ensuring computing power foundations...

-

Nvidia H100 AI GPU shortage eases, delivery time drops from 3-4 months to 2-3 months

Not long ago, Nvidia's H100 GPU for AI computing was in short supply. However, according to DigiTimes, Terence Liao, general manager of Dell Taiwan, said that the delivery lead time for the Nvidia H100 has shortened considerably over the past few months, from an initial 3-4 months to the current 2-3 months (8-12 weeks). Server ODMs also said that, compared with 2023, when the Nvidia H100 was almost impossible to buy, the supply bottleneck is gradually easing. Although the delivery waiting time has…

-

Stability AI reportedly ran out of money and couldn’t pay its rented cloud GPU bills

The massive GPU clusters required by Stable Diffusion, the popular text-to-image model from generative AI star Stability AI, also appear to have been partly responsible for former CEO Emad Mostaque's downfall, because he couldn't find a way to pay for them. The UK model-building firm's sky-high infrastructure costs allegedly depleted its cash reserves, leaving it with just $4 million as of last October, according to an exhaustive report citing company documents and dozens of people familiar with the matter. Stab…

-

AI star startup's valuation doubles in a few weeks, but the industry spends 17 times its revenue on Nvidia GPUs

In the AI industry, and especially in generative AI, rapid technological progress and broad application prospects have attracted heavy investment and attention. However, the field's high costs have also sparked widespread discussion. A recent Wall Street Journal report pointed out that AI companies spend 17 times their revenue on Nvidia GPUs, a striking figure that has prompted deeper reflection on the industry's future. AI startup Cognition Labs, backed by well-known investor Peter Thiel, is seeking a valuation of $2 billion, and its valuation…

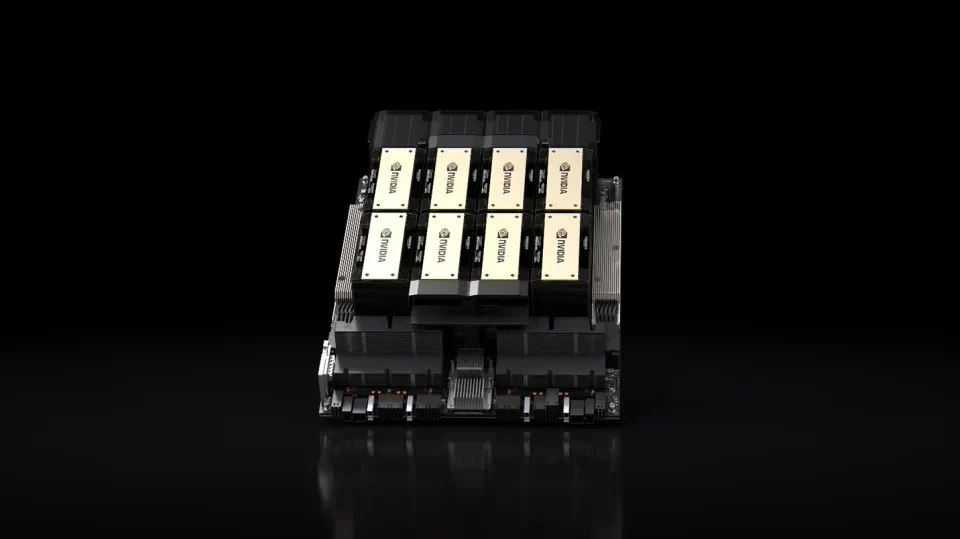

NVIDIA AI chip H200 starts shipping, performance improved by 60%-90% compared to H100

On March 28, according to a Nikkei report today, Nvidia's cutting-edge graphics processing unit (GPU), the H200, is now available. The H200 is a semiconductor for the AI field whose performance exceeds that of the current flagship H100. According to performance results released by Nvidia, taking Meta's large language model Llama 2 as an example, the H200 generates answers up to 45% faster than the H100. Market research firm Omdia once said that in 2022…

-

NVIDIA releases AI Enterprise 5.0 to help enterprises develop generative AI

NVIDIA has officially released AI Enterprise 5.0, a product designed to help enterprises accelerate generative artificial intelligence (AI) development. AI Enterprise 5.0 includes NVIDIA microservices and downloadable software containers for deploying generative AI applications and accelerated computing. Notably, the product has already been adopted by well-known customers such as Uber. As developers turn to microservices as an effective way to build modern enterprise applications, NVIDIA AI Enterprise 5.0 provides a wide range of...

-

Nvidia in talks to acquire Israeli artificial intelligence company Run:ai

According to people familiar with the matter, Nvidia is in advanced negotiations to acquire Run:ai, an Israeli AI infrastructure orchestration and management platform. The deal is expected to be worth hundreds of millions of dollars and could reach as much as $1 billion. Negotiations are still ongoing, and specific terms have not been disclosed. Run:ai is an AI optimization and orchestration platform built specifically for GPUs. Run:ai provides a range of tools and features, including CLI and GUI, workspaces, open source frameworks, metrics, resource management...

-

CPU, GPU, NPU, which one is the protagonist of "AI PC"?

As we all know, the "AI PC" is one of the hottest topics in the consumer electronics industry. Some consumers who know little about the technical details but are interested in the concept believe that an "AI PC" can help them handle unfamiliar tasks more intelligently or lighten their daily workload. But users like us, who have high expectations for the "AI PC" and are relatively familiar with the technology, often feel that AI PCs already exist, so why are they only being promoted now? How long has the AI PC actually been around?…

-

Meta builds two new data center clusters containing more than 49,000 NVIDIA H100 GPUs, dedicated to training Llama 3

Meta announced two new data center clusters in an official press release on the 12th, as the company hopes to stand out in AI development with Nvidia's GPUs. The sole purpose of these two data centers is reportedly AI research and the development of large language models for consumer applications (IT Home note: including voice or image recognition). Each cluster contains 24,576 Nvidia H100 AI GPUs, which will be used to train Meta's own large language model, Llama 3. The two newly built data centers…

-

Meta invests in AI to drive video recommendations, integrating Feed and Reels

Facebook parent company Meta is investing heavily in artificial intelligence as part of its "Technology Roadmap to 2026" to develop a new AI recommendation model for all of its video products, including the TikTok-like short-video service Reels and traditional longer videos in the Facebook Feed. Meta executive Tom Alison revealed that the company currently uses a separate recommendation model for each product, such as Reels, Groups and the main Feed, but will develop a unified...