-

DeepSeek Prompts: Master These 10 High-Quality DeepSeek Prompts and Become an AI Pro in No Time

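After I last shared some DeepSeek prompts, some readers said they would like the prompts to be more detailed, so that they are more direct to use and give better results. So this time I have adjusted how the prompts are written, making each one more specific and more actionable. Today I am sharing 10 more DeepSeek prompts that I personally consider very high quality. I tested and refined all of them repeatedly in my daily work, and they can be copied and pasted as-is. I strongly recommend bookmarking them; I believe they will be helpful to you. As before, I stick to pragmatism, and the prompts I share…- 704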

-

How to remove the AI flavor from AI writing? DeepSeek Prompts for Removing the AI Flavor and Polishing Your Articles

I. "De-AI flavor" prompts (make the text read as if a human wrote it, and say goodbye to the "robotic accent") 1. "Please rewrite the passage in more natural, colloquial language, avoiding overly formal or stiff vocabulary and sentence structure." Example: "Please rewrite this text in more natural, colloquial language, avoiding overly formal or rigid vocabulary and syntax. Original: 'Artificial intelligence technology will play a crucial role in the future development of society, and its far-reaching impact cannot be ignored.' " 2. "Please rewrite the passage to make it more human, adding some personal thoughts and emotions...- 2.3k

-

DeepSeek official: "R2 will be released on March 17" is fake news

March 11: According to Blue Whale News, in response to the rumor that DeepSeek will release its next-generation R2 model on March 17, DeepSeek's official enterprise consulting account responded in a user group: "Rumor debunked: the R2 release is fake news." As of 1AI's publication, DeepSeek has yet to officially announce the specific date and technical details of R2. Reuters previously reported, citing three people familiar with the matter, that DeepSeek is accelerating the rollout of its R2 artificial intelligence model, which the company initially planned to launch in May, but...- 939

-

DeepSeek-R2 AI model to be released on March 17, sources say

On March 11, media outlet Wisdom Finance cited "sources" as saying that DeepSeek's next-generation AI model, DeepSeek-R2, will be released on March 17th. According to the report, DeepSeek-R2 achieves breakthroughs in a number of key areas, including better programming capabilities, multilingual reasoning, and higher accuracy at a lower cost. Corresponding sources believe that these features, if realized, could give it a significant advantage in the global AI race. As of 1AI's press release, DeepSeek officials have not yet positively...- 233

-

Tutorial: Use DeepSeek to make viral ancient-poetry videos, an AI project for gaining followers and monetizing quickly

Short videos for children's education are really hot! One AI ancient-poetry enlightenment video account is doing particularly well: using DeepSeek to make its videos, it has gained 225,000 followers in only two months. It has posted 47 videos in total, and its top video has 176,000 likes on Douyin alone, not counting data from other platforms! Parents now love to show their children this kind of traditional cultural content, and making it with AI saves time and gives good results. This account is growing like a rocket, and anyone who wants to work in children's education can follow along as I break down this approach! ▌Step 1: Use DeepSeek to do the selection...- 1.6k

-

Dong Mingzhu: Gree will build a vertical large model for smart homes based on DeepSeek and others

March 10: According to Securities Daily, Gree's Dong Mingzhu revealed yesterday evening: "Gree Electric has successfully and deeply integrated its own multi-model framework with the full-power version of the DeepSeek R1 large model." "For example, the results of this cooperation are already reflected in Gree Electric's voice-enabled air conditioning products, achieving a leap from traditional voice assistants to true artificial intelligence. With DeepSeek R1's deep learning capabilities, Gree air conditioners are capable of efficient reasoning and decision-making, and connect seamlessly with smart devices throughout the house." As for Gree Electric's next moves in the field of AI... -

How to make an AI digital human? A DeepSeek + Cicada Mirror tutorial for quickly creating a virtual digital human

AI digital humans are gradually entering our lives and are widely used in film and television production, virtual live broadcasts, intelligent customer service, and many other fields. So, how can we efficiently create an AI digital human? Today's article explains in detail how to use DS, a powerful digital technology platform, together with Cicada Mirror, an advanced digital creation tool, to quickly and easily create a lifelike AI digital human. Next, let's dive into the specific production process and start creating an AI digital human. AI Tools DeepSeek: https://w...- 7.3k

-

Mistral embraces open source: teases new AI model that will outperform DeepSeek

March 8 - The Wall Street Journal reported yesterday (March 7) that Mistral AI, a French company known as the "European version of OpenAI," plans to embrace an open-source strategy and release a new model that goes beyond DeepSeek. Speaking at Mobile World Congress 2025, Mistral AI CEO Arthur Mensch said the company will adopt an open-source strategy to develop more powerful AI technology at a low cost, which will not only help the company to iterate quickly in the competition, but also promote global AI ...- 905

-

AI Converts Novel Text to Manga Video in One Click, Easy with DeepSeek + Second Cut App

WeChat's Second Cut is a real treasure of an AI editing tool. Its new features (AI cartoon videos, official-account article to video, and bandwagon videos) are simply great. And it is totally free. Highly recommended. Today, let's look at Second Cut's "AI cartoon video" feature, which, combined with DeepSeek, generates an AI cartoon video from a novel with one click. There is no barrier to entry, and it is very convenient. Step 1: Use DeepSeek to generate the novel text. First, we use DeepSeek to help us generate the text of a novel. How to use DeepSeek needs no introduction. ...- 3.2k

-

How to Use DeepSeek Prompts? 50 Commonly Used DeepSeek Prompts for Everyday Life and Work Scenarios

50 DeepSeek prompts commonly used in daily life and work scenarios, covering multiple fields such as study, work, life, and creativity, organized by category for easy reference: Information Processing (10 items) Paper similarity reduction: Apply three rounds of optimization to the paragraph on ......, output a smart table containing [original sentence vs. revised sentence] [term replacement library] [explanation of the revision logic], and also generate an estimated similarity-check rate report and an academic-standards verification report. Requirements: ① Use a BERT model to verify that semantic drift is <5% ② Retain the core data and monitor it with an LDA topic model ③ Attach 3 alternative phrasings...- 1.8k

-

AI short-video monetization project: producing AI health and wellness short videos with DeepSeek

Today a friend sent me a Douyin link and asked which AI was used to make it. I took a look, and it really is impressive. This health channel was launched on January 21 (DeepSeek R1 was released on January 20), and now, a little more than a month later, it has 250,000 followers and 1.2 million likes. If you then search for the word "health" on Douyin, you will find a lot of channels, all with a good amount of traffic. For example, the channel above, "Chinese Health Channel", started at the end of 2024 and now has 400,000 followers and 3 million likes! Advantages of the health track: large base, people...- 2.2k

-

Education Minister Huai Jinpeng: DeepSeek and Robotics are Major Opportunities for Education

March 5: This morning, the first "Ministers' Corridor" group interview of the third session of the 14th National People's Congress was held. Minister of Transport Liu Wei, Minister of Education Huai Jinpeng, Li Yunze, head of the National Financial Regulatory Administration, and Luo Wen, Director of the State Administration for Market Regulation, took questions. According to CCTV News, Minister of Education Huai Jinpeng said: DeepSeek and robots have attracted widespread attention at home and abroad recently, and I think this also illustrates, in one respect, the effectiveness of China's scientific and technological innovation and talent cultivation. But at the same time, it also presents us, in the face of major scientific and technological change and industrial change... -

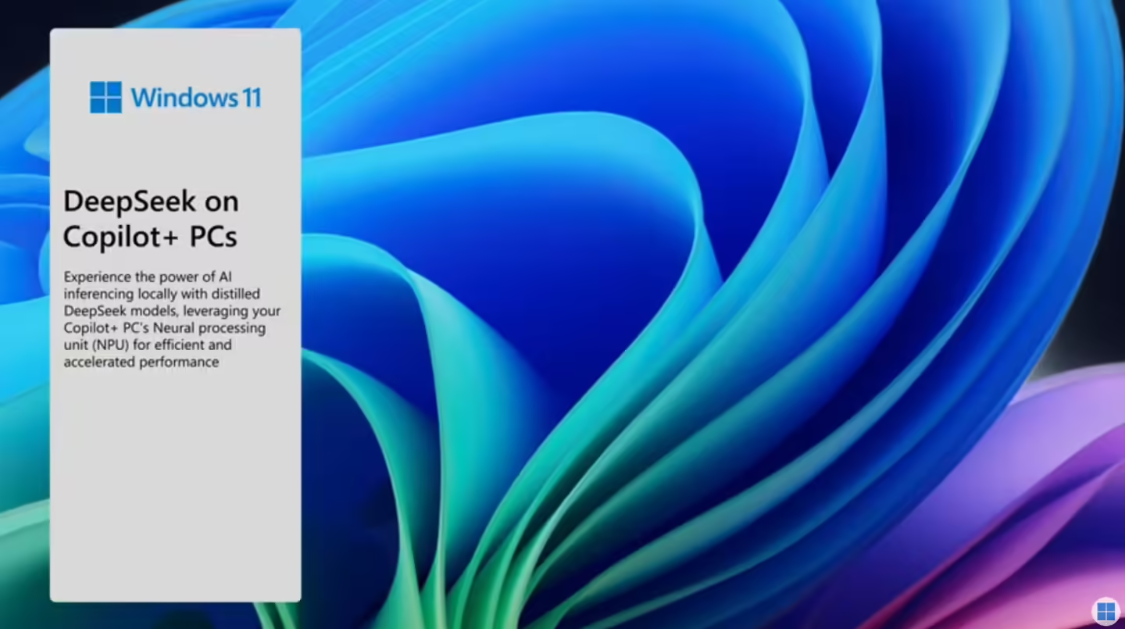

Microsoft Embraces DeepSeek, Copilot+ PCs Run 7B and 14B Models Locally

March 4 - Microsoft today announced that Copilot+ PCs can now run 7B and 14B models locally, using the DeepSeek-R1 7B and 14B distilled models available through Azure AI Foundry. Back in January, Microsoft announced plans to bring NPU-optimized versions of the DeepSeek-R1 models directly to Copilot+ PCs powered by Qualcomm Snapdragon X processors. Now, that promise has finally been realized. 1AI from Microsoft's official blog...- 703

-

Deploying DeepSeek on your computer: a step-by-step guide to building a local DeepSeek knowledge base

For local deployment of DeepSeek, that is, how to install and use the DeepSeek R1 large model on your own computer, see: DeepSeek R1 Local Deployment Guide. How can you make its answers more effective? This is where the knowledge base comes into play. In this article, we use Ollama + Docker + Dify. Note: First of all, please make sure you have installed Git and Python, and that your network connection is stable. Install Ollama and Docker, and download the large model. Please refer to the first and second parts of the following article: DeepSeek...- 1.1k

-

Want to use DeepSeek well? 100 commonly used DeepSeek prompts compiled (creating, learning, working, living)

100 DeepSeek prompts commonly used in daily life and work, covering multiple fields such as study, work, life, and creativity, organized by category for easy reference: Information Processing (15) Paper similarity reduction: Please rewrite this paragraph in a different way, keeping the original meaning intact. Literature review: Please summarize the research results of the last three years in the field of ...... and cite the sources. Meeting minutes: Please convert this recording into text and organize the key decision items and the to-do list. Policy interpretation: Please use a comparison table to explain the differences between the old and new versions of the ...... policy. Competitor analysis: please analyze the function...- 3.4k

-

China Academy of Information and Communications Technology announces that its "Smart Defense" personal information protection model now integrates DeepSeek

March 3: Under the guidance of the Information and Communications Administration Bureau of the Ministry of Industry and Information Technology, the China Academy of Information and Communications Technology released last February the "Smart Defense" assistant, the first domestic AI model for personal information protection, providing intelligent services for App development and operation, detection and protection, and policy interpretation. 1AI has learned that the "Smart Defense" artificial intelligence model is mainly aimed at mobile Internet application developers, distribution platforms, terminal manufacturers, testing organizations, and other upstream and downstream players in the industry, providing multi-modal, diversified services such as compliance consulting, risk detection, code generation, and operational guidance, to build AI-enabled ... ...- 591

-

"China's First AI IDE": ByteDance Releases Domestic Version of Trae, Equipped with Doubao-1.5-pro and Switchable to Full-Power DeepSeek

March 3: ByteDance today announced that the domestic version of Trae, "China's first AI-native integrated development environment (AI IDE)", is officially online. It comes equipped with Doubao-1.5-pro and supports switching to the full-power DeepSeek R1 and V3 models. Trae was previously released overseas, with built-in GPT-4o and Claude-3.5-Sonnet models. The domestic version carries different models, but the functions are basically the same. Trae is positioned as an "intelligent collaborative AI IDE", supporting code completion... -

How to generate images with DeepSeek? One direct prompt to make DeepSeek create images

I had visions of DeepSeek transforming words into lifelike images with just a click. So I asked DeepSeek: Can you make pictures? But it answered me quite sincerely, like this: "Currently, I can't create or generate images directly. However, I can help you conceptualize the image, provide creative suggestions, or recommend tools and software to create the image. If you need to generate images, try using some AI drawing tools like DALL-E, MidJourney or Stable Diffus...- 1.7k

-

Can AI generate ID photos? Generating professional-grade ID photos with DeepSeek and Instant Dream AI

A friend asked me whether ID photos can be generated with AI. He has never used AI image generation and does not know how to write prompts, so he hoped I could recommend a method that is free, easy to operate, and quick to learn. That made me think of using DeepSeek to generate the prompt and then Instant Dream AI to generate the picture. DeepSeek and Instant Dream AI are both currently free to use and easy to operate; follow the tutorial below and you will be able to do it. Operation Demonstration Step 1: Use DeepSeek to generate a text-to-image prompt for the ID photo. Go to the DeepSeek official website: https://chat.deepseek...- 2.8k

-

Write an article you love with AI: 10 prompts to make DeepSeek write "better" articles

Although AI-generated articles look decent, there is still a gap in terms of "quality". Today I am sharing 10 ways to make DeepSeek write higher-"quality" articles, along with some tips I commonly use. I hope they offer a little inspiration to friends who are still at the beginner stage or who are never satisfied with the articles they write. 1. Controversial topic introduction: "Please subtly introduce a controversial topic related to the theme at the beginning or in the middle of the article, to provoke readers to think and discuss. For example, for [article topic], there exists [...- 1.7k

-

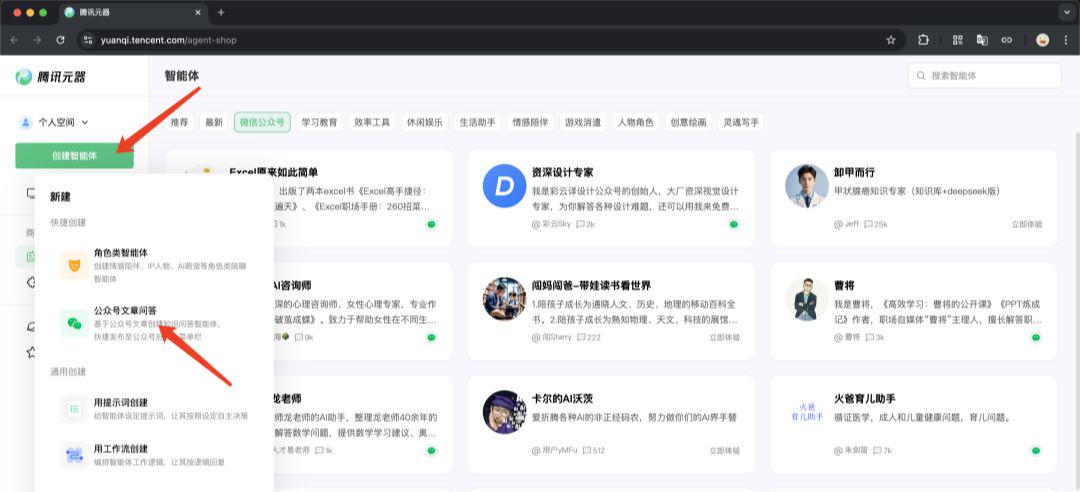

How to build a knowledge base out of WeChat official account articles? Connect Tencent Hybrid to DeepSeek to build a WeChat official account knowledge base assistant

For official account operators, aggregating past articles into a knowledge base not only enriches the account's content ecosystem, but also greatly improves the interactive experience with readers. Imagine how exciting it would be if an official account could act like a smart library, automatically receiving questions from readers and finding relevant information in previously published articles to give accurate answers! This is no longer a distant dream: it can be done in less than a minute by combining DeepSeek and Tencent Hybrid. This article will show you how to public...- 1.3k

-

Moore Threads Supports the DeepSeek Open Source Week "Full Suite"

March 2 - DeepSeek Open Source Week officially wrapped up last night, and Moore Threads Intelligent Technology (Beijing) Co., Ltd. has announced that it achieved full support for the DeepSeek open-source projects in a short period of time, covering FlashMLA, DeepEP, DeepGEMM, DualPipe, and the Fire-Flyer File System (3FS). 1AI's roundup of the code for Moore Threads' support of the DeepSeek Open Source Week "full suite" is as follows: FlashMLA FlashMLA...- 865

-

DeepSeek has only 160 employees: New Hope Chairman Liu Yonghao reveals his conversation with Liang Wenfeng, praises young people for being more attuned to new technologies

February 28: The 2025 national Two Sessions will be held soon. On February 27, a Two Sessions media briefing by Liu Yonghao, member of the National Committee of the Chinese People's Political Consultative Conference and chairman of New Hope Group, was held in Beijing. According to reports from Daily Economic News and Beijing Business News, Liu Yonghao revealed at the briefing what he had discussed with DeepSeek founder Liang Wenfeng. Liu Yonghao said: "Young Mr. Liang of DeepSeek, we had a meal together again during this meeting. I asked him, how many employees do you have now? He said 160. I said, you have such big influence now and are doing so many things, with only 160? ...- 914

-

Don't Know How to Use DeepSeek? Double Your Productivity with These 5 DeepSeek Tips

Recently, almost every day (literally, I am booked until the end of next week...), I have been giving offline DeepSeek talks to various organizations (with lawyers, executives, marketers, doctors, educators, entrepreneurs, and others). During these talks, people asked a lot of interesting questions, questions that AI practitioners would never think of, such as: Will my files be leaked if I upload them to AI? Why can't Coze subscribe to WeChat official accounts? What on earth is this AI? Why does AI make up legal provisions and case numbers and sound so confident about it? Can AI understand me as well as Douyin...- 1.3k