
Open-source large model DBRX: 132 billion parameters, twice as fast as Llama2-70B

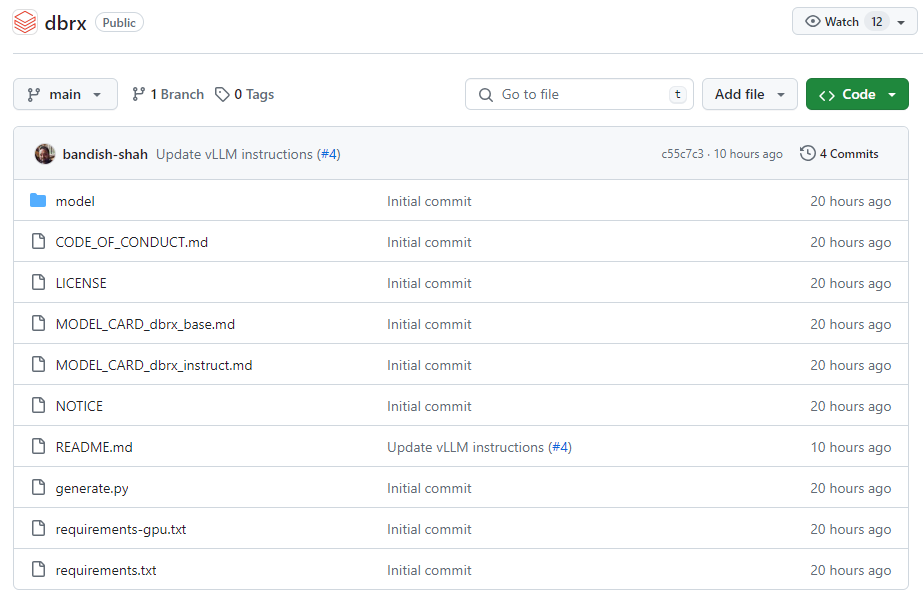

Big data company Databricks has drawn wide attention in the open-source community with its recent release of DBRX, a mixture-of-experts (MoE) large model that outperformed open models such as Grok-1 and Mixtral on benchmarks, making it the new leader among open-source models. The model has 132 billion parameters in total, but only 36 billion of them are active for any given input, and it generates text roughly twice as fast as Llama2-70B. DBRX consists of 16 experts, 4 of which are selected for each token, and supports a context length of 32K tokens. To train DBRX, Data...
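The gap between total and active parameters comes from MoE routing: a small router scores all experts for each token, and only the top-scoring few actually run. Below is a minimal sketch of generic top-k gating, assuming 16 experts with 4 selected per token as the article describes. The helpers `top_k_route` and `moe_output` and the toy scalar "experts" are hypothetical illustrations, not DBRX's actual implementation.

```python
import math
from typing import Callable, List, Tuple

NUM_EXPERTS = 16  # from the article: DBRX has 16 experts
TOP_K = 4         # from the article: 4 experts are active at a time


def top_k_route(logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Hypothetical helper: pick the k highest-scoring experts and
    renormalize their gate weights with a softmax over just those k."""
    if len(logits) < k:
        raise ValueError("need at least k expert scores")
    # Indices of the k largest router scores, best first.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Numerically stable softmax restricted to the selected experts.
    m = max(logits[i] for i in chosen)
    exps = [math.exp(logits[i] - m) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_output(x: float, logits: List[float],
               experts: List[Callable[[float], float]], k: int = TOP_K) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(logits, k))


if __name__ == "__main__":
    # Toy experts: expert s simply scales its input by s (illustration only).
    experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]
    router_logits = [float(i) for i in range(NUM_EXPERTS)]  # made-up scores
    print(top_k_route(router_logits))  # experts 15, 14, 13, 12 with their weights
    print(moe_output(1.0, router_logits, experts))
    # Share of parameters used per token, from the article's figures.
    print(f"active fraction: {36 / 132:.1%}")
```

Note that 36/132 is about 27%, slightly above 4/16 = 25%; this is presumably because layers outside the experts, such as attention and embeddings, are used for every token regardless of routing.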
