-

Amazon upgrades AI video model Nova Reel to generate up to two minutes of multi-camera video

April 8, 2025 - Nova Reel, the Amazon AI video model that debuted last December, has been upgraded to a new version, Nova Reel 1.1, which can generate videos up to two minutes long. According to a blog post by Amazon Web Services (AWS) developer advocate Elizabeth Fuentes, Nova Reel 1.1 can generate "multi-camera" videos with a "consistent style." Users can provide prompts of up to 4,000 characters to generate videos of up to two minutes, composed of six-second shots...

-

Google AI video model Veo 2 debuts on Freepik, free for first 10,000 users

Freepik, a well-known creative resource platform, announced that it has partnered with Google to be the first to launch the latest-generation AI video model, Veo 2, worldwide. The news quickly drew widespread attention, and many users have said that this is probably the most advanced AI video generation tool currently available. According to reports, Veo 2 was developed by the Google DeepMind team and is a fully upgraded version of its predecessor, Veo. It can not only generate videos at up to 4K resolution, but also produce clips up to several minutes long. What's more, Veo 2 has a better sense of realism and animation smoothness in...

-

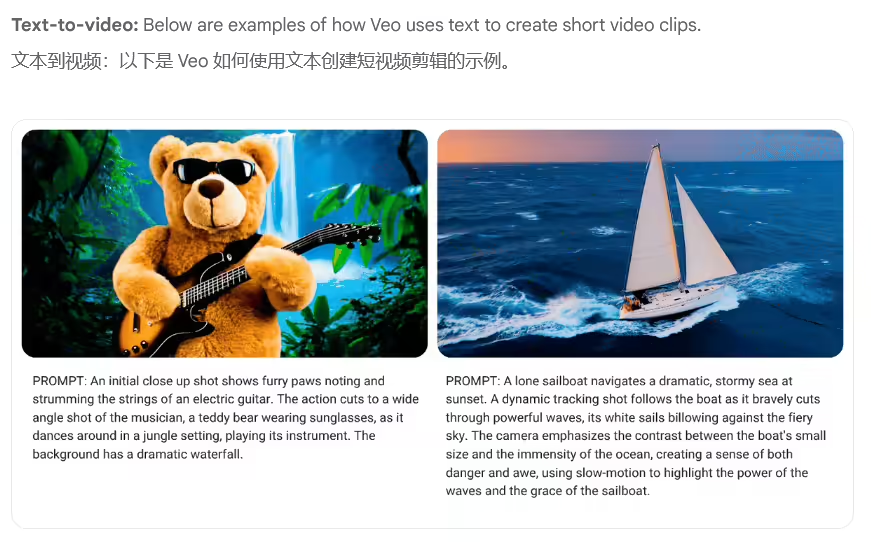

Google Leads the AI Video Generation Race: Launches Veo Model Ahead of OpenAI's Sora to Generate 1080P HD Video

Google announced in a blog post yesterday (December 4) that it is opening its latest generative AI video model, Veo, to enterprises as a private preview on the Vertex AI platform to support enterprise content creation. Google first publicly demonstrated the Veo model in May this year, three months after OpenAI's Sora model, but it has released Veo first to seize the opportunity amid increasingly heated competition in AI video generation. 1AI cites a blog post that describes how Veo can be prompted by text or image...

-

Vidu: A large AI model for long videos that generates 16-second 1080P HD videos with one click

Vidu is China's first long-duration, highly consistent, and highly dynamic video model, released by Shengshu Technology and Tsinghua University. The model uses U-ViT, an original architecture that fuses Diffusion and Transformer, and supports one-click generation of up to 16 seconds of high-definition video at up to 1080P resolution. Vidu can not only simulate the real physical world, but also offers rich imagination, multi-shot generation, and high spatiotemporal consistency. Vidu features: Long-duration high-definition video generation: Vidu can generate up to 16 seconds of high-definition video based on the user's text description...

-

A Sora alternative? Trial address announced for StreamingT2V, a free, open-source AI video model that generates 2-minute videos

Recently, Picsart AI Research and other teams jointly released an AI video model called StreamingT2V, which can generate videos of up to 1,200 frames and 2 minutes in length, technically surpassing the previously popular Sora model. StreamingT2V is not only a breakthrough in video length; it is also a free, open-source project that is compatible with models such as SVD and AnimateDiff, which is significant for the development of the open-source ecosystem. Before Sora, the video…

-

Meta launches AI video model Fairy, which can easily replace video characters and change styles

Meta’s GenAI team introduced a video-to-video synthesis model called Fairy that is faster and more temporally consistent than existing models. The research team demonstrated Fairy’s performance in several applications, including character/object replacement, stylization, and long-form video generation. Simple text prompts such as “in the style of Van Gogh” are enough to edit the source video, and the text command “turn into a snowman” turns the astronaut in the video into a snowman. Visual coherence is a particularly challenging problem for such models because the same prompt can produce different videos.