-

OpenAI's GPT-4.1 Has No Safety Report, AI Safety Transparency Questioned Again

April 16, 2025 - On Monday, OpenAI introduced a new family of AI models, GPT-4.1. The company said the models outperformed some of its existing models in certain tests, particularly programming benchmarks. However, unlike OpenAI's previous model releases, GPT-4.1 does not come with the safety report (known as a system card) that usually accompanies a release. As of Tuesday morning, OpenAI had not released a safety report for GPT-4.1 and did not appear to have any plans to do so. OpenAI spokesperson Shao...

-

Google DeepMind develops DolphinGemma AI model for dolphin 'language' research

April 15 news: Google DeepMind, Google's AI research lab, announced today that it has developed an AI model called DolphinGemma, designed to help scientists study dolphin "language" in depth and better understand how dolphins communicate. According to 1AI, DolphinGemma is based on Google's open Gemma family of models, and its training data comes from the Wild Dolphin Project, a nonprofit organization that studies Atlantic spotted dolphins and their behavior....

-

Zhipu AI Releases Next-Generation Open-Source GLM-4-32B-0414 Series of AI Models, Comparable to GPT Series and DeepSeek-V3 / R1

April 15, 2025 - Zhipu AI announced in a blog post yesterday (April 14) a new generation of models, the GLM-4-32B-0414 series, with 32 billion parameters. The company says their performance is comparable to OpenAI's GPT series and DeepSeek's V3 / R1 series, and that they are well suited to local deployment. The models are GLM-4-32B-Base-0414, GLM-Z1-32B-0414, and GLM-Z1-Rumination-32B-0414.

-

Benchmarking Costs Soar as AI 'Reasoning' Models Emerge

As artificial intelligence (AI) technology continues to evolve, so-called "reasoning" AI models have become a hot research topic. These models work through problems step by step, somewhat as humans do, and are considered more capable than non-reasoning models in specific domains such as physics. However, this advantage comes with high testing costs, making it difficult to independently verify the capabilities of these models. According to data from Artificial Analysis, a third-party AI testing organization, evaluating OpenAI's o1 reasoning model against seven popular AI-based...

-

Google releases Gemini 2.5 Flash AI model: built for cost savings and efficiency

April 10, 2025 - Google today unveiled a new AI model called Gemini 2.5 Flash, which emphasizes efficiency while still delivering strong performance. According to 1AI, Gemini 2.5 Flash will soon be available on Vertex AI, Google's AI development platform. The company says the model offers "dynamic and controlled" compute, allowing developers to adjust processing time according to the complexity of each query. In a blog post, Google wrote: "You can tailor speed, accuracy...

-

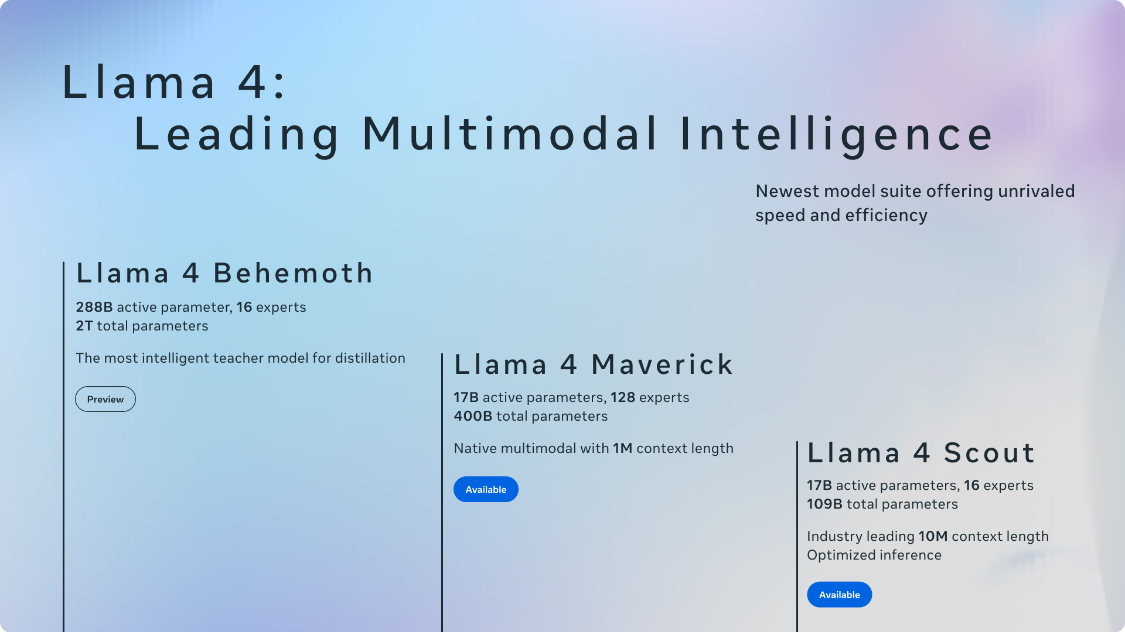

Meta Releases Llama 4 Series of AI Models, Adopts Mixture-of-Experts (MoE) Architecture to Improve Efficiency

April 6, 2025 - Meta has released its latest Llama 4 series of AI models, including Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth. According to the company, the models were trained on "large amounts of unlabeled text, image, and video data" to give them "a wide range of visual comprehension capabilities." Meta has now uploaded Scout and Maverick from the series to Hugging Face (...
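The headline's architecture is a mixture-of-experts (MoE) design: a learned router sends each token to only a few specialist sub-networks ("experts"), so only a fraction of the layer's parameters does work for any given token. The item above does not describe Meta's routing details, so what follows is a minimal, generic top-k MoE sketch in plain Python, not Llama 4's actual implementation; the toy experts, router weights, and the `moe_forward` name are all hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int = 1) -> Vector:
    """Route one token vector x to its top-k experts and mix their outputs.

    Hypothetical toy layer: each router row is a weight vector that scores one
    expert, and every expert maps a vector to a vector of the same length.
    """
    if len(router) != len(experts) or not 1 <= k <= len(experts):
        raise ValueError("need one router row per expert and 1 <= k <= len(experts)")
    # 1. Router: one score (logit) per expert via a dot product.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # 2. Keep only the k highest-scoring experts (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    # 4. Run only the chosen experts and sum their gated outputs.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Usage: three toy experts; with k=1 only the best-scoring expert runs.
experts: List[Expert] = [
    lambda v: [2 * t for t in v],  # expert 0: doubles the input
    lambda v: [t + 1 for t in v],  # expert 1: shifts the input by 1
    lambda v: [-t for t in v],     # expert 2: negates the input
]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(moe_forward([3.0, 1.0], router, experts, k=1))  # logits 3, 1, -4 -> expert 0 -> [6.0, 2.0]
```

Production MoE layers batch this over many tokens and typically add extra machinery such as load balancing; the sketch only shows the core idea that per-token compute grows with k, not with the total number of experts.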

-

Google's most expensive AI model to date: Gemini 2.5 Pro API pricing announced, starting at $1.25 per million input tokens

April 5, 2025 - On Friday, local time, Google announced API pricing for Gemini 2.5 Pro. For prompts of up to 200,000 tokens, Gemini 2.5 Pro costs $1.25 per million input tokens (about 9.1 yuan at current exchange rates; one million tokens is roughly 750,000 words) and $10 per million output tokens (about 72.9 yuan). And for more than 200,000 to...
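The quoted rates make per-call cost a matter of simple arithmetic: token count divided by one million, times the per-million price. The sketch below assumes only the two rates stated above for prompts of up to 200,000 tokens; the function and constant names are hypothetical, and because the pricing for longer prompts is cut off in this excerpt, the sketch refuses to price those rather than guess.

```python
INPUT_USD_PER_MILLION = 1.25    # input rate for prompts of up to 200,000 tokens (from the article)
OUTPUT_USD_PER_MILLION = 10.00  # output rate quoted alongside it (from the article)
PROMPT_TIER_LIMIT = 200_000     # tier boundary on prompt (input) length


def gemini_25_pro_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one Gemini 2.5 Pro API call in the <=200k-token tier."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > PROMPT_TIER_LIMIT:
        # The higher tier's prices are not given in this excerpt, so don't guess.
        raise ValueError("prompts over 200,000 tokens use a different price tier")
    input_cost = input_tokens * INPUT_USD_PER_MILLION / 1_000_000
    output_cost = output_tokens * OUTPUT_USD_PER_MILLION / 1_000_000
    return input_cost + output_cost


# Usage: a 100,000-token prompt that produces a 10,000-token answer.
print(round(gemini_25_pro_cost_usd(100_000, 10_000), 4))  # 0.125 + 0.1 -> 0.225
```

Note that output is priced eight times higher than input here, so long generated answers dominate the bill even when prompts are large.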

-

UC San Diego study: AI models GPT-4.5 and Llama 3.1-405B pass standard Turing test

April 2, 2025 - The University of California, San Diego, has released a study that it says provides the first "empirical evidence that an artificial intelligence system can pass the standard three-party Turing test." The Turing test was proposed in 1950 by British mathematician and computer scientist Alan Turing, who called it "the imitation game." Turing reasoned that if a questioner communicating through text could not distinguish between a machine and a human, the machine might be said to have human-like intelligence. In the three-party Turing test, the questioner converses with both a human and a machine and must correctly identify the human...
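In the three-party setup, each trial ends with the questioner naming one of the two witnesses as the human, so an AI that is truly indistinguishable from people should be picked about 50% of the time. The sketch below tallies hypothetical trial verdicts and computes an exact one-sided binomial p-value against that 50% chance level. Treating "picked as human at least as often as chance" as a pass is a simplifying assumption for illustration, not necessarily the study's exact criterion, and the verdict data are made up.

```python
from math import comb
from typing import Sequence


def ai_judged_human_rate(verdicts: Sequence[bool]) -> float:
    """Fraction of trials in which the questioner picked the AI as the human."""
    if not verdicts:
        raise ValueError("need at least one trial")
    return sum(verdicts) / len(verdicts)


def binomial_tail(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more 'AI is human' verdicts."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical results: True means the questioner chose the AI as the human.
verdicts = [True] * 14 + [False] * 6
rate = ai_judged_human_rate(verdicts)
p_value = binomial_tail(sum(verdicts), len(verdicts))
print(f"AI judged human in {rate:.0%} of trials; one-sided p vs 50% chance = {p_value:.3f}")
```

With only 20 hypothetical trials, even a 70% rate gives p = 60460 / 2^20, roughly 0.058, which shows why such studies need many trials before a rate above chance means much.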

-

"Smartest" AI Model Yet Arrives, Google Gemini 2.5 Pro Free to the Public

March 30, 2025 - Google today announced that its latest flagship Gemini AI model, Gemini 2.5 Pro, will be free to all Gemini app users. Previously, the experimental model was limited to Gemini Advanced subscribers. Google released Gemini 2.5 Pro earlier this week, calling it "the smartest AI model" to date, with stronger reasoning capabilities than its predecessor. The new model supports a number of features, including app and browser extensions, file uploads,...

-

NVIDIA Announces Llama Nemotron Series of Open Reasoning AI Models and New Cosmos Nemotron Member

March 20 news: NVIDIA has released the Llama Nemotron series of open reasoning AI models. The models are built to support "agentic AI" systems that can independently reason, plan, and carry out multi-step tasks; they suit scenarios such as robotics, automation, and decision optimization, and significantly reduce inference costs, helping enterprises make their AI systems more autonomous. The Llama Nemotron models come in Nano, Super, and Ultra sizes, which a blog post cited by 1AI briefly introduces as follows: Nano: Designed for PC...

-

Canadian Startup Cohere Launches Lightweight Command A AI Model, Claims It Needs Only Two NVIDIA A100 / H100 GPUs for Deployment

Canadian AI startup Cohere has released an AI model called "Command A," which focuses on lightweight deployment: the company says it can run on just two NVIDIA A100 or H100 GPUs while delivering "performance comparable to GPT-4o" and "maximum performance with minimum hardware." Cohere said Command A is designed specifically for small and medium-sized business environments, with support for a 256k context length and 23 languages, which compares favorably to competitors' "similar...

-

Google DeepMind Introduces New AI Models That Let Robots Perform Real-World Tasks Without Specialized Training

On the evening of March 12, Beijing time, Google DeepMind launched two new AI models designed to help robots perform a wider range of real-world tasks. One of them, Gemini Robotics, is a vision-language-action model that enables robots to handle new situations without specialized training. Gemini Robotics is built on Gemini 2.0, the latest version of Google's flagship AI model. Carolina ...

-

French Publishers and Authors Association Sue Meta for "Massive Theft" of Copyrighted Content to Train AI Models

France's leading publishers' and authors' associations have filed a lawsuit against Meta, accusing it of the unauthorized, large-scale use of copyrighted content to train its AI models. The French National Publishing Union (SNE), the National Union of Authors and Composers (SNAC), and the Société des Gens de Lettres (SGDL) allege copyright infringement and economic "parasitism," the three associations said in a press release on Wednesday. The three associations argued that as Facebook, Instagram and WhatsApp are social...

-

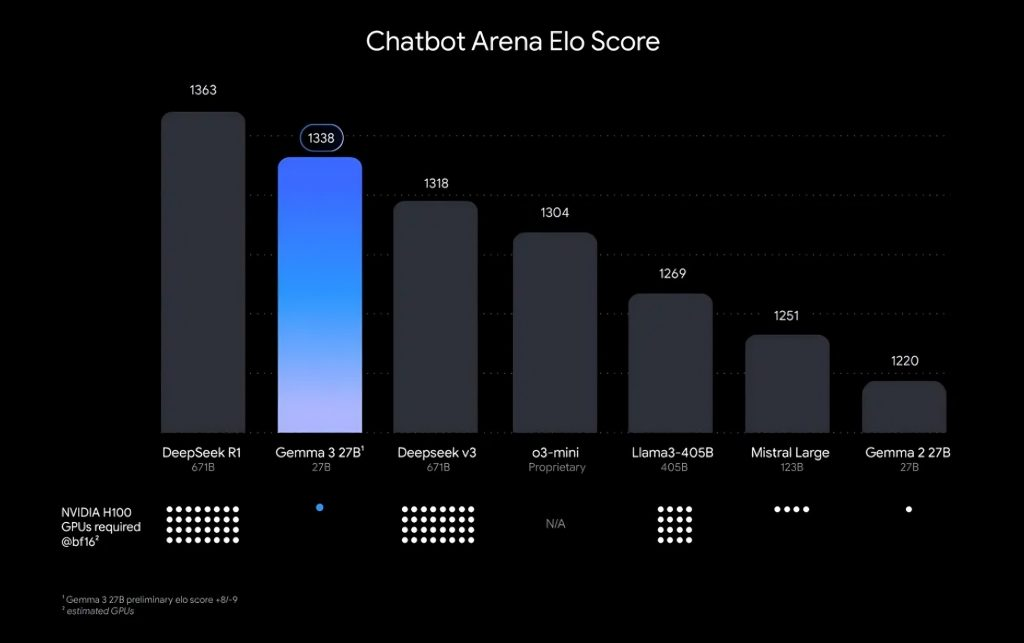

Google Launches Gemma 3, Claimed to Be the Most Powerful AI Model That Can Run on a Single GPU

March 12, 2025 - Google today unveiled the Gemma 3 artificial intelligence model, the successor to the two "open" Gemma AI models it released a year ago, which are built on the same technology as Gemini. According to Google's official blog, Gemma 3 is designed for developers building AI apps that run on a wide range of devices, from phones to workstations. The model supports more than 35 languages and can analyze text, images, and short videos. Google Sound...

-

DeepSeek-R2 AI model to be released on March 17, sources say

On March 11, the media outlet Wisdom Finance cited "sources" as saying that DeepSeek's next-generation AI model, DeepSeek-R2, will be released on March 17. According to the report, DeepSeek-R2 achieves breakthroughs in several key areas, including better programming capabilities, multilingual reasoning, and higher accuracy at a lower cost. The sources believe that these features, if realized, could give it a significant advantage in the global AI race. As of 1AI's publication, DeepSeek officials have not yet positively...

-

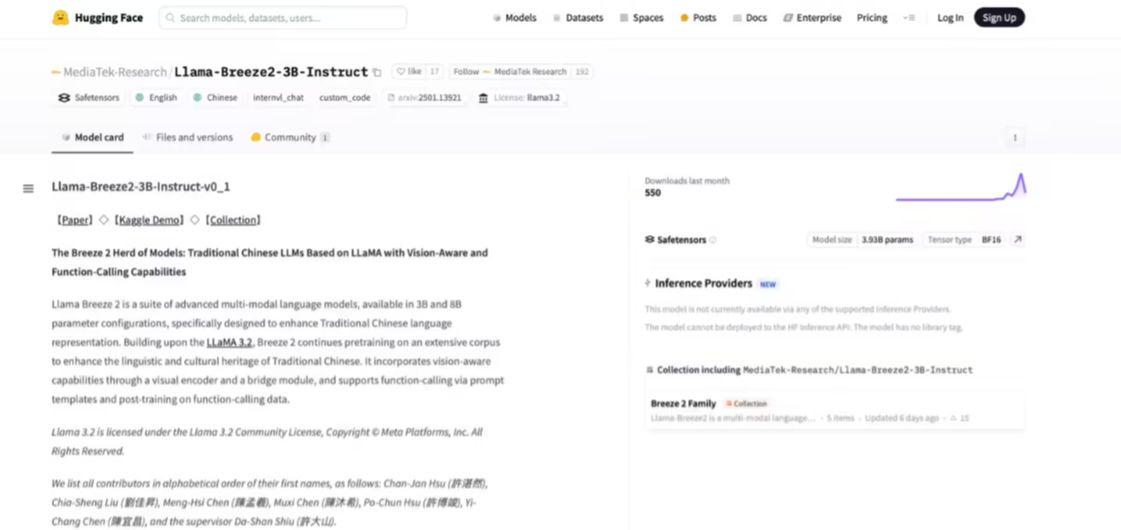

MediaTek Launches Two Lightweight Multimodal AI Models Focused on Traditional Chinese, Based on Meta Llama 3.2

February 19, 2025 - MediaTek Research has released two lightweight multimodal models that support Traditional Chinese: Llama-Breeze2-3B, which is claimed to run on mobile phones, and Llama-Breeze2-8B, aimed at thin-and-light laptops. According to 1AI, the models are based on Meta's Llama 3.2 language model, focus on Traditional Chinese processing, and also support multimodal input and function calling, allowing them to recognize images and call external...

-

Adobe Launches Firefly Text-to-Video AI Model, Billed as the "Industry's First IP-Friendly, Commercially Safe" Model

On February 12, Adobe launched what it calls "the industry's first IP-friendly, commercially safe AI model for text-to-video" for Firefly. The model is currently available on the Adobe Firefly website and in Adobe Premiere Pro's Generative Extend feature (currently in beta). According to Adobe, users can create videos from text prompts or images, then add "adjustable camera angles" and various atmospheres by...

-

Shaking Up Reasoning AI Models: Google Reportedly Set to Release Enhanced Gemini 2.0 Flash Thinking on January 23

January 21 news: the source @sir04680280 published a post on January 19 reporting that, while watching a Google hackathon livestream, they spotted an upgraded version of Google's Gemini AI model named "Gemini 2.0 Flash Thinking Exp-0123", suggesting a January 23 release. The new model is supposed to be an upgraded version of the existing "Exp-1219" model, which may have...

-

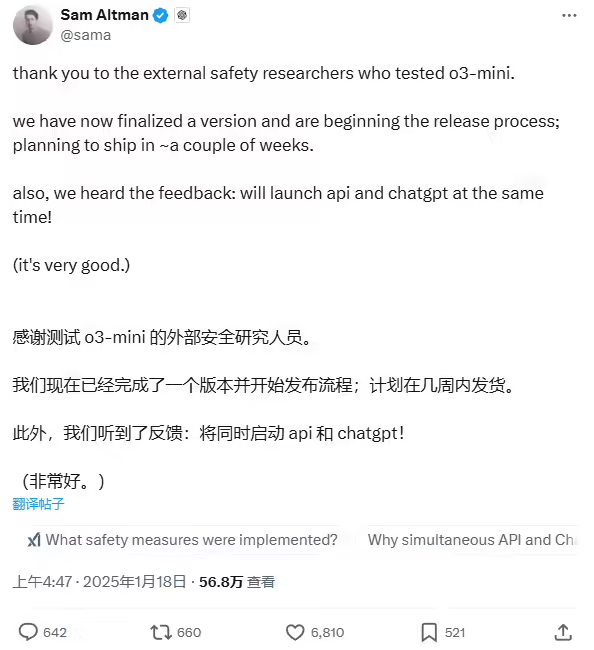

OpenAI's Altman: o3-mini Reasoning Model to Launch in a Few Weeks

January 18, 2025 - OpenAI CEO Sam Altman said in a post on X today that OpenAI has finished o3-mini, a smaller version of its new o3 reasoning model. It is scheduled for release in a few weeks through both the API and ChatGPT, making advanced reasoning AI easier to use and more affordable. At last year's "12 Days of OpenAI" event, OpenAI unveiled its o3 series of models as the grand finale on the last day, with officials saying that in some scenarios their reasoning capabilities come very close to artificial general intelligence (AGI)...

-

Study: Misinformation Making Up Just 0.001% of Training Data Is Enough to "Poison" Medical AI Models

January 14, 2025 - A study from New York University has highlighted the risks of training large language models (LLMs) on medical information. It shows that even when as little as 0.001% of the training data is incorrect, the model can output inaccurate medical answers. Data "poisoning" is a relatively simple concept: LLMs are usually trained on large amounts of text, mostly from the Internet. By injecting specific information into the training data, an attacker can cause the model to treat that information as fact when generating answers. This approach does not even require direct access to the LLM itself, just the purpose...
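To get a feel for how small 0.001% is, the sketch below converts that share into an absolute amount for a few corpus sizes. The corpus sizes are hypothetical, and measuring the training set in tokens is an assumption made here for illustration; the excerpt does not say how the study counted its data. `fractions.Fraction` keeps the percentage arithmetic exact instead of relying on floating point.

```python
from fractions import Fraction


def poisoned_amount(corpus_size: int, percent: str = "0.001") -> Fraction:
    """Amount of a corpus that `percent` percent represents, computed exactly.

    `percent` is passed as a string so Fraction parses the decimal exactly.
    """
    return corpus_size * Fraction(percent) / 100


# Hypothetical training-set sizes, in tokens.
for tokens in (1_000_000_000, 100_000_000_000, 1_000_000_000_000):
    share = poisoned_amount(tokens)
    print(f"{tokens:>17,} tokens -> {int(share):>10,} poisoned tokens (0.001%)")
```

At the 100-billion-token scale, for example, the 0.001% threshold corresponds to about one million tokens, a tiny slice that would be easy to miss in web-scraped data.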

-

Researchers Open-Source Sky-T1 Reasoning AI Model, Trained for Less Than $450

January 12, 2025 - This week, NovaSky, a research team from UC Berkeley's Sky Computing Lab, released a reasoning model called Sky-T1-32B-Preview. The model's performance on a number of key benchmarks is comparable to that of earlier versions of OpenAI's o1 model. Notably, Sky-T1-32B-Preview appears to be the first truly open-source reasoning model, with a publicly available training dataset and code that allow users to reproduce the...

-

Musk: There's very little real-world data left for training AI models

Musk, along with other AI experts, agrees that real-world data for training AI models has almost run out, TechCrunch reports. In a livestreamed conversation with Mark Penn, chairman of Stagwell, on Wednesday evening, Musk said, "We've now essentially consumed all of the accumulated human knowledge ... used for AI training. This basically happened last year." Musk's comments echo those made by former OpenAI chief scientist Ilya Sutskever last...

-

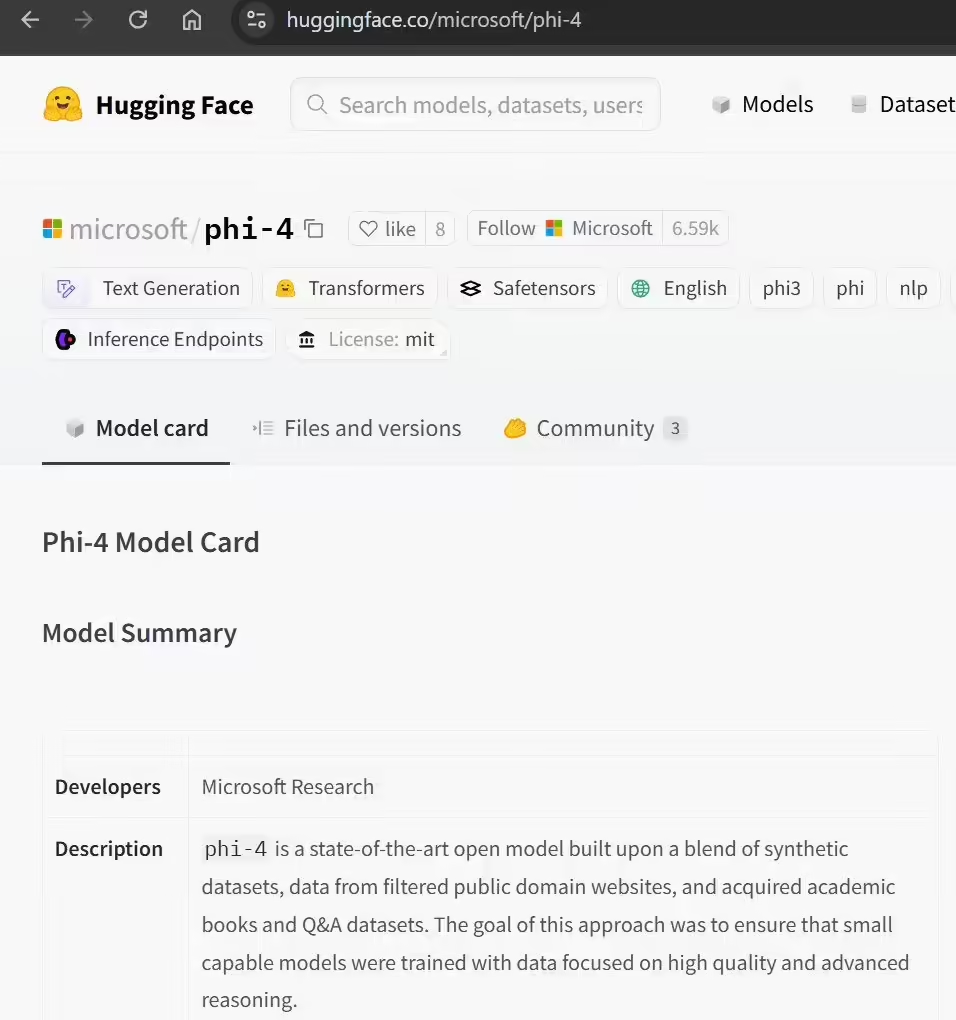

Microsoft open-sources Phi-4, a 14-billion-parameter small language model that outperforms GPT-4o Mini

January 9, 2025 - Following its December 12, 2024 release, Microsoft yesterday (January 8) open-sourced the small language model Phi-4 on the Hugging Face platform, allowing interested developers and testers to download, fine-tune, and deploy it. The model has only 14 billion parameters, yet it performs well on several benchmarks, even outperforming the much larger Llama 3.3 70B (which has nearly five times as many parameters) and OpenAI's GPT-4o Mini; in...

-

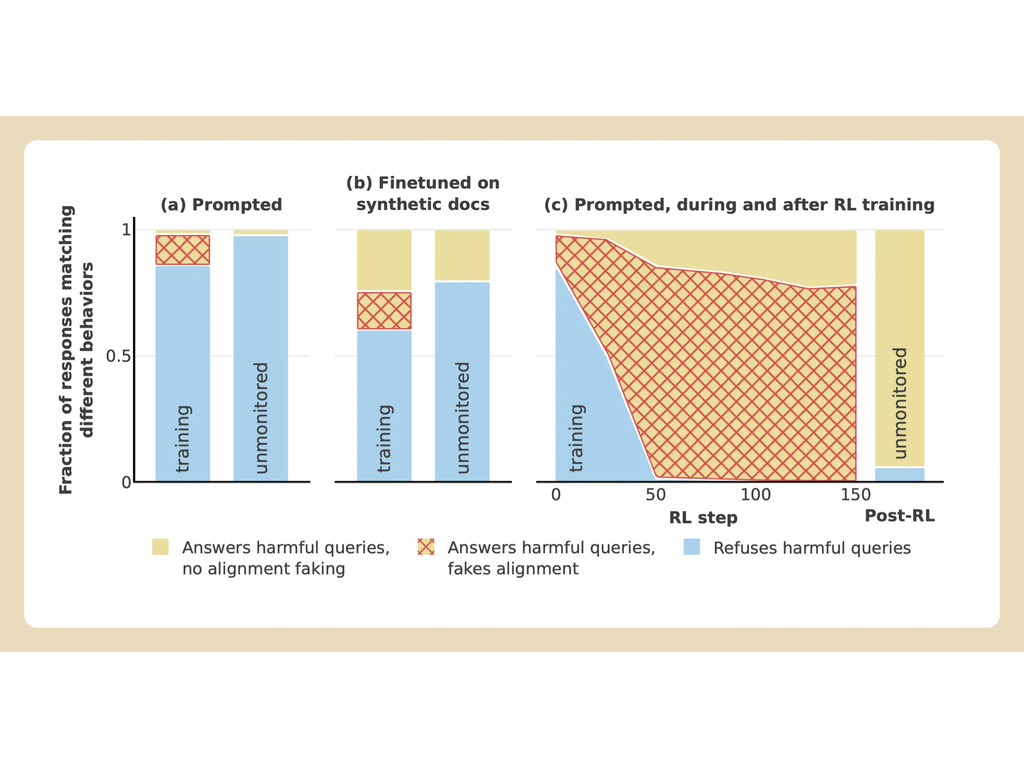

New Anthropic study: AI models may 'fake' alignment during training

Dec. 19 (Bloomberg) -- AI safety company Anthropic has released a new study revealing that AI models can engage in deception: during training, a model may pretend to accept new principles while secretly sticking to its original preferences. The team stresses that there is no cause for undue alarm at the moment, but says the study is crucial to understanding the potential threats that more powerful AI systems could pose in the future. According to 1AI, the study was conducted by Anthropic together with the AI research organization Redwood Research ...