-

Google keeps rolling out "new skills": Gemini can now quickly summarize PDF content

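Google keeps unlocking new skills for its AI assistant Gemini and integrating them into phones that run Android 15 and have Gemini set as the default assistant. One of them, "Ask about this PDF," is part of the latest update to Files by Google (Google's official file manager, known in Chinese as "文件极客"). According to Android Police, the feature was initially described on the Play Store as "coming soon," but it has now begun rolling out gradually. The outlet's…- 387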

-

Google Expands Gemini's Deep Research Mode to Support 40+ Languages, Including Chinese

December 21 - Google announced this Friday that Gemini's Deep Research mode now supports more than 40 languages, including Chinese. Google brought Deep Research to Gemini this month, and it is now available to Google One AI Premium subscribers, providing powerful AI research assistance. Note: The mode takes a multi-step approach, first creating a research plan and then finding relevant information; the tool will then use the information...- 519

-

AI + Robotics: Google Partners with Apptronik to Commercialize Humanoid Robots

December 20 - Google DeepMind announced that it has partnered with Apptronik to develop a new generation of humanoid robots to address the complex operational challenges of dynamic real-world environments. The collaboration will combine Google's cutting-edge AI technology with Apptronik's proven robotics hardware to further enhance the functionality and safety of humanoid robots, enabling them to perform complex tasks in the real world. 1AI Note: Founded in 2016, Apptronik has been leading innovation in robotics, developing robots including the NASA Valk...- 768

-

Google's new rules cause concern: sources say contractors are forced to evaluate Gemini replies outside their expertise

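According to TechCrunch, Google has changed the outsourced evaluation process for responses from its large language model Gemini, raising concerns about the accuracy of its information in sensitive areas. Under the change, contract evaluators may no longer skip certain evaluation tasks for lack of relevant expertise, as they previously could, which may skew Gemini's output in highly specialized fields such as healthcare. To improve Gemini, Google works with GlobalLogic, an outsourcing firm owned by Hitachi, whose…- 393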

-

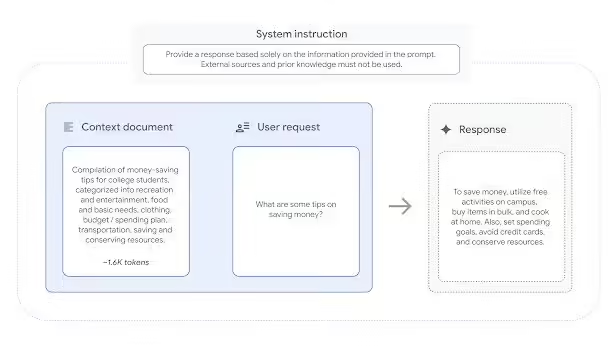

Google Releases FACTS Grounding Benchmark: Gemini, GPT-4o, and Claude as Judges, an "Illusionary Mirror" for Large Language Models

December 18 - In a blog post published on December 17, the Google DeepMind team announced the launch of the FACTS Grounding benchmark, which evaluates the ability of large language models (LLMs) to answer questions accurately based on given material and to avoid "hallucinations" (i.e., fabricated information). The aim is to improve the factual accuracy of LLMs, increase user trust, and expand their range of applications. Dataset: The FACTS Grounding dataset contains 1,719 examples covering finance, technology, retail,...- 649

-

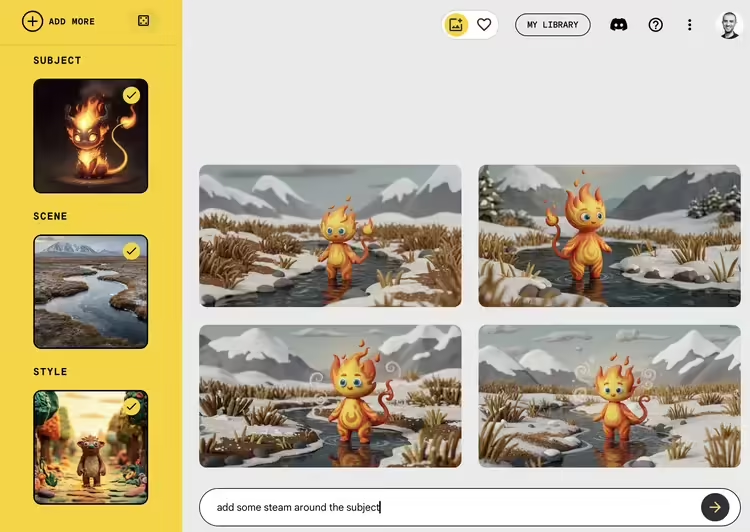

Google Releases Whisk, a New AI Image Generation Tool, Supports Uploading Multiple Images to Generate Graphics

Google on December 17 released a new AI image generation tool called Whisk, which lets users generate images by using other images as prompts, without the need for lengthy text prompts. Users can specify the subject, scene, and style of the AI-generated image simply by providing images. When using Whisk, you can provide multiple images as prompts for each of subject, scene, and style. (Of course, users can still choose to use text prompts.) If you don't have a suitable image on hand, you can click the dice icon and Google will automatically fill in some images as prompts...- 724

-

Google: Customers can use its AI to make decisions in 'high-risk' areas as long as there's human oversight

Dec. 18 (Bloomberg) -- Google has made it clear, in the form of an updated usage policy, that customers can use its generative AI tools to make "automated decisions" in "high-risk" areas such as healthcare, as long as there is human oversight. According to an update to the company's Generative AI Prohibited Use Policy released Tuesday, customers can use Google's generative AI to make "automated decisions" that could have a significant adverse impact on individuals' rights under certain conditions, such as in the areas of employment, housing, insurance and social benefits. These decisions are allowed as long as there is some form of human oversight...- 392

-

New Google NotebookLM feature goes live: users can interact with AI hosts

December 14 - NotebookLM, Google's note-taking app, introduced a new feature today that allows users to talk directly to an AI "host." 1AI, citing Google's blog, reports that the process is as follows: Create a new audio overview. Click the "Interactive Mode (BETA)" button. During playback, click "Join". The host will invite you to ask a question. Ask your question and the host will give you a personalized answer based on the information you have provided. After answering, the host will resume the original audio overview. Google also warns that this is still...- 563

-

Google starts rolling out a new version of its Gemini AI voice assistant to its smart speakers, first batch covers Nest Audio / 2nd generation Mini

December 14 - Google announced that it has begun rolling out a new version of the Google Assistant voice assistant, powered by Gemini, to some smart speaker users. The update currently applies only to the Nest Audio and second-generation Nest Mini smart speakers; Nest smart displays and other speaker devices will have to wait. Google previewed the new Google Assistant in August, and the new Assistant combines Gemini...- 584

-

Harvard, Google release 1 million public domain books to provide legitimate data for AI training

December 13 - Harvard University and Google announced the joint release of 1 million public domain books as an AI training dataset, TechCrunch reported on December 12. The data required for AI training is costly, which favors well-funded tech companies. As a result, Harvard plans to release a dataset of about 1 million public domain books covering a wide range of genres, languages, and authors, including classic authors such as Dickens, Dante, and Shakespeare whose works are no longer under copyright, due to the fact that the copyrights on these works...- 589

-

Google Releases Multimodal Live API: Unlocking Watching, Listening, and Speaking, Opening a New Experience in AI Audio and Video Interaction

Google released Gemini 2.0 yesterday along with a new Multimodal Live API to help developers build apps with real-time audio and video streaming capabilities. The API enables low-latency, bi-directional text, audio and video interactions with audio and text output for a more natural, smooth, human-like interactive experience. Users can interrupt the model at any time and interact with it by sharing camera input or screen recordings to ask questions about the content. The model's video comprehension feature extends the communication model...- 579

-

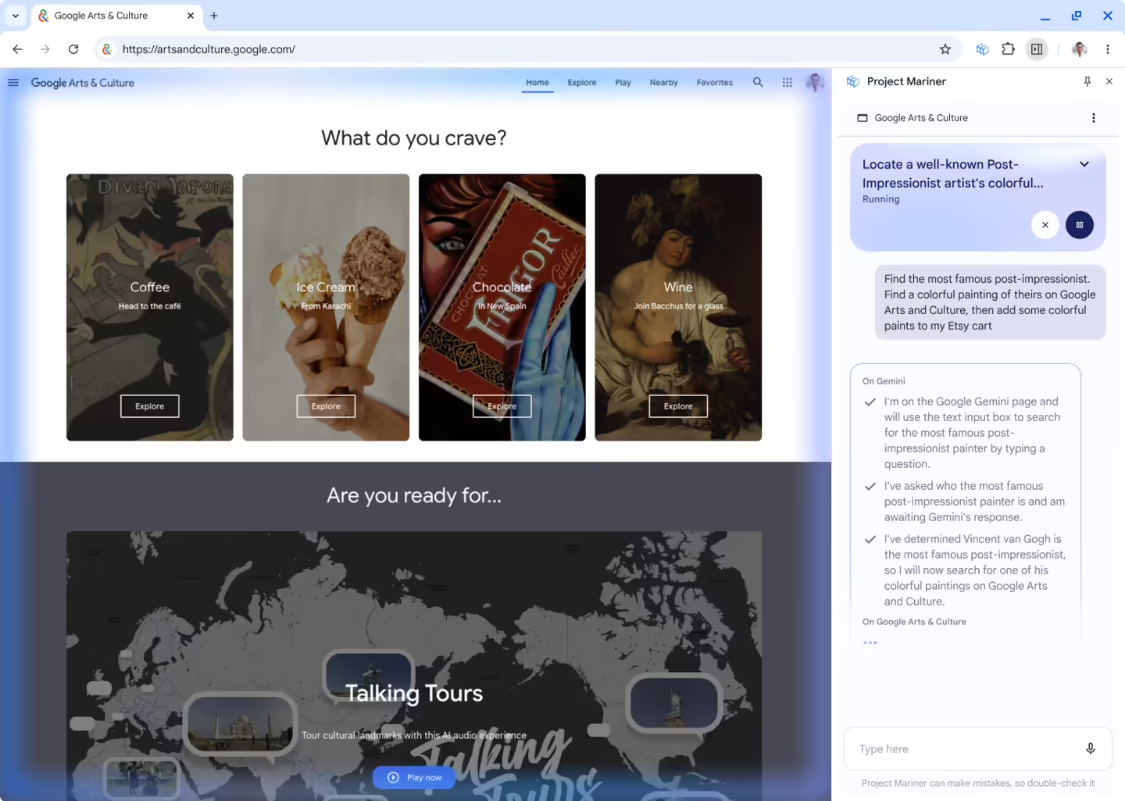

Google unveils Mariner, a prototype AI agent that helps users work with spreadsheets and shop online

On Wednesday, U.S. time, Google unveiled an AI agent that can browse websites autonomously. The experimental tool can navigate spreadsheets, shopping sites, and other online services and then perform actions on behalf of the user. Today, chatbots can answer questions, write poetry, or generate images. In the future, they may also be able to carry out tasks such as online shopping independently and operate tools such as spreadsheets. Google is launching a prototype of this agent called Mariner. Several tech companies are developing similar agents, including startups such as OpenAI and Anthropic...- 680

-

Google: "AI Overviews" Feature Planned for More Countries and Languages Next Year

Following the launch of its latest AI model, Gemini 2.0, Google CEO Sundar Pichai said the company will incorporate Gemini 2.0's advanced reasoning capabilities into AI Overviews, which will be able to handle "more complex topics" as well as "multimodal" and "multistep" searches, including advanced math problems and programming questions, TechCrunch reports. Our AI Overviews now reach 1 billion users, enabling them to ask entirely new types of questions, and have quickly become our most popular search feature...- 661

-

Google launches 'Deep Research' AI tool that generates research reports with a single click

December 12 - Google today launched a new artificial intelligence tool called Deep Research, which uses its Gemini large language model to retrieve information from the web and generate detailed research reports for users. Deep Research is currently available only to Gemini Advanced subscribers and only in English. Users with access can ask Gemini to conduct research on a specific topic, and the chatbot will then create a "multi-step research plan" with...- 580

-

Google launches Jules, an AI code assistant that helps developers fix code errors

December 12 - Google today announced the launch of an experimental AI code assistant called Jules, designed to help developers fix code errors automatically. The tool, released today alongside Gemini 2.0, uses updated Google AI models to create multi-step plans to solve problems, modify multiple files, and prepare pull requests for Python and JavaScript coding tasks in GitHub workflows. Last year, Microsoft introduced similar functionality to its GitHub Copilot, which can...- 971

-

Google says Project Astra will open for testing, with some people experiencing the AI assistant through smart glasses

Google's investment in artificial intelligence is enormous, and to truly understand its vision for virtual assistants, we have to look at Project Astra. At this spring's Google I/O conference, Google demonstrated this full-featured multimodal virtual assistant and positioned it as a "constant companion" in the user's life. Although the technology still sits somewhere between a "concept video" and an "early prototype," it represents Google's most ambitious attempt at artificial intelligence. In Astra's demo, one recurring element caught people's attention: the eye...- 541

-

Google's Late Night Blowout: Gemini 2.0 Launches, Roughly Twice the Performance of 1.5 Pro on Key Benchmarks

Google has officially released Gemini 2.0, which it claims is its most powerful AI model to date, bringing stronger performance, broader multimodal capabilities (e.g., native image and audio output), and new native tool use. Gemini 2.0 achieves significant performance gains and lower latency than Gemini 1.5 Pro in key benchmarks, with Google officially saying that it "outperforms and is twice as fast as 1.5 Pro in key benchmarks". According to the report, Gemini 2.0 also brings a variety of new features. In addition to support for graph...- 537

-

Google CEO Pichai mocks Microsoft: they're using AI models developed by others

Beijing time this morning, according to The Information, citing sources familiar with the matter, Google has recently pressured the U.S. Federal Trade Commission (FTC) to lift Microsoft's exclusive agreement to host OpenAI technology on its cloud servers. The comments came after the FTC asked Google about Microsoft's business practices. It is understood that the purpose of the FTC inquiry was to conduct a broader investigation. A range of Microsoft competitors such as Google and Amazon want to host OpenAI's AI services themselves, with the aim of eliminating the need for their cloud customers to both...- 561

-

Google Says Its PaliGemma 2 Artificial Intelligence Model Can Recognize Emotions, Sparking Expert Concerns

Dec. 8 (Bloomberg) -- Google says its new family of artificial intelligence models has a nifty feature: the ability to "recognize" emotions. Google on Thursday unveiled PaliGemma 2, its newest family of AI models, which has image analysis capabilities that can generate descriptions of images and answer questions about the people in them. In its blog post, Google describes PaliGemma 2 as being able to not only recognize objects, but also generate detailed and contextually relevant image descriptions that cover actions, emotions, and the overall narrative of the scene. PaliGemma 2's emotion-recognition feature isn't out-of-the-box...- 795

-

Taking on DALL・E 3: Imagen 3, Google's "Most Powerful Text-to-Image Model," Goes Live!

To take on the DALL・E 3 model, Google announced that its image generation model Imagen 3 has completed public testing and is now officially live. According to the introduction, Imagen 3 is said to be Google's most advanced text-to-image model. It opened to users in the United States in August this year, and Google Cloud subscribers can now try it. Google claims that Imagen 3 can understand long text prompts and generate detailed, vivid, "photo-quality" images while avoiding obvious visual noise. Google is also providing ...- 1.2k

-

15-Day Forecasts in 8 Minutes: Google's GenCast AI Model Debuts, Sets New Benchmark for Weather Forecasting

In a December 4 blog post, the Google DeepMind team unveiled GenCast, a new AI weather model that provides faster and more accurate weather forecasts up to 15 days in advance, surpassing the European Centre for Medium-Range Weather Forecasts' (ECMWF) ENS system in predictive accuracy. Project background: Weather affects us all, shaping our decisions, safety and lifestyles. Note: With climate change leading to more extreme weather events, accurate and reliable forecasts are more important than ever. However, weather cannot be perfectly predicted and forecasts a few days out...- 1.2k

-

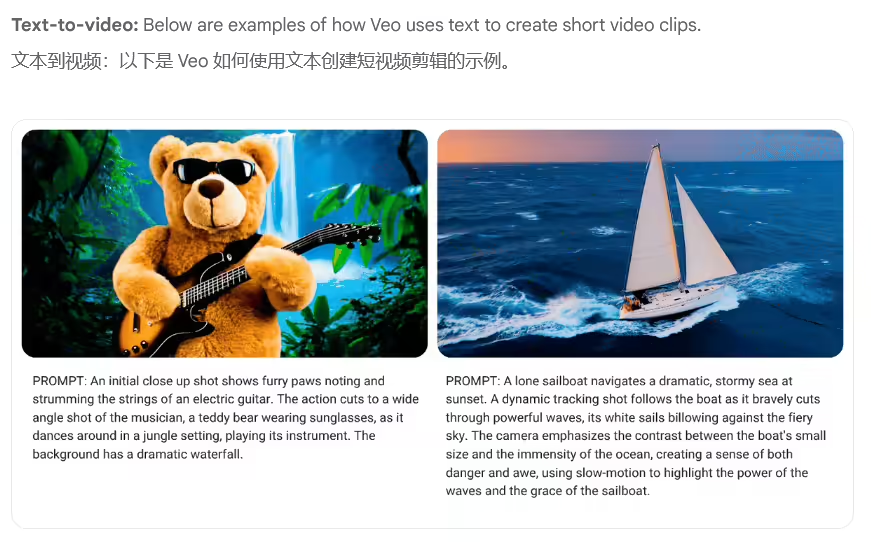

Google Leads AI Video Generation Race: Launches Veo Model Ahead of OpenAI's Sora to Generate 1080p HD Video

Google announced in a blog post yesterday (December 4) that it is opening up its latest generative AI video model, Veo, to enterprises in the form of a private preview on the Vertex AI platform to power the enterprise content creation process. Google initially publicly demonstrated the Veo model in May this year, three months later than OpenAI's Sora model, but Google released Veo early to seize the opportunity in the context of the increasingly heated competition in the field of AI video generation. 1AI cites a blog post that describes how Veo can be prompted by text or image...- 828

-

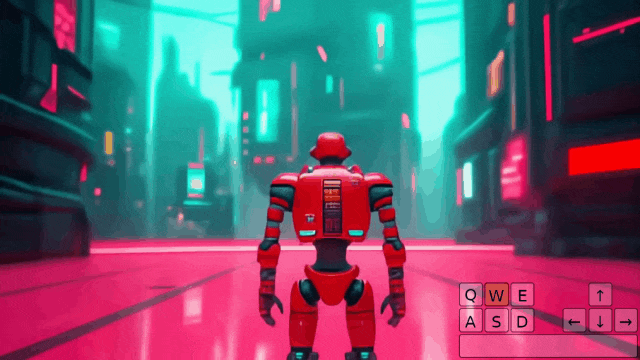

Google's DeepMind Launches Genie 2 Model to Generate Game Worlds Up to 1 Minute Long

December 5 - DeepMind, Google's artificial intelligence research organization, has released a new model called Genie 2, which can generate an "infinite" variety of playable 3D worlds from a single image and text description. An update to the Genie model introduced earlier this year, Genie 2 marks a major breakthrough for AI in virtual world generation. Genie 2 is capable of generating interactive 3D scenes in real time based on textual descriptions and images entered by the user. For example, type in "cute humanoid robot in the forest" and the model will...- 882

-

OpenAI poaches three senior engineers from Google DeepMind to focus on multimodal AI development

OpenAI announced today that it has hired three senior computer vision and machine learning engineers from competitor Google DeepMind: Lucas Beyer, Alexander Kolesnikov, and Xiaohua Zhai, according to a report by Wired. They will join OpenAI's newly opened office in Zurich, Switzerland, where they will focus on research and development in multimodal artificial intelligence (AI). OpenAI has a strong track record in the field of multimodal AI in recent years...- 1.1k
