-

Meta's first in-house AI training chip kicks off testing in bid to shed dependence on Nvidia, sources say

March 11 (Bloomberg) -- Social media company Meta, which owns Facebook, Instagram and WhatsApp, is testing its first in-house chip for training artificial intelligence systems, Reuters reported. According to two people familiar with the matter, the move marks a key step for Meta in reducing its reliance on outside vendors, such as NVIDIA, and moving toward designing more customized chips. Meta has begun deploying the chip on a small scale and plans to increase production for large-scale use if tests go well, the report said. Me...- 508

-

"Stargate" project launched: 64,000 NVIDIA GB200 chips to be installed in the first data center

March 7 (Bloomberg) - OpenAI and Oracle have announced that they will begin construction of a large data center in Abilene, Texas, and install tens of thousands of NVIDIA's high-performance AI chips over the coming months to bring the first facility of the "Stargate" infrastructure project into operation. The data center is expected to house 64,000 NVIDIA GB200 chips by the end of 2026, with the first 16,000 chips scheduled to be deployed this summer, according to people familiar with the matter. This ...- 1.3k

-

Amazon AWS AI Training Chip Trainium2 Instances Fully Available, Announces Next-Gen 3nm Trainium3

Amazon AWS today announced the general availability of Trn2 instances based on Trainium2, its in-house AI training chip, along with the launch of the Trn2 UltraServer large-scale AI training system and the announcement of the next-generation Trainium3 chip, built on a more advanced 3nm process. A single Trn2 instance consists of 16 Trainium2 chips connected by the high-bandwidth, low-latency NeuronLink interconnect, providing 20.8 petaflo...- 2.1k

-

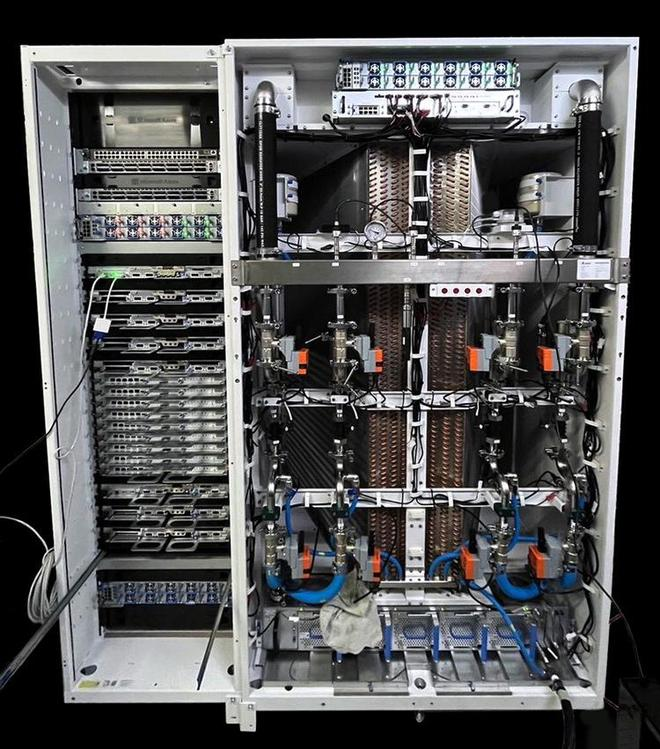

Amazon reportedly launches "moonshot" program: aims to deploy 100,000 second-generation in-house AI chips to reduce dependence on Nvidia

Bloomberg reported in a blog post yesterday (November 25) that Amazon, in order to reduce its dependence on Nvidia, has launched a "moonshot" plan to deploy 100,000 of its second-generation self-developed chips, improve data processing efficiency, and reduce the cost of AI chip procurement. 1AI, citing sources, reported that Amazon has set up an engineering lab in Austin, Texas, with a specialized team of engineers dedicated to developing AI chips. The sources said the lab's atmosphere is similar to a startup's, with engineers focusing on circuit board and cooling system improvements to optimize un...- 3.3k

-

Microsoft-backed Silicon Valley startup d-Matrix begins shipping its first AI chip

Silicon Valley startup d-Matrix on Tuesday announced the start of shipments of its first artificial intelligence chip, aimed at powering services such as chatbots and video generation. d-Matrix has raised more than $160 million (currently about 1.158 billion yuan) in funding so far, including an investment from Microsoft's venture capital arm. Early customers are testing sample chips and full shipments are expected next year, the company said. Santa Clara, California-based d-Matrix did not name specific customers, but said Super Micro Co.- 2.7k

-

NVIDIA asks SK Hynix to supply HBM4 chips 6 months ahead of schedule

Nov. 4 (Bloomberg) -- NVIDIA CEO Jensen Huang has asked SK Hynix to supply next-generation high-bandwidth memory chips, known as HBM4, six months ahead of schedule, SK Group Chairman Chey Tae-won said, according to a Reuters report today. SK Hynix plans to launch the first HBM4 products with 12-layer DRAM stacks in the second half of 2025, with 16-layer stacked HBM launching slightly later, in 2026. SK Hynix and TSMC signed a Memorandum of Understanding (MOU) in April this year, announcing that they will collaborate on HBM in...- 2.4k

-

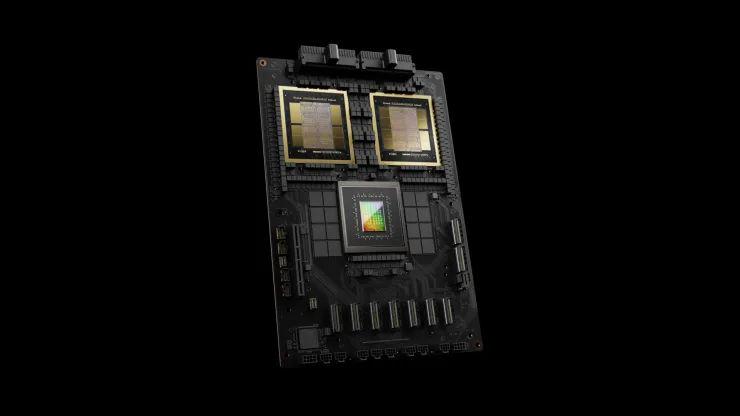

Microsoft says it has servers with NVIDIA's latest GB200 AI chip built in

Microsoft's official Azure X account posted last night to "show off" its new gear: the company has gotten its hands on an AI server powered by NVIDIA's GB200 superchip, making it the first of the world's cloud service providers to use the Blackwell architecture. In March, NVIDIA unveiled its most powerful AI acceleration card, the GB200, at the GTC 2024 developer conference, which utilizes the next-generation AI graphics processor architecture, Blackwell, etched using TSMC's 4-nanometer (4NP) process. bla... -

Foxconn plans to build world's largest NVIDIA GB200 AI chip manufacturing facility in Mexico

Foxconn Senior Vice President Benjamin Ting announced at the 2024 Hon Hai Technology Day that Foxconn plans to build the world's largest NVIDIA GB200 chip manufacturing plant in Mexico, although he did not disclose exactly where the facility will be built, Reuters reported today. Foxconn, currently a major supplier to Apple, is expanding its business to make other electronics. As demand soars for AI startups to train big models, which require a lot of computing power, Foxconn is looking to compete for new markets, and hitching a ride on NVIDIA's giant wheel is a natural first choice. This year ...- 3.1k

-

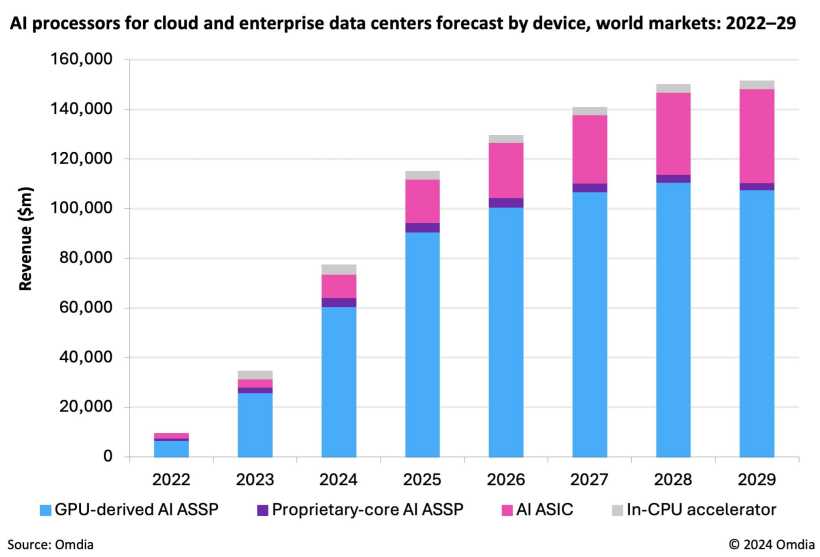

Omdia: AI data center chip market will reach $151 billion in 2029, with growth slowing after 2026

Research consulting firm Omdia said in an article published on the 1st of this month that market demand for AI data center chips will reach $151 billion (currently about 1.08 trillion yuan) in 2029, but growth will slow significantly after 2026. According to Omdia's "Cloud Computing and Data Center Artificial Intelligence Processor Forecast" report, the market for AI data center chips was less than $10 billion in 2022 and has grown to $78 billion this year (currently about 556.287 billion yuan)...- 9.1k

-

Tsinghua University's "Taichi-II" optical chip released: results published in Nature, first to create a fully forward intelligent optical computing training architecture

According to official news from Tsinghua University, the research team of Professor Fang Lu from the Department of Electronic Engineering and the research team of Academician Dai Qionghai from the Department of Automation have taken a different approach and pioneered a fully forward intelligent optical computing training architecture, developed the "Taichi-II" optical training chip, and achieved efficient and accurate training of large-scale neural networks in optical computing systems. The research results were published online in the journal Nature on the evening of August 7, Beijing time, under the title "Fully forward mode training for optical neural networks". The Department of Electronic Engineering of Tsinghua University is the first affiliation of the paper, Professor Fang Lu and Professor Dai Qionghai are the corresponding authors, and Xue Zhiwei, a doctoral student in the Department of Electronic Engineering of Tsinghua University...- 3.8k

-

The U.S. Department of Commerce is spending $400 million to boost chip production

The U.S. Department of Commerce has just announced a $400 million investment to support domestic semiconductor wafer production and advance U.S. technology. The investment is part of the CHIPS Act. This time, the recipient is GlobalWafers, which reached a non-binding preliminary memorandum of terms (PMT) with the Department of Commerce. …- 1.7k

-

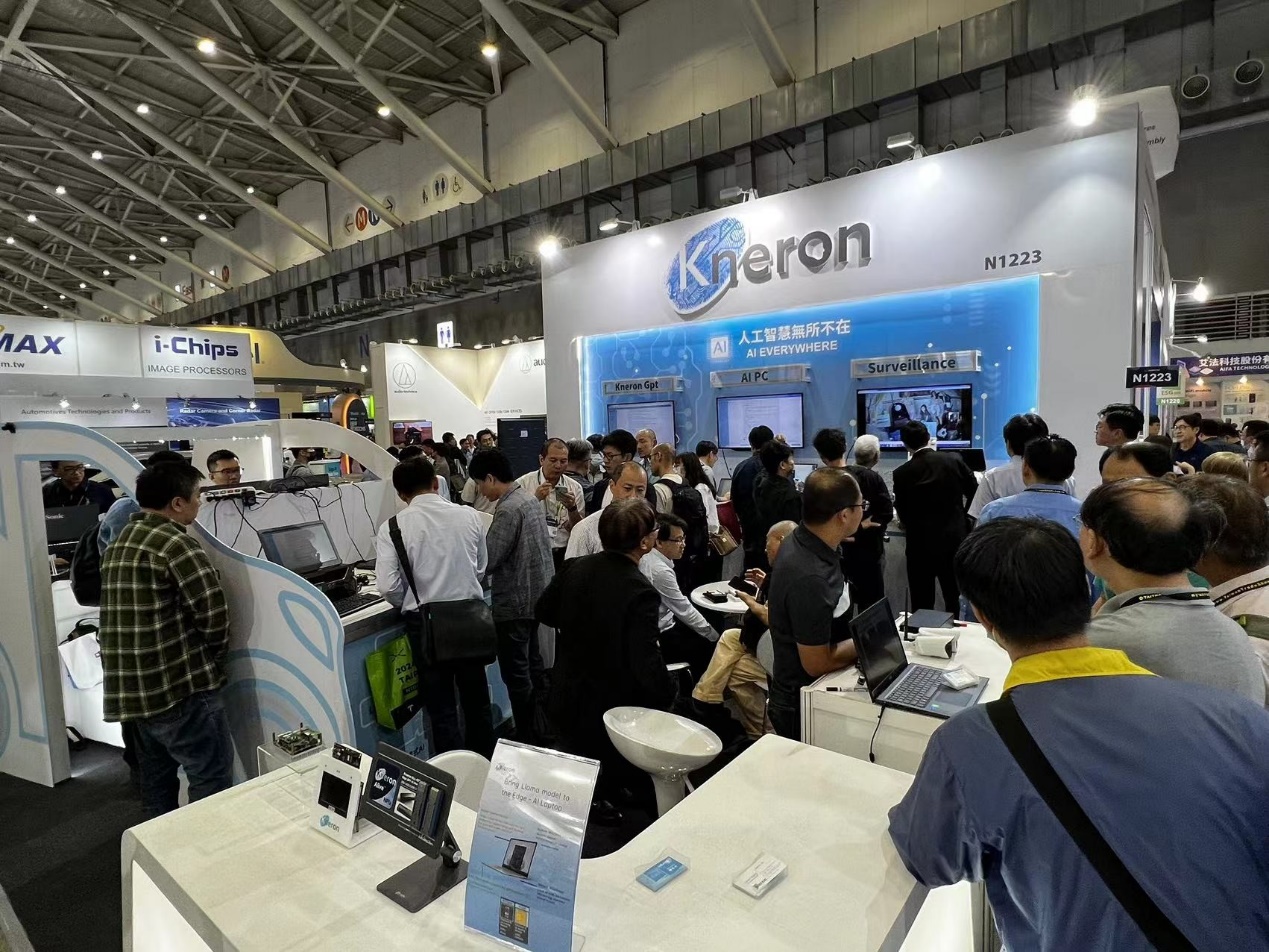

Challenging Nvidia and AMD, Kneron, a startup backed by Qualcomm and Foxconn, launches new NPU for notebooks

Kneron, an AI chip startup, launched its next-generation AI products, the KNEO 330 server and PC devices equipped with its third-generation NPU chip, the KL830, at Computex Taipei 2024 on Wednesday. The company, which provides edge computing artificial intelligence (edge AI) technology, was founded in 2015 by CEO Liu Juncheng and Zhang Maozhong. Its investors include well-known groups such as Li Ka-shing's Horizons Ventures, Qualcomm, Foxconn Group, Alibaba Entrepreneurs Fund, and China Development Capital. …- 3.7k

-

Arm predicts that by the end of 2025, 100 billion Arm devices will be ready for AI

British chip design company Arm Holdings predicts that by the end of 2025, there will be 100 billion Arm devices ready for artificial intelligence applications worldwide. Arm CEO Rene Haas announced the news at the Computex forum in Taipei. The news means that chips designed by Arm will help drive the development of artificial intelligence worldwide. Arm is a leading chip design company, and its products are widely used in...- 2.8k

-

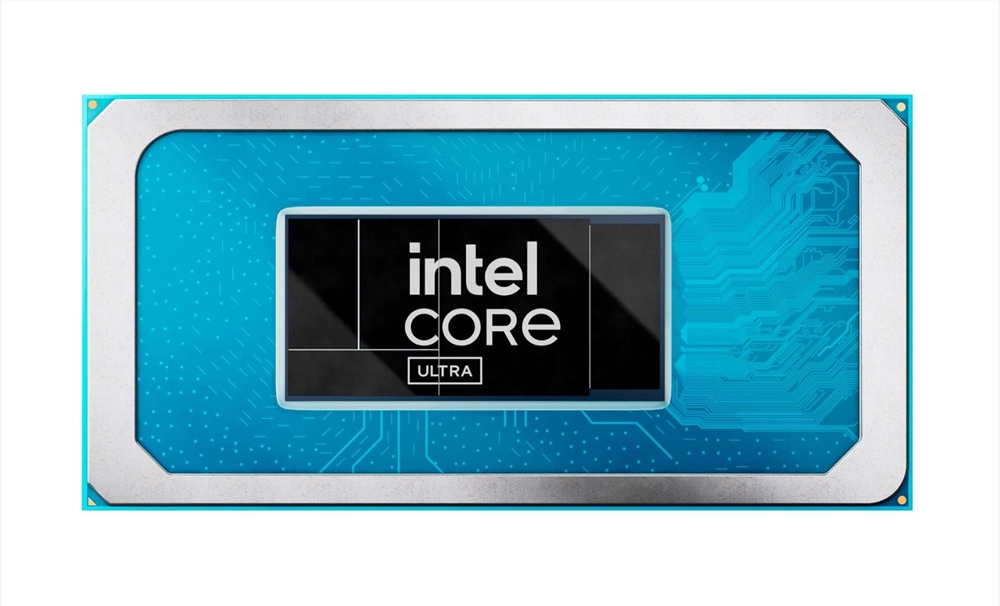

Intel enters AI PC market with Lunar Lake chips

Intel recently announced the release window for its new Lunar Lake laptop processors, which are expected to launch officially in the third quarter of this year. They are designed to bring a new AI experience to Copilot+ PCs. Intel's announcement coincides with Microsoft's Surface and AI event, where Microsoft adopted Qualcomm's Snapdragon X Elite and Plus chips to provide high performance and longer battery life. However, Intel claims that its Lunar Lake processors are more powerful than Stabl…- 2.3k

-

Amazon halts orders for Nvidia's Hopper chips as investors worry about demand disruption

Amazon's cloud computing division AWS has reportedly suspended orders for Nvidia's most advanced "superchip", Grace Hopper (GH200), in anticipation of a new, more powerful product, Grace Blackwell. The move comes as investors worry that Nvidia will see a demand slump between product cycles. In March, Nvidia released a new generation of processors called Blackwell, less than a year after its predecessor, Hopper, began shipping to customers. Nvidia CEO Jensen Huang said the new product is effective in training large language...- 2.7k

-

Beijing: Enterprises that purchase independently controllable GPU chips and provide intelligent computing services will receive support in proportion to their investment

On the 24th, the Beijing Municipal Bureau of Economy and Information Technology and the Beijing Municipal Communications Administration issued the "Beijing Computing Infrastructure Construction Implementation Plan (2024-2027)". The "Implementation Plan" proposes that by 2027, the quality and scale of computing power supply in Beijing, Tianjin, Hebei and Inner Mongolia will be optimized, independent and controllable computing power will meet the needs of large model training, and computing power energy consumption standards will reach the leading domestic level. Key tasks include promoting independent innovation in the computing power industry, building an efficient computing power supply system, promoting the integrated construction of computing power across Beijing, Tianjin, Hebei and Inner Mongolia, improving the green and low-carbon level of intelligent computing centers, deepening the application of computing power to empower industries, and ensuring computing power foundations...- 3.7k

-

LG promotes the commercialization of its self-developed DQ-C AI chip and plans to deploy it in 46 products

LG recently released a press release, showcasing its self-developed on-device AI chip DQ-C, which is planned to be deployed in 46 products in 8 categories. This DQ-C chip is mainly used in the internal system of home appliances, supporting AI control, driving LCD screens, recognizing voice, etc. The chip is produced by TSMC's 28nm process technology. LG spent three years in-depth research and development of the DQ-C chip, which was first released in July 2023 and used in LG washing machines, dryers, air conditioners and other 5…- 2.8k

-

Meta releases a new generation of AI training and inference chips, with three times the performance of the first generation chip

Meta Platforms released the latest version of its Meta Training and Inference Accelerator (MTIA) on the 10th local time. MTIA is a custom chip series designed by Meta specifically for AI workloads. The new generation of MTIA reportedly delivers significantly better performance than the first generation and helps strengthen content ranking and ad recommendation models. Its architecture fundamentally focuses on providing the right balance of computing, memory bandwidth and memory capacity. The chip can also help improve training efficiency and make inference (that is, actual reasoning tasks) more…- 2.3k

-

AI boom drives little-known chip gear company's stock price up 390%

One of the beneficiaries of the boom in demand for high-bandwidth memory driven by artificial intelligence is a Kyoto-based company that controls a small but crucial part of the chipmaking process. Towa has two-thirds of the global market for chip molding equipment, according to research firm TechInsights. Molding is a crucial step that encases chip dies and wires in resin, protecting them from dust, moisture and shock so they can be safely stacked on top of each other, giving graphics processors such as those from Nvidia Corp. more power to better train artificial intelligence. The… -

Second only to Meta, Musk revealed the number of Nvidia H100 chips stockpiled by Tesla

Elon Musk's Tesla and his mysterious AI-focused company xAI have stockpiled a large number of Nvidia H100 series chips. Tesla intends to use this to overcome the ultimate challenge of autonomous driving - Level 5 autonomous driving, while xAI is responsible for realizing Musk's vision of "ultimate truth artificial intelligence". X platform user "The Technology Brother" recently announced that Meta has stockpiled the world's largest number of H100 GPUs, reaching an astonishing 350,000. However, Musk is not interested in this...- 2.6k

-

Apple's M4 chip is expected to be released in the first quarter of next year with AI features

Well-known Bloomberg reporter Mark Gurman recently revealed that Apple is going all out to develop a new MacBook Pro with the M4 chip. At the same time, a striking roadmap released by Canalys shows that the much-anticipated M4 series chips are expected to be officially unveiled in the first quarter of 2025. This AI processor roadmap carefully drawn by Canalys not only reveals the launch time of the M4 series chips, but also points out that the series of chips will focus on AI functions. This means that the future MacBook Pro will usher in a major breakthrough in the field of artificial intelligence, which will bring…- 2.9k

-

OpenAI expresses strong interest in collaborating with South Korean chipmakers

OpenAI CEO Sam Altman said the company is interested in working with major Korean chipmakers such as Samsung Electronics and SK Hynix. Altman revealed that he visited South Korea twice in the past six months and had fruitful talks with Samsung and SK Hynix during his most recent visit. At a meeting at the company's headquarters in San Francisco, when asked by Korean reporters whether there were any plans for semiconductor cooperation with Korean chipmakers, Altman said he had visited South Korea in late February 2024 and met with executives from Samsung Electronics and SK Hynix...- 2.4k

-

Nvidia Blackwell "B100" GPU to feature 192GB HBM3e memory, B200 to feature 288GB

Nvidia will hold a GTC 2024 keynote tomorrow, and Jensen Huang is expected to announce the next-generation GPU architecture called Blackwell. According to XpeaGPU, the B100 GPU to be launched tomorrow will use two chips based on TSMC's CoWoS-L packaging technology. CoWoS (Chip-on-Wafer-on-Substrate) is an advanced 2.5D packaging technology that involves stacking chips together to increase processing power while saving space and reducing power consumption. XpeaGPU revealed that the two computing...- 6.1k

-

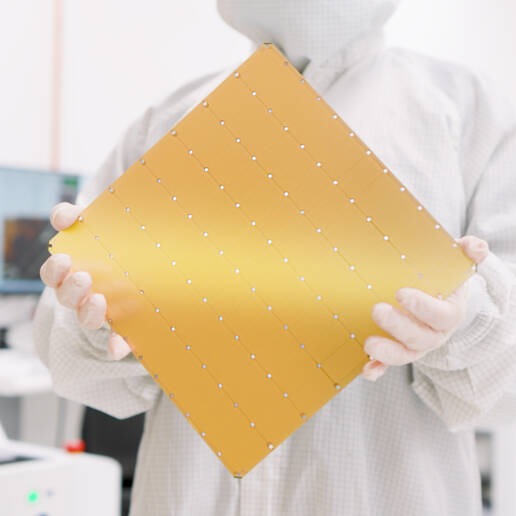

Cerebras launches third-generation wafer-scale chip WSE-3: TSMC 5nm process, double the performance

Wafer-scale chip company Cerebras has launched its third-generation chip, the WSE-3, claiming that it delivers double the performance of the previous-generation WSE-2 at the same power consumption. The specifications of the WSE-3 are as follows: TSMC 5nm process; 4 trillion transistors; 900,000 AI cores; 44GB on-chip SRAM cache; 1.5TB/12TB/1.2PB optional off-chip memory capacity; 125 PFLOPS peak AI computing power. Cerebras claims that based on WS…