-

Tsinghua team open-sources large model inference engine "Chitu" (赤兔), halving DeepSeek inference cost and doubling performance

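March 14: Professor Zhai Jidong's team at Tsinghua University's Institute of High Performance Computing and the Tsinghua-affiliated technology startup Qingcheng Jizhi (清程极智) jointly announced today that the large model inference engine "Chitu" is now open source. According to the announcement, the engine is the first to natively run FP8-precision models on GPUs other than NVIDIA's Hopper architecture and on a range of Chinese domestic chips, halving DeepSeek inference cost and doubling performance. It is positioned as a "production-grade large model inference engine" and offers the following features: Diverse compute support: it supports not only NVIDIA's latest flagship through…- 1.1k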

-

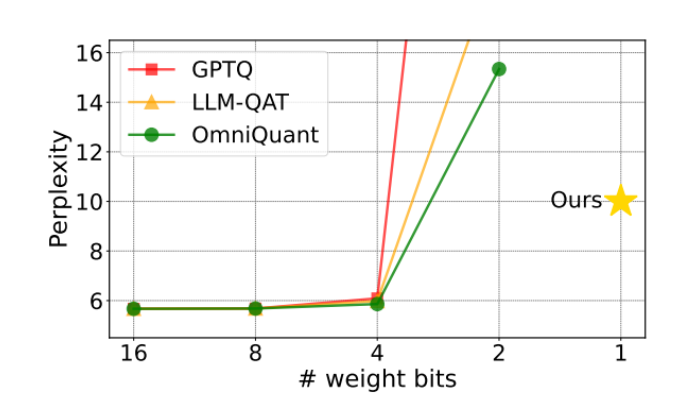

Tsinghua University and Harbin Institute of Technology propose the OneBit method: large models can be compressed to 1 bit while retaining 83% of their performance

Tsinghua University and Harbin Institute of Technology recently published a joint paper in which a large model is compressed to 1 bit while retaining 83% of its performance. The result marks a major breakthrough in model quantization. Quantization below 2 bits had long been an obstacle that researchers could not overcome, and this attempt at 1-bit quantization has attracted wide attention from the academic community in China and abroad. The OneBit method proposed in the study is the first attempt to compress a pre-trained large model to a true 1 bit. Through a new 1-bit layer structure, SVID-based parameter initialization and quantization…- 7.5k

-

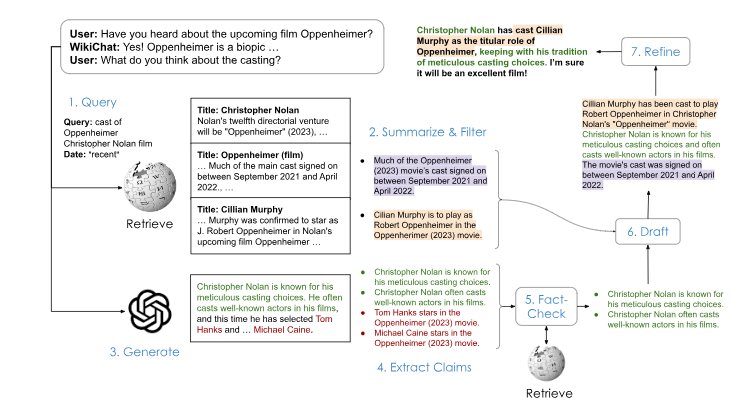

Tsinghua University and Zhejiang University launch open-source alternatives to GPT-4V! Open-source vision models such as LLaVA and CogAgent surge in popularity

A series of strong open-source vision models has recently emerged as open-source alternatives to GPT-4V, driven by top Chinese universities such as Tsinghua University and Zhejiang University. Among them, LLaVA, CogAgent, and BakLLaVA are three open-source vision-language models that have attracted considerable attention. LLaVA is a large multimodal model trained end-to-end that combines a visual encoder with Vicuna for general-purpose vision and language understanding, and it has impressive chat capabilities. CogAgent is an open-source vision-language model that improves on CogVLM, with 11 billion...- 6.3k