-

Freepik Releases Open-Source F Lite Model; Its 80-Million-Image Dataset Sets a New Benchmark for AI Image Generation

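April 30: Freepik, together with AI startup Fal.ai, has released F Lite, an open-source AI image model. What sets it apart is that the model was trained entirely on the company's own dataset of about 80 million commercially licensed, safe-for-work (SFW) images. This approach lets Freepik avoid common legal risks and helps ensure that the model's output is copyright-safe. According to the company, F Lite is a 10B-parameter diffusion model that delivers high-quality image generation. F Lite comes in two versions: a standard version (…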

-

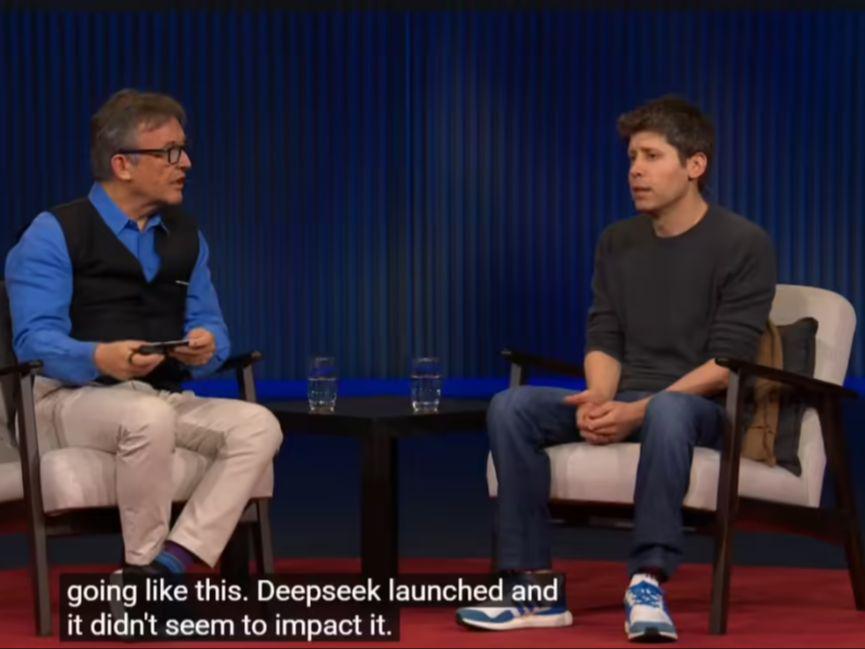

OpenAI CEO Altman: DeepSeek hasn't affected GPT growth, and OpenAI will release better open-source models

April 14: At the recently held TED 2025 conference, OpenAI CEO Sam Altman said that the emergence of DeepSeek has not affected GPT's growth and that better open-source models will be introduced. Market research firm Appfigures recently reported that ChatGPT led global non-game app downloads in March 2025. With 46 million downloads, it outpaced Instagram and TikTok to become the world's most downloaded app (counting only the Apple App Store...

-

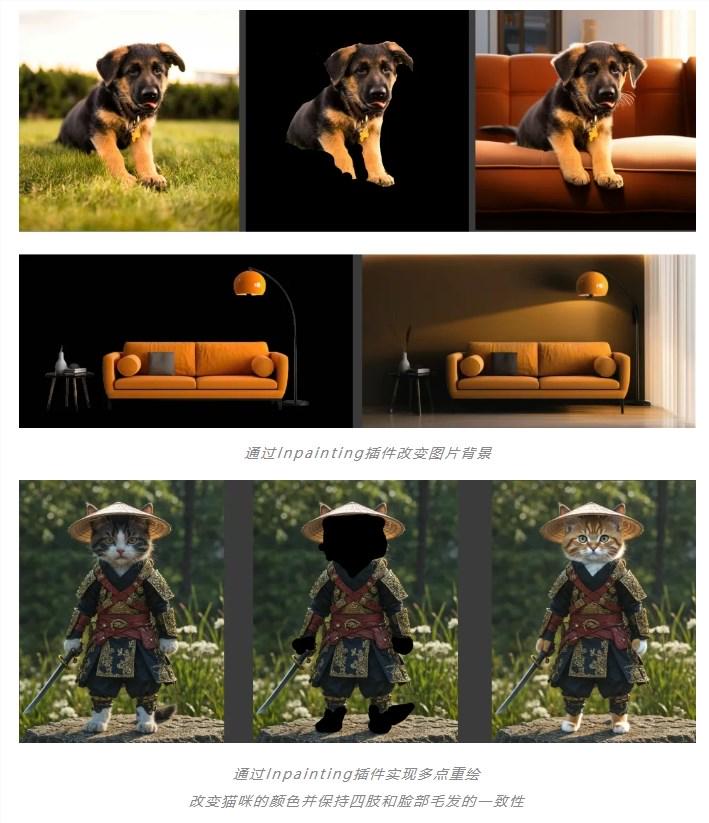

Precise image control! Tencent's open-source Hunyuan text-to-image model launches three ControlNet plug-ins

Tencent's HunyuanDiT team recently released three new controllable ControlNet plug-ins to the community, further expanding its ControlNet lineup:

- tile: high-definition upscaling
- inpainting: image restoration and outpainting
- lineart: generating images from line drawings

With these plug-ins, the HunyuanDiT model covers a wider range of application scenarios, including art, creative design, architecture, photography, beauty, and e-commerce. For 80% of cases and scenarios, they provide more accurate...

-

The OpenBuddy open-source LLM team releases a Chinese version of the Llama3.1-8B model

Meta recently released Llama3.1, a new generation of its open-source model series. It includes a 405B-parameter version whose performance approaches or even surpasses closed-source models such as GPT-4 on some benchmarks. Llama3.1-8B-Instruct is the 8B-parameter version in the series. It supports English, German, French, Italian, Portuguese, Spanish, Hindi, and Thai, has a context length of up to 131,072 tokens, and has an updated knowledge cutoff of December 2023. To enhance Llama3.1-8B-Instr…

-

Shocking the AI world! Llama 3.1 leaked: an open source behemoth with 405 billion parameters is coming!

Llama3.1 has leaked! That's right: this 405-billion-parameter open-source model has caused a stir on Reddit. It may be the open-source model that comes closest to GPT-4o so far, and it even surpasses GPT-4o in some respects. Llama3.1 is a large language model developed by Meta (formerly Facebook). Although it has not been officially released, the leaked version has caused a sensation in the community. The leak includes not only the base model but also benchmark results for the 8B, 70B, and 405B versions, the last being the largest. Performance comparison: Ll…

-

DeepSeek open-sources the DeepSeek-V2-Chat-0628 model, with improved code and mathematical reasoning capabilities

Recently, LMSYS released the latest update to its Chatbot Arena leaderboard. DeepSeek-V2-0628 ranked 11th overall, ahead of every other open-source model, including Llama3-70B, Qwen2-72B, Nemotron-4-340B, and Gemma2-27B. This placed it first on the global open-source model leaderboard. Compared with the open-source Chat version released on 0507, DeepSeek-V2-0628 shows improvements in code, mathematical reasoning, instruction following, role-playing, and more.

-

Kolors, an open-source AI image model with Chinese-language support, and a ComfyUI deployment guide

Amid the current wave of AI, Kuaishou's large image-generation model Kolors has become a standout of China's AI sector thanks to its strong performance and open-source approach. Kolors not only outperforms existing open-source models in image generation quality but also reaches a level comparable to commercial closed-source models, which quickly sparked lively discussion on social media.

The open-source road of Kolors: Open-sourcing Kolors is not only a technical milestone but also a reflection of Kuaishou's open attitude toward AI technology. At the World Artificial Intelligence Conference, Kuaishou announced that Kolors was officially open source, providing a package…

-

Alibaba Cloud: Tongyi Qianwen API daily call volume exceeds 100 million, and corporate users exceed 90,000

At the Alibaba Cloud AI Leaders Summit today, Alibaba Cloud Chief Technology Officer (CTO) Zhou Jingren shared some remarkable figures. Tongyi Qianwen's API now handles more than 100 million calls per day, its enterprise users number more than 90,000, and its open-source models have been downloaded more than 7 million times. Zhou Jingren also described Tongyi Qianwen's standing in the industry. He noted that, according to rigorous third-party evaluations, Tongyi Qianwen has steadily closed the gap with GPT-4 in performance and has become an industry leader. Particularly in Chinese-language knowledge question answering, Tongyi Qianwen has shown...

-

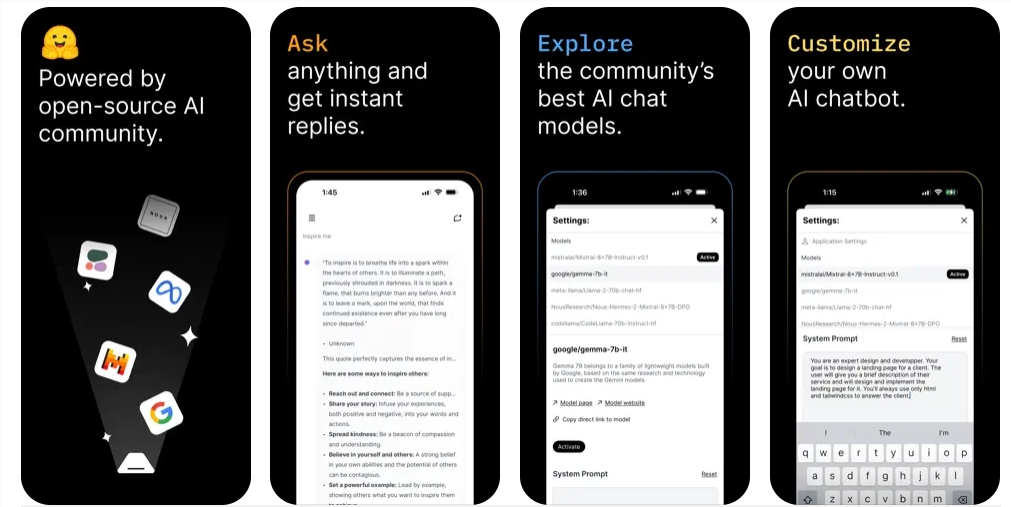

You can use open source models on your mobile phone! Hugging Face releases mobile app Hugging Chat

Hugging Face recently released "Hugging Chat", an iOS app that lets users access and use the various open-source models hosted on the Hugging Face platform from their mobile phones.

- Download: https://apps.apple.com/us/app/huggingchat/id6476778843
- Web version: https://huggingface.co/chat

Currently, the app provides six...

-

Replicate: Running open source machine learning models online

Replicate is a platform that simplifies running and deploying machine learning models. It lets users run models at scale in the cloud without needing a deep understanding of how machine learning works. On the site, you can try deployed open-source models directly, and developers can also use it to publish their own models.

Core features:

- Running models in the cloud: Replicate lets users run machine learning models with a few lines of code, either through its Python library or by querying the API directly.
- A large library of ready-made models: Replicate's community provides thousands of…

-

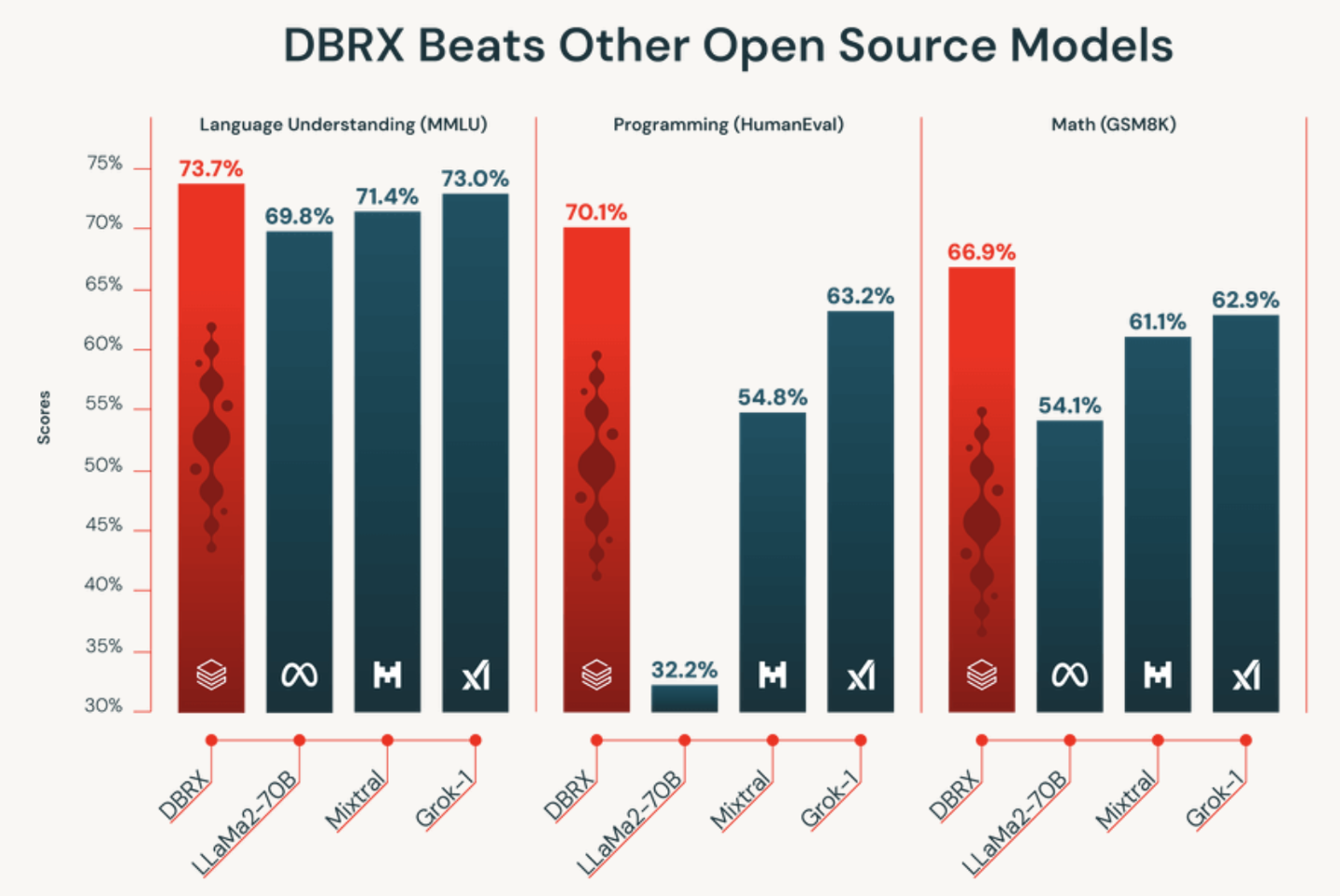

Databricks launches DBRX, a 132-billion-parameter large language model billed as "the most powerful open source AI at this stage"

Databricks recently launched DBRX, a general-purpose large language model. The company calls it "the most powerful open source AI currently available" and says it surpasses "all open source models on the market" across various benchmarks. According to the official press release, DBRX is a Transformer-based large language model that uses a Mixture-of-Experts (MoE) architecture. It has 132 billion parameters and was pre-trained on 12T tokens of source data. Researchers tested the model and found that, compared with the market...
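In a Mixture-of-Experts layer, a small router selects a few expert sub-networks for each token. As a result, only a fraction of the model's total parameters is used for any given token, which is also what "activated parameters" means for MoE models. The following is a minimal pure-Python sketch of top-k routing that illustrates the idea. It is not DBRX's implementation. The linear router, the toy scaling experts, the four-expert setup, and `top_k=2` are all illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Expert],
    top_k: int,
) -> Tuple[Vector, List[int]]:
    """Route one token vector x to its top_k experts and mix their outputs.

    Returns the mixed output and the indices of the experts that actually ran.
    """
    # Router: one dot-product score per expert (a hypothetical linear router).
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    # Keep only the top_k highest-scoring experts for this token.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalise the chosen experts' scores into mixing weights (gates).
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, chosen


def make_scaler(s: float) -> Expert:
    """Toy 'expert' that multiplies its input by s (stands in for a feed-forward block)."""
    return lambda v: [s * a for a in v]


experts = [make_scaler(s) for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [4.0, 0.0]]
out, chosen = moe_forward([1.0, 0.0], router, experts, top_k=2)
print(chosen)  # [3, 2]: only 2 of the 4 experts ran for this token
print(out)
```

The gates are renormalised over the chosen experts only. The remaining experts contribute nothing and are never evaluated. This is where an MoE model saves compute compared with a dense model of the same total size.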

-

Alibaba's Tongyi Qianwen open-sources the Qwen1.5-MoE-A2.7B model

The Tongyi Qianwen team has launched Qwen1.5-MoE-A2.7B, the first MoE model in the Qwen series. The model has only 2.7 billion activated parameters, yet its performance is comparable to that of current state-of-the-art 7-billion-parameter models. Qwen1.5-MoE-A2.7B has only 2 billion non-embedding parameters, about one-third as many as Qwen1.5-7B. It also reduces training cost by 75% relative to Qwen1.5-7B and increases inference speed…

-

Mini AI model TinyLlama released: high performance, only 637MB

After much anticipation, the TinyLlama project has released a notable open-source model. The project began last September, when developers set out to train a small model on trillions of tokens. After a lot of work and some setbacks, the TinyLlama team has now released the model. The model has 1 billion parameters and was trained for about three epochs, i.e., three passes through the training data. The final version of TinyLlama outperforms existing open-source language models of similar size, including Pythia-1.4B…
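Simple arithmetic helps put the headline's 637MB figure in context: raw weight storage is roughly the parameter count × bits per weight ÷ 8. This is a minimal sketch that makes three assumptions. It takes exactly 1 billion parameters (the article's figure), treats 1 MB as 10^6 bytes, and ignores file metadata. The helper names are hypothetical, and the conclusion about precision is an inference rather than something the article states.

```python
def weight_storage_bytes(num_params: int, bits_per_weight: float) -> float:
    """Approximate bytes needed for the raw weights (ignores metadata and file overhead)."""
    return num_params * bits_per_weight / 8


def implied_bits_per_weight(file_size_bytes: float, num_params: int) -> float:
    """Average bits per parameter implied by a given file size."""
    return file_size_bytes * 8 / num_params


PARAMS = 1_000_000_000  # "1 billion parameters", as stated in the article
FILE_SIZE = 637e6       # 637MB from the headline, assuming 1 MB = 10**6 bytes

# Storage needed at common precisions (assumed values, for comparison only).
for bits in (32, 16, 8, 4):
    gb = weight_storage_bytes(PARAMS, bits) / 1e9
    print(f"{bits:>2}-bit weights: ~{gb:.1f} GB")

print(f"637MB implies ~{implied_bits_per_weight(FILE_SIZE, PARAMS):.1f} bits per weight")
```

At 16 bits per weight, 1 billion parameters would already need about 2 GB. A 637MB download therefore suggests that the weights are stored at reduced (quantized) precision, averaging roughly 5 bits each. The article itself does not say which format was used.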