-

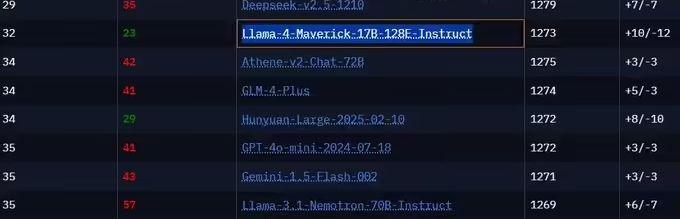

Meta's Open-Source Large Model Llama-4-Maverick Plummets in Benchmark Rankings After Accusations of Gaming the Leaderboard

April 14, 2025 - LMArena has updated its ranking of Llama-4-Maverick, Meta's newly released open-source large model, which has plummeted from 2nd place to 32nd. This confirms developers' suspicions that Meta had supplied LMArena with a "special edition" of the Llama 4 model in order to inflate its ranking. On April 6, Meta released its newest large model, Llama 4, in three versions: Scout, Maverick, and Behemoth. The ...

-

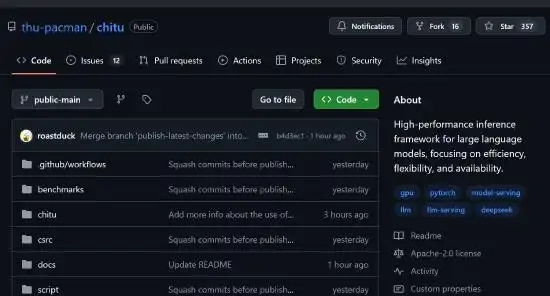

Tsinghua team open-sources large-model inference engine "Chitu", halving the cost and doubling the performance of DeepSeek inference

March 14, 2025 - Professor Zhai Jidong's team at Tsinghua University's Institute of High Performance Computing (IHPC) and the Tsinghua-affiliated startup Qingcheng Jizhi jointly announced today that their large-model inference engine "Chitu" is now open source. According to the announcement, it is the first engine to run FP8-precision models natively on GPUs other than NVIDIA's Hopper architecture and on a range of domestic Chinese chips, halving the cost and doubling the performance of DeepSeek inference. Positioned as a "production-grade large model inference engine", it offers the following features: Broad compute-platform support: it not only supports NVIDIA's latest flagships to ...
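The excerpt does not explain how Chitu runs FP8 models on GPUs that lack native FP8 arithmetic. One widely used approach, assumed here and not confirmed as Chitu's, is to keep weights stored in 8-bit form and widen them to a higher-precision format just before computation. The minimal Python sketch below decodes the E4M3 variant of FP8 (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits; the format DeepSeek-V3 reports using) and applies a single per-tensor scale. The function names are hypothetical, and real FP8 checkpoints such as DeepSeek's use finer-grained block scales.

```python
import math


def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 byte (the "E4M3FN" variant) into a Python float.

    Layout: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits.
    This variant has no infinities; exponent 0b1111 with mantissa 0b111 is NaN.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a single byte (0..255)")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent 1 - bias = -6.
        return sign * (mantissa / 8.0) * 2.0 ** -6
    # Normal: implicit leading 1, unbiased exponent = exponent - 7.
    return sign * (1.0 + mantissa / 8.0) * 2.0 ** (exponent - 7)


def dequantize(fp8_bytes: bytes, scale: float) -> list[float]:
    """Widen FP8 weights to full floats and apply a per-tensor scale.

    On hardware without FP8 math units, a step like this runs before the
    matrix multiply, which then executes in a wider format (e.g. BF16/FP32).
    """
    return [decode_e4m3(b) * scale for b in fp8_bytes]


print(dequantize(bytes([0x38, 0xB8, 0x7E]), 0.5))  # [0.5, -0.5, 224.0]
```

The three bytes encode 1.0, -1.0 and 448 (the largest finite E4M3 value). Keeping weights stored in 8 bits halves their memory footprint relative to 16-bit formats, provided the widening happens on the fly inside the compute kernel rather than for a whole tensor at once as this toy does.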

-

Qwen-derived models exceed 100,000, topping the world's open-source model rankings

February 25: According to a daily report, data from Hugging Face, the world's largest open-source AI community, shows that the number of derivative models built on Alibaba's open-source Tongyi Qianwen (Qwen) models now exceeds 100,000, keeping Qwen ahead of Llama from the U.S. and other open-source models and firmly at the top of the world's open-source model rankings. Since August 2023, Alibaba Cloud has open-sourced four generations of models, Qwen, Qwen1.5, Qwen2 and Qwen2.5, in sizes including 0.5B, 1.5B, 3B, 7B, 1...

-

Yuanxiang (XVERSE) Releases China's Largest Open-Source MoE Large Model: 255B Total Parameters, 36B Active Parameters

XVERSE has released XVERSE-MoE-A36B, the largest open-source MoE model in China. The model has 255B total parameters and 36B active parameters. The company claims it delivers a "cross-tier" leap in performance, "roughly reaching" or exceeding the level of 100B-class large models, while reducing training time by 30%, improving inference performance by 100%, and greatly lowering the cost per token. MoE (Mixture of Experts) is a model architecture that combines multiple specialized expert sub-models into a single larger model, expanding the model size...
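The one-line definition of MoE above leaves out the key mechanism: a router selects a few experts for each token, so only a fraction of the weights do work on any step. That is how a model can have 255B total parameters but only 36B active ones. The minimal sketch below shows top-k routing for a single token in plain Python; the linear router, the softmax renormalization over the chosen experts, and all names are illustrative assumptions, not XVERSE's published design.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    experts: Sequence[Callable[[Vector], Vector]],
    router: Sequence[Vector],
    k: int,
) -> Tuple[Vector, List[int]]:
    """Toy top-k MoE layer for one token (hypothetical illustration).

    router[i] is a linear gate for expert i: its score is dot(router[i], x).
    Only the k highest-scoring experts are evaluated; their outputs are mixed
    with softmax weights renormalized over just those k experts.
    """
    scores = [sum(w * xi for w, xi in zip(gate, x)) for gate in router]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)  # unchosen experts are never called for this token
        out = [o + weight * yj for o, yj in zip(out, y)]
    return out, chosen


# Usage: four toy "experts" that just scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[0.0, 0.0], [3.0, 0.0], [1.0, 0.0], [2.0, 0.0]]
out, chosen = moe_forward([1.0, 0.0], experts, router, k=2)
print(chosen)  # [1, 3]
```

The active-parameter count is not simply k divided by the number of experts, because attention layers, embeddings and any shared experts run for every token. For XVERSE-MoE-A36B, the reported 36B out of 255B works out to roughly 14% of the weights per token.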

-

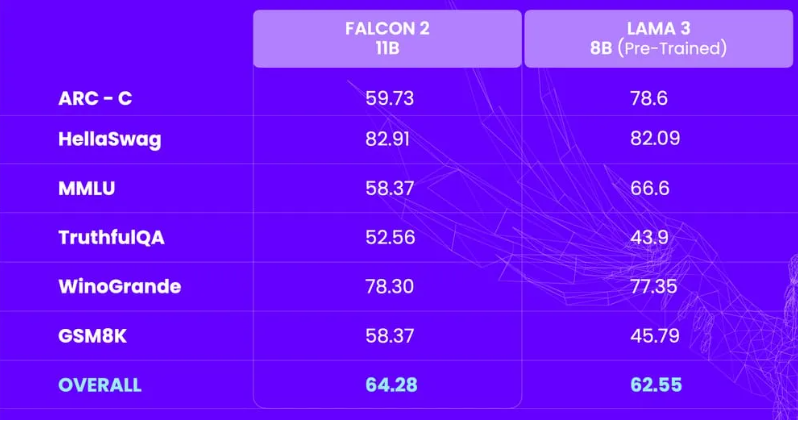

A performance powerhouse that surpasses Llama 3: the open-source Falcon 2 large model sets a new benchmark for commercial AI

A new force is rising in the field of AI. The Technology Innovation Institute (TII) in Abu Dhabi has open-sourced its new large model Falcon 2, an 11-billion-parameter model that has drawn global attention for its strong performance and multilingual capabilities. Falcon 2 comes in two versions: a base version that is easy to deploy and can generate text, code and summaries, and a vision-language model (VLM) that can convert image content into text, a capability that is rare among open-source large models. In several authoritative benchmark rankings, Falcon 2 11B surpassed...

-

SemiKong, the world's first open-source large model for chip design, is officially released, outperforming general-purpose large models

SemiKong, the world's first open-source large model for chip design, has been officially released. Fine-tuned from Llama3, it outperforms general-purpose large models. This is not only a technological breakthrough but also a win for the open-source spirit, and it signals that the $500 billion semiconductor industry will undergo sweeping changes over the next five years. SemiKong marks a solid step forward in applying AI to chip design. Jointly developed by Aitomatic and FPT Software, it debuted at the SEMICON West 2024 conference, where it attracted industry attention...

-

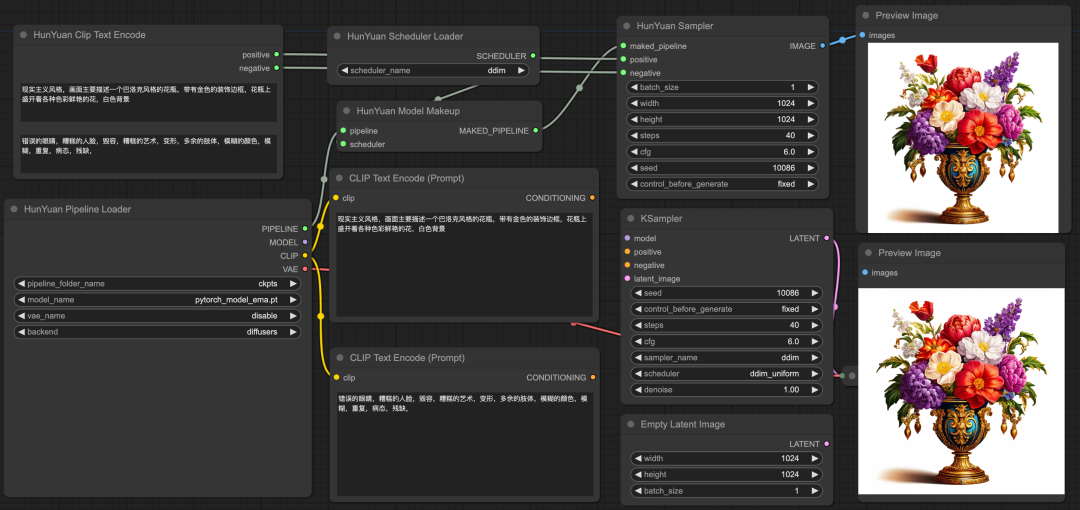

Tencent releases acceleration library for its Hunyuan open-source text-to-image model: image generation time cut by 75%

Tencent has released an acceleration library for its open-source Hunyuan text-to-image model (Hunyuan DiT), claiming significantly higher inference efficiency and a 75% reduction in image generation time. According to Tencent, the barrier to using Hunyuan DiT has also been greatly lowered: users can access its text-to-image capabilities through the ComfyUI graphical interface. In addition, Hunyuan DiT has been integrated into Hugging Face Diffusers, the general-purpose model library, so users can call the model with just three lines of code without downloading the original code repository. Prior to this, Tencent…
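The paragraph above says Hunyuan DiT can be called through Hugging Face Diffusers in three lines of code. A sketch of that call using the `HunyuanDiTPipeline` class from the Diffusers documentation follows; the checkpoint ID `Tencent-Hunyuan/HunyuanDiT-Diffusers` is the one shown in those docs, while the `generate_image` wrapper and the output filename are hypothetical. It assumes `torch` and a recent `diffusers` release are installed, a CUDA GPU is available, and the weights can be downloaded on first use.

```python
# Checkpoint ID as listed in the Diffusers documentation for Hunyuan DiT.
MODEL_ID = "Tencent-Hunyuan/HunyuanDiT-Diffusers"


def generate_image(prompt: str, output_path: str = "hunyuan_dit.png") -> str:
    """Generate one image from a text prompt with Hunyuan DiT (hypothetical wrapper).

    Requires torch, a recent diffusers release, a CUDA GPU, and network access
    to download the weights the first time it runs.
    """
    # Imported lazily so this module can be loaded without the GPU stack.
    import torch
    from diffusers import HunyuanDiTPipeline

    # The "three lines": load the pipeline, move it to the GPU, run it.
    pipe = HunyuanDiTPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    pipe.to("cuda")
    image = pipe(prompt).images[0]  # a PIL image with the default output type

    image.save(output_path)
    return output_path
```

Calling `generate_image("An astronaut riding a horse")` would write the result to `hunyuan_dit.png`. Loading in `float16` halves GPU memory use compared with full precision, which is the usual choice for inference on a single card.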

-

Mianbi Intelligence Releases Eurux-8x22B Open-Source Large Model: Code Performance Exceeds Llama3-70B

Mianbi Intelligence has released Eurux-8x22B, an open-source large model family focused on reasoning, comprising Eurux-8x22B-NCA and Eurux-8x22B-KTO. In official tests, Eurux-8x22B outperformed Llama3-70B on LeetCode (180 LeetCode programming problems) and TheoremQA (IT Home note: STEM questions at the level of American universities), and outperformed the closed-source GPT-3.5-Turbo on LeetCode...

-

Baidu Smart Cloud announces training and inference support for the full Llama3 series

On April 19, Baidu Smart Cloud's Qianfan Large Model Platform launched China's first training and inference solution covering the full Llama3 series, making it easier for developers to retrain the models and build their own custom large models. The solution is now open for invitation-only testing. ModelBuilder, the Qianfan platform's tool for customizing models of various sizes, comes preloaded with a total of 79 mainstream Chinese and international third-party models, which the company says makes it the development platform with the largest number of large models in China. Meta officially released Llama3 on April 18, including 8B …

-

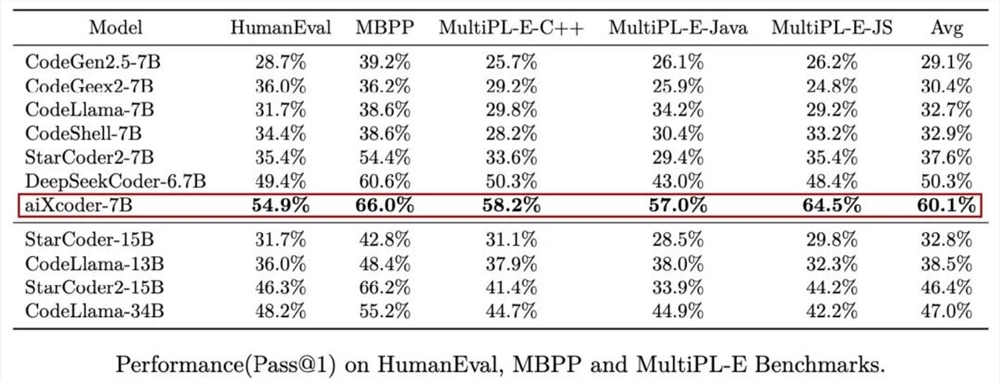

7B beats 10B-class models: Peking University open-sources aiXcoder-7B, billed as the strongest code model and the best choice for enterprise deployment

Integrating large language models into programming to generate and complete code has become an important trend. A number of notable large code models have emerged in the industry, such as OpenAI's Codex, Google DeepMind's AlphaCode and Hugging Face's StarCoder, which help programmers finish coding tasks faster, more accurately and with higher quality, greatly improving efficiency. One research and development team began exploring the use of deep learning in software development 10 years ago, and in the fields of code understanding and code generation...

-

Meta is about to launch Llama3, its next-generation large language model

According to foreign media reports, Meta Platforms Inc. plans to launch two small versions of its Llama3 large language model (LLM) next week as a precursor to the largest version of Llama3, due in the summer of 2024. The top version of Llama3 could reportedly have more than 140 billion parameters, which would put its performance on track to catch up with OpenAI's latest GPT-4 Turbo. However, the versions to be announced next week will not support multimodality. The news is likely to build strong anticipation for Llama3. Last July, Ll...

-

Open-source large model DBRX: 132 billion parameters, twice as fast as Llama2-70B

Big data company Databricks has created a buzz in the open-source community with its recent release of DBRX, an MoE large model that beat open-source models such as Grok-1 and Mixtral in benchmarks to become the new open-source leader. The model has 132 billion total parameters, but only 36 billion are active on any input, and it generates text up to twice as fast as Llama2-70B. DBRX consists of 16 experts, 4 of which are active per inference, and has a context length of 32K tokens. To train DBRX, Data...
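The expert layout given above (16 experts, 4 active per token) can be compared with the 8-experts/top-2 layout used by Mixtral and Grok-1 by counting how many distinct expert subsets a top-k router can choose from. A quick calculation in Python; the helper name is illustrative.

```python
from math import comb


def expert_combinations(num_experts: int, active: int) -> int:
    """Number of distinct expert subsets a top-k router can select per token."""
    return comb(num_experts, active)


dbrx = expert_combinations(16, 4)     # DBRX: 16 experts, 4 active
mixtral = expert_combinations(8, 2)   # Mixtral / Grok-1 style: 8 experts, 2 active
print(dbrx, mixtral, dbrx // mixtral)  # 1820 28 65
print(f"active share: {36 / 132:.1%}")  # 36B of 132B parameters -> 27.3%
```

Using more, smaller experts gives the router 65 times as many expert combinations to choose from, while roughly 27% of DBRX's weights are used for any given input.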

-

Kai-Fu Lee's AI company 01.AI open-sources the Yi-9B model, claiming the strongest code and math capabilities in its series

The official WeChat account of 01.AI announced the open-source release of the Yi-9B model tonight, calling it the "science champion" of the Yi series. Yi-9B currently has the strongest code and mathematical capabilities of any model in the Yi series, with an actual parameter count of 8.8B and a default context length of 4K tokens. It builds on Yi-6B (trained on 3.1T tokens) with 0.8T tokens of further training, and its training data extends to June 2023. According to the introduction,…

-

The largest in China! Alibaba CEO Wu Yongming: a 72-billion-parameter large model will be open-sourced soon

November 10: At the Internet Entrepreneurs Forum of the 2023 World Internet Conference Wuzhen Summit, held yesterday, Alibaba Group CEO Wu Yongming said in a speech that Alibaba is about to open-source a 72-billion-parameter large model, which will be the open-source large model with the largest parameter count in China. This is not the first time Alibaba has open-sourced a large model. In August of this year, Alibaba released two open-source models, Qwen-7B and Qwen-7B-Chat, the 7-billion-parameter general-purpose and chat versions of Tongyi Qianwen. In September of this year, Alibaba also open-sourced the Tongyi Qianwen 14...