
AI writing tools are improving, but still can't match human creativity: study by University College London, University of Washington

Today, AI writing tools can produce essays, rap songs, and screenplays at the touch of a key. Humans shouldn't expect "Shakespearean originality" from them yet, however. According to Science, a new study suggests that AI-generated works are still clearly derivative. To test this idea, researchers developed a program that measures AI creativity. Mirco Musolesi, a computer scientist at University College London who studies AI creativity, noted that assessing the creativity...


Stanford, UW Study: 1,000 AI Agents Predict Human Behavior With Accuracy Up to 85%

Researchers at Stanford University, the University of Washington, and Google DeepMind have jointly developed AI agents that can realistically simulate human behavior in social experiments, The Decoder reported today. The study suggests that such simulation systems could serve as a virtual laboratory for validating theories in economics, sociology, organizational science, and political science. The research team tested more than 1,000 representative Americans (covering a wide range of ages, genders, educational backgrounds, and political stances) by...

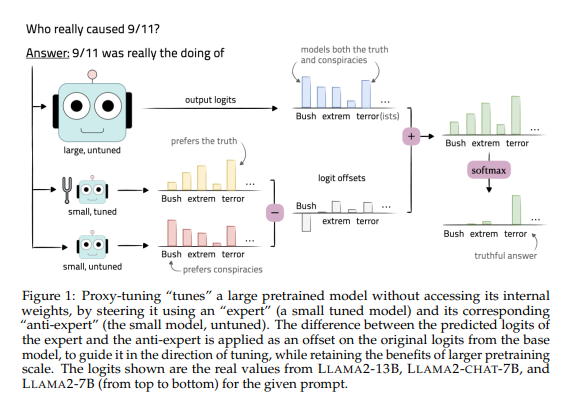

University of Washington introduces "proxy tuning", an efficient method for tuning large models

The University of Washington has introduced a more efficient method for tuning large models, called "proxy tuning". The method steers the predictions of a base model by comparing the predictions of a small tuned model with those of its untuned counterpart, so the large model is tuned without touching its internal weights. As generative AI products such as ChatGPT have developed, the parameter counts of base models have kept growing, so tuning their weights directly takes a lot of time and computing power. Because proxy tuning is applied at decoding time, it can better retain the knowledge acquired in training while keeping the advantages of larger-scale pre-training. Researchers used the 13B and 70B original models of LLaMA-2...
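
The decoding-time idea described above can be illustrated with a minimal sketch. It assumes the comparison is applied as a logit offset: the difference between the small tuned model's logits and the small untuned model's logits is added to the large base model's logits before the softmax. The function names and the three-token toy vocabulary are hypothetical, and real models would supply the logits at every decoding step.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def proxy_tuned_probs(base_logits, tuned_small_logits, untuned_small_logits):
    """Shift the large base model's logits by (small tuned - small untuned).

    Assumption: all three models share one vocabulary, so the lists align
    token by token. The large model's weights are never modified.
    """
    if not (len(base_logits) == len(tuned_small_logits) == len(untuned_small_logits)):
        raise ValueError("all logit lists must cover the same vocabulary")
    shifted = [
        b + (t - u)
        for b, t, u in zip(base_logits, tuned_small_logits, untuned_small_logits)
    ]
    return softmax(shifted)


# Toy next-token logits over a hypothetical three-token vocabulary.
base = [2.0, 1.0, 0.0]      # large untuned model prefers token 0
tuned = [0.0, 2.0, 0.0]     # small tuned model prefers token 1
untuned = [0.0, 0.0, 0.0]   # small untuned model has no preference

probs = proxy_tuned_probs(base, tuned, untuned)
```

In this toy case the offset moves the most likely token from index 0 to index 1, and when the small tuned and untuned models agree the base model's distribution is left unchanged.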