-

Yuanxiang Releases China's Largest Open-Source MoE Model: 255B Total Parameters, 36B Activated Parameters

XVERSE released XVERSE-MoE-A36B, which it describes as the largest open-source MoE model in China. The model has 255B total parameters, of which 36B are activated. The company claims that its performance "roughly reaches" a "cross-level" leap beyond 100B-parameter models, while training time is reduced by 30%, inference performance is improved by 100%, and the cost per token is greatly reduced. MoE (Mixture of Experts) is an architecture that combines multiple expert sub-models into a single larger model, expanding the model size...
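
The summary does not describe how XVERSE routes tokens to experts, so the following is only a generic toy sketch of top-k sparse routing, assuming softmax gating and top-2 selection. The class `ToyMoE` and its parameters are hypothetical. It illustrates why an MoE model's total parameter count can be far larger than the parameters activated for any single token.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def matvec(w, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class ToyMoE:
    """Hypothetical toy MoE layer: one linear gate plus num_experts linear experts."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Gate maps a dim-sized input to one score per expert.
        self.gate = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each expert is a dim x dim linear map.
        self.experts = [
            [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def forward(self, x):
        scores = softmax(matvec(self.gate, x))
        # Only the top_k highest-scoring experts run (sparse activation).
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        norm = sum(scores[i] for i in chosen)
        out = [0.0] * len(x)
        for i in chosen:
            y = matvec(self.experts[i], x)
            weight = scores[i] / norm  # renormalize gate weights over chosen experts
            out = [o + weight * yi for o, yi in zip(out, y)]
        return out, chosen

    def param_counts(self):
        per_expert = len(self.experts[0]) * len(self.experts[0][0])
        gate = len(self.gate) * len(self.gate[0])
        total = gate + per_expert * len(self.experts)
        active = gate + per_expert * self.top_k  # parameters touched per token
        return total, active


if __name__ == "__main__":
    moe = ToyMoE(dim=8, num_experts=16, top_k=2)
    print(moe.param_counts())  # (1152, 256)
```

With 16 experts and top-2 routing, each token touches roughly a fifth of the layer's weights; the same principle, at far larger scale, underlies the 255B-total versus 36B-activated figures.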

-

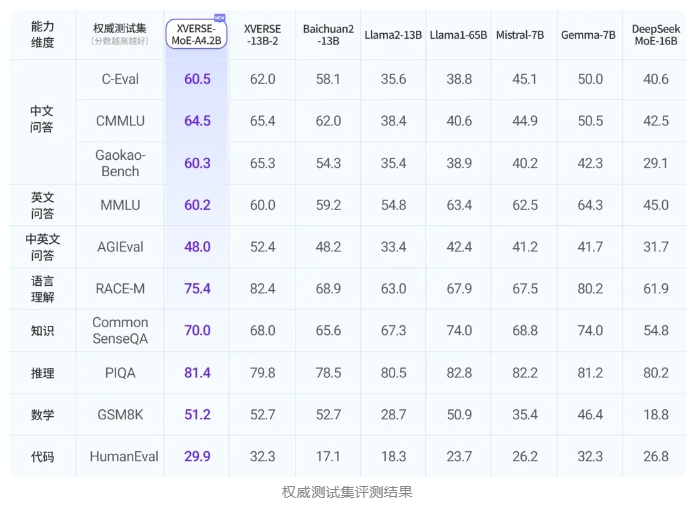

Yuanxiang Releases XVERSE-MoE-A4.2B Large Model, Free for Commercial Use

Yuanxiang released the XVERSE-MoE-A4.2B large model, which uses a mixture-of-experts (MoE) architecture with 4.2B activated parameters and, according to the company, performance comparable to a 13B model. The model is fully open source and free for commercial use, so small and medium-sized enterprises, researchers, and developers can deploy it at low cost. The company highlights two advantages: extreme compression and strong performance. The model uses sparse activation, and its results are said to exceed those of many leading models in the industry and approach those of much larger models. Yuanxiang's MoE technology was developed in-house and includes efficient fused operators, a fine-grained expert design, and load-balancing loss terms. Finally, the architecture design corresponding to Experiment 4 was adopted...
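
The summary mentions load-balancing loss terms without giving a formula. One widely used form is the Switch Transformer auxiliary loss, alpha * N * sum_i(f_i * P_i), where f_i is the fraction of routed slots sent to expert i and P_i is the mean router probability for expert i. The sketch below implements that standard form as an assumption; it is not XVERSE's actual loss, and the extension to top-k (counting every routed slot) is also an assumption.

```python
def load_balancing_loss(router_probs, top_k=1, alpha=0.01):
    """Switch-Transformer-style auxiliary loss (assumed form, not XVERSE's).

    router_probs: one list of per-expert probabilities per token.
    The loss is smallest when tokens and probability mass are spread evenly.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    counts = [0] * num_experts
    prob_sums = [0.0] * num_experts
    for probs in router_probs:
        # Count which experts each token is actually dispatched to.
        chosen = sorted(range(num_experts), key=lambda i: probs[i], reverse=True)[:top_k]
        for i in chosen:
            counts[i] += 1
        # Accumulate the router's soft probabilities for every expert.
        for i, p in enumerate(probs):
            prob_sums[i] += p
    f = [c / (num_tokens * top_k) for c in counts]  # dispatch fractions
    p_mean = [s / num_tokens for s in prob_sums]     # mean router probabilities
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p_mean))


if __name__ == "__main__":
    balanced = [[0.7 if i == j else 0.1 for i in range(4)] for j in range(4)]
    collapsed = [[0.7, 0.1, 0.1, 0.1] for _ in range(4)]
    # Routing every token to expert 0 is penalized more than an even spread.
    print(load_balancing_loss(balanced, alpha=1.0), load_balancing_loss(collapsed, alpha=1.0))
```

Adding this term to the training loss discourages the router from collapsing onto a few experts, which matters more as experts become finer-grained.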

-

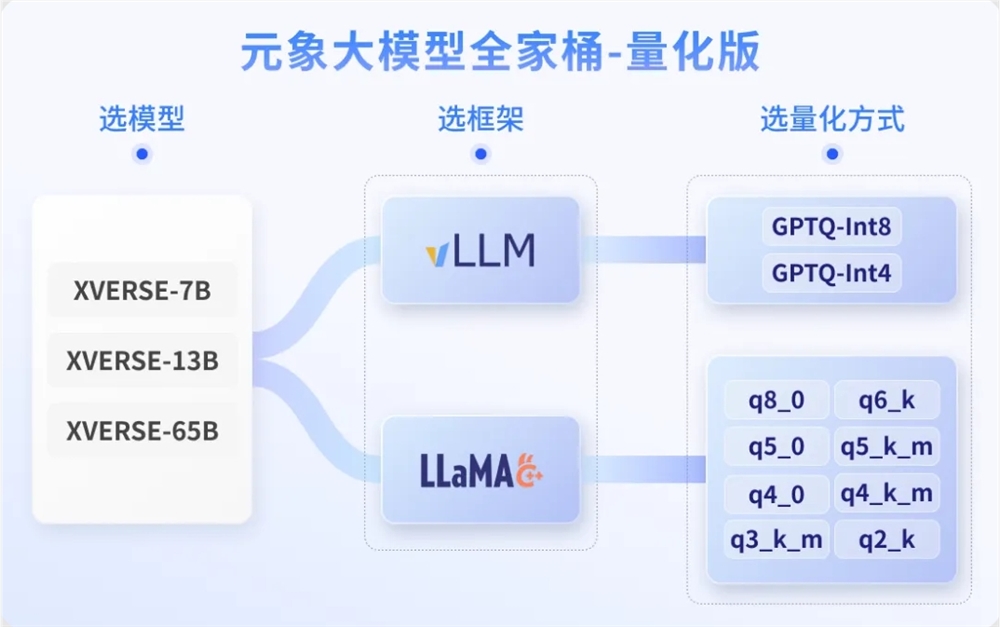

Yuanxiang's Open-Source Models Come in 30 Quantized Versions for Lower-Cost Deployment

Yuanxiang's large models are available in 30 open-source quantized versions that support quantized inference in mainstream frameworks such as vLLM and llama.cpp, and they are free for commercial use without conditions. The company evaluated model capability and inference performance before and after quantization. Taking the XVERSE-13B-GPTQ-Int4 quantized version as an example, the model weights are compressed by 72% after quantization and total throughput increases by 1.5 times, while 95% of the model's capability is retained. Developers can choose versions for different inference frameworks and data precisions based on their skills, software and hardware configurations, and specific needs. If local resources are limited, you can directly call the Yuanxiang large model…
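
To show where a figure like "72% smaller" comes from, here is a sketch of group-wise int4 weight storage and the size arithmetic. Note that GPTQ itself adds second-order error compensation; this sketch swaps in plain symmetric round-to-nearest quantization for simplicity. The group size of 128, fp16 scales, and all function names are assumptions for illustration, not XVERSE's published configuration.

```python
def quantize_group_int4(weights):
    """Symmetric round-to-nearest int4 for one group (not GPTQ's algorithm)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # map the largest weight to +/-7
    q = [max(-8, min(7, round(w / scale))) for w in weights]  # int4 range [-8, 7]
    return q, scale


def dequantize_group(q, scale):
    return [qi * scale for qi in q]


def compressed_fraction(group_size=128, weight_bits=4, scale_bits=16,
                        zero_bits=0, base_bits=16, unquantized_share=0.0):
    """Quantized size as a fraction of the fp16 size (all defaults are assumptions).

    Per-group scale/zero-point bits are amortized over group_size weights;
    unquantized_share is the fraction of parameters kept at base_bits.
    """
    quantized_bits = weight_bits + (scale_bits + zero_bits) / group_size
    avg_bits = unquantized_share * base_bits + (1 - unquantized_share) * quantized_bits
    return avg_bits / base_bits


if __name__ == "__main__":
    q, s = quantize_group_int4([0.7, -0.35, 0.0, 0.1])
    print(q, dequantize_group(q, s))
    print(f"{1 - compressed_fraction():.1%} smaller")  # 74.2% smaller
```

Dropping from 16-bit to 4-bit weights would ideally save 75%; per-group scales, and any layers kept in higher precision, push the actual reduction below that ideal.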
