According to an article published today on the WeChat public account of the Beijing Academy of Artificial Intelligence (BAAI, also known as Zhiyuan Research Institute), BAAI has announced that its Wudao Tianying Aquila large language model series has been fully upgraded to Aquila2. The upgrade adds a new heavyweight member with 34 billion parameters, Aquila2-34B. Aquila2-34B achieved a leading overall ranking across 22 evaluation benchmarks, and BAAI calls it the strongest open-source Chinese-English bilingual large model.

Beyond setting new records on large-model leaderboards, BAAI has also focused on improving the models' practical capabilities, such as reasoning and generalization. It has achieved a series of results in supporting scenarios such as intelligent agents, code generation, and literature retrieval.

Notably, BAAI has released an entire family of open-source products at once, making its innovative training algorithms and practices available at the same time. The release includes:

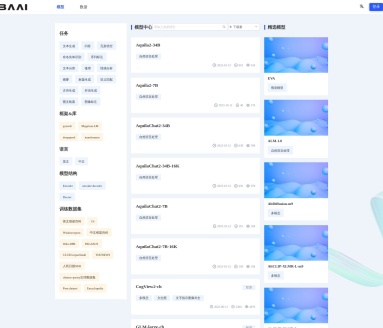

1. A comprehensive upgrade of the Aquila2 model series: the Aquila2-34B/7B base models, the AquilaChat2-34B/7B chat models, and the AquilaSQL text-to-SQL model.

2. A new version of the BGE semantic vector (embedding) model, upgraded to fully cover the four major retrieval needs.

3. FlagScale, an efficient parallel training framework with industry-leading training throughput and GPU utilization.

4. FlagAttention, a high-performance set of attention operators written in the Triton language, with innovative support for long-text training.

Open-source addresses for the full Aquila2 model series:

https://github.com/FlagAI-Open/Aquila2

https://model.baai.ac.cn/

https://huggingface.co/BAAI

AquilaSQL open-source repository:

https://github.com/FlagAI-Open/FlagAI/tree/master/examples/Aquila/Aquila-sql

FlagAttention open-source code repository:

https://github.com/FlagOpen/FlagAttention

BGE2 open-source resources:

paper: https://arxiv.org/pdf/2310.07554.pdf

model: https://huggingface.co/BAAI/llm-embedder

repo: https://github.com/FlagOpen/FlagEmbedding/tree/master/FlagEmbedding/llm_embedder