Using AI to try on clothes online, or "online dress-up", is not a new concept, and plenty of people must have thought of it, but not everyone can easily pull it off!

Today I'll share a foolproof method that anyone can use, completely free of charge. You can even install the software on your own computer and run it locally without any restrictions.

Let’s look at a few examples below.

Let the leather-clad swordsman Lao Huang wear something different!

Once he changes into women's clothes, Lao Huang will turn into Su Ma... haha!

Notice also that even when there is text printed on the T-shirt, the result is still good!

Change Taylor's top!

It looks completely natural, with no sense of incongruity at all.

Of course, you can also take a real-life photo and change the clothes in it!

Without the original picture for comparison, you really couldn't tell that this T-shirt was photoshopped.

Judging from these examples, the results are pretty good. Of course, if we only look at the results, a skilled Photoshop (PS) user could produce them too.

So what is the advantage of AI? In a word: it's simple!

With AI, these edits take almost no effort: just upload the pictures and click a button.

This is far simpler than PS, which is why, once AI image generation came along, hardly anyone bothered doing this in PS anymore.

The following is a detailed introduction on how to operate.

The software (an open-source project) used today is called IDM-VTON. Its homepage sums it up in one sentence: "Improving Diffusion Models for Authentic Virtual Try-on in the Wild."

As the introduction suggests, its main selling point is realism.

This software can be run in two ways.

One is to run it online through the official demo.

The other is to configure and install it on your computer.

Today I'll cover the first, "lightweight" option: all you need is a browser, and there are no hardware requirements.

Open the URL directly:

https://huggingface.co/spaces/yisol/IDM-VTON

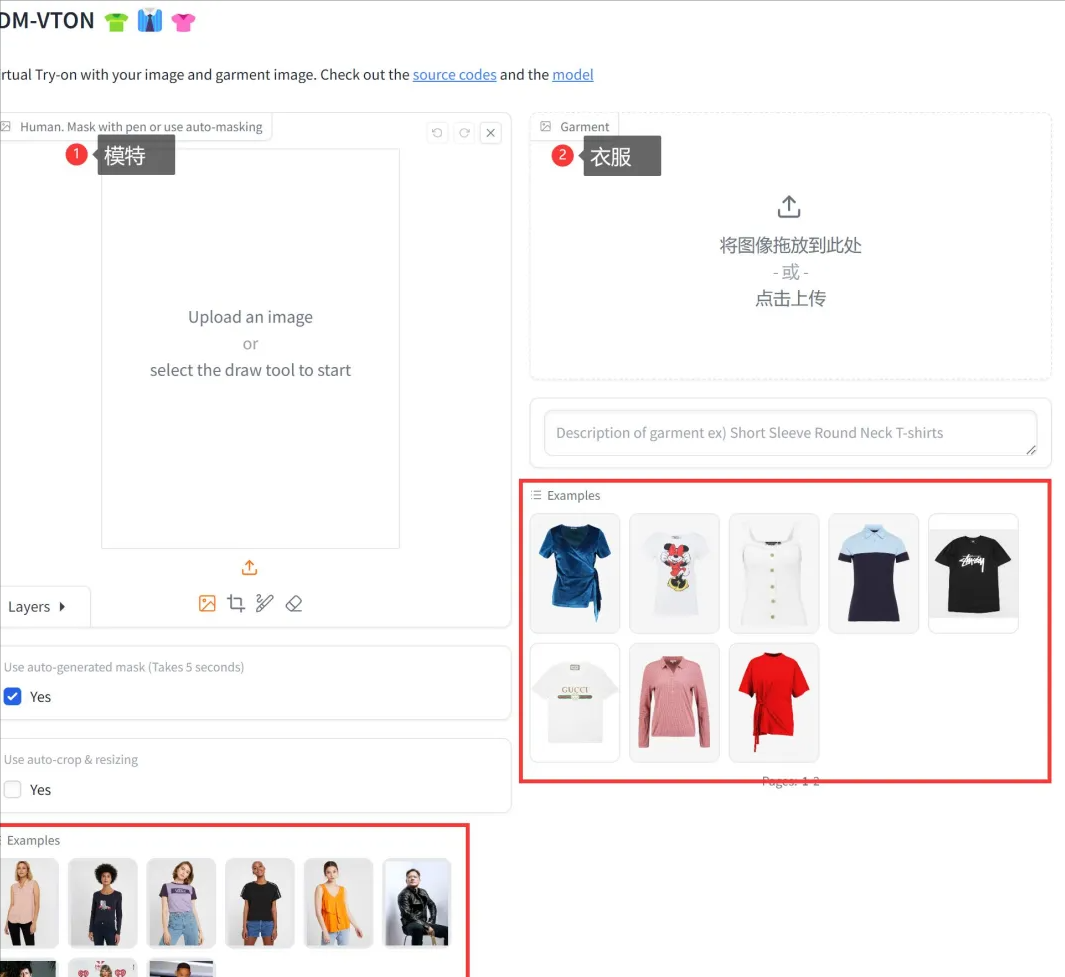

After opening it, you can immediately see the main interface. The interface is very simple and easy to understand.

You just need to upload a photo of the person (model) and a photo of the garment, then click the Try-on button below.

Then wait about ten seconds.

If you don't have your own photos, you can use the sample images (Examples) on the page to try it out quickly.

Just click a sample and it will be loaded into the corresponding input area automatically.

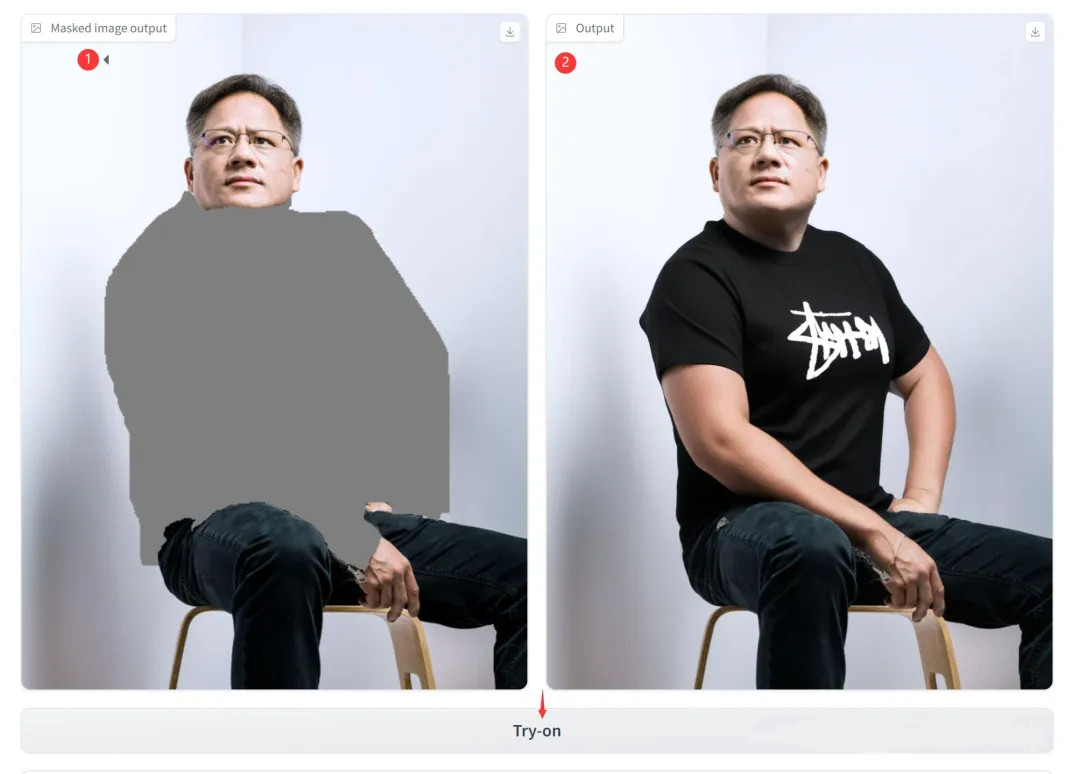

After successful execution, two photos will be generated.

The left one is the photo with the automatically generated mask, and the right one is the final result.

From these two pictures, we can clearly see that the essence of this application is still area replacement or area generation.

The replaced area is determined by a mask, and automatically generated masks don't always work well in every scene.
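To make "area replacement" concrete, here is a minimal sketch of how a mask decides which pixels come from the generated image and which stay from the original. It uses tiny grayscale images as nested lists and a hypothetical `composite` helper; this is not IDM-VTON's code, and the real model generates the masked region with a diffusion model rather than pasting in a ready-made image.

```python
def composite(original, generated, mask):
    """Blend two same-sized grayscale images pixel by pixel.

    mask value 1 -> take the generated pixel, 0 -> keep the original pixel,
    values in between mix the two (a "soft" mask edge).
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    out = []
    for o_row, g_row, m_row in zip(original, generated, mask):
        if not (len(o_row) == len(g_row) == len(m_row)):
            raise ValueError("images and mask must have the same width")
        out.append([m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)])
    return out


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[200, 200, 200],
             [200, 200, 200]]
mask = [[0, 1, 1],
        [0, 0.5, 0]]
print(composite(original, generated, mask))
```

Only the masked pixels change, which is why a badly placed automatic mask leads directly to a badly replaced garment.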

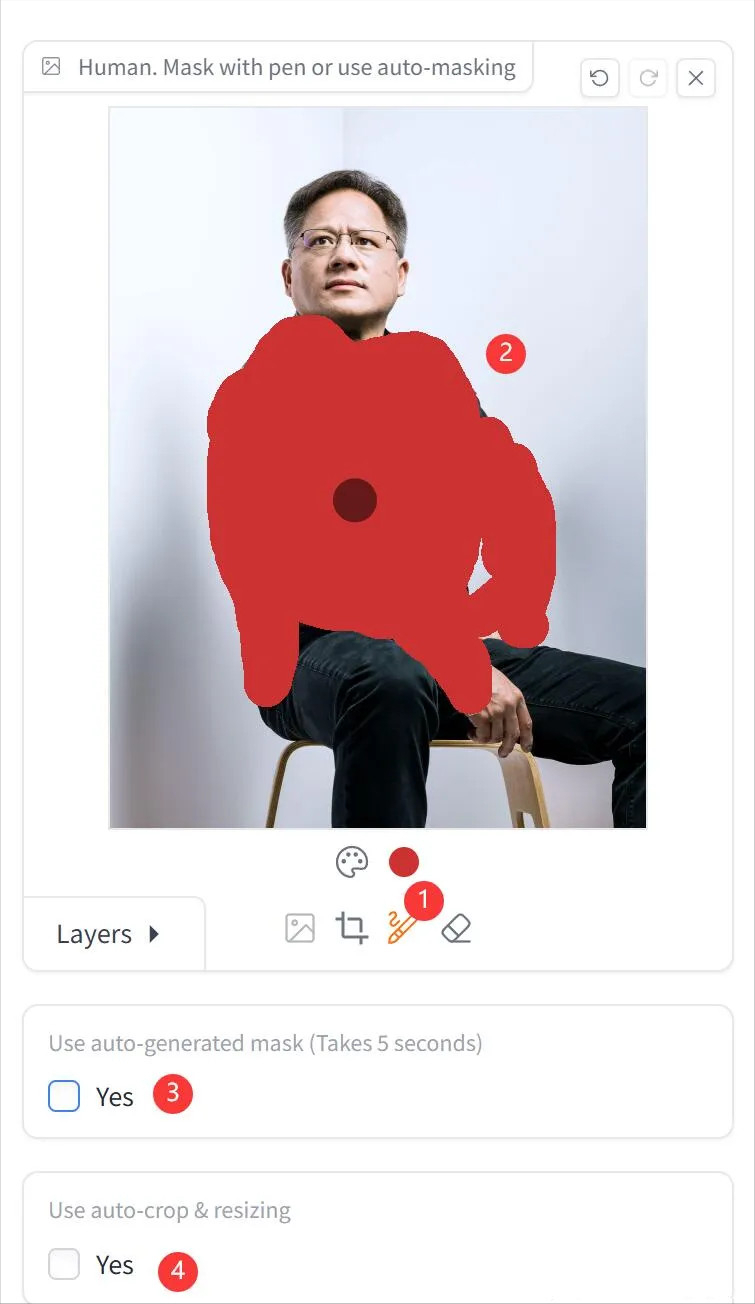

So sometimes we need to paint the mask manually.

Let me briefly talk about how to use manual masking.

① Click the brush tool.

② Paint over the clothing area.

③ Uncheck the box in front of Auto Mask.

This way, only the area you painted will be replaced.
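When Auto Mask is unchecked, your brush strokes take the place of the automatic mask. As a rough illustration, here is a sketch of how a stroke could be turned into a binary mask, assuming a round brush with an integer pixel radius. The `brush_mask` helper is hypothetical; the demo's image editor handles this internally in its own way.

```python
def brush_mask(width, height, stroke, radius):
    """Rasterize a brush stroke into a binary mask (1 = area to replace).

    stroke is a list of integer (x, y) points; every pixel within `radius`
    of any point is marked, which mimics dabbing a round brush along a path.
    """
    mask = [[0] * width for _ in range(height)]
    r2 = radius * radius
    for cx, cy in stroke:
        # Only scan the square around this dab, clipped to the image bounds.
        for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
            for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
                if (x - cx) ** 2 + (y - cy) ** 2 <= r2:
                    mask[y][x] = 1
    return mask


for row in brush_mask(5, 3, stroke=[(1, 1), (3, 1)], radius=1):
    print(row)
```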

Also worth mentioning is option ④, automatic cropping and resizing. If the proportions of your photo differ significantly from the samples, the generated image may look a bit strange; in that case, check this option.
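As an illustration of what automatic cropping can involve, here is a minimal sketch that finds the largest centered 3:4 portrait box in a photo. The 3:4 ratio is an assumption (that the model works on portrait images around 768×1024), and `center_crop_box` is a hypothetical helper, not the demo's actual code.

```python
def center_crop_box(width, height, aspect_w=3, aspect_h=4):
    """Return the largest centered box with ratio aspect_w:aspect_h inside the image.

    The box is (left, top, right, bottom), the convention Pillow's Image.crop uses.
    The 3:4 default is an assumed target format, not taken from the project.
    """
    # First try keeping the full height; if that box would be wider than the
    # image, keep the full width instead and shrink the height.
    crop_w = height * aspect_w // aspect_h
    crop_h = height
    if crop_w > width:
        crop_w = width
        crop_h = width * aspect_h // aspect_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


print(center_crop_box(1920, 1080))  # (555, 0, 1365, 1080): landscape, sides trimmed
print(center_crop_box(1000, 2000))  # (0, 333, 1000, 1666): very tall, top and bottom trimmed
```

With Pillow, the returned tuple could be passed to `Image.crop(box)` and the result scaled with `.resize((768, 1024))`; the 768×1024 target size is likewise an assumption here.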

In addition, under the Try-on button there is an expandable section with two more options: Denoising Steps and Seed.

I'll let GPT-4 explain them.

Denoising Steps

The basic principle of a diffusion model is to add noise that corrupts an image and then remove that noise step by step to generate an image. In this process, "Denoising Steps" is the number of steps in the denoising stage. These steps determine how the model gradually recovers a clear image from a heavily noisy one.
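For reference, the textbook DDPM formulation (a general description of diffusion models, not something specific to IDM-VTON) writes the noisy image at timestep $t$ as follows, where $x_0$ is the clean image and $\bar\alpha_t$ shrinks from close to 1 toward 0 as $t$ goes from 0 to $T$. A trained network $\epsilon_\theta$ predicts the noise, which yields an estimate $\hat{x}_0$ of the clean image; each denoising step uses that estimate to move to a slightly less noisy timestep.

$$
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)
$$

$$
\hat{x}_0 = \frac{x_t - \sqrt{1-\bar\alpha_t}\,\epsilon_\theta(x_t, t)}{\sqrt{\bar\alpha_t}}
$$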

- More denoising steps: usually a smoother generation process, finer-grained control over noise removal, and potentially higher-quality images, at the cost of slower generation.

- Fewer denoising steps: faster generation, but possibly some loss of image quality.
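To see what the step count changes in practice, here is a minimal sketch of one common way a sampler turns "N denoising steps" into a list of timesteps: spread N points evenly over the training timesteps, similar in spirit to the "leading" spacing some diffusers schedulers use. The 1,000 training timesteps and the `inference_timesteps` helper are illustrative assumptions, not IDM-VTON's code.

```python
def inference_timesteps(num_inference_steps, num_train_timesteps=1000):
    """Pick evenly spaced timesteps, ordered from noisiest to cleanest.

    Each returned timestep corresponds to one pass through the denoising network.
    """
    if not 1 <= num_inference_steps <= num_train_timesteps:
        raise ValueError("num_inference_steps must be in [1, num_train_timesteps]")
    step = num_train_timesteps // num_inference_steps
    return [i * step for i in range(num_inference_steps)][::-1]


for n in (5, 30):
    ts = inference_timesteps(n)
    print(n, "steps -> model calls:", len(ts), "first timesteps:", ts[:3])
```

Because each timestep costs one network pass, generation time grows roughly linearly with the step count; with more steps the gap between consecutive timesteps shrinks, so each update makes a smaller correction.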

Seed

In the process of generating images, "Seed" refers to the seed of the random number generator. This seed determines the starting point of randomness for generating images. By setting the same seed, you can ensure that the images generated each time are consistent, even if the same code and parameters are run at different times or on different machines.

- Same seed: the exact same image can be generated again and again.

- Different seed: each run produces a different image, even with the same model and parameter configuration.
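The seed behavior above can be demonstrated with Python's own random number generator. This sketch treats the seed as the input that fixes the initial noise; `noisy_start` is a hypothetical stand-in for how a diffusion pipeline draws its starting noise, not the demo's code.

```python
import random


def noisy_start(seed, n=4):
    """Draw n Gaussian noise values from a generator seeded with `seed`."""
    rng = random.Random(seed)  # private generator, unaffected by global random state
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


a = noisy_start(42)
b = noisy_start(42)
c = noisy_start(43)
print(a == b)  # True: same seed, same starting noise
print(a == c)  # False: different seed, different starting noise
```

In PyTorch-based pipelines the same role is usually played by `torch.Generator().manual_seed(seed)`. Note that bit-identical results across different GPUs or library versions are not always guaranteed in practice.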

In practice, adjusting these parameters can help you control the process and quality of generated images and achieve results that better suit your needs.

Anyone who has used AI image-generation tools such as Stable Diffusion (SD) will already be familiar with these parameters.

The demo is very simple to use: once you have the URL, basically anyone can use it.

But there are still two familiar problems.

First, you may not be able to open this URL at all. Those who know, know; if you don't, I can't really explain it here.

Second, online resources are limited: you may not get any computing power when you need it, or the page may suddenly disappear.

Therefore, we still need a completely offline version.

I have already completed the local installation and configuration. Generating one image takes only a dozen or so seconds on an RTX 3090, which is quite efficient.