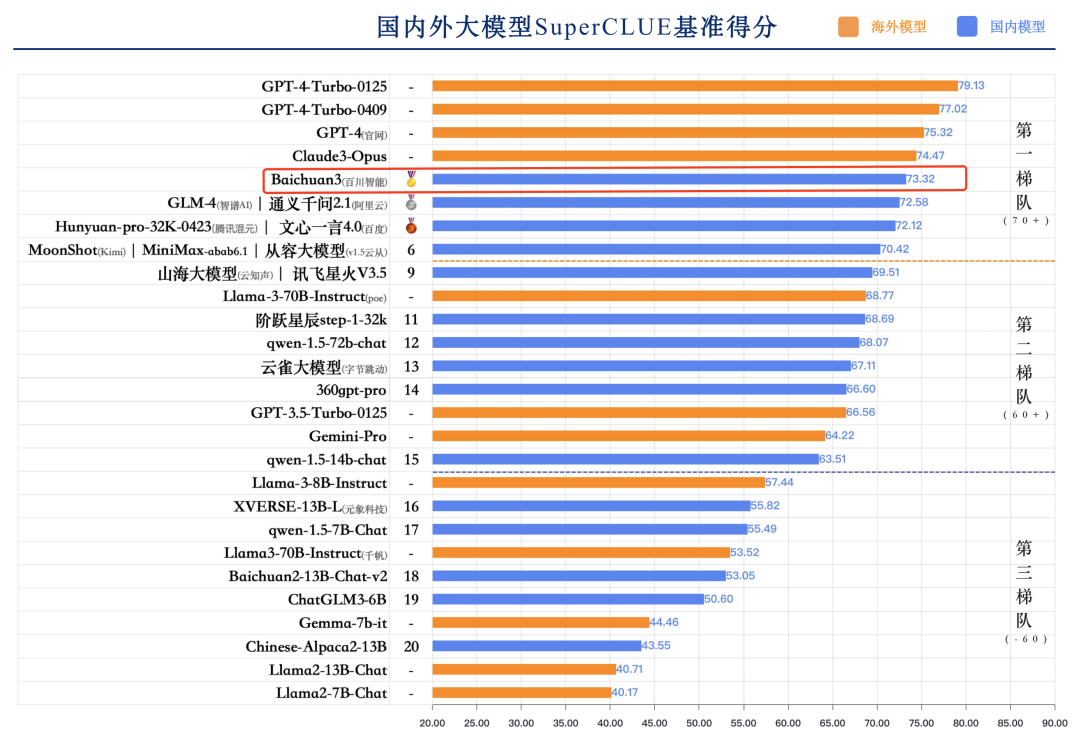

Today, the domestic large model evaluation organization SuperCLUE released the "Chinese Large Model Benchmark Evaluation April 2024 Report". The report selected the April versions of 32 representative large models from China and abroad and used a multi-dimensional comprehensive evaluation to assess the current state of large model development in both. It shows that Baichuan Intelligence's Baichuan 3 ranks first among domestic large models, followed by Zhipu GLM-4, Tongyi Qianwen 2.1, Wenxin Yiyan 4.0, Moonshot (Kimi), and others. Globally, the overseas models GPT-4 and Claude3 scored higher.

SuperCLUE is a comprehensive evaluation benchmark for general-purpose large models in China. Its predecessor is CLUE (The Chinese Language Understanding Evaluation), a third-party benchmark for Chinese language understanding. Unlike traditional evaluations built on multiple-choice questions, SuperCLUE includes open-ended subjective questions. Its multi-dimensional, multi-perspective, and multi-level evaluation system uses dialogue to simulate the application scenarios of large models, so that it tests their generation capabilities directly. SuperCLUE also constructs multi-round dialogue scenarios to examine how large models perform in realistic multi-round conversations, evaluating their handling of context, their memory, and their dialogue capabilities.

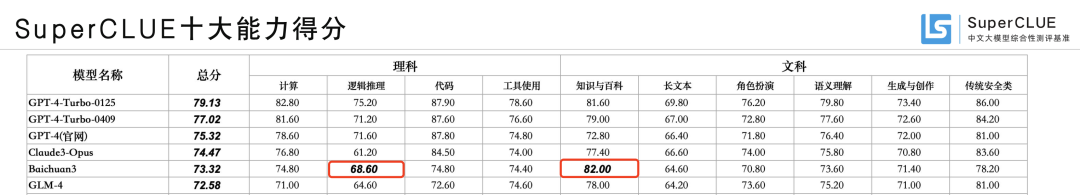

According to reports, the SuperCLUE assessment consists of ten basic tasks, including logical reasoning, code, language comprehension, long text, and role-playing. The questions are multi-round, open-ended short-answer questions, and the assessment set contains 2,194 questions in total.

The test results show that Baichuan 3 is balanced across both the arts and the sciences. In knowledge and encyclopedia ability, Baichuan 3 surpassed GPT-4-Turbo with a score of 82, ranking first among all 32 domestic and overseas large models in the evaluation. In "logical reasoning", the ability taken to represent a large model's intelligence, it surpassed Claude3-Opus with a score of 68.60 and ranked first among domestic large models. Baichuan 3 also performed well in computation, code, and tool use, ranking among the top three in China in each.