Alibaba recently announced the open-source release of Qwen1.5-110B, the first model in the Qwen1.5 series at the 100-billion-parameter scale. The model is comparable to Meta-Llama3-70B in base capability evaluations and performs well in Chat evaluations, including MT-Bench and AlpacaEval 2.0.


According to reports, Qwen1.5-110B uses the same Transformer decoder architecture as the other Qwen1.5 models. It includes grouped query attention (GQA), which makes model inference more efficient. The model supports a context length of 32K tokens. It also remains multilingual, supporting English, Chinese, French, Spanish, German, Russian, Japanese, Korean, Vietnamese, Arabic and other languages.
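
To give a rough sense of why GQA helps at inference time, the sketch below estimates the key/value cache size for a single 32K-token sequence with and without grouped key/value heads. The layer count, head counts, head dimension and 16-bit cache precision are hypothetical values chosen for illustration and are not taken from the announcement; `kv_cache_bytes` is likewise an illustrative helper, not part of any library.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Estimate the KV cache size for one sequence.

    Each layer stores two tensors (keys and values), each holding
    seq_len * num_kv_heads * head_dim values.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical configuration (assumed for illustration, not from the article).
NUM_LAYERS = 80
NUM_QUERY_HEADS = 64   # standard multi-head attention keeps one KV head per query head
NUM_KV_HEADS = 8       # GQA shares each KV head across a group of query heads
HEAD_DIM = 128
SEQ_LEN = 32 * 1024    # the 32K-token context length mentioned above

mha_bytes = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
gqa_bytes = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"Multi-head attention cache: {mha_bytes / 2**30:.1f} GiB")
print(f"Grouped query attention cache: {gqa_bytes / 2**30:.1f} GiB")
print(f"Reduction factor: {mha_bytes // gqa_bytes}x")
```

Under these assumed numbers, the cache shrinks by the ratio of query heads to key/value heads, which is where the memory and bandwidth savings during decoding come from.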

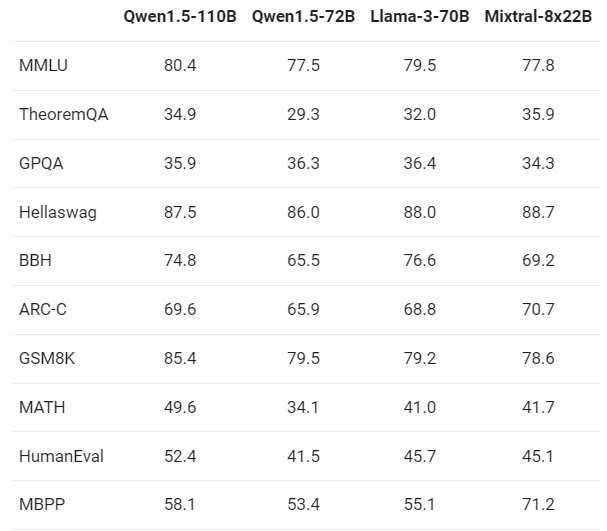

Alibaba compared the Qwen1.5-110B model with the recent SOTA language models Meta-Llama3-70B and Mixtral-8x22B. The results are as follows:

The above results show that the new 110B model is at least comparable to the Llama-3-70B model in terms of base capabilities. Alibaba did not make major changes to the pre-training method for this model, so it attributes the performance improvement over the 72B model mainly to the increase in model size.

Alibaba also conducted a Chat evaluation on MT-Bench and AlpacaEval 2.0. The results are as follows:

Alibaba said that on the two Chat benchmark evaluations, the 110B model performs significantly better than the previously released 72B model. The consistent improvement in evaluation results suggests that a larger, more powerful base language model can lead to better Chat models, even without drastically changing the post-training approach.

Finally, Alibaba said that Qwen1.5-110B is the largest model in the Qwen1.5 series. It is also the first model in the series to have more than 100 billion parameters. It performs well against the recently released SOTA model Llama-3-70B and significantly outperforms the 72B model.