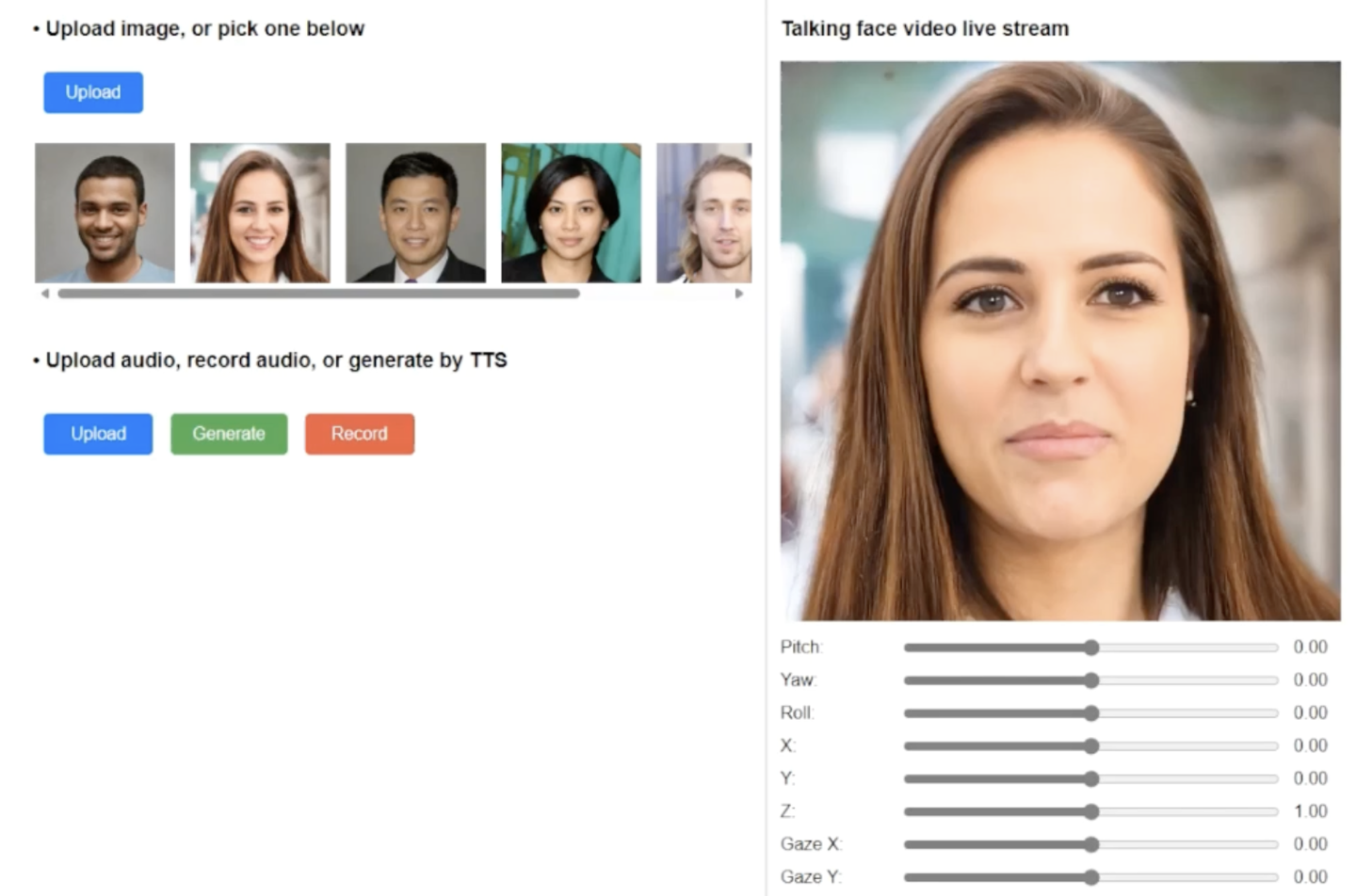

According to Microsoft's official press release, Microsoft today announced VASA-1, a framework for generating video from a still image. The AI framework needs only a single portrait photo of a real person and a clip of that person's speech audio. From these it can generate accurate, realistic lip-synced video in which the person appears to speak the audio, and the result is said to look particularly natural in its facial expressions and head movements.

Much of the related research in the industry currently focuses on lip syncing, while facial dynamics and head movement are usually ignored. As a result, the generated faces appear stiff and unconvincing, and fall into the uncanny valley.

Microsoft's VASA-1 framework overcomes the limitations of previous face generation technology. The researchers trained a diffusion Transformer model on overall facial dynamics and head movements. The model treats all possible facial dynamics, including lip movements, expressions, eye gaze, and blinking, as a single latent variable (that is, it generates the entire, highly detailed face at once), and is said to be able to generate 512×512 video at 40 FPS in real time.
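To put the quoted figures in perspective, the short sketch below works out what 512×512 at 40 FPS implies for a real-time generator: how much time is available per frame and how many pixels must be produced each second. The function names are hypothetical and used only for illustration; the calculation is plain arithmetic on the numbers reported above and says nothing about how VASA-1 is actually implemented.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of output pixels that must be generated each second."""
    return width * height * fps


# Figures quoted in the article: 512x512 resolution at 40 FPS.
budget = frame_budget_ms(40)
throughput = pixels_per_second(512, 512, 40)

print(f"Per-frame budget: {budget} ms")
print(f"Pixel throughput: {throughput:,} pixels/s")
```

At 40 FPS each frame has to be ready within 25 ms, which amounts to roughly 10.5 million pixels per second at this resolution.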

Microsoft also used 3D techniques to help annotate facial features and designed an additional loss function, claiming that VASA-1 can not only generate high-quality face video but also effectively capture and reproduce the 3D structure of the face.