Llama 3 was released today. It provides pre-trained and instruction-fine-tuned language models with 8B and 70B parameters. These models will soon be available on mainstream platforms such as AWS, Google Cloud, and Microsoft Azure, with strong support from hardware vendors such as AMD and Intel.

Llama 3 direct link: https://llama.meta.com/llama3

The Llama Chinese Community will walk you through understanding and using Llama 3 from the following angles:

1. Llama3 introduction, performance and technical analysis

2. How to experience and download Llama3 models

3. How to call Llama3

4. Chat with industry experts about Llama3

1. Introduction to Llama3

“The best open-source large model”

The new Llama 3 models, in 8B and 70B parameter versions, are a major upgrade over Llama 2. The Llama 3 pre-trained and instruction-fine-tuned models perform well at the 8B and 70B parameter scales, making them the best open-source models currently available at those scales. Improvements in post-training significantly reduced the false refusal rate, improved alignment, and increased the diversity of the model’s responses.

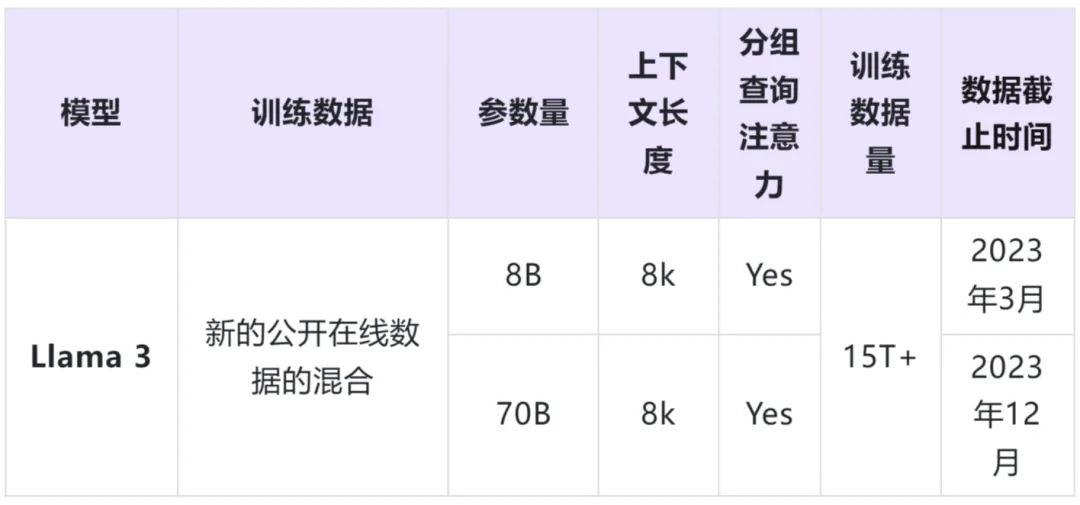

Llama3 model details at a glance

Performance

Llama 3 has also made great progress in capabilities such as reasoning, code generation, and instruction following, which makes the model easier to steer. The performance and user experience of the model are significantly improved.

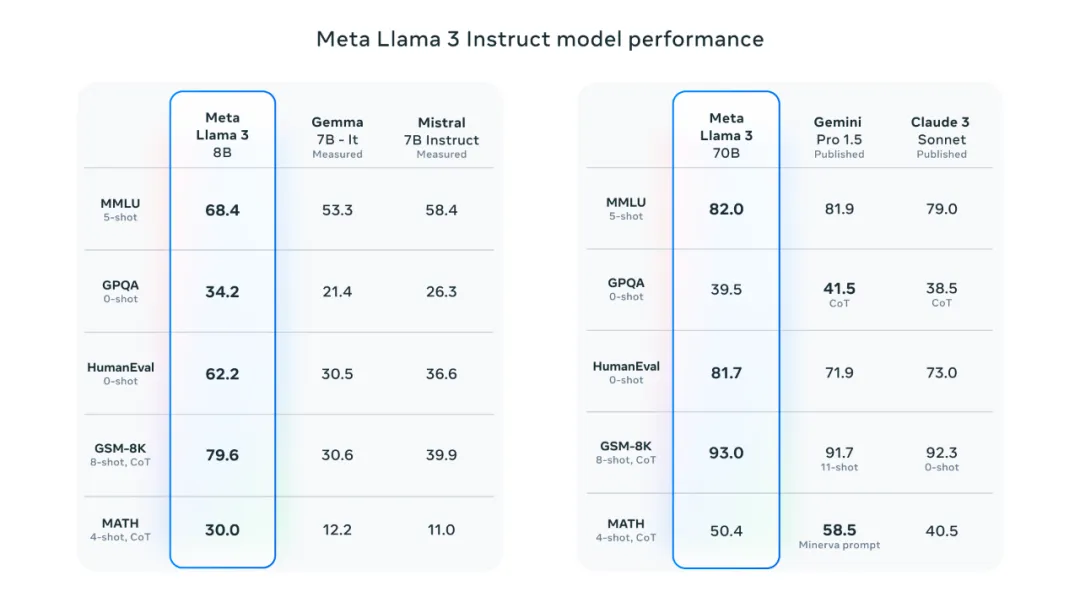

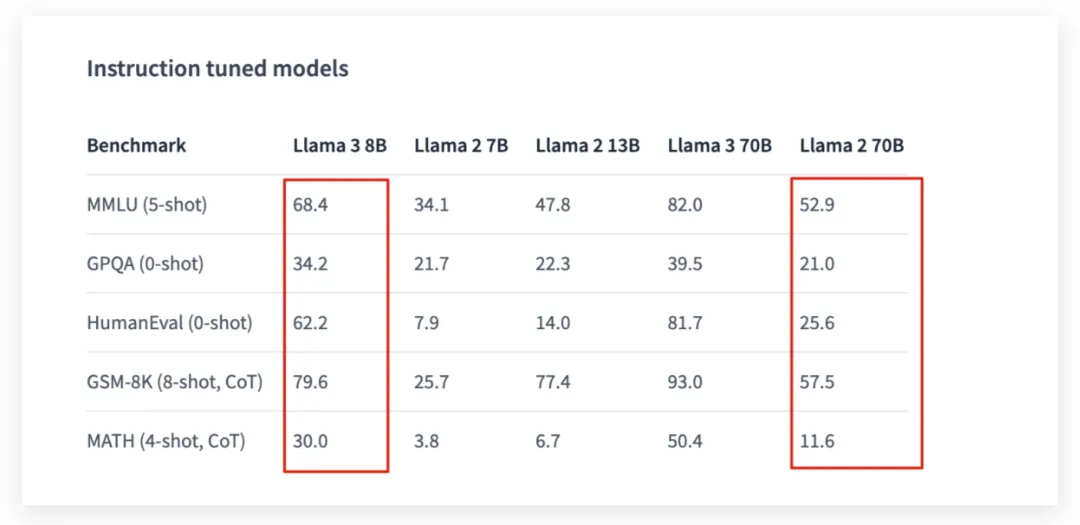

Llama 3 8B outperforms other open-source models such as Mistral’s Mistral 7B and Google’s Gemma 7B on at least nine benchmarks. Both of those models contain 7 billion parameters. Llama 3 8B performs well on the following benchmarks:

MMLU: A multi-task language understanding benchmark.

ARC: A benchmark of grade-school-level science questions that test reasoning.

DROP: A reading comprehension test that requires discrete reasoning (such as counting and arithmetic) over paragraphs.

GPQA: A set of graduate-level questions covering biology, physics, and chemistry.

HumanEval: A code generation test.

GSM-8K: Grade-school math word problems.

MATH: A benchmark of competition-level mathematics problems.

AGIEval: A problem-solving test set.

BIG-Bench Hard: An assessment of challenging reasoning tasks, including commonsense reasoning.

Llama 3 70B outperforms Claude 3 Sonnet, one of the weaker models in the Claude 3 family, on five benchmarks: MMLU, GPQA, HumanEval, GSM-8K, and MATH. These results highlight the strong performance of the Llama 3 70B model across a wide range of application domains.

During the development of Llama 3, the team focused not only on benchmark performance but also on optimizing performance in real-world application scenarios.

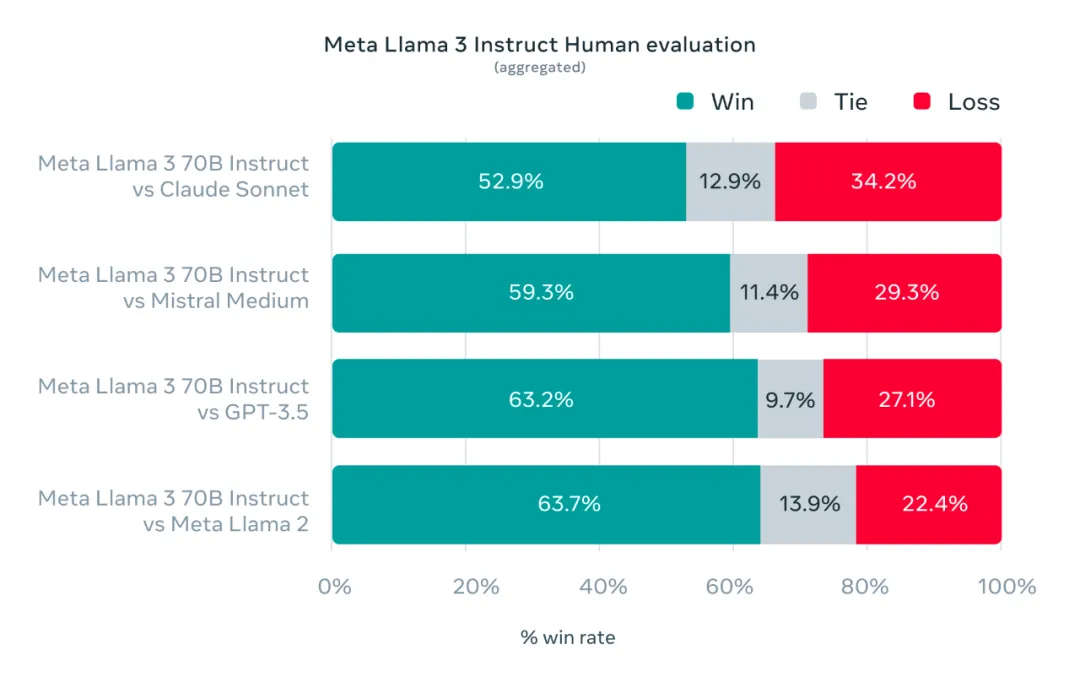

The team created a new high-quality human evaluation set covering 1,800 prompts across 12 key use cases: asking for advice, brainstorming, classification, closed question answering, coding, creative writing, information extraction, inhabiting a character, open question answering, reasoning, rewriting, and summarization.

The human evaluation figure shows how Llama 3 compares with Claude Sonnet, Mistral Medium, and GPT-3.5 on these categories and prompts.

In addition, the Llama 3 8B model performed better than the Llama 2 70B model in the test results.

Technical Details

The development of Llama 3 emphasizes excellent language model design, focusing on innovation, scaling, and optimization. The project revolves around four key elements: model architecture, pre-training data, scaling up pre-training, and instruction fine-tuning.

Model Architecture

Llama 3 uses a relatively standard decoder-only Transformer architecture and makes key improvements over Llama 2. The model uses a tokenizer with a 128K-token vocabulary, which encodes language more efficiently and significantly improves performance.

In both the 8B and 70B models, Llama 3 adopts grouped query attention (GQA) to improve inference efficiency. The models were trained on sequences of 8,192 tokens, using a mask to ensure that self-attention does not cross document boundaries.
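The document-boundary masking described above can be illustrated with a minimal sketch. It assumes several documents are packed into one training sequence and that each token carries a document index; the function name `document_causal_mask` and the `doc_ids` input are hypothetical and are not taken from Meta's training code.

```python
def document_causal_mask(doc_ids):
    """Return mask[i][j] = True when position i may attend to position j.

    doc_ids[k] is the (hypothetical) index of the document that token k
    belongs to within one packed training sequence.
    """
    n = len(doc_ids)
    return [
        # Causal (j <= i) and restricted to tokens of the same document.
        [j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
        for i in range(n)
    ]


# Two documents packed into one sequence: tokens 0-2 and tokens 3-4.
mask = document_causal_mask([0, 0, 0, 1, 1])
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each printed row shows which earlier positions a token can see; the first token of the second document sees only itself, so attention never reaches back into the previous document.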

Training Data

To build excellent language models, it is essential to curate large, high-quality training datasets. Llama 3 is pre-trained on more than 15T tokens, all collected from publicly available sources. The training dataset is seven times larger than the one used for Llama 2 and contains four times more code.

In addition, over 5% of the pre-training dataset consists of high-quality non-English data covering more than 30 languages.

To ensure that the model is trained on the highest-quality data, the team developed a series of data-filtering pipelines, including heuristic filters, NSFW filters, semantic deduplication methods, and text quality predictors.
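As a rough illustration of how such filtering stages can be chained, here is a toy sketch. It is not Meta's pipeline: the thresholds (`min_words`, `max_symbol_ratio`) are made-up values, and exact-hash deduplication after normalization stands in for the semantic deduplication mentioned above, which would normally rely on embeddings or similar techniques.

```python
import hashlib
import re


def passes_heuristics(text, min_words=5, max_symbol_ratio=0.3):
    """Toy heuristic filter: enough words, not dominated by symbols."""
    words = text.split()
    if len(words) < min_words:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / len(text) <= max_symbol_ratio


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash equally."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def filter_corpus(docs):
    """Apply the heuristic filter, then drop exact duplicates (after normalization)."""
    seen = set()
    kept = []
    for doc in docs:
        if not passes_heuristics(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(doc)
    return kept


docs = [
    "Llama 3 is pre-trained on more than 15T tokens.",
    "llama 3 is  pre-trained on more than 15T tokens.",  # duplicate after normalization
    "$$$ ### !!!",                                        # too short, only symbols
    "Grouped query attention improves inference efficiency in both models.",
]
kept = filter_corpus(docs)
print(len(kept), "documents kept")
```

A production pipeline would add the NSFW and quality classifiers as further stages in the same loop.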

Scaling up pre-training

During the development of Llama 3, a lot of effort went into scaling up pre-training. By developing detailed scaling laws, the team was able to optimize the data mix and make the best use of training compute. Even after training on 15T tokens, the 8B and 70B parameter models continued to improve log-linearly.

In addition, by combining three types of parallelism (data parallelism, model parallelism, and pipeline parallelism), Llama 3 was trained efficiently on two custom-built 24K-GPU clusters.

Together, these techniques made training Llama 3 about three times more efficient than training Llama 2, giving users a better experience and a more capable model.

Instruction fine-tuning

To fully realize the potential of the pre-trained models for conversational use cases, the Llama 3 team used a combination of techniques: supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO).

The quality of the prompts used in SFT and of the preference rankings used in PPO and DPO has a huge impact on the performance of the aligned model. By carefully curating this data and performing quality assurance on the annotations, Llama 3 achieves significant improvements on both reasoning and coding tasks.
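To make the role of preference rankings more concrete, here is a minimal sketch of the standard DPO objective for a single preference pair, as commonly stated in the DPO literature. The article does not describe Meta's actual loss or hyperparameters, so the function name `dpo_loss` and the value `beta=0.1` are assumptions for illustration only.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of responses.

    Inputs are summed token log-probabilities of each response under the
    policy being trained and under a frozen reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) equals log(1 + exp(-x)); for very negative x this
    # is approximately -x, which also avoids overflow in exp().
    if logits > -30:
        return math.log1p(math.exp(-logits))
    return -logits


# The policy favors the chosen response more than the reference does,
# so the loss falls below log(2), the value when there is no preference.
print(dpo_loss(-5.0, -9.0, -7.0, -7.0) < math.log(2))
```

The loss only rewards the policy for ranking the human-preferred response above the rejected one relative to the reference model, which is why noisy or inconsistent rankings directly degrade the result.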

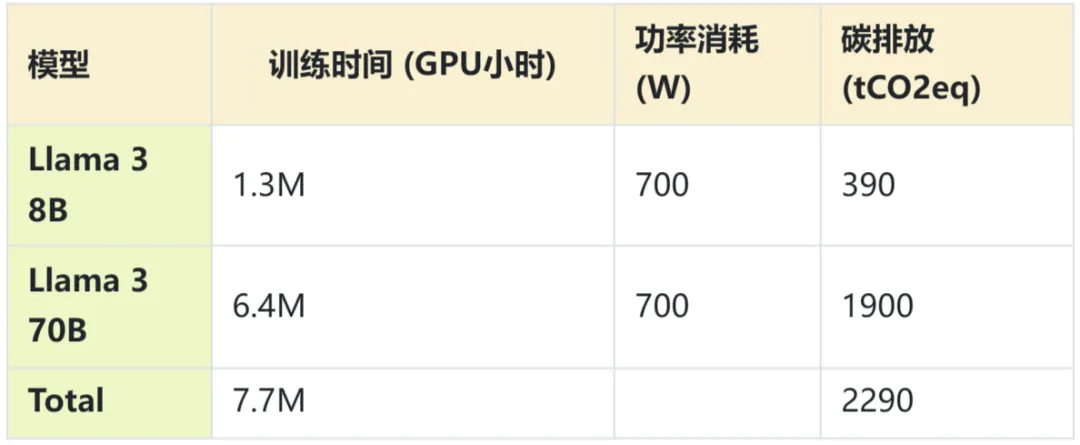

Computing power consumption and carbon emissions

Llama 3 pre-training used H100-80GB GPUs (thermal design power, TDP, of 700 W). Training required 7.7 million GPU hours, and total carbon emissions were 2,290 tonnes of carbon dioxide equivalent (tCO2eq), all of which were offset through Meta’s sustainability program.

2. Llama3 model experience and download
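The figures above can be related with some back-of-the-envelope arithmetic. The sketch below assumes every GPU draws its full TDP for every GPU hour, which is an upper bound on GPU energy, and it ignores data-center overhead and non-GPU hardware. The implied emission factor is derived only from the numbers in the text and is not a figure reported by Meta.

```python
GPU_HOURS = 7.7e6          # from the text
TDP_WATTS = 700            # H100-80GB thermal design power, from the text
EMISSIONS_TCO2EQ = 2290    # from the text

# Upper-bound energy: every GPU drawing its full TDP for every hour.
energy_mwh = GPU_HOURS * TDP_WATTS / 1e6   # watt-hours -> megawatt-hours

# Emission factor implied by the reported totals under that assumption.
implied_factor = EMISSIONS_TCO2EQ / energy_mwh   # tCO2eq per MWh

print(f"{energy_mwh:.0f} MWh, {implied_factor:.3f} tCO2eq/MWh")
```

Under these assumptions the training run corresponds to roughly 5.4 GWh of GPU energy.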

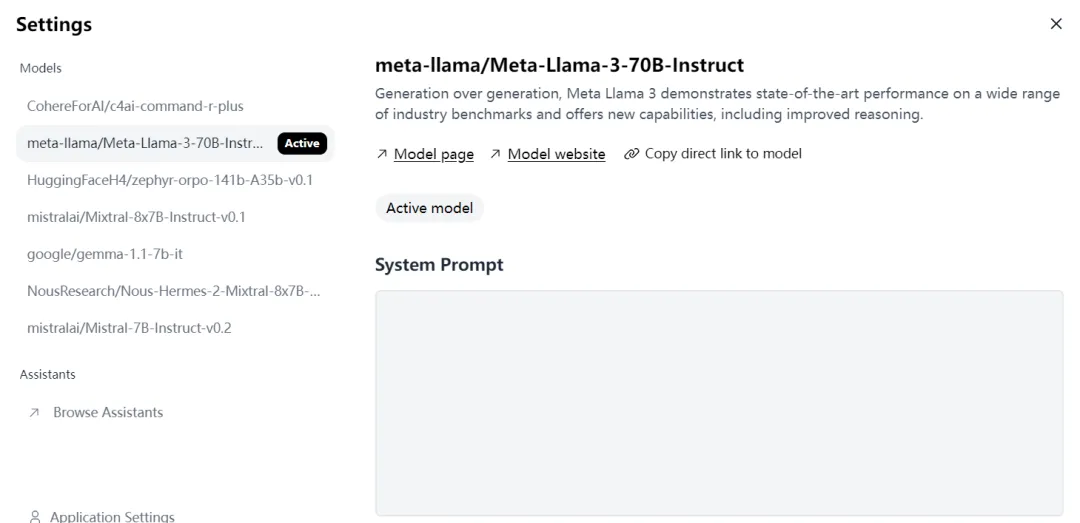

Hugging Face demo link:

https://huggingface.co/chat/

Meta.ai demo link:

https://www.meta.ai/

Model download application:

https://llama.meta.com/llama-downloads

We recommend trying it on Hugging Face first. The community’s official website, https://llama.family, is also rolling out demo links and model downloads for domestic (China-based) users.

3. How to call Llama3

Llama 3 uses several special tokens:

<|begin_of_text|>: Equivalent to the BOS token, marking the beginning of the prompt.

<|eot_id|>: Marks the end of a message (end of turn); during chat generation it acts as the stop marker, much like an EOS token.

<|start_header_id|>{role}<|end_header_id|>: Identifies the role corresponding to a message, which can be "system", "user", or "assistant".
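As a sketch of how these special tokens combine into a chat prompt, the helper below assembles one from a list of messages. The function name `build_llama3_prompt` and the message dictionary layout are hypothetical. The two newlines after each role header follow the prompt format as published in Meta's model documentation, to the best of my knowledge, rather than anything shown in this article, so treat that detail as an assumption and check it against the official format.

```python
BEGIN_OF_TEXT = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def header(role):
    """Role header that precedes every message."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama3_prompt(messages):
    """Assemble a chat prompt from a list of {"role", "content"} dicts.

    The prompt ends with an open assistant header so that the model
    writes the next assistant message.
    """
    allowed_roles = {"system", "user", "assistant"}
    parts = [BEGIN_OF_TEXT]
    for message in messages:
        if message["role"] not in allowed_roles:
            raise ValueError(f"unknown role: {message['role']}")
        parts.append(header(message["role"]) + message["content"].strip() + EOT)
    parts.append(header("assistant"))
    return "".join(parts)


prompt = build_llama3_prompt([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is Llama 3?"},
])
print(prompt)
```

Multi-turn conversations use the same function: append each earlier assistant reply and the next user message to the list before building the prompt again.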

Base model call

Calling the Llama 3 base model is relatively simple: place the user text right after the start token <|begin_of_text|>, and the model will generate a continuation of {{ user_message }}.

<|begin_of_text|>{{ user_message }}

Dialogue model call

For a single-turn conversation, the prompt has five parts, marked (1) to (5) in the template below; the markers are annotations and not part of the prompt. Part (1), <|begin_of_text|>, marks the beginning of the prompt. Part (2) is the role header (for example, "user"). Part (3) contains the message itself. Part (4), <|eot_id|>, marks the end of the message. Part (5) is the header for the next role (for example, "assistant"). The model then generates its reply, {{ assistant_message }}, after the prompt.

<|begin_of_text|>(1)<|start_header_id|>user<|end_header_id|>(2) {{ user_message }}(3)<|eot_id|>(4)<|start_header_id|>assistant<|end_header_id|>(5)

You can also add a system message to the prompt by placing {{ system_prompt }} after the system header.

<|begin_of_text|><|start_header_id|>system<|end_header_id|> {{ system_prompt }}<|eot_id|><|start_header_id|>user<|end_header_id|> {{ user_message }}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

The same applies to multi-turn conversations: by including multiple user and assistant messages in the prompt, the model can continue a multi-turn conversation.

<|begin_of_text|><|start_header_id|>system<|end_header_id|> {{ system_prompt }}<|eot_id|><|start_header_id|>user<|end_header_id|> {{ user_message_1 }}<|eot_id|><|start_header_id|>assistant<|end_header_id|> {{ model_answer_1 }}<|eot_id|><|start_header_id|>user<|end_header_id|> {{ user_message_2 }}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

4. Next steps

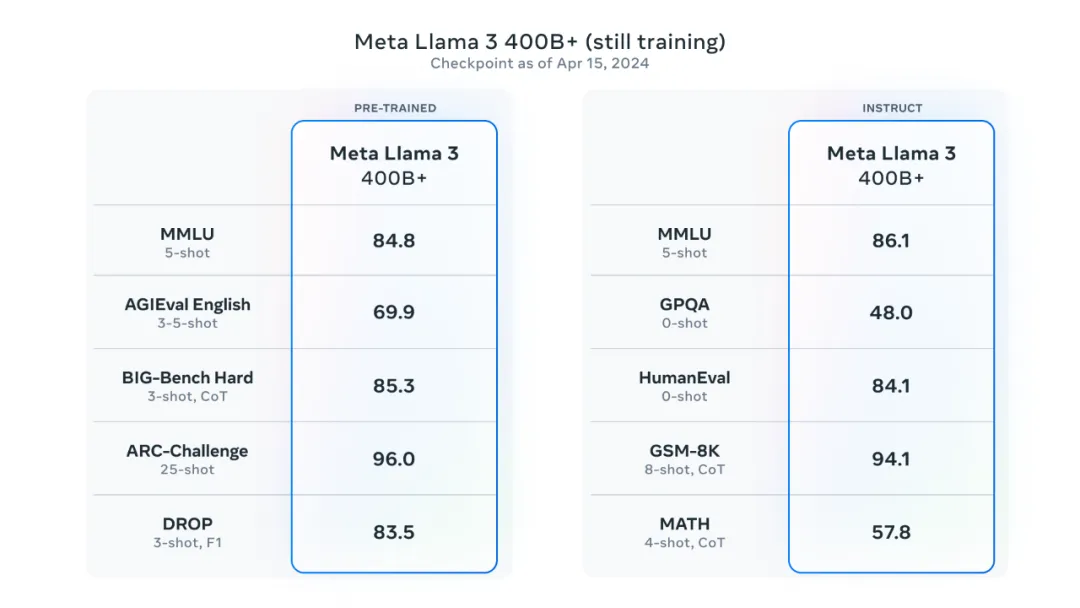

The Llama 3 400B model is still in training...