Tencent's Tianqin Laboratory (Lyra Lab) has just open sourced MuseV, an AI digital human project. Its full name is: MuseV: Infinite-length and High Fidelity Virtual Human Video Generation with Visual Conditioned Parallel Denoising. MuseV and the later-released MuseTalk, which handles video lip sync, together form a complete AI digital human solution. With both projects open source, we can create AI digital human videos of unlimited length for free.

Project Features

1. No limit on video length

2. Text-to-video generation

3. Video-to-video generation

4. Compatible with the Stable Diffusion ecosystem, including base models, LoRA, ControlNet, etc.

5. Supports multiple reference image techniques, such as IPAdapter, ReferenceOnly, IPAdapterFaceID, etc.

How to use

There are currently four ways to use the open source MuseV project.

1. Clone the repository and deploy it yourself by following the technical instructions.

Project address: github.com/tmelyralab/musev

2. Try the official demo hosted on Hugging Face.

Demo address: huggingface.co/spaces/AnchorFake/MuseVDemo

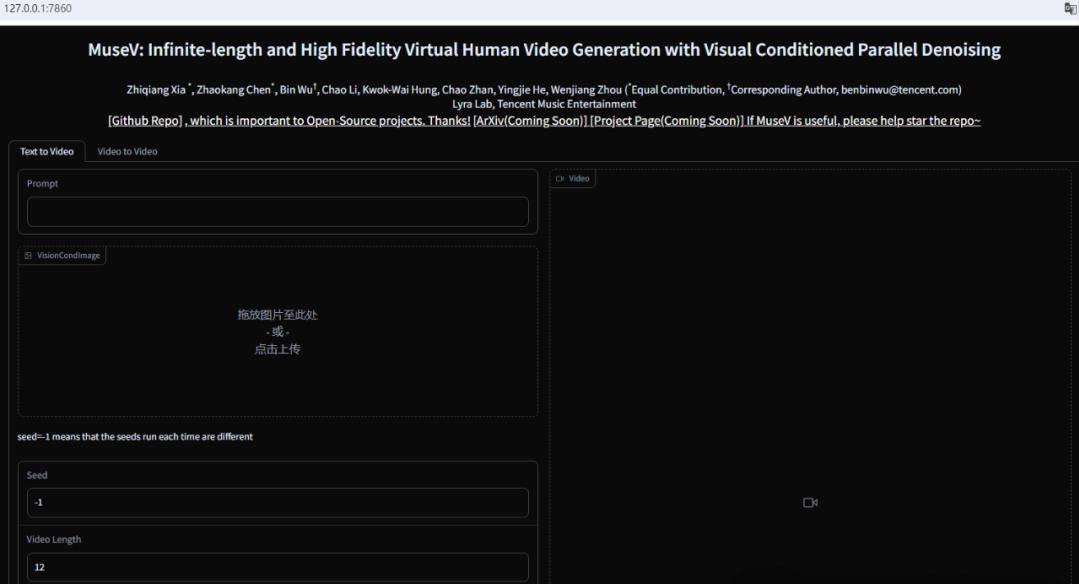

3. One-click local deployment on Windows. This is an all-in-one package made by the Bilibili creator "Crossfish". Download it, unzip it, and run it with one click.

Network disk address: https://www.123pan.com/s/Pf5Yjv-Bb9W3.html

Extraction code: glut

The network disk contains two files: MuseV and MuseTalk. After downloading, unzip the MuseV file, then run the "01 Run Program" file.

Apart from Video Length, the other parameters can be left unchanged. The defaults are 6 frames per second and a Video Length of 12 frames, which produces a 2-second video.
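The arithmetic behind those defaults can be sketched as follows. This is only an illustration: it assumes the Video Length value is a frame count (which matches the default of 12 giving 2 seconds at 6 frames per second), and the function names are hypothetical, not part of MuseV.

```python
import math


def video_duration_seconds(num_frames: int, fps: int) -> float:
    """Duration of a clip, assuming Video Length is a frame count."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


def frames_for_duration(seconds: float, fps: int) -> int:
    """Smallest frame count that covers the requested duration."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return math.ceil(seconds * fps)


if __name__ == "__main__":
    # Defaults from the package: 12 frames at 6 frames per second.
    print(video_duration_seconds(12, 6))
    # Frame count to enter for a 10-second clip at the same frame rate.
    print(frames_for_duration(10, 6))
```

Under this assumption, a 10-second video at the default frame rate needs a Video Length of 60.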

W and H default to -1, which means the size of the reference image is used. The target video size is (W, H). The smaller W and H are, the greater the motion and the lower the video quality. The larger W and H are, the smaller the motion and the higher the video quality.
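The -1 convention can be pictured with a small sketch. The helper below is hypothetical and is not MuseV's own code; in particular, resolving W and H independently when only one of them is -1 is an assumption, and the package may handle that case differently.

```python
from typing import Tuple


def resolve_target_size(
    width: int, height: int, ref_size: Tuple[int, int]
) -> Tuple[int, int]:
    """Return the (W, H) of the output video.

    A value of -1 falls back to the matching dimension of the
    reference image (assumed behaviour, see the note above).
    """
    ref_w, ref_h = ref_size
    w = ref_w if width == -1 else width
    h = ref_h if height == -1 else height
    if w <= 0 or h <= 0:
        raise ValueError("width and height must be positive or -1")
    return w, h


if __name__ == "__main__":
    # Defaults: both -1, so the reference image size is used.
    print(resolve_target_size(-1, -1, (512, 768)))
    # Explicit size: the reference image size is ignored.
    print(resolve_target_size(1024, 1536, (512, 768)))
```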

Note: It is best to put the package in a folder on the C drive whose path contains only English letters. Turn on a proxy or VPN before running, because several models are downloaded automatically. You need a graphics card with 12 GB of video memory or more; the larger the better.

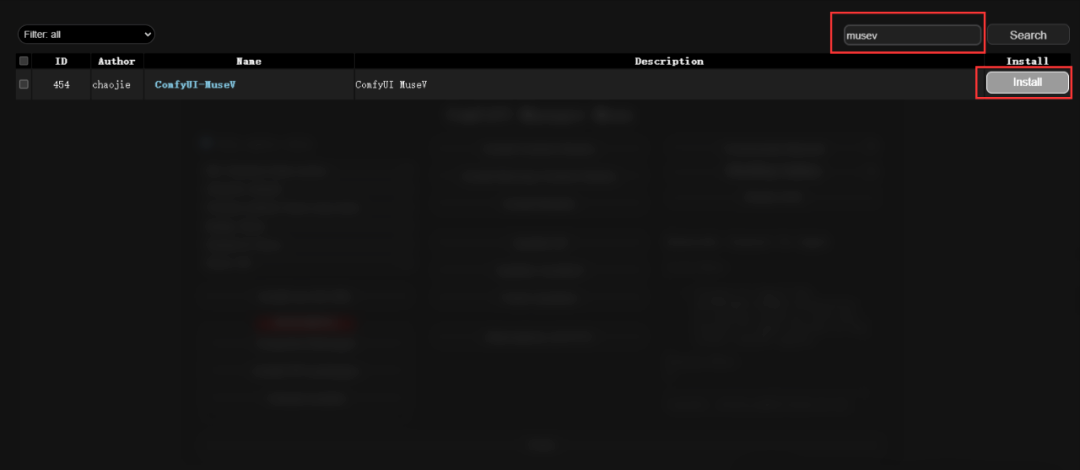

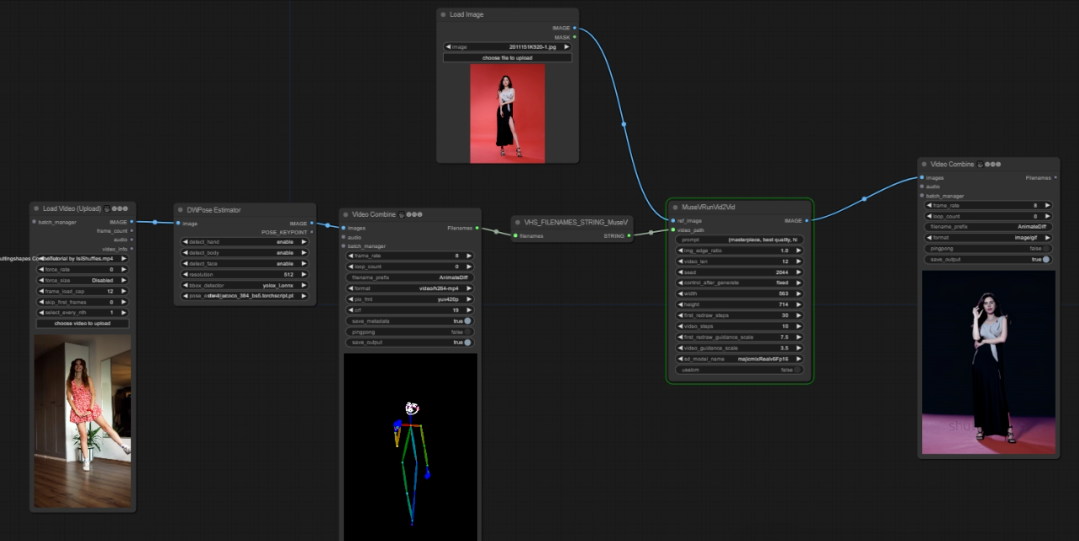

4. Run it in ComfyUI.

In the ComfyUI manager, search for "MuseV" on the node installation page and click Install.

Download the workflow and import it into ComfyUI.

Workflow download address: github.com/chaojie/ComfyUI-MuseV

Overall, the results are good, though very little can be controlled at present. The larger the image, the smaller the motion, the more video memory is consumed, and the longer generation takes, so it is recommended not to exceed 1024. On a 2080 Ti with 22 GB of video memory, it took me more than an hour to generate a 10-second video at 1024*1536. Next time I will cover MuseTalk, the companion lip sync project, which is more fun.