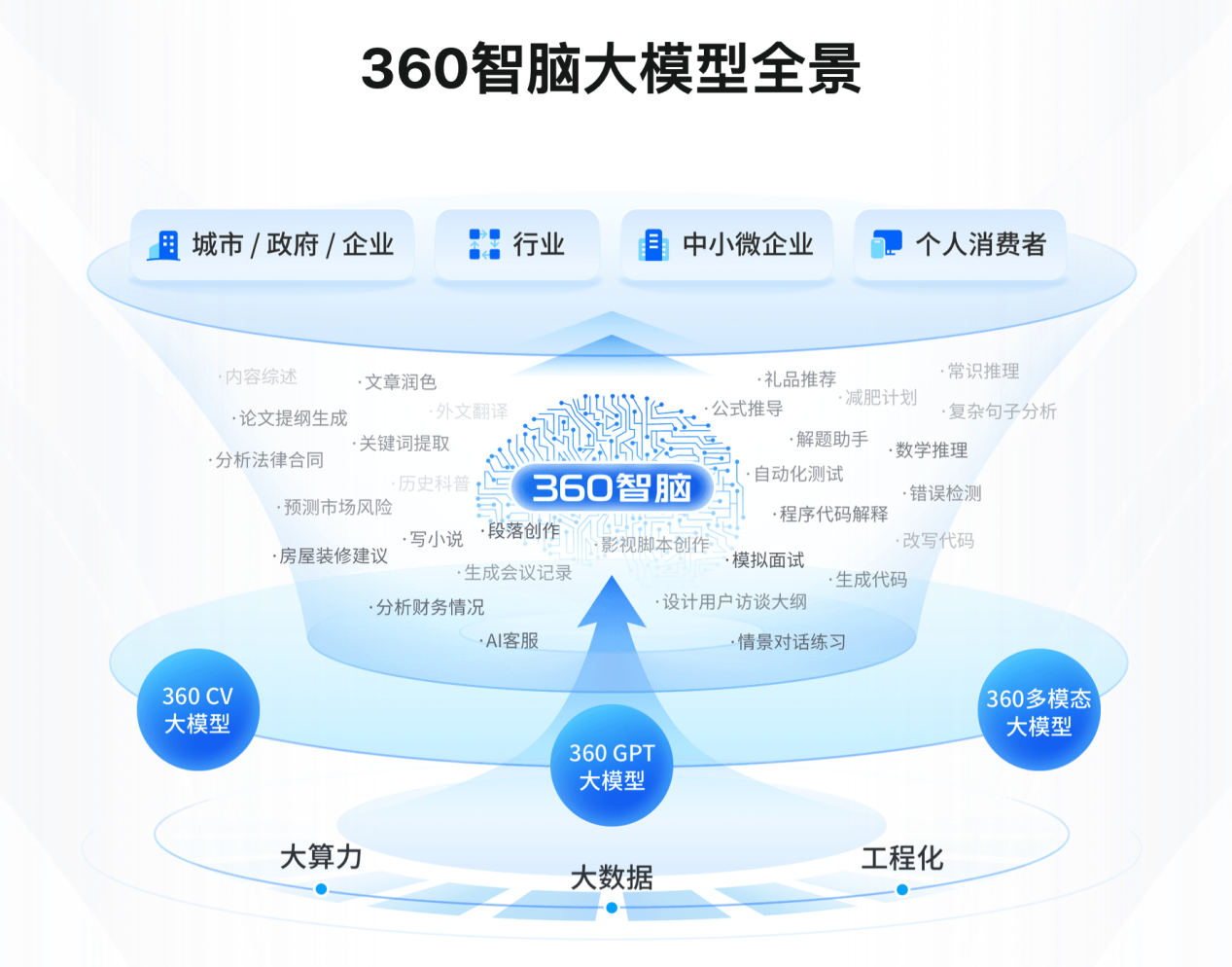

360 recently open-sourced 360 Intelligent Brain 7B (360Zhinao-7B), a large model with 7 billion parameters. It was trained on a corpus of 3.4 trillion tokens, mainly Chinese, English, and code. The release covers three context lengths: 4K, 32K, and 360K. 360 said that 360K (about 500,000 words) is the longest context length among current domestic (Chinese) open source models.

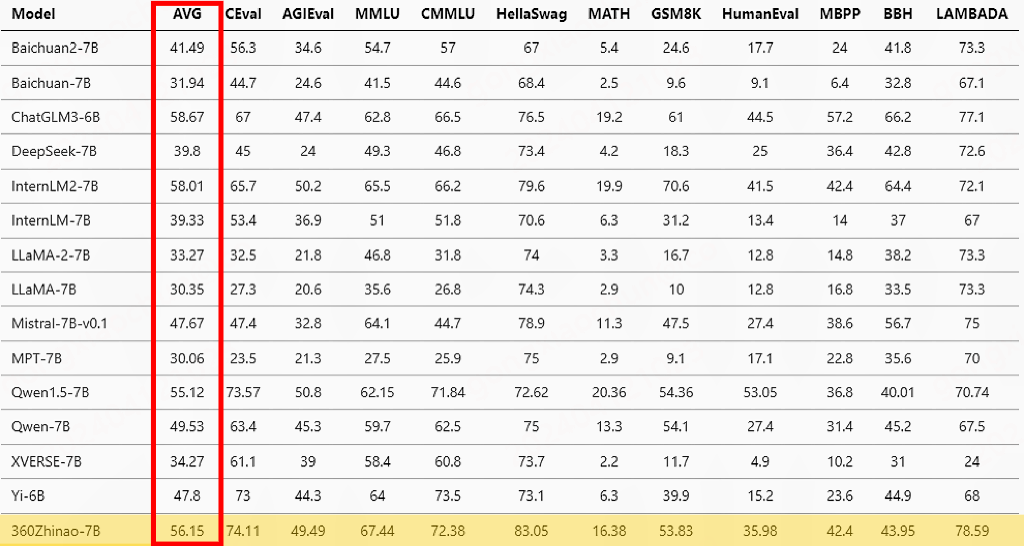

360 said it verified the model's performance on the mainstream OpenCompass evaluation datasets: C-Eval, AGIEval, MMLU, CMMLU, HellaSwag, MATH, GSM8K, HumanEval, MBPP, BBH, and LAMBADA. The capabilities examined include natural language understanding, knowledge, mathematical calculation and reasoning, code generation, and logical reasoning. The 360 model ranked first on four of these datasets and third on average.

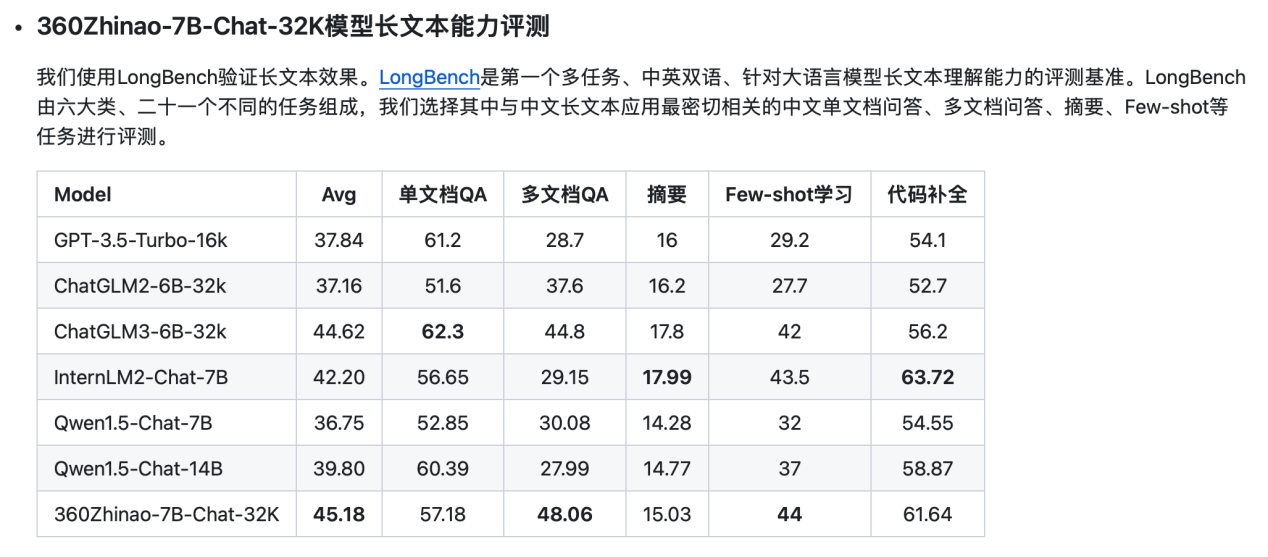

LongBench is a multi-task, bilingual (Chinese and English) benchmark for evaluating the long-text comprehension of large language models. From it, 360 selected the tasks most closely related to Chinese long-text applications: Chinese single-document question answering, multi-document question answering, summarization, and few-shot tasks. The 360Zhinao-7B-Chat-32K model achieved the highest average score.

The NeedleInAHaystack test inserts a piece of key information at different positions in a long text and then asks questions about it, in order to measure a large model's long-text ability. On the English version of the test, 360Zhinao-7B-Chat-360K achieved an accuracy of more than 98%. 360 also constructed a Chinese NeedleInAHaystack test based on the SuperCLUE-200K evaluation benchmark, on which the model likewise achieved an accuracy of more than 98%.
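To make the idea concrete, the following is a minimal Python sketch of a needle-in-a-haystack sweep. It is not 360's evaluation code: the function names (`build_haystack_prompt`, `run_sweep`), the substring-based scoring, and the `ask_model` callback are all assumptions made for illustration, and a real evaluation would call an actual model and use far longer filler text.

```python
from typing import Callable, Sequence


def build_haystack_prompt(filler: str, needle: str, depth: float, question: str) -> str:
    """Insert `needle` into `filler` at a relative depth (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    cut = int(len(filler) * depth)
    context = (filler[:cut] + " " + needle + " " + filler[cut:]).strip()
    return f"{context}\n\nQuestion: {question}\nAnswer:"


def run_sweep(
    ask_model: Callable[[str], str],  # hypothetical: maps a prompt to the model's answer
    filler: str,
    needle: str,
    question: str,
    expected: str,
    depths: Sequence[float],
) -> float:
    """Return the fraction of insertion depths at which the answer contains `expected`."""
    hits = 0
    for depth in depths:
        prompt = build_haystack_prompt(filler, needle, depth, question)
        answer = ask_model(prompt)
        # Simplified scoring (an assumption): case-insensitive substring match.
        if expected.lower() in answer.lower():
            hits += 1
    return hits / len(depths)


def stub_model(prompt: str) -> str:
    """Stand-in for a real model: 'retrieves' the needle by plain string search."""
    return "42" if "magic number is 42" in prompt else "unknown"


if __name__ == "__main__":
    filler_text = "The sky was clear and the market was quiet. " * 200
    accuracy = run_sweep(
        stub_model,
        filler=filler_text,
        needle="The magic number is 42.",
        question="What is the magic number?",
        expected="42",
        depths=[0.0, 0.25, 0.5, 0.75, 1.0],
    )
    print(f"accuracy: {accuracy:.0%}")
```

Sweeping the insertion depth is the point of the test: a model may retrieve facts near the start or end of the context more reliably than facts placed in the middle. Published versions of the test typically vary the total context length as well as the depth.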

In addition to the model weights, the fine-tuning code, inference code, and a full set of tools are also open source, so that large model developers can use them "out of the box".

Zhou Hongyi has said that the text length supported across the large model industry will soon reach 1 million words. "We plan to open source this capability, so there is no need for everyone to reinvent the wheel. The 360K is mainly for the sake of reputation." He also called himself a "believer in open source" who believes in its power.