Integrating large language models into programming to handle code generation and completion has become a major trend. A number of eye-catching large code models have emerged in the industry, such as OpenAI's Codex, Google DeepMind's AlphaCode, and Hugging Face's StarCoder. They help programmers complete coding tasks faster, more accurately, and with higher quality, greatly improving efficiency.

One research and development team began exploring deep learning for software development ten years ago and has become a global leader in both code understanding and code generation: the aiXcoder team from the Institute of Software Engineering at Peking University (hereafter the aiXcoder team), which has brought developers new and efficient coding tools.

On April 9, the team open-sourced its fully self-developed aiXcoder7B code model. On code generation and completion tasks it is significantly ahead of code models of the same size, and even of 15B- and 34B-parameter code models. With its distinctive advantages in personalized training, private deployment, and customized development, it is also positioned as the large code model best suited to enterprise applications and personalized development needs.

All model parameters and inference code for aiXcoder7B have been open-sourced and are available on platforms such as GitHub, Hugging Face, Gitee, and GitLink.

As the saying goes, what you hear may be false, but seeing is believing: everything still has to be confirmed by real evaluation data and actual task results.

Leap-level experience

Can do what other code models can't

Whether a large code model is actually useful can be verified on the tasks that programmers use most and benefit from most: code generation and completion.

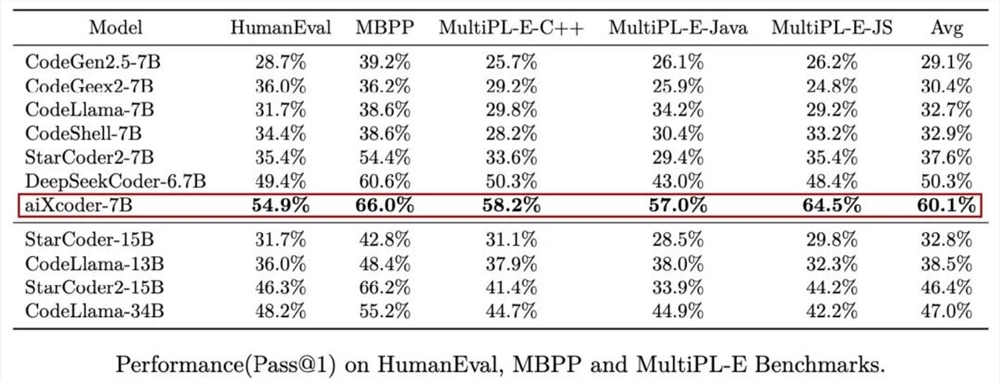

Let's first look at the code generation comparison. On code generation test sets such as OpenAI HumanEval (164 Python programming problems), Google MBPP (974 Python programming problems), and HuggingFace MultiPL-E (covering 18 programming languages), the accuracy of aiXcoder7B far exceeds that of current mainstream large code models, making it the strongest among models with billions or even tens of billions of parameters.
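Benchmarks such as HumanEval and MBPP are usually scored by functional correctness: a generated solution counts only if it passes the problem's unit tests, and results are commonly reported as pass@k. The article does not say which k or sampling settings were used, so the following is only a minimal sketch of the standard unbiased pass@k estimator. The function names and sample numbers are illustrative assumptions.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem.

    n: samples generated, c: samples that passed the tests,
    k: number of samples the user is assumed to draw.
    Returns the probability that at least one of k samples drawn
    without replacement from the n is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; results holds (n, c) per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With one problem solved by 3 of 10 samples and another solved by all 10, `benchmark_score([(10, 3), (10, 10)], k=1)` averages the two per-problem probabilities.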

In addition to achieving new SOTA accuracy on test sets such as HumanEval, which lean away from real development scenarios, aiXcoder7B performs even more remarkably in real development scenarios such as code completion. Examples include completing the code that follows what has already been written, or referencing methods, functions, and classes already defined in other files.

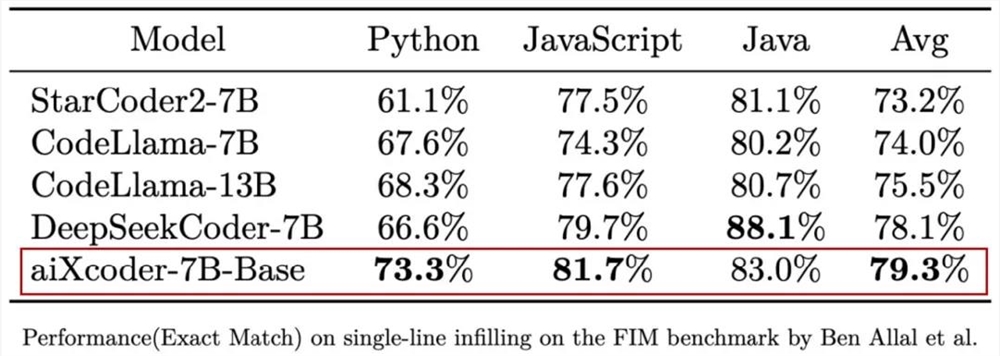

Letting the data speak: on SantaCoder, a context-aware single-line completion test, aiXcoder7B Base achieved the best result (reported as 10.1%) compared with mainstream open-source models of the same class such as StarCoder2, CodeLlama7B/13B, and DeepSeekCoder7B, making it the base model best suited to code completion in real programming scenarios. The details are shown in the following table:

aiXcoder7B Base has the best completion results. Not only is its accuracy higher; in actual use it also shows capabilities that other large code models lack or handle less well. Behind all this is a series of innovative training methods tailored to the characteristics of code, which is what makes aiXcoder7B Base stand out.

First, aiXcoder7B Base was pre-trained with a context length of 32k, the largest among existing 7B-parameter code models, most of which use 16k. Moreover, the context length can be extended directly to 256k at inference time through interpolation, and in theory it can be extended even further.
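The article says only that the context is extended "through interpolation". One common way to do this, assumed here purely for illustration, is linear position interpolation on rotary position embeddings (RoPE): positions in the longer window are scaled down so that they fall inside the range seen during pre-training. The sketch below uses the 32k and 256k figures from the text. The function names, head dimension, and RoPE base are hypothetical and are not taken from the aiXcoder code.

```python
TRAIN_CONTEXT = 32 * 1024    # pre-training context length cited in the article
TARGET_CONTEXT = 256 * 1024  # extended inference length cited in the article


def interpolated_position(pos: int,
                          train_len: int = TRAIN_CONTEXT,
                          target_len: int = TARGET_CONTEXT) -> float:
    """Map a position in the extended window into the trained range."""
    scale = min(1.0, train_len / target_len)  # 0.125 for 32k -> 256k
    return pos * scale


def rope_angles(pos: float, head_dim: int = 8,
                base: float = 10000.0) -> list[float]:
    """Rotation angles for one position: pos * base^(-2i / head_dim)."""
    return [pos * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


# The last token of a 256k window is rotated as if it sat just inside 32k.
last = interpolated_position(TARGET_CONTEXT - 1)
angles = rope_angles(last)
```

The design point is that the model never sees a rotation angle larger than those it met in training; the cost is that neighboring positions become harder to tell apart, which is why such schemes are often paired with a small amount of fine-tuning.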

The largest pre-training context length among models of the same size, together with flexible extension, is an important basis for aiXcoder7B Base's improved code completion results.

Second, aiXcoder7B Base "knows" when the user needs code to be generated, and stops automatically once the code is complete and nothing more needs to be generated. This is a distinctive feature of the model that many other large code models cannot match.

The aiXcoder team said that this capability is inseparable from structured span technology. During training, structured spans are used to build the training data and related tasks, so that the model learns when code should be generated for the user and whether the completed content is complete in its syntactic and semantic structure.
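The article does not describe how structured spans are built. As a rough illustration of the idea, the sketch below uses Python's standard `ast` module to pick complete syntactic nodes and turn each into a fill-in example whose target is exactly one well-formed unit. The function names, the chosen node types, and the prefix/middle/suffix layout are assumptions made for this example, not the team's actual pipeline.

```python
import ast


def _offset(lines: list[str], lineno: int, col: int) -> int:
    """Convert a 1-based line and 0-based column into a string offset."""
    return sum(len(line) for line in lines[: lineno - 1]) + col


def structured_spans(source: str,
                     node_types=(ast.Return, ast.If, ast.For, ast.Call)):
    """Yield fill-in examples whose target span is a whole AST node.

    Assumes ASCII source: ast column offsets are UTF-8 byte offsets,
    which equal character offsets only for single-byte characters.
    """
    lines = source.splitlines(keepends=True)
    examples = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, node_types):
            start = _offset(lines, node.lineno, node.col_offset)
            end = _offset(lines, node.end_lineno, node.end_col_offset)
            examples.append({
                "prefix": source[:start],     # code before the span
                "middle": source[start:end],  # the span the model must produce
                "suffix": source[end:],       # code after the span
            })
    return examples


code = "def f(x):\n    if x > 0:\n        return x\n    return -x\n"
for example in structured_spans(code):
    print(repr(example["middle"]))
```

Because every target ends exactly where a syntactic unit ends, a model trained on such examples sees many cases in which the right action after a complete statement or block is to stop, which is the behavior the team attributes to this technique.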

This means that aiXcoder7B Base automatically "knows" how far its generation has progressed, whereas other models need a manually set termination condition in order to stop. Stopping automatically removes that hassle and helps improve work efficiency.

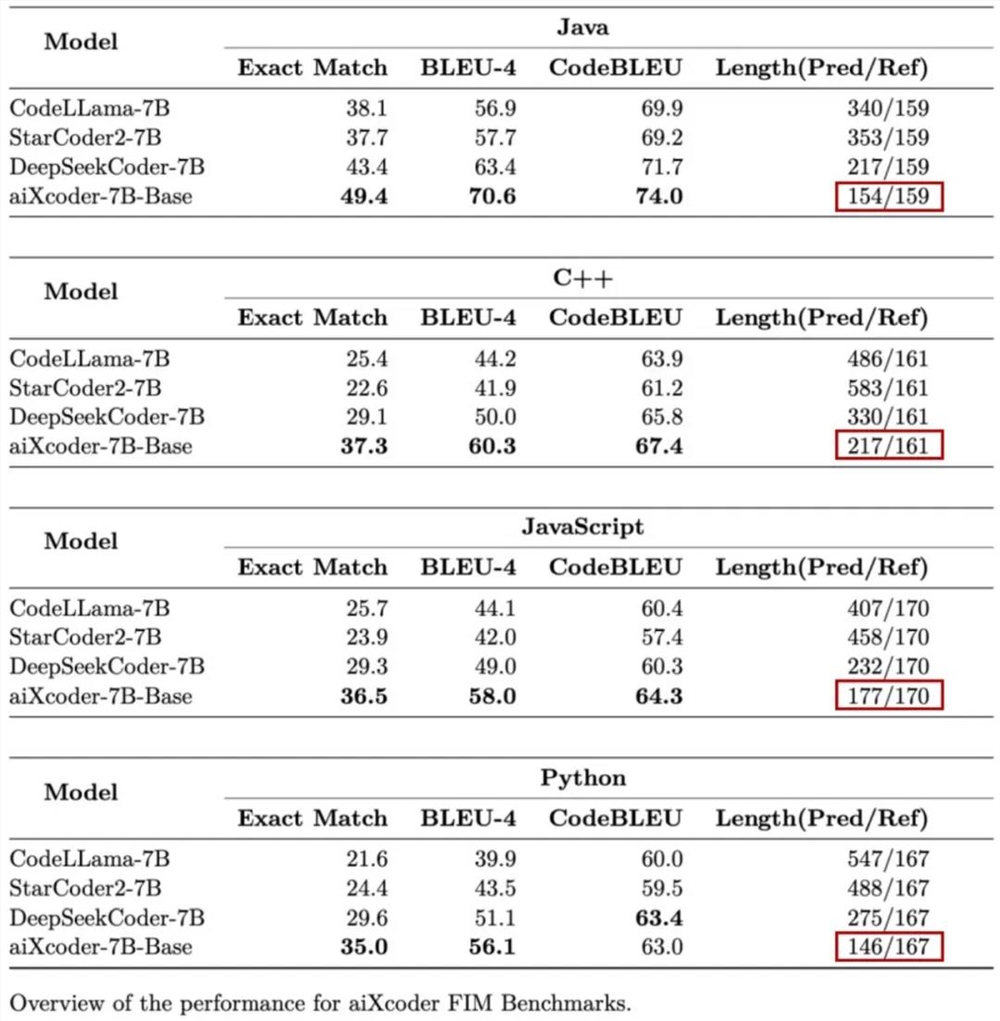

In addition, on the aiXcoder extended benchmark test set (aiXcoder Bench), aiXcoder7B Base shows another highlight compared with other code models: it tends to use shorter code to accomplish the task the user specifies.

The detailed results are shown in the table below. In code completion evaluations for Java, C++, JavaScript, and Python, aiXcoder7B Base is not only the most effective; the lengths of its generated answers (marked with red boxes) are also significantly shorter than those of competing models, and are very close to, or sometimes even shorter than, the reference answer (Ref).

The aiXcoder team pointed out that this finding, made after the fact, is again inseparable from structured span technology. They paid special attention to code structure throughout training, and structured spans split the code along its structure, which better reflects the semantics of the code and ultimately leads the model to give "short" answers while still achieving better results.

Beyond its excellent performance on the single-file completion tasks above, aiXcoder7B Base also performs better in multi-file completion scenarios. It not only achieves the best multi-file programming results, but also led to an important finding on the CrossCodeEval evaluation set shown in the table below.

According to the aiXcoder team, this model's prompt contains only what is retrieved using the cursor context (that is, only the code currently being written), while the other models' prompts contain what is retrieved using the ground truth (that is, those models are given the files that contain the answers). Even under this condition, the former still outperforms the latter.

How is this done? Other models, even when given more context, cannot work out which parts are the most essential. aiXcoder7B Base, by contrast, can pick out from the surrounding context the details that are most effective and most relevant to the current code, which is why it performs well.

The key is identifying which information is the most effective and critical. The context is processed further: clustering of related file content is combined with the code call graph to model the mutual attention relationships among multiple files, which yields the information most critical to the current completion or generation task.
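The article gives no implementation details for this selection step. As a much-simplified stand-in, the sketch below ranks candidate functions from other files by identifier overlap with the cursor context (a crude proxy for content clustering) and adds a bonus when the cursor context mentions the function by name (a crude proxy for a call-graph edge). All names, the scoring weights, and the sample data are hypothetical.

```python
import re

IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def identifiers(code: str) -> set[str]:
    """All identifier-like tokens in a piece of code."""
    return set(IDENT.findall(code))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two identifier sets, between 0 and 1."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_context(cursor_context: str, candidates: dict[str, str],
                 top_k: int = 2) -> list[str]:
    """Return the names of the top_k candidates most relevant to the cursor.

    candidates maps a function name to its source text in another file.
    """
    cursor_ids = identifiers(cursor_context)
    scored = []
    for name, body in candidates.items():
        score = jaccard(cursor_ids, identifiers(body))
        if name in cursor_ids:  # the cursor context refers to this function
            score += 1.0
        scored.append((score, name))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in scored[:top_k]]


other_files = {
    "min_cost": "def min_cost(a, b):\n    return min(a, b)\n",
    "load_config": "def load_config(path):\n    return open(path).read()\n",
    "row_sum": "def row_sum(row):\n    return sum(row)\n",
}
print(rank_context("total = min_cost(row, col) + ", other_files))
```

Only the selected snippets would then be placed in the prompt, which keeps the prompt short and focused instead of filling it with every retrievable file.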

All these innovative training methods largely explain why aiXcoder7B Base comes out ahead among so many large code models. The 1.2T of high-quality training data also contributed greatly: this volume is not only the largest among models of the same type, it also consists entirely of unique tokens.

Of this, 600G of high-quality data was given priority in training and played an important role in the model's results. The rest came mainly from GitHub, Stack Overflow, Gitee, and similar sources; the natural language portion also included some CSDN data, and all of the data was filtered.

Talk is cheap. Show me the code

Clearly, aiXcoder7B Base beats other large code models on evaluation data. But can it really help developers complete coding tasks efficiently? That still depends on actual results.

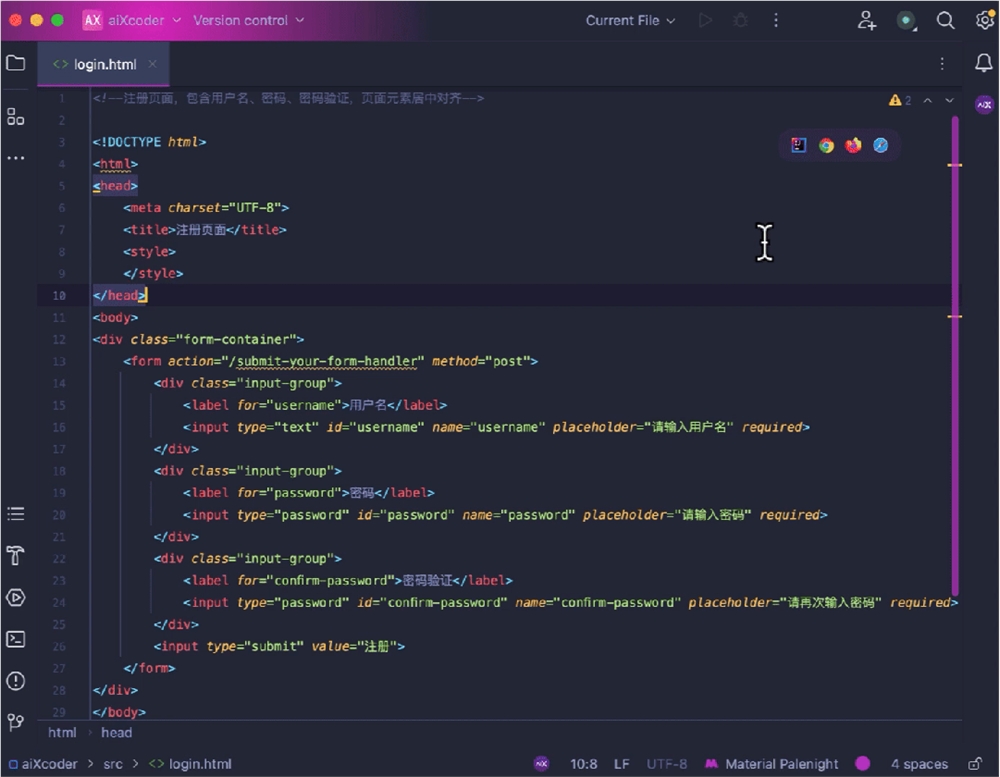

First, generation capability. For front-end development, aiXcoder7B Base can quickly generate the corresponding web page from comments:

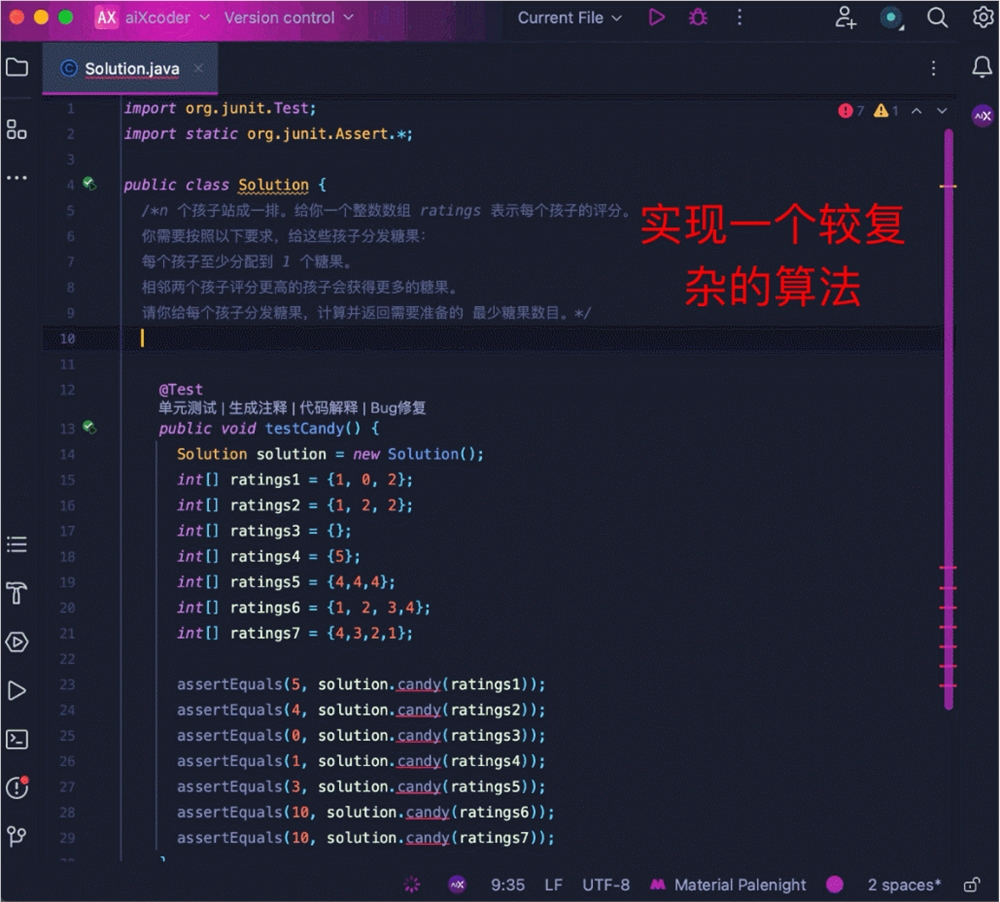

It can also handle difficult algorithmic problems, such as the classic candy distribution problem, where a greedy strategy obtains the minimum number of candies in two passes, one from left to right and one from right to left.

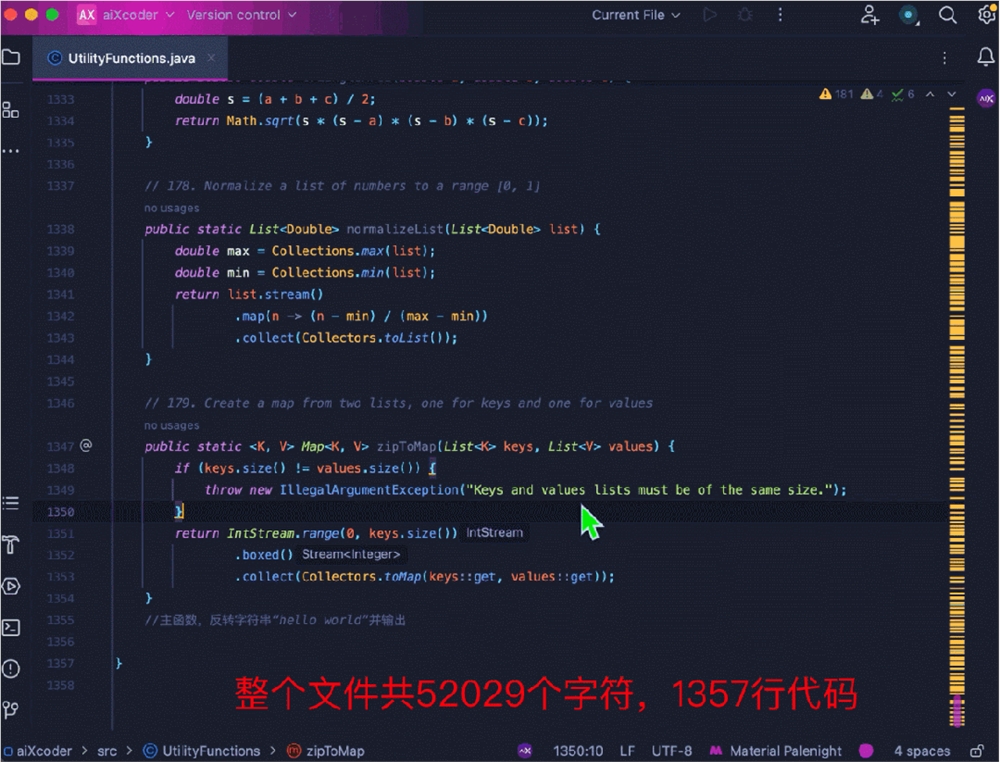
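For readers unfamiliar with the problem, the two-pass greedy strategy can be sketched as follows. This is an independent illustration, not the model output shown in the image, and it assumes the standard formulation: every child gets at least one candy, and a child with a higher rating than an adjacent child gets more candies than that neighbor.

```python
def min_candies(ratings: list[int]) -> int:
    """Minimum total candies under the neighbor-rating constraint."""
    n = len(ratings)
    if n == 0:
        return 0
    candies = [1] * n  # everyone starts with one candy

    # Left-to-right pass: satisfy the constraint against the left neighbor.
    for i in range(1, n):
        if ratings[i] > ratings[i - 1]:
            candies[i] = candies[i - 1] + 1

    # Right-to-left pass: satisfy the constraint against the right neighbor
    # without breaking what the first pass established.
    for i in range(n - 2, -1, -1):
        if ratings[i] > ratings[i + 1]:
            candies[i] = max(candies[i], candies[i + 1] + 1)

    return sum(candies)


print(min_candies([1, 0, 2]))  # 5, distributed as 2 + 1 + 2
```

Each pass enforces one direction of the constraint, and taking the maximum in the second pass keeps both directions satisfied with the fewest candies.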

Next, a scenario where aiXcoder7B Base is even more at home: code completion, starting with long-context completion. Here, multiple utility functions make up more than 1,500 lines of code, and the model is asked to complete code from a comment at the end of the file. The model recognizes the relevant functions at the top of the file and successfully completes the method using that function information:

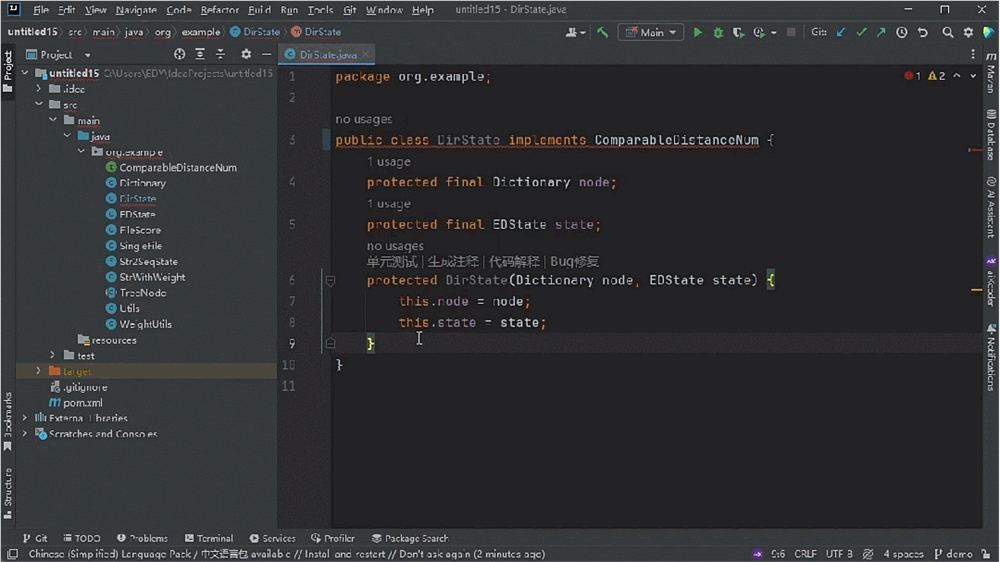

There is also the following cross-file completion task, which applies dynamic programming over a tree structure to implement edit-distance search. The model's completion recognizes the relationship between the edit-distance calculation and the minimum-value calculation inside the rolling array in another file, and gives the correct prediction:

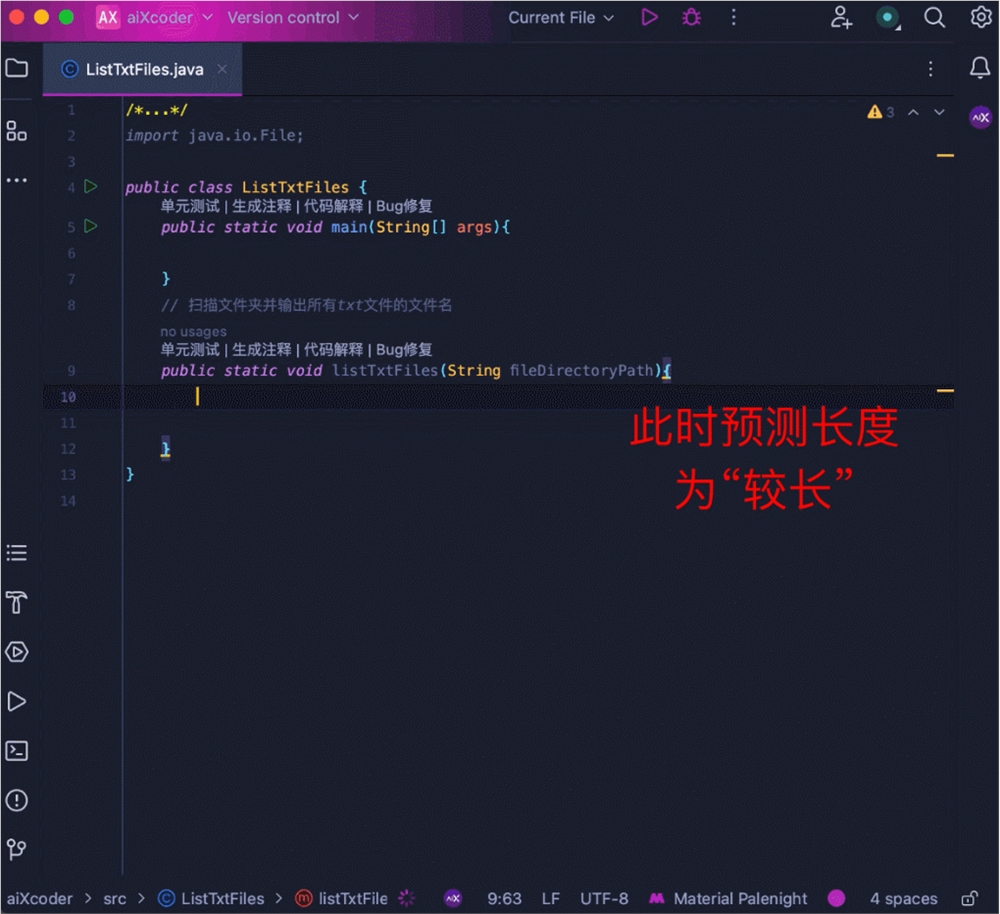
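The code in the figure is not reproduced in the text. To make the "rolling array" idea concrete, here is a minimal sketch of plain Levenshtein edit distance that keeps only the previous and current rows of the dynamic-programming table. It illustrates the general technique on two strings and is not the tree-structured search from the example; the function name is hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between a and b using a rolling array."""
    if len(a) < len(b):
        a, b = b, a  # keep the rolling row as short as possible

    # previous[j] is the distance between the processed prefix of a and b[:j].
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]  # turning a[:i] into the empty string takes i deletions
        for j, ch_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                   # delete ch_a
                current[j - 1] + 1,                # insert ch_b
                previous[j - 1] + (ch_a != ch_b),  # substitute, or free match
            ))
        previous = current  # roll the array forward
    return previous[-1]


print(edit_distance("kitten", "sitting"))  # 3
```

The `min` over three neighbors is the "minimum value inside the rolling array" that a cross-file completion would need to connect back to the edit-distance recurrence.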

Of course, the output length can also be matched intelligently. When the user changes how much of a suggestion they accept, the model automatically adjusts the completion length to match.

In short, with the aiXcoder7B Base code model, programmers gain another tool that improves efficiency and saves time, for both code generation and completion.

Competing on results, and more importantly on application

Using core strengths to become the first choice for enterprises

We have seen that aiXcoder7B Base fully demonstrates its strength in real development scenarios such as code completion. For enterprise customers, however, strong model performance alone is not enough. Only when their needs are fully met will they adopt a model without hesitation.

aiXcoder7B Base is designed around enterprise needs and their personalized requirements. Building the large code model best suited to enterprise applications is its primary goal, and another core advantage that distinguishes it from other models.

Of course, becoming the best choice for enterprise applications is not easy. It requires in-depth study of each enterprise's business scenarios, needs, and affordable costs. The aiXcoder model not only does this, but takes it to the extreme.

In short, to put enterprise-level personalized applications into practice, the aiXcoder model focuses on three areas: private deployment, personalized training, and customized development. Together these form its core advantages over other large code models.

First, private deployment. For enterprises, the first consideration in deploying and running a large code model privately on local servers is whether their own computing power can support it. Here the aiXcoder model requires fewer enterprise GPU resources and a lower application cost, which greatly lowers the threshold for model deployment.

In addition, enterprises differ in their software and hardware, which range from domestic chips to foreign graphics cards such as NVIDIA's. The aiXcoder model therefore undergoes targeted hardware adaptation and further training and inference optimization, such as high-speed caching at the information-processing level, to meet diverse deployment requirements.

Second, the aiXcoder model emphasizes personalized training. Every enterprise's situation is different, but the aiXcoder model can respond to each one and provide a complete package of personalized model training solutions.

On the one hand, enterprise-specific datasets and evaluation sets are built. The datasets are built from the enterprise's code characteristics and its employees' coding habits, with dedicated preprocessing of the code and related documents. The evaluation sets are built from real development scenarios to simulate and assess the model's expected results in actual use.

On the other hand, the internal factor of enterprise code is combined with the external factor of enterprise computing resources. Taking full account of how much computing power and code each enterprise has, the team provides flexible personalized training and optimization solutions that maximize both the initial training results and the later application results of the dedicated large code model.

Third, customized development. The aiXcoder model targets the actual conditions of different industries and enterprises and provides flexible customized services based on each enterprise's personalized needs. Backed by rich and mature experience in customized development, the models built on enterprise code and computing resources closely match actual needs, making gains in business efficiency visible and tangible. Customers now span multiple industries, including banking, securities, insurance, military industry, telecom operators, energy, and transportation.

Compared with other large code models, then, aiXcoder can offer enterprises both personalized training products and the accompanying services, and it is the only one in the industry to do so.

The team behind

Ten years of hard work and great success

The coding capabilities demonstrated by the aiXcoder7B Base version of the code model make us more curious about the team behind the model.

It is understood that the aiXcoder team started code analysis research based on deep learning in 2013 and is the first team in the world to apply deep learning technology to the field of code generation and code understanding.

Over the past ten years, the team has published more than 100 related papers at top conferences such as NeurIPS, ACL, IJCAI, ICSE, FSE, and ASE. Many of these papers have been considered "first-of-its-kind" by international scholars and have been widely cited, winning the ACM Outstanding Paper Award many times.

In 2017, aiXcoder had a prototype, and in 2018, aiXcoder 1.0 was officially released, providing code auto-completion and search functions.

In April 2021, the team launched aiXcoder L, a billion-parameter code model with fully independent intellectual property rights that supports code completion and natural language recommendations. It was also China's first commercial intelligent programming product based on a large model.

After that, the team kept up its work and launched aiXcoder XL, China's first model to support method-level code generation, again with fully independent intellectual property rights.

In August 2023, aiXcoder Europa, which focuses on enterprise adaptation, was launched. It provides enterprises with private deployment and personalized training services according to their data security and computing power requirements, effectively lowering the threshold for applying large code models and improving development efficiency.

This time, the aiXcoder team has open-sourced aiXcoder7B Base, bringing a proven new code model to everyone. On the one hand, the model's leap in capability in real development scenarios such as code completion at a 7B parameter scale, together with its several core strengths in enterprise adaptation, will help drive the overall progress of the code model industry. On the other hand, the model will have a profound impact on software development automation more broadly, accelerating that process while helping all industries keep improving business efficiency and transforming how they work.

Moreover, compared with general-purpose models that also have coding capabilities, aiXcoder7B Base shows the all-round advantages of dedicated large code models: lower training and inference costs, lower enterprise deployment costs, and better, more stable results on enterprise project-level code.

To date, aiXcoder has served a large number of leading customers in banking, securities, insurance, military industry, high-tech, telecom operators, energy, transportation, and other industries, with a particularly deep focus on the financial sector. Among these engagements, the "Application Practice of Code Big Model in the Securities Industry" project with a well-known securities firm won honors including the 2023 AIIA Top Ten Potential Application Cases of Artificial Intelligence and the China Academy of Information and Communications Technology AI4SE Silver Bullet Outstanding Case.

At the same time, aiXcoder has been sought after by the capital market for its forward-looking research direction and down-to-earth implementation. Industry-leading funds such as Hillhouse, Qingliu, and Binfu have invested in the aiXcoder team, enabling it to grow rapidly.

Obviously, the aiXcoder team is ready for the future AIGC competition.

aiXcoder open source links:

https://github.com/aixcoder-plugin/aiXcoder-7B

https://gitee.com/aixcoder-model/aixcoder-7b

https://www.gitlink.org.cn/aixcoder/aixcoder-7b-model

https://wisemodel.cn/codes/aiXcoder/aiXcoder-7b