Meta Platforms released the latest version of its Meta Training and Inference Accelerator (MTIA) on the 10th. MTIA is a series of chips custom-designed by Meta for AI workloads.

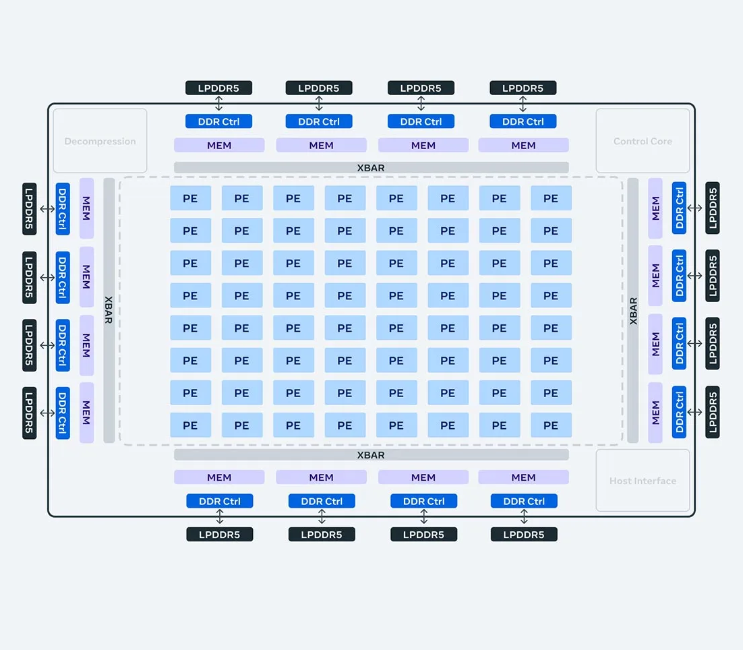

The new generation of MTIA reportedly delivers significantly better performance than the first generation and helps strengthen Meta's content ranking and recommendation ad models. Its architecture is fundamentally focused on providing the right balance of compute, memory bandwidth, and memory capacity.

The chip can also help improve training efficiency and make inference (i.e. actual reasoning tasks) easier. Meta said in its official blog post, "Achieving our ambitions for custom chips means investing not only in computing chips, but also in memory bandwidth, networking and capacity, and other next-generation hardware systems."

Currently, MTIA mainly trains ranking and recommendation algorithms, but Meta said the goal is to eventually expand the chip's capabilities to training generative AI, such as its Llama language models.

The new generation of MTIA chips is said to be "fundamentally" focused on providing the right balance between compute, memory bandwidth, and memory capacity. The chip is manufactured on TSMC's 5nm process and has 256MB of on-chip memory and a clock speed of 1.35GHz, a significant improvement over the first-generation product's 128MB and 800MHz.
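As a rough illustration of the size of that jump, the short Python sketch below computes the generation-over-generation ratios from the figures quoted above. The variable names are illustrative only, and the ratios describe raw specifications, not measured performance.

```python
# Spec figures as quoted in the article (on-chip memory in MB, clock in MHz).
first_gen = {"on_chip_memory_mb": 128, "clock_mhz": 800}
new_gen = {"on_chip_memory_mb": 256, "clock_mhz": 1350}

# Ratio of new-generation to first-generation values for each spec.
ratios = {key: new_gen[key] / first_gen[key] for key in first_gen}

for key, ratio in ratios.items():
    print(f"{key}: {ratio:.2f}x")
```

On these numbers, on-chip memory doubles and the clock speed rises by roughly 1.7x.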

Early results from Meta's testing show that, across the four models the company evaluated, the new chip delivers three times the performance of the first generation.

The chip has been deployed in data centers and provides services for AI applications.