Although Apple has not introduced any AI models since the start of the generative AI boom, the company is working on a number of AI projects. Last week, Apple researchers shared a paper revealing a new language model the company is working on, and inside sources say Apple is working on two AI-powered robots.

Now, the release of yet another research paper shows that Apple is just getting started. On Monday, Apple researchers published a research paper describing Ferret-UI, a new Multimodal Large Language Model (MLLM) that understands mobile user interface (UI) screens.

MLLMs differ from standard LLMs in that they go beyond text and can also understand multimodal inputs such as images and audio. In this case, Ferret-UI was trained to recognize different elements of the user's home screen, such as application icons and small text. In the past, recognizing application screen elements has been challenging for MLLMs because of the small size of these elements. To overcome this problem, the research paper notes that the researchers added "any resolution" on top of Ferret, allowing it to zoom in on details on the screen.

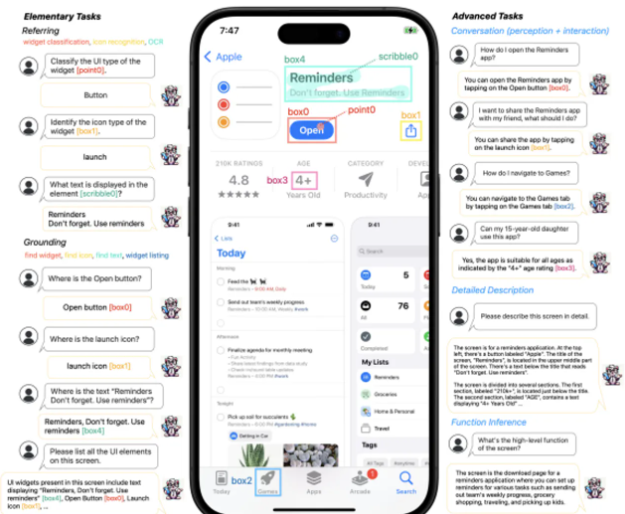

Building on that, Apple's MLLM also has "referring, grounding, and reasoning capabilities," which allow Ferret-UI to fully understand the UI screen and perform tasks based on the screen's content, as shown in the image above.

Apple researchers compared Ferret-UI to GPT-4V, OpenAI's MLLM, across public benchmarks, basic tasks, and advanced tasks. Among the basic tasks, including icon recognition, OCR, widget categorization, find icon, and find widget tasks on iPhone and Android, Ferret-UI outperforms GPT-4V on almost all of them. The only exception is the "find text" task on the iPhone, where GPT-4V slightly outperforms Ferret-UI.

When it comes to grounding conversations on UI findings, GPT-4V came out slightly ahead, outperforming Ferret-UI 93.4% to 91.7%. However, the researchers noted that Ferret-UI's performance is still "noteworthy" because it generates raw coordinates rather than choosing from a set of predefined boxes, as GPT-4V does.

The paper does not mention how Apple plans to use the technology, or whether it will at all. Instead, the researchers state more broadly that the advanced features of Ferret-UI are expected to positively impact UI-related applications. One possible use is enhancing Siri: thanks to the model's comprehensive understanding of the user's application screen and its ability to perform certain tasks, Ferret-UI could enable Siri to carry out tasks for the user.