On April 7, Alibaba Cloud's Tongyi Qianwen open-sourced Qwen1.5-32B, a 32-billion-parameter model. IT Home noted that Tongyi Qianwen had previously open-sourced six large language models, with 500 million, 1.8 billion, 4 billion, 7 billion, 14 billion, and 72 billion parameters.

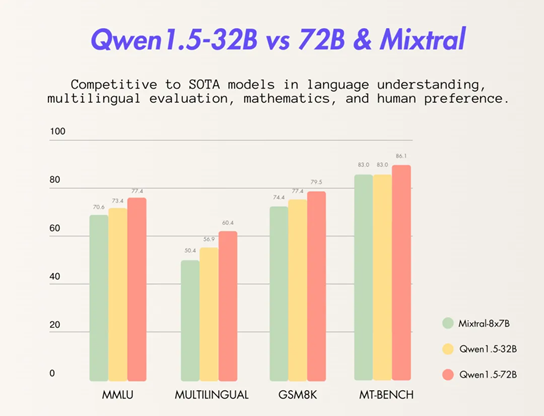

The newly open-sourced 32-billion-parameter model is intended to strike a better balance among performance, efficiency, and memory usage. For example, compared with Tongyi Qianwen's open-source 14B model, the 32B model is more capable in agent scenarios; compared with the open-source 72B model, it has a lower inference cost. The Tongyi Qianwen team hopes the 32B model will give enterprises and developers a more cost-effective option.

To date, Tongyi Qianwen has open-sourced seven large language models, and cumulative downloads across open-source communities in China and abroad have exceeded 3 million.