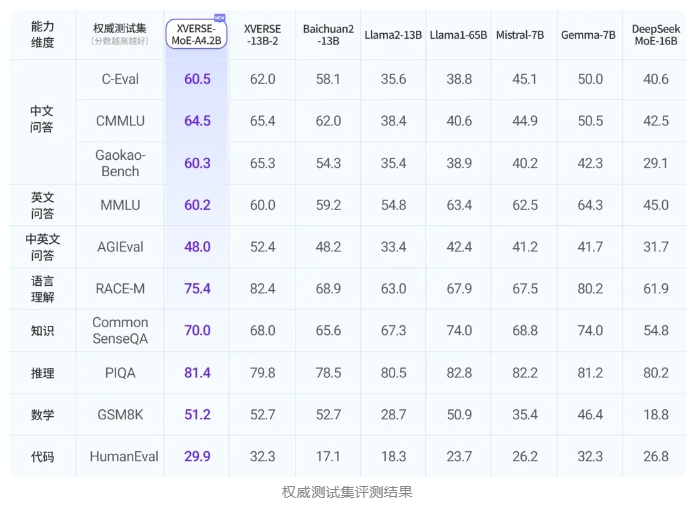

Yuanxiang has released the XVERSE-MoE-A4.2B large model. It uses a Mixture of Experts (MoE) architecture with 4.2B activated parameters, and its performance is comparable to that of a 13B model. The model is open source and free for commercial use, so small and medium-sized enterprises, researchers, and developers can use it for low-cost deployment.

The model has two major advantages: extreme compression and exceptional performance. It relies on sparse activation, and its results exceed those of many top models in the industry and approach those of much larger models. Yuanxiang's MoE technology is developed in-house and includes efficient fused operators, a fine-grained expert design, and a load-balancing loss term. The final architecture adopts the configuration from Experiment 4.
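To make sparse activation and the load-balancing loss term concrete, here is a minimal sketch in plain Python. It is not Yuanxiang's implementation: it assumes a generic top-k router and a commonly used auxiliary loss (fraction of tokens routed to each expert multiplied by that expert's mean router probability). The function names `route_top_k` and `load_balancing_loss` are hypothetical and chosen only for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(token_logits, k):
    """Pick the k highest-scoring experts per token (sparse activation).

    Returns (router probabilities per token, {expert: weight} per token),
    where the weights of the selected experts are renormalized to sum to 1.
    """
    all_probs, assignments = [], []
    for logits in token_logits:
        probs = softmax(logits)
        top = sorted(range(len(probs)), key=lambda e: probs[e], reverse=True)[:k]
        norm = sum(probs[e] for e in top)
        all_probs.append(probs)
        assignments.append({e: probs[e] / norm for e in top})
    return all_probs, assignments


def load_balancing_loss(all_probs, assignments, num_experts, k):
    """Assumed auxiliary loss: num_experts * sum_e(fraction_e * mean_prob_e).

    fraction_e is the share of routing slots given to expert e, and
    mean_prob_e is the average router probability for expert e.
    The value is 1.0 for uniform router probabilities and rises when
    load concentrates on a few experts.
    """
    num_tokens = len(all_probs)
    loss = 0.0
    for e in range(num_experts):
        fraction = sum(1 for a in assignments if e in a) / (num_tokens * k)
        mean_prob = sum(p[e] for p in all_probs) / num_tokens
        loss += fraction * mean_prob
    return num_experts * loss
```

In this sketch only `k` experts run for each token, which is why the activated parameter count can be much smaller than the total. Adding the auxiliary loss to the training objective penalizes routers that send most tokens to the same few experts.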

In commercial applications, Yuanxiang's large models have been deeply integrated with multiple Tencent products, delivering innovative user experiences in culture, entertainment, tourism, and finance.

- Hugging Face: https://huggingface.co/xverse/XVERSE-MoE-A4.2B

- ModelScope: https://modelscope.cn/models/xverse/XVERSE-MoE-A4.2B

- GitHub: https://github.com/xverse-ai/XVERSE-MoE-A4.2B