Ten billion tokens in subsidies, free starting in April!

This time the freebie comes from Infinigence AI, an AI company with Tsinghua roots, and both businesses and individuals can claim it.

The company was founded in May 2023 with the goal of building the best integrated hardware-software computing-power solution for large models.

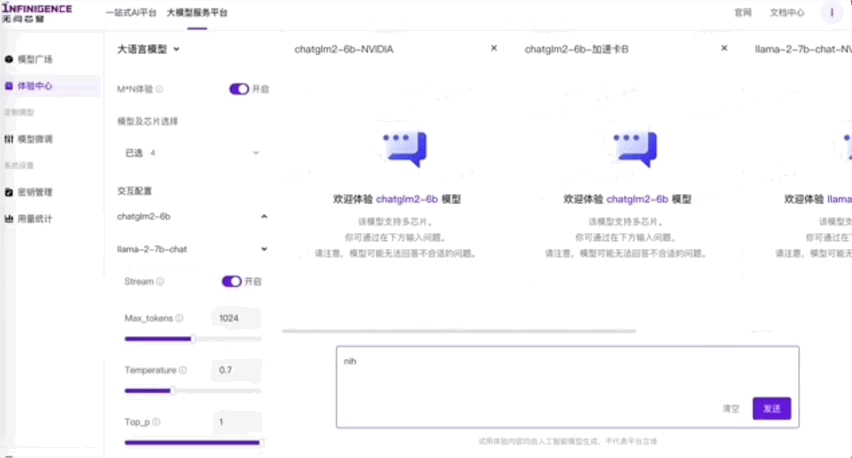

It has just released the Infini-AI large model development and service platform, built on a multi-chip computing-power base, where developers can try out and compare different models and chips.

Image source: AI-generated, licensed via Midjourney.

After the large-model wave took off, some people joked that large models should be calling for "give me use cases" rather than "benefit mankind".

Infinigence AI, however, believes that after the rapid growth of the Internet era, the Chinese market has no shortage of application scenarios.

The real obstacle to deploying large models is the computing-power shortage the industry continues to face.

Calling for "solve computing power" matters more than calling for "give me use cases".

And that is exactly what Infinigence AI is doing.

Letting developers spend little, use good tools, and have ample computing power

Today, Infinigence AI released the Infini-AI large model development and service platform, built on a multi-chip computing-power base.

It also announced that registration is open to everyone as of March 31, with a free quota of 10 billion tokens for all individual and enterprise users who complete real-name registration.

On the platform, developers can try out and compare the capabilities of different models and the performance of different chips.

By simply dragging and dropping parameter controls, they can fine-tune a large model that better fits their business, deploy it on Infini-AI, and then serve it to their users at a very favorable price per thousand tokens.

Infini-AI currently supports more than 20 models, including Baichuan2, ChatGLM2, ChatGLM3, the closed-source ChatGLM3 model, Llama2, Qwen, and the Qwen1.5 series, as well as more than 10 types of compute cards from AMD, Biren, Cambricon, Enflame, Iluvatar CoreX, MetaX, Moore Threads, NVIDIA, and others. It supports joint hardware-software optimization and unified deployment across multiple models and multiple chips.

Models from third-party platforms, or models that users have trained or fine-tuned themselves, can also be migrated to and hosted on Infini-AI seamlessly, with a fine-grained, customized per-token billing plan.

"Our coverage of model brands and chip brands will keep growing, and Infini-AI's cost-effectiveness advantage will become more and more pronounced over time," said Xia Lixue, co-founder and CEO of Infinigence AI. In the future, Infini-AI will also support listing more models and more products from computing-power ecosystem partners, so that more large-model developers can "spend little and use a large pool" and the cost of deploying AI applications keeps falling.

A month ago, Tongdao Liepin released its AI-powered digital human interviewer product in select cities, and more AI features are in the pipeline.

These features run on the elastic computing-power plan provided by Infinigence AI and were fine-tuned from an open-source large model on Infinigence AI's platform.

Compared with other solutions on the market, the result was faster inference and a significantly lower cost of bringing new features online. Xia Lixue said this outcome has given the Infinigence AI team great confidence.

Therefore, in addition to opening registration to everyone, the company has officially launched trial invitations for customers with large computing-power needs, offering more cost-effective computing power and deeper optimization services at the algorithm and hardware levels.

Companies facing computing-power challenges

Enterprises that want to apply large models in mature scenarios may secure computing power but not know how to use it, so they cannot build differentiated products or upgrade their business.

Enterprises that want to build AI-native applications face computing-power costs they can hardly afford, poor toolchains, and an unreasonable ratio of investment to output when launching products.

Companies that train their own models often cannot find or afford the volume of computing power they need as their business expands, and their operating costs are too high.

By the end of 2023, China's total computing power had reached 197 EFLOPS (1.97 × 10^20 floating-point operations per second), ranking second in the world, and it has grown at an average annual rate of nearly 30% over the past five years.
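To make the scale of these figures concrete, here is a small back-of-the-envelope sketch in Python. It converts the 197 EFLOPS figure into plain operations per second and estimates what five years of growth would imply. It assumes the "nearly 30%" rate is exactly 30% and that it compounds annually; both are simplifications for illustration and are not stated in the article.

```python
# Back-of-the-envelope check of the computing-power figures quoted above.
EXA = 10**18  # SI prefix "exa"

total_eflops = 197                 # figure quoted in the article (end of 2023)
total_flops = total_eflops * EXA   # floating-point operations per second

# Assumption: treat "nearly 30%" as exactly 30%, compounded annually for 5 years.
annual_growth = 0.30
years = 5
growth_factor = (1 + annual_growth) ** years

# Implied capacity five years earlier under that assumption (illustrative only).
implied_start_eflops = total_eflops / growth_factor

print(f"Total: {total_flops:.2e} FLOP/s")
print(f"Five-year growth factor: {growth_factor:.2f}x")
print(f"Implied starting point: {implied_start_eflops:.0f} EFLOPS")
```

Under these assumptions the total grows by roughly 3.7 times over five years, which means supply has been expanding quickly even while the industry continues to report shortages.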

With growth like this, why does the industry still find computing power so hard to come by?

The underlying reason is that the rise of the AI industry coincides with the payoff of China's engineering talent dividend, which has accelerated the boom in the country's large-model industry and left the demand side "waiting to be fed". Meanwhile, a large amount of computing power on the market remains unaggregated and underused, and there is no sufficiently systematic "large-model-native" business model to turn that supply into products and services that meet market demand.

A sharp rise in price/performance, driven by multi-chip optimization

"There is a lot of effective computing power on the market that has not been activated. The hardware gap itself is narrowing quickly, but we always run into 'ecosystem problems' when using it," Xia Lixue said. This is because hardware always iterates more slowly than software and costs more, and software developers do not want extra "variables" beyond their own R&D work, so they tend to go straight for chips with a mature ecosystem.

Infinigence AI wants to help every large-model team "control the variables": when using Infinigence AI's computing-power services, users do not need to notice, and will not notice, which brand of hardware sits underneath.

How did Infinigence AI, founded less than a year ago, manage to get performance optimization working across so many compute cards in such a short time?

At the end of 2022, after large models drew widespread public attention, Xia Lixue and his mentor Wang Yu concluded that the overall level of domestic computing power still lags significantly behind the international state of the art, and that improving chip process technology alone, or iterating on multiple chips, is far from enough. A large-model ecosystem needs to be built so that different models can be deployed automatically to different hardware and all kinds of computing power can be used effectively.

A year later, Infinigence AI announced optimizations on chips including NVIDIA GPUs and AMD hardware, achieving a 2-4x inference speedup on large-model tasks.

Subsequently, AMD China announced a strategic partnership with Infinigence AI, under which the two companies will work together to improve the performance of commercial AI applications.

Two years later, Infinigence AI presented its performance optimization data on 10 chips at the launch event, showing that it has achieved the best optimization results currently available in the industry on every card.

"We have built strong trust with our model and chip partners," said Xia Lixue. "On the one hand, that comes from our strength in computational optimization for large models. On the other hand, Infinigence AI pays great attention to protecting our partners' data security. Infinigence AI will remain neutral and will not create conflicts of interest with our customers, which is the foundation of our business."

An acceleration technology stack and system that is "large-model native"

"The Transformer has unified the model architecture in this round and shows a continuing trend of application breakthroughs," Wang Yu said in his opening remarks. "Back in the AI 1.0 era, when we were building our previous company, we could only handle a very small share of AI tasks. Times are different now: the large-model architecture is unified, and the hardware barriers built on ecosystems are 'thinning'."

Thanks to the AI wave surging around the world and the unique opportunities in the Chinese market, Infinigence AI faces a huge technological opportunity.

The Transformer is designed around a naturally parallel computing architecture. The larger the model, the better the intelligence it delivers, and the more people use it, the more computation it requires.

"What Infinigence AI is building is a 'large-model-native' acceleration technology stack," said Yan Shengen, co-founder and CTO of Infinigence AI. Deploying large models depends on algorithms, computing power, data, and also systems. Computing power determines how fast large models run, and a well-designed system unlocks more of the hardware's potential.

The Infinigence AI team has built large-scale, high-performance AI computing platforms at the ten-thousand-GPU level and has the capability to manage ten thousand cards. It has also built a cloud management system on top of self-operated clusters, achieving unified scheduling across domains and across multiple clouds.

One More Thing

"On the device side, people are more inclined to bring large-model capabilities into the human-computer interaction interface quickly to improve the practical experience," said Dai Guohao, co-founder and chief scientist of Infinigence AI. In the future, he said, wherever there is computing power, AGI-level intelligence will emerge, and the source of intelligence on each device will be the LPU, a processor dedicated to large models.

The LPU improves the energy efficiency and speed of large models on a variety of on-device hardware.

At the launch event, Dai Guohao showed the audience "one card running a large model". The processor his team introduced in early January this year is the world's first FPGA-based large-model processor. Using hardware-software co-optimization techniques for efficient large-model compression, it cuts the FPGA deployment cost of the LLaMA2-7B model from four cards to one, with a better price/performance ratio and energy efficiency than GPUs built on the same process node. In the future, Infinigence AI's dedicated on-device large-model processor IP can be modularly integrated into all kinds of on-device chips.

"From the cloud to the device, we want to carry joint hardware-software optimization all the way through, dramatically reducing the cost of deploying large models in every scenario, so that more good AI capabilities reach more people's lives in a better and more affordable way." Dai Guohao announced that Infinigence AI's LPU will be available in 2025.