Databricks recently released DBRX, a general-purpose large language model billed as "the most powerful open source AI at present" and said to surpass "all other open source models".

According to the official press release, DBRX is a Transformer-based large language model that uses a Mixture of Experts (MoE) architecture. It has 132 billion parameters and was pre-trained on 12T tokens of data.

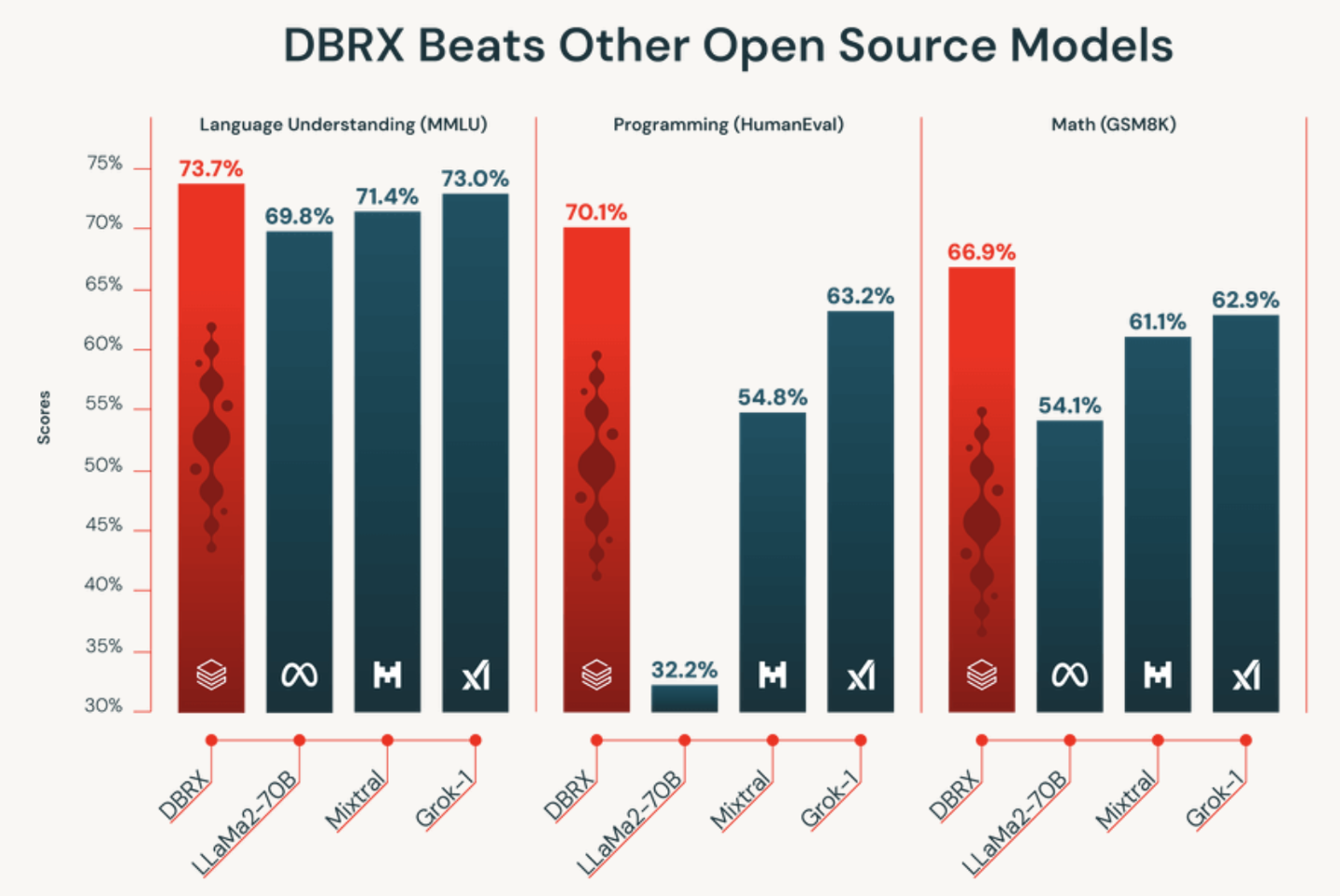

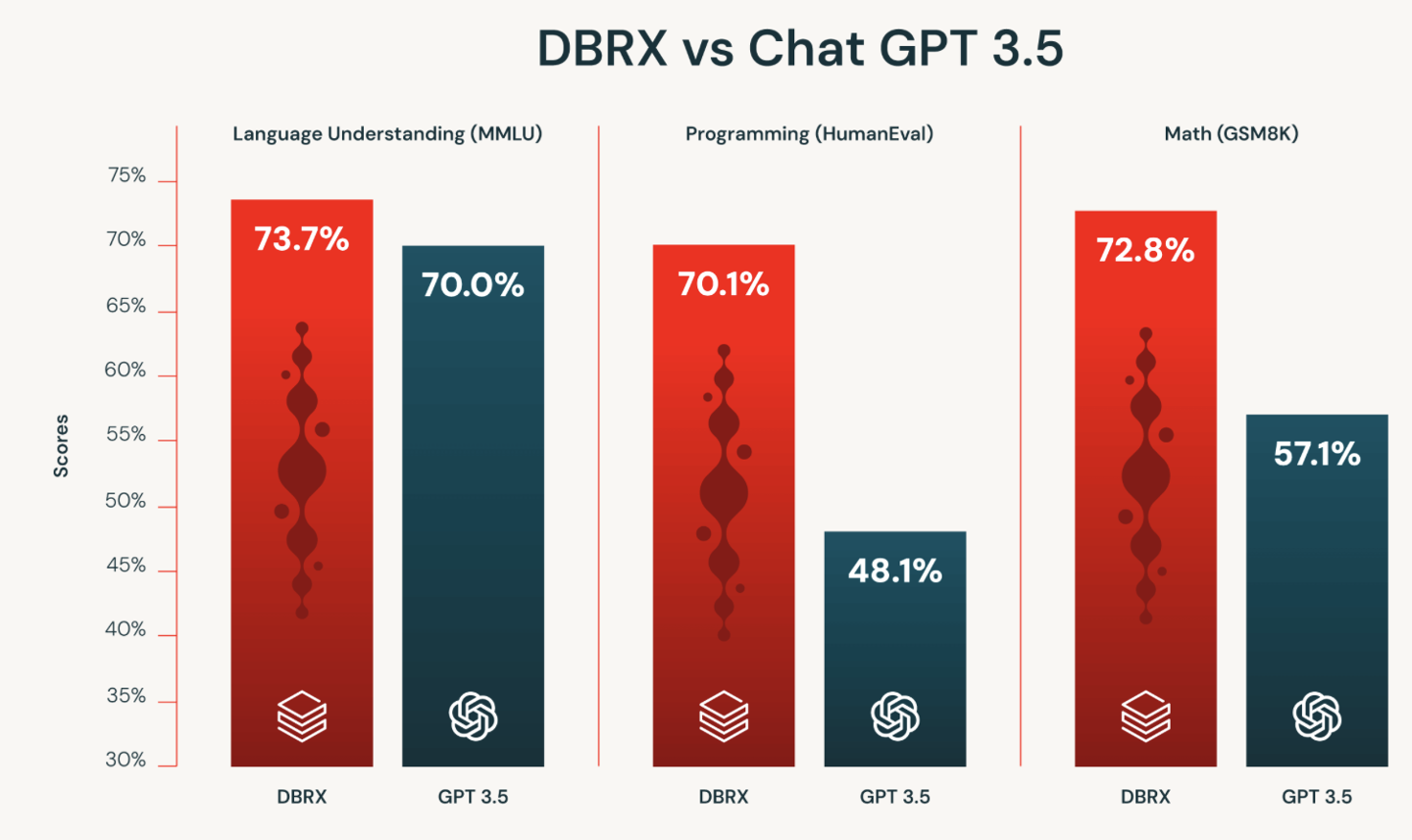

The researchers tested the model and found that it outperformed existing open source models such as LLaMA2-70B, Mixtral, and Grok-1 on benchmarks covering language understanding (MMLU), programming (HumanEval), and mathematical reasoning (GSM8K). Databricks also states that DBRX surpasses OpenAI's GPT-3.5 on all three benchmarks.

Naveen Rao, vice president of generative AI at Databricks, told TechCrunch that the company spent two months and $10 million training DBRX. Although DBRX performs well overall, it currently requires four NVIDIA H100 GPUs to run, so there is still room for optimization.
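
The four-GPU figure is consistent with a rough back-of-the-envelope estimate of the memory needed just to hold the weights. The sketch below assumes 16-bit weights (2 bytes per parameter) and 80 GB of memory per H100; neither number comes from the article, and the estimate ignores activations, the KV cache, and any quantization. The function name `min_gpus_for_weights` is hypothetical.

```python
import math

def min_gpus_for_weights(num_params, bytes_per_param, gpu_memory_gb):
    """Return (weight size in GB, minimum GPU count to hold the weights)."""
    # Total weight size in gigabytes (1 GB = 1e9 bytes here).
    weight_gb = num_params * bytes_per_param / 1e9
    # Round up: a fraction of a GPU still means one more physical GPU.
    return weight_gb, math.ceil(weight_gb / gpu_memory_gb)

# 132 billion parameters (from the article); 2 bytes/param and 80 GB/GPU are assumptions.
weight_gb, gpus = min_gpus_for_weights(132e9, 2, 80)
print(f"{weight_gb:.0f} GB of weights -> at least {gpus} GPUs")
```

Under these assumptions the weights alone occupy about 264 GB, which does not fit on three 80 GB cards, so four is the smallest count that works.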