Databricks, a big data company, recently released DBRX, a new Mixture-of-Experts (MoE) large model that has drawn wide attention in the open source community. DBRX beat open source models such as Grok-1 and Mixtral in benchmark tests and became the new king of open source models. The model has 132 billion parameters in total, but only 36 billion of them are active for any given input, and it generates text about twice as fast as Llama2-70B.

DBRX is composed of 16 experts, 4 of which are active for each input, and it has a context length of 32K tokens. To train DBRX, the Databricks team rented 3,072 H100 GPUs from cloud vendors and trained the model for about two months. After internal discussions, the team decided to adopt a curriculum learning approach, using high-quality data to strengthen DBRX's capabilities on specific tasks. The decision paid off: DBRX reached state-of-the-art levels in language understanding, programming, mathematics, and logic, and beat GPT-3.5 on most benchmarks.
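The "16 experts, 4 active" routing idea can be illustrated with a generic top-k gating sketch. It assumes a Mixtral-style router that picks the k highest-scoring experts and softmax-normalizes their scores; DBRX's actual router implementation is not described in this article, and the names `top_k_route` and `moe_forward`, as well as the toy scalar experts, are hypothetical.

```python
import math
from typing import Callable, List, Tuple

NUM_EXPERTS = 16  # DBRX has 16 experts
TOP_K = 4         # 4 experts are active per input


def top_k_route(router_logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Hypothetical, generic top-k gating sketch; not DBRX's actual router code.
    """
    if len(router_logits) < k:
        raise ValueError("need at least k router logits")
    # Indices of the k largest logits, highest first
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over only the selected logits (subtract the max for stability)
    top = max(router_logits[i] for i in chosen)
    exps = [math.exp(router_logits[i] - top) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_forward(x: float, router_logits: List[float],
                experts: List[Callable[[float], float]]) -> float:
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits))


if __name__ == "__main__":
    # Toy experts: expert s simply scales its input by s (hypothetical)
    experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]
    logits = [float(i) for i in range(NUM_EXPERTS)]
    print(top_k_route(logits))          # experts 15, 14, 13, 12 with their weights
    print(moe_forward(1.0, logits, experts))
```

Because each input touches only 4 of the 16 expert blocks, only part of the expert weights is exercised per input, which is why a model with 132 billion total parameters has only about 36 billion active ones.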

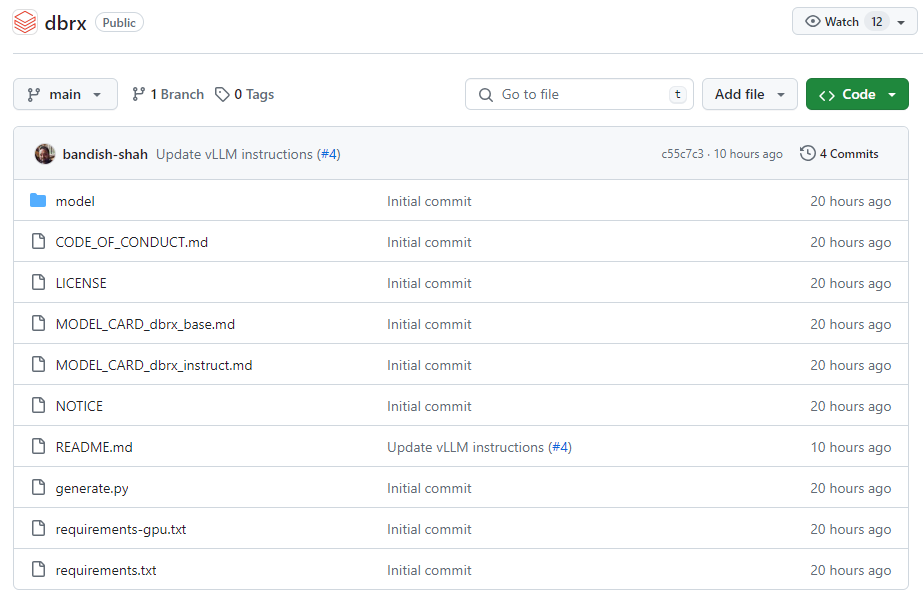

Databricks released two versions of DBRX: DBRX Base and DBRX Instruct. The former is the pre-trained base model, and the latter is instruction-tuned. Chief Scientist Jonathan Frankle revealed that the team plans to study the model further and explore how DBRX acquires additional skills in the "last week" of training.

Although DBRX has been welcomed by the open source community, some people question whether it is truly "open source." Under the license published by Databricks, products built on DBRX that exceed 700 million monthly active users must apply to Databricks separately for permission.