According to a Nikkei report on March 28, Nvidia's cutting-edge graphics processing unit (GPU), the H200, is now available for delivery. The H200 is a semiconductor for the AI field, with performance exceeding that of the current flagship H100.

According to performance evaluation results released by Nvidia, taking the processing speed of Meta's large language model Llama 2 as an example, the H200 generates answers for generative AI up to 45% faster than the H100.

Market research firm Omdia has said that Nvidia accounted for about 80% of the AI semiconductor market in 2022. Competitors such as AMD are also developing products to rival Nvidia's, and competition is intensifying.

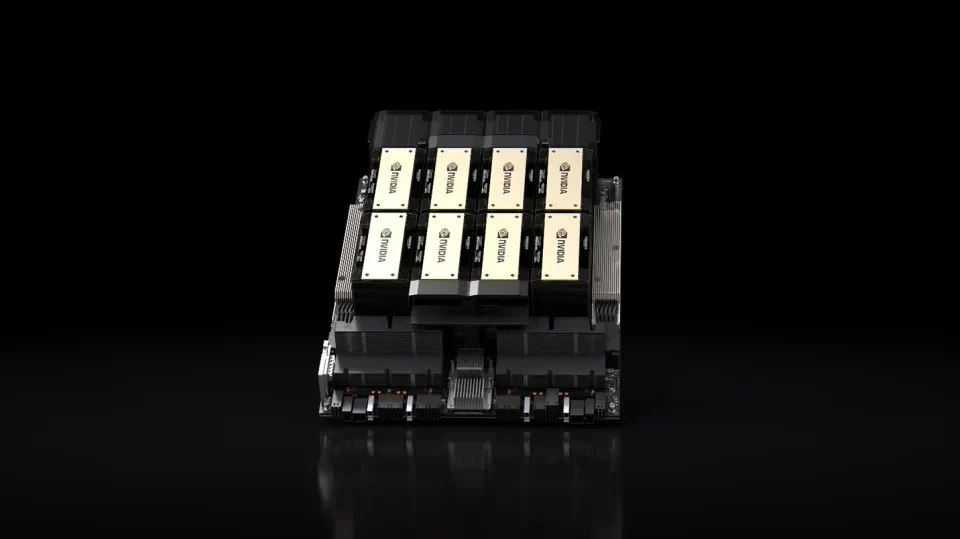

Nvidia announced at its developer conference on March 18 local time that it will launch a new generation of AI semiconductor, the "B200", this year. A new product combining the B200 with a CPU (central processing unit) will be used for the latest LLMs. The GB200, billed as the "most powerful AI accelerator card", includes two B200 Blackwell GPUs and an Arm-based Grace CPU. Its inference performance on large language models is said to be 30 times that of the H100, with cost and energy consumption reduced to one twenty-fifth.

The GB200 adopts Blackwell, the new generation of AI graphics processor architecture. Jensen Huang said at the GTC conference: "Hopper is already very good, but we need a more powerful GPU."

As previously reported, the Nvidia H200 was released in November last year. Based on Nvidia's "Hopper" architecture, it is the successor to the H100 GPU and the company's first chip to use HBM3e memory, which is faster and has more capacity, making it better suited for large language models. Compared with the previously dominant H100, the H200's performance is improved by 60% to 90%. Nvidia said: "With HBM3e, the Nvidia H200 delivers 141GB of memory at 4.8 TB per second, nearly twice the capacity and 2.4 times the bandwidth compared to the A100."