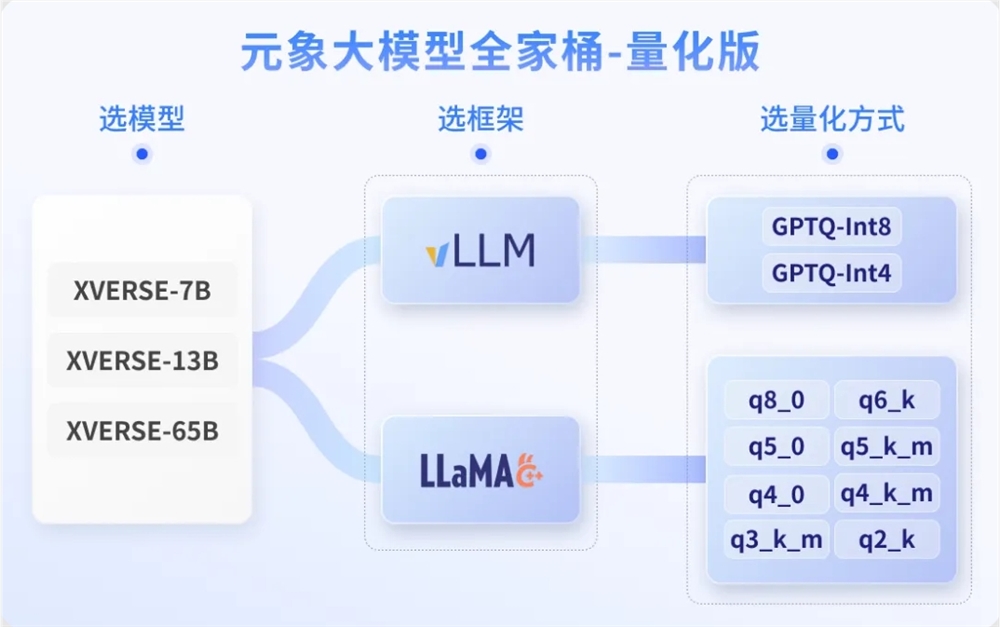

Thirty quantized versions of the open-source Yuanxiang large model have been released. They support quantized inference with mainstream frameworks such as vLLM and llama.cpp and are unconditionally free for commercial use.

The model's capabilities and inference performance were evaluated before and after quantization. Taking the quantized XVERSE-13B-GPTQ-Int4 version as an example, quantization compressed the model weights by 72% and increased total throughput by 1.5 times, while retaining 95% of the model's capability.
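To make the 72% figure concrete, here is a rough back-of-the-envelope sketch. It assumes the unquantized baseline stores about 13 billion parameters (inferred from the model name) in 16-bit precision at 2 bytes each; neither assumption is stated in the evaluation, and the helper name `quantized_size_gb` is purely illustrative.

```python
def quantized_size_gb(num_params: float, bytes_per_param: float, compression: float) -> float:
    """Estimate weight size in GB after removing a `compression` fraction of the original size."""
    original_gb = num_params * bytes_per_param / 1e9
    return original_gb * (1.0 - compression)


# Assumed baseline: 13e9 parameters at 2 bytes each (16-bit weights).
original_gb = quantized_size_gb(13e9, 2, 0.0)
# Reported compression for XVERSE-13B-GPTQ-Int4: 72%.
int4_gb = quantized_size_gb(13e9, 2, 0.72)

print(f"assumed original weights: {original_gb:.2f} GB")
print(f"estimated quantized weights: {int4_gb:.2f} GB")
```

Under these assumptions the weights shrink from roughly 26 GB to a little over 7 GB, which is the difference between needing a data-center GPU and fitting on a single consumer card. Actual file sizes depend on the real parameter count and on quantization metadata such as scales and zero points.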

Developers can choose among models built for different inference frameworks and numerical precisions, based on their skills, hardware and software configuration, and specific needs. If local resources are limited, you can call the Yuanxiang large model's API service directly (chat.xverse.cn).

In short, the open-source quantized versions of the Yuanxiang large model offer a convenient, fast path to deployment: pick the framework and precision that fit your needs for deployment and inference.

Download the Yuanxiang large model:

- Hugging Face: https://huggingface.co/xverse

- ModelScope: https://modelscope.cn/organization/xverse

- GitHub: https://github.com/xverse-ai