There are so many AI video tools out there. Which one should you choose?

Today I'll show you how to use PixVerse, a relatively easy-to-use AI video tool. Best of all, it is currently free.

First, open this URL: https://www.1ai.net/3268.html

Log in with your Google account. You can also use PixVerse through Discord, but the Discord server is very crowded and your generated videos easily get buried among everyone else's, so I recommend using the web version directly. That said, if you want inspiration and want to see what other people have generated, Discord is a good place to browse and learn.

Back to the topic: log in with your Google account.

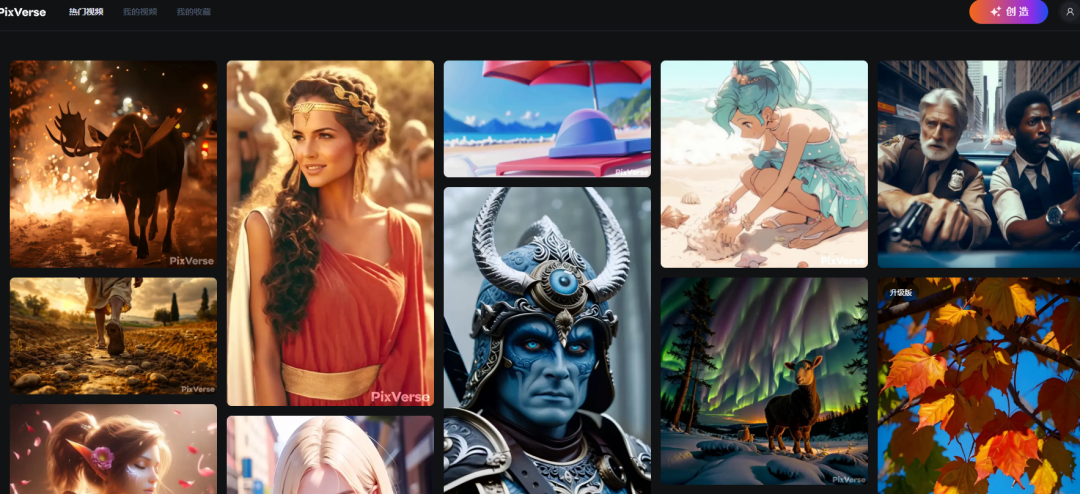

Once you reach the main interface, you will see many videos that other users have already generated. Think of it as a library of inspiration and references. Click any video to open it.

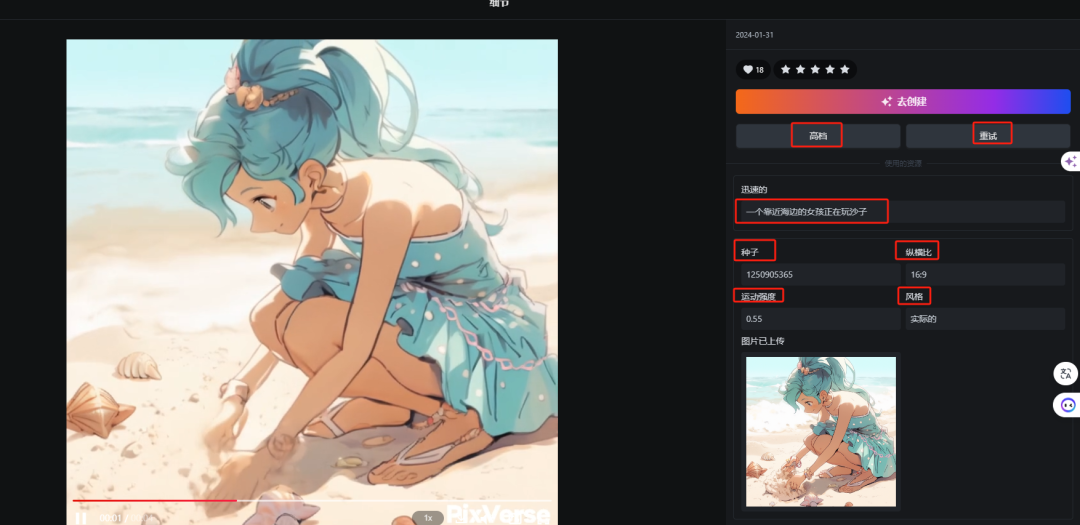

On the right are the detailed parameters of this video, from top to bottom:

- Go Create
- High Definition (Upscale)
- Retry
- Prompt
- Seed
- Aspect ratio
- Motion strength
- Model style
- Reference image (Image uploaded)

Why list these again? The site's automatic translation is a bit awkward, so this list lets you match each setting one by one.

When you are just starting out, you can click Go Create without changing any parameters and try to generate the same video yourself.

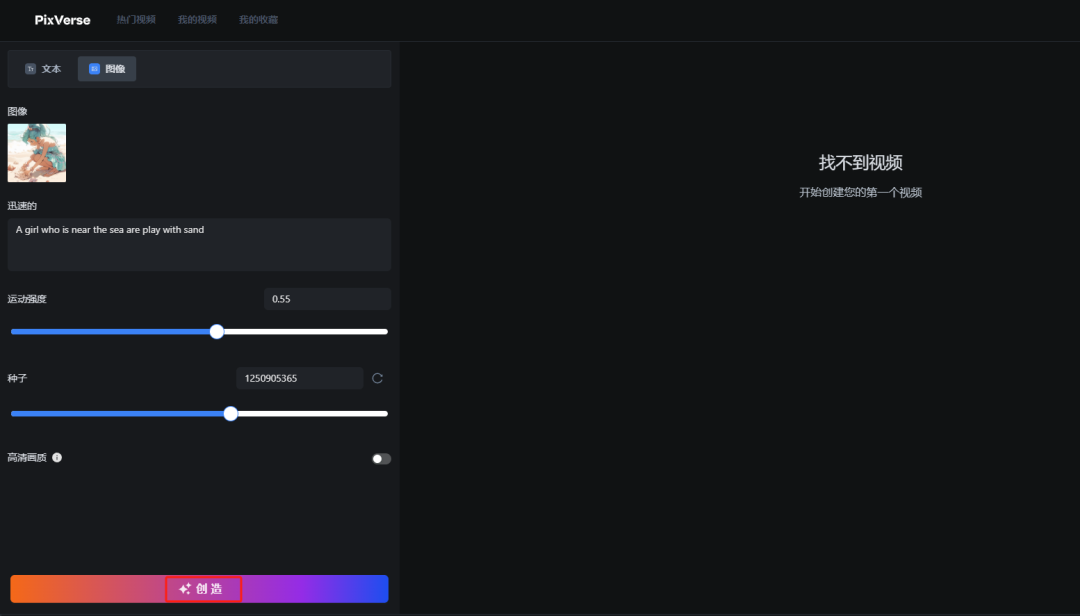

Go back to the home page and click Generate to open the main workspace.

As you can see, the interface is very simple, and after a little practice you can get started quickly.

Currently, PixVerse supports only two modes: text-to-video and image-to-video.

In text-to-video mode, you only need to enter a positive prompt and a negative prompt, then choose the style, aspect ratio, and seed to generate a video directly.

There is also a special feature here: "Inspiring prompt to dual clips".

Simply put, the AI analyzes your prompt, and when the description seems to involve two scenes, it automatically generates a video with two shots.

Honestly, I don't find it useful. So far only Sora handles this kind of shot switching well; the other tools do it poorly, so it's not worth trying.

Enter a simple prompt: A girl is crying sadly

After choosing suitable parameters and generating the video, the result is only average, though that may be partly the prompt's fault.

At present, the better way to make AI videos is image-to-video.

The current workflow for making AI videos is:

First, use ChatGPT to write the story and break it into storyboards, then have DALL·E generate the pictures directly.

More commonly, people have GPT generate the storyboards, then turn each storyboard into an image prompt, and use Midjourney to generate the corresponding pictures.

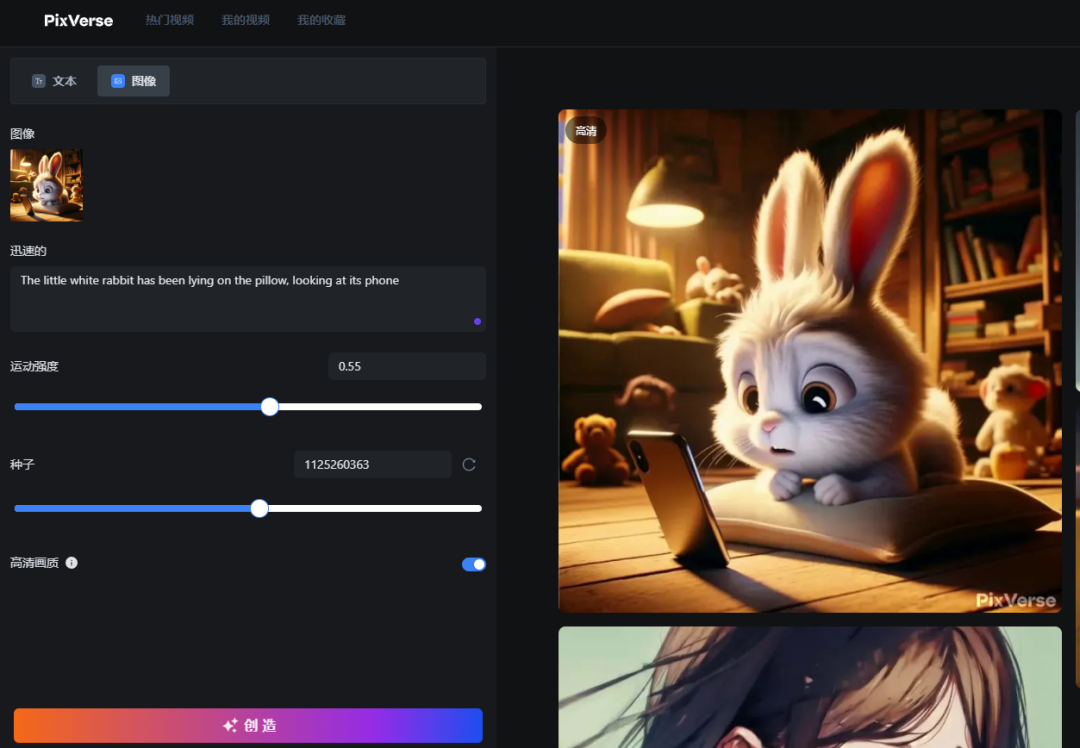

Then import the generated images into the AI video tool, add a prompt, set the parameters, and generate the video.
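The workflow above is essentially a small pipeline: story, then storyboard shots, then one image prompt per shot, then an image, then a video. As a rough sketch, assuming the storyboard has already been written (for example by ChatGPT) and typed in by hand, the Python snippet below shows one way to split each shot into an image prompt for DALL·E or Midjourney and a motion prompt for image-to-video. `Shot`, `build_prompts`, the default style suffix, and the sample storyboard are all hypothetical illustrations, not part of PixVerse or any other tool.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """One storyboard shot (hypothetical structure, not a PixVerse format)."""
    scene: str   # what the still image should show -> image prompt
    motion: str  # how the shot should move -> video prompt for image-to-video


def build_prompts(
    shots: list[Shot],
    style: str = "soft watercolor picture-book illustration",  # hypothetical default
) -> list[tuple[str, str]]:
    """Turn storyboard shots into (image_prompt, video_prompt) pairs.

    The same style suffix is appended to every image prompt so that the
    pictures generated for different shots share a common look.
    """
    pairs = []
    for shot in shots:
        image_prompt = f"{shot.scene}, {style}"
        video_prompt = shot.motion
        pairs.append((image_prompt, video_prompt))
    return pairs


# Example storyboard for a bedtime story (invented for illustration).
storyboard = [
    Shot("A little rabbit sits under a big tree at night",
         "the rabbit slowly looks up at the moon"),
    Shot("The rabbit curls up in a cozy burrow",
         "the rabbit closes its eyes, leaves sway gently"),
]

for number, (image_prompt, video_prompt) in enumerate(build_prompts(storyboard), start=1):
    print(f"Shot {number}")
    print("  image prompt:", image_prompt)
    print("  video prompt:", video_prompt)
```

Each image prompt would go to the image generator, and the resulting picture would be uploaded together with its matching video prompt. Reusing one fixed style suffix is just a simple habit that tends to help the shots look alike; it does not guarantee consistent characters.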

Here we will skip the first two steps and go straight to image-to-video, reusing the pictures from my previous bedtime-story video.

Import the picture, enter a prompt, set the parameters, and generate. As you can see, the result is very good.

If you are not happy with the result, you can tweak the prompt, adjust the motion strength, and so on.