Startup Databricks recently announced the launch of DBRX, an open source AI model that it claims is the most powerful open source large language model to date, outperforming Meta's Llama 2.

DBRX uses a transformer architecture with 132 billion parameters in total, organized as 16 expert networks. For each token, 4 of the 16 experts are active, so 36 billion parameters are used at a time.
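The 4-of-16 routing can be made concrete with a minimal sketch of a top-k mixture-of-experts layer. It assumes a common top-k softmax router and uses toy scalar "experts" as hypothetical stand-ins for the real feed-forward networks. It is not DBRX's actual implementation, and this article does not describe DBRX's router in detail.

```python
import math
import random

# Counts from the article. The routing scheme below is an assumed
# top-k softmax gate, not DBRX's published implementation.
NUM_EXPERTS = 16
TOP_K = 4


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, k=TOP_K):
    """Pick the k highest-scoring experts and return (index, weight) pairs.

    The weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, router_logits, experts, k=TOP_K):
    """Weighted sum of the selected experts' outputs.

    Only k experts are evaluated and the rest are skipped, which is why
    the active parameter count is much smaller than the total.
    """
    return sum(w * experts[i](x) for i, w in route(router_logits, k))


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical toy experts: expert i scales its input by (i + 1).
    experts = [lambda x, s=i: (s + 1) * x for i in range(NUM_EXPERTS)]
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print(route(logits))  # four (expert index, weight) pairs
    print(moe_layer(2.0, logits, experts))
    print(f"active share of parameters: {36 / 132:.1%}")  # 27.3%
```

Skipping 12 of the 16 experts on every token is what keeps the per-token cost tied to the 36 billion active parameters rather than the full 132 billion.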

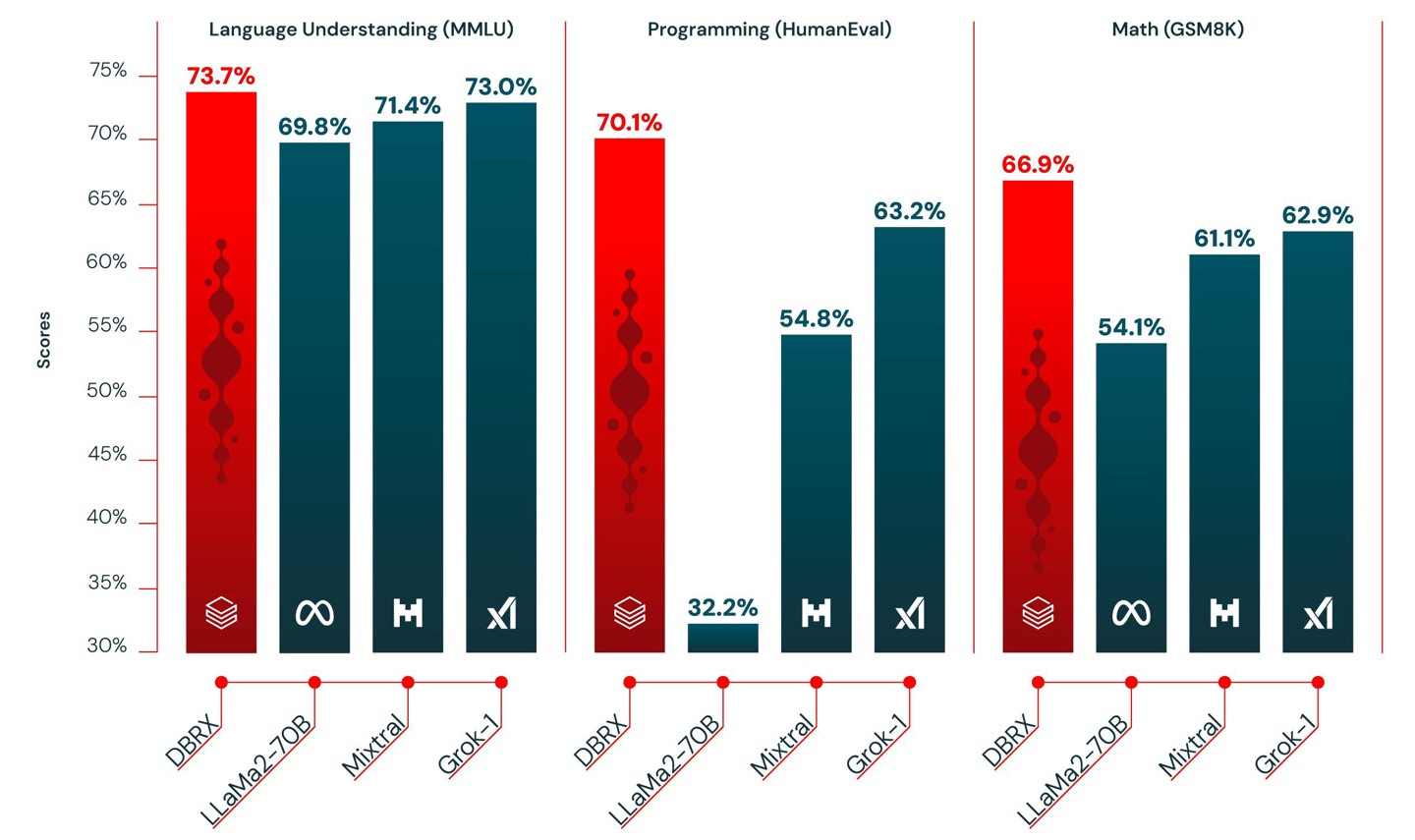

In a company blog post, Databricks compared DBRX on language understanding, programming, mathematics, and logic with mainstream open source models, including Meta's Llama 2-70B, Mixtral from French startup Mistral AI, and Grok-1 from Elon Musk's xAI. DBRX came out ahead in each category.

Figure 1: DBRX outperforms existing open source models in language understanding (MMLU), programming (HumanEval), and mathematics (GSM8K).

In language understanding, DBRX scored 73.7%, higher than GPT-3.5 (70.0%), Llama 2-70B (69.8%), Mixtral (71.4%), and Grok-1 (73.0%).

| Benchmark | DBRX Instruct | Mixtral Instruct | Mixtral Base | LLaMA2-70B Chat | LLaMA2-70B Base | Grok-1 |
|---|---|---|---|---|---|---|
| Open LLM Leaderboard (avg. of next 6 rows) | 74.5% | 72.7% | 68.4% | 62.4% | 67.9% | — |
| ARC-challenge 25-shot | 68.9% | 70.1% | 66.4% | 64.6% | 67.3% | — |
| HellaSwag 10-shot | 89.0% | 87.6% | 86.5% | 85.9% | 87.3% | — |
| MMLU 5-shot | 73.7% | 71.4% | 71.9% | 63.9% | 69.8% | 73.0% |
| TruthfulQA 0-shot | 66.9% | 65.0% | 46.8% | 52.8% | 44.9% | — |
| WinoGrande 5-shot | 81.8% | 81.1% | 81.7% | 80.5% | 83.7% | — |
| GSM8k CoT 5-shot maj@1 | 66.9% | 61.1% | 57.6% | 26.7% | 54.1% | 62.9% (8-shot) |
| Gauntlet v0.3 (avg. of 30+ diverse tasks) | 66.8% | 60.7% | 56.8% | 52.8% | 56.4% | — |
| HumanEval 0-shot, pass@1 (programming) | 70.1% | 54.8% | 40.2% | 32.2% | 31.0% | 63.2% |
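The first row of the table is defined as the average of the six benchmark rows below it. This quick arithmetic check recomputes that average from the per-benchmark scores in the table. Small gaps, under 0.1 points, are expected because the individual scores are themselves rounded.

```python
# Per-benchmark scores (%) copied from the table, in this order:
# ARC-challenge, HellaSwag, MMLU, TruthfulQA, WinoGrande, GSM8k
SCORES = {
    "DBRX Instruct":    [68.9, 89.0, 73.7, 66.9, 81.8, 66.9],
    "Mixtral Instruct": [70.1, 87.6, 71.4, 65.0, 81.1, 61.1],
    "Mixtral Base":     [66.4, 86.5, 71.9, 46.8, 81.7, 57.6],
    "LLaMA2-70B Chat":  [64.6, 85.9, 63.9, 52.8, 80.5, 26.7],
    "LLaMA2-70B Base":  [67.3, 87.3, 69.8, 44.9, 83.7, 54.1],
}

# "Open LLM Leaderboard" row as reported in the table.
REPORTED_AVG = {
    "DBRX Instruct": 74.5,
    "Mixtral Instruct": 72.7,
    "Mixtral Base": 68.4,
    "LLaMA2-70B Chat": 62.4,
    "LLaMA2-70B Base": 67.9,
}


def leaderboard_avg(scores):
    """Unweighted mean of the six benchmark scores."""
    return sum(scores) / len(scores)


if __name__ == "__main__":
    for model, scores in SCORES.items():
        avg = leaderboard_avg(scores)
        print(f"{model:18s} computed {avg:.2f}  reported {REPORTED_AVG[model]}")
```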

In programming, DBRX scored 70.1%, far ahead of GPT-3.5 (48.1%) and also higher than Llama 2-70B (32.3%), Mixtral (54.8%), and Grok-1 (63.2%).
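The HumanEval rows report pass@1. A common definition of pass@k is the unbiased estimator proposed with HumanEval itself: generate n samples per problem, count the c that pass the unit tests, and estimate 1 − C(n−c, k) / C(n, k). The sketch below assumes that definition. With a single greedy sample (temp=0, N=1, as noted for DBRX), pass@1 reduces to the fraction of problems solved.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: samples generated, c: samples that passed the unit tests,
    k: evaluation budget (k <= n).
    """
    if n - c < k:
        return 1.0  # every size-k subset contains a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Illustrative only: one greedy sample per problem on HumanEval's
    # 164 problems, 115 of which pass.
    results = [(1, 1)] * 115 + [(1, 0)] * 49
    print(f"{benchmark_pass_at_k(results, k=1):.1%}")  # 70.1%
```

The 115-of-164 split is purely illustrative. It shows that a single-sample score of 70.1% corresponds to roughly that many solved problems.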

| Benchmark | DBRX | GPT-3.5 | GPT-4 | Claude 3 Haiku | Claude 3 Sonnet | Claude 3 Opus | Gemini 1.0 Pro | Gemini 1.5 Pro | Mistral Medium | Mistral Large |
|---|---|---|---|---|---|---|---|---|---|---|
| MT Bench (Inflection corrected, n=5) | 8.39 ± 0.08 | — | — | 8.41 ± 0.04 | 8.54 ± 0.09 | 9.03 ± 0.06 | 8.23 ± 0.08 | — | 8.05 ± 0.12 | 8.90 ± 0.06 |
| MMLU 5-shot | 73.7% | 70.0% | 86.4% | 75.2% | 79.0% | 86.8% | 71.8% | 81.9% | 75.3% | 81.2% |
| HellaSwag 10-shot | 89.0% | 85.5% | 95.3% | 85.9% | 89.0% | 95.4% | 84.7% | 92.5% | 88.0% | 89.2% |
| HumanEval 0-shot | 70.1% (temp=0, N=1) | 48.1% | 67.0% | 75.9% | 73.0% | 84.9% | 67.7% | 71.9% | 38.4% | 45.1% |
| GSM8k CoT maj@1 | 72.8% (5-shot) | 57.1% (5-shot) | 92.0% (5-shot) | 88.9% | 92.3% | 95.0% | 86.5% (maj1@32) | 91.7% (11-shot) | 66.7% (5-shot) | 81.0% (5-shot) |
| WinoGrande 5-shot | 81.8% | 81.6% | 87.5% | — | — | — | — | — | 88.0% | 86.7% |

In mathematics, DBRX scored 66.9%, higher than GPT-3.5 (57.1%), Llama 2-70B (54.1%), Mixtral (61.1%), and Grok-1 (62.9%).
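The GSM8k rows are labeled maj@1, and one entry is labeled maj1@32. Assuming the usual meaning, maj@k samples k chain-of-thought solutions per problem and scores the most common final answer, so maj@1 is simply single-sample accuracy. The minimal sketch below leaves out answer extraction and uses hypothetical answers.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent final answer.

    Counter.most_common orders ties by first occurrence, so the earliest
    sampled answer wins a tie.
    """
    return Counter(answers).most_common(1)[0][0]


def maj_at_k_accuracy(samples_per_problem, gold_answers):
    """Accuracy when each problem is scored by majority vote over its samples.

    samples_per_problem[i] holds the k final answers extracted from k
    chain-of-thought samples for problem i (k = 1 gives maj@1).
    """
    correct = sum(
        majority_vote(samples) == gold
        for samples, gold in zip(samples_per_problem, gold_answers)
    )
    return correct / len(gold_answers)


if __name__ == "__main__":
    # Hypothetical answers for two GSM8k-style problems, 3 samples each.
    samples = [["18", "18", "20"], ["7", "9", "9"]]
    print(maj_at_k_accuracy(samples, ["18", "8"]))  # 0.5
```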

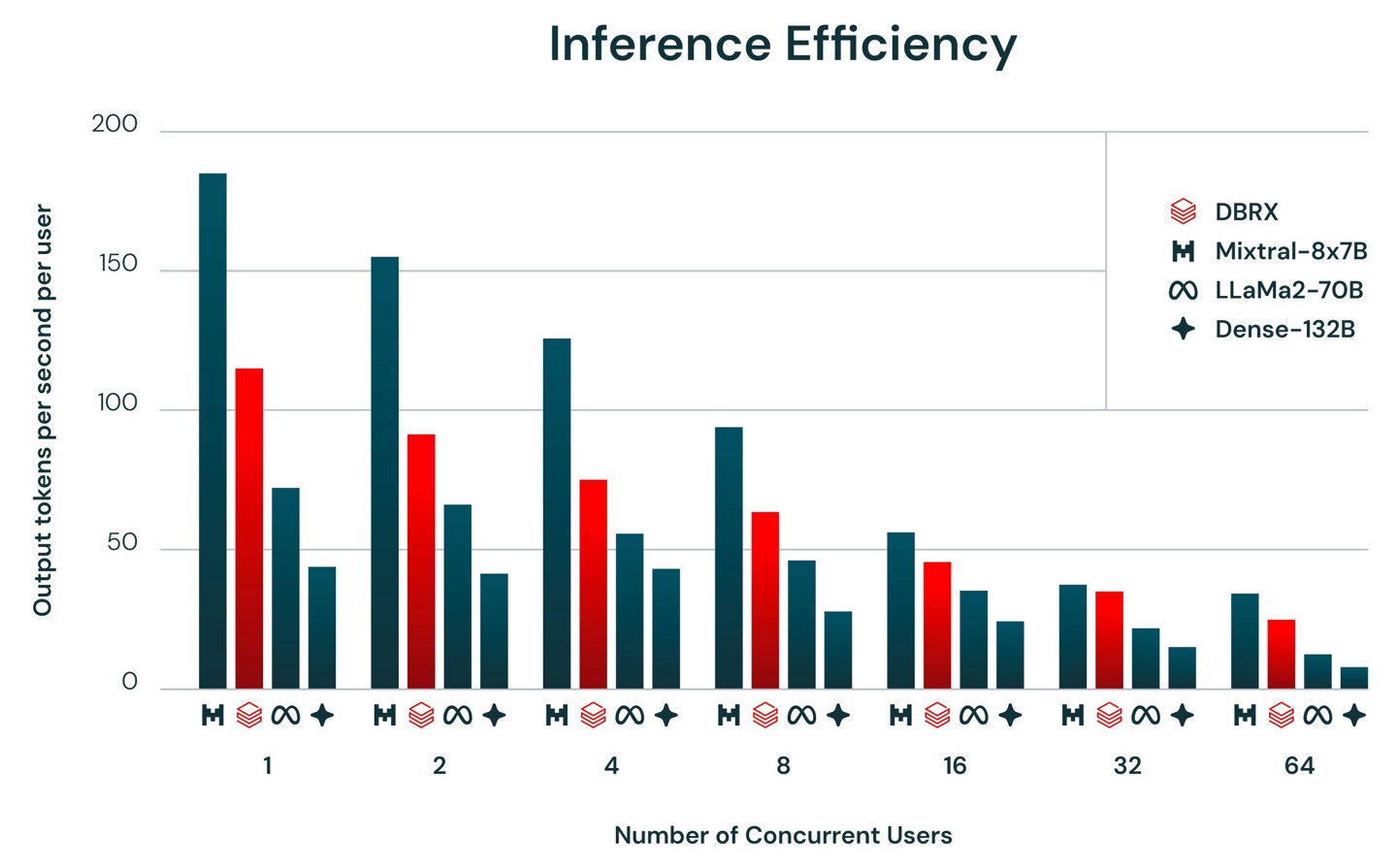

According to Databricks, DBRX is a mixture-of-experts (MoE) model built on the MegaBlocks research and open source project, which allows it to generate tokens at a very high rate. Databricks believes DBRX will pave the way for future state-of-the-art open source MoE models.
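A back-of-the-envelope compute estimate helps explain why activating fewer parameters speeds up generation. The sketch below uses the common rule of thumb of about 2 FLOPs per parameter used per generated token. That approximation ignores attention over the context and memory-bandwidth effects, which often dominate real decoding speed. It does not model MegaBlocks' kernels. The parameter counts come from the article.

```python
TOTAL_PARAMS = 132e9   # from the article: all 16 experts
ACTIVE_PARAMS = 36e9   # from the article: 4 of 16 experts active per token


def forward_flops_per_token(params: float) -> float:
    """Rough rule of thumb: ~2 FLOPs (one multiply, one add) per parameter
    used per token. Ignores attention-over-context cost."""
    return 2 * params


if __name__ == "__main__":
    moe = forward_flops_per_token(ACTIVE_PARAMS)
    dense = forward_flops_per_token(TOTAL_PARAMS)
    print(f"MoE (36B active): {moe / 1e9:.0f} GFLOPs/token")    # 72
    print(f"Dense (132B):     {dense / 1e9:.0f} GFLOPs/token")  # 264
    print(f"Ratio:            {dense / moe:.2f}x")              # 3.67x
```

Under this approximation, each token costs about as much compute as a 36B dense model, even though all 132B weights still have to be held in memory.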