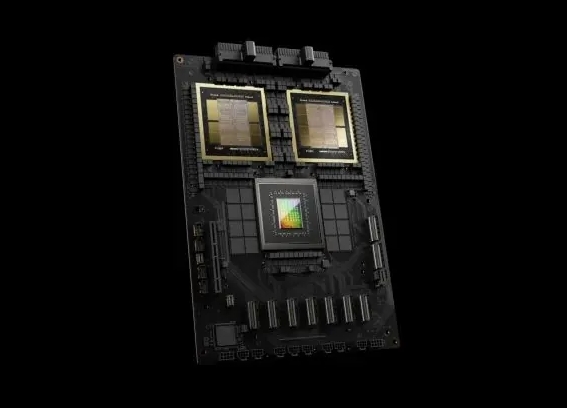

At its GTC developer conference, NVIDIA unveiled the Blackwell GB200, its most powerful AI accelerator to date, which is scheduled to ship later this year.

The GB200 is built on Blackwell, NVIDIA's next-generation AI GPU architecture, which delivers up to 20 petaflops of AI performance, five times that of the previous-generation H100. Each Blackwell die has 25% more floating-point capacity than a Hopper die, and because each package contains two Blackwell dies, total performance per package increases by 2.5x (1.25 × 2 = 2.5).

The GB200 combines two B200 Blackwell GPUs with an Arm-based Grace CPU. According to NVIDIA, it delivers up to a 30x performance improvement over the H100 for large language model inference, while cutting cost and power consumption to a quarter.

NVIDIA says that 2,000 Blackwell GPUs can complete training tasks that previously required 8,000 Hopper GPUs and 15 megawatts of power. NVIDIA also introduced the GB200 NVL72 server, which combines 36 CPUs and 72 Blackwell GPUs and delivers 720 petaflops of AI training performance or 1.4 exaflops of inference performance.
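
As a rough sanity check on these figures, the rack-level numbers can be divided down to a per-GPU average and the GPU-count reduction computed directly. The sketch below uses only the vendor figures quoted in this article. The constant and function names are illustrative, and the results are simple averages, not measured per-GPU throughput.

```python
# Figures as quoted in the article (vendor claims, not measurements).
GPUS_PER_RACK = 72                # Blackwell GPUs in one GB200 NVL72
RACK_TRAINING_PFLOPS = 720.0      # quoted AI training performance
RACK_INFERENCE_PFLOPS = 1400.0    # 1.4 exaflops = 1,400 petaflops
HOPPER_GPUS = 8000                # GPUs previously required
BLACKWELL_GPUS = 2000             # GPUs NVIDIA says are now sufficient


def per_gpu(rack_pflops: float, gpus: int) -> float:
    """Average throughput per GPU in petaflops (a plain division)."""
    return rack_pflops / gpus


training_per_gpu = per_gpu(RACK_TRAINING_PFLOPS, GPUS_PER_RACK)
inference_per_gpu = per_gpu(RACK_INFERENCE_PFLOPS, GPUS_PER_RACK)
gpu_reduction = HOPPER_GPUS / BLACKWELL_GPUS

print(f"training per GPU:    {training_per_gpu:.1f} petaflops")
print(f"inference per GPU:   {inference_per_gpu:.1f} petaflops")
print(f"GPU count reduction: {gpu_reduction:.0f}x")
```

The inference average comes out just under the "up to 20 petaflops" per-GPU figure quoted earlier, which is what one would expect if the 1.4 exaflops rack figure is a rounded value.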

NVIDIA's systems can also scale to tens of thousands of GB200 Superchips, with 11.5 exaflops of FP4 computing power.