Performance is everything in this model! Altman's first public disclosure: GPT-5 will be an epic upgrade, and any company that underestimates it will be crushed. In the future, AI will become the core driving force in the development of civilization.

You may not yet have recovered from the news that Grok has been open-sourced.

Foreign media have broken another story. For the first time, Altman has publicly stated:

The improvement of GPT-5 will be huge! Anyone and any company that underestimates this will be crushed.

Altman tweeted some time ago that OpenAI's products this year will change human history.

He said at an event in Silicon Valley:

GPT-5’s performance improvements will exceed expectations.

Every time GPT develops the next model, it emphasizes the need for more new features. As a result, various areas of daily life and many sectors in business are inevitably replaced and disappear.

What are the limitations of GPT? I would confidently say "no limitations". We believe that the GPT model has no limitations, and that if enough computing resources are invested, building an AGI that surpasses humans is not difficult.

This view seems to be different from the statement made by DeepMind CEO Hassabis, who is also an "LLM optimist", in an interview some time ago.

In Hassabis's view, before achieving AGI, there needs to be a breakthrough in algorithms, and Transformer alone cannot solve all problems.

GPT-5 has made huge improvements, and AGI is only a little more effort away

Altman said in a conversation with a Korean journalist group in Silicon Valley:

Many startups are happy to think that GPT-5 will only make minor advances rather than major ones (because it seems there will be more business opportunities), but I think this is a big mistake.

As often happens when technological upheaval strikes, the startups that underestimate GPT-5's capabilities will be crushed by the next generation of models.

This is the first time Altman has commented so clearly and confidently in public on the performance of GPT-5.

Altman now only has AI in mind

Moreover, Altman personally seems to have no interest in other technologies besides "building general artificial intelligence."

He said his interest in technologies other than artificial intelligence, including blockchain and biotechnology, has waned.

In the past, I had a broad perspective and an open mind about everything that was happening in the world, so I could see things that I couldn't see with a focused perspective.

Unfortunately, I'm completely focused on AI right now, so it's hard to have other interests.

In addition to thinking about the next generation of artificial intelligence models, the area I have spent the most time on recently is "computing power construction" because I increasingly believe that computing will become the most important currency in the world.

However, the world has not yet planned for enough computing and has failed to face this problem. Thinking about how to build a large amount of computing power at the lowest possible cost is a major challenge that humans need to face in their journey to achieve AGI.

AI is the strongest lever for the future of mankind

Altman said that after reaching AGI, computing power may not be a particularly big problem. AI can solve almost all problems related to development.

What excites me most about AGI is that the faster we develop artificial intelligence through scientific discovery, the faster we can find solutions to make nuclear fusion power a reality.

Research conducted through AGI will lead to sustainable economic growth.

I think this is almost the only driving force and determinant of the future of human society.

Asked whether a data shortage would hinder the progress of AI if human-generated data cannot keep up with the pace of AI development, Altman said:

In the long run, human-generated data may become insufficient.

We need a model that can learn more with less data.

However, Altman said he could not yet determine when GPT-5 would be released.

Fridman Interview

On Fridman's podcast, Altman shared many of his insights on leading OpenAI in the pursuit of AGI over the past year. The content is very valuable, so don't miss it.

I believe that in the future, computing power will become a new “currency.” I foresee it becoming one of the world’s most precious resources.

When Fridman asked him whether the OpenAI boardroom power struggle was a microcosm of future power struggles over AGI:

The road to AGI will certainly be filled with intense power struggles. The world will… well, no, I mean I expect this to be the future.

Where is Ilya?

So can I ask you about Ilya? Is he being held hostage in some secret nuclear facility?

- No

What about ordinary secret bases?

- No

Is it an unclassified nuclear facility?

- Definitely not.

Ilya has not seen AGI inside OpenAI:

Ilya has never seen AGI. None of us have yet. We haven’t built AGI yet either.

However, one of the many qualities I admire about Ilya is that he takes the broader safety issues surrounding general artificial intelligence very seriously, including the impact it may have on society.

As we've continued to make significant progress, Ilya and I have spent most of our time over the last few years discussing what this will mean and what we need to do to make sure we do it right to ensure our mission is successful.

So, while Ilya has never seen AGI, his thoughtfulness and concern about ensuring that we stay on the right track in this process is a valuable contribution to humanity.

On the relationship with Musk and the recent lawsuit

I really had no idea what was going on. Initially, we thought we were going to be a research lab and had no idea where this technology was going.

That was seven or eight years ago, and it’s hard to recall what happened then, because language models weren’t a hot topic yet.

We hadn’t even thought about building an API or selling access to the chatbot. We hadn’t even thought about productizing it.

Our thinking was, "Let's just do the research, and we don't know what we're going to do with it." I think when you're exploring something completely new, you're always feeling your way forward and making assumptions, and most of those assumptions turn out to be wrong.

He thought OpenAI was failing. He wanted full control to save the situation. But we wanted to continue in the direction OpenAI was going. He also wanted Tesla to be able to work on an AGI (artificial general intelligence) project.

He had various ideas at different points, including turning OpenAI into a for-profit company that he could control, or merging it with Tesla. We disagreed with that, so he decided to leave, which was fine.

Look, I mean, until people pointed out that it was a bit hypocritical of Elon, Grok had never open-sourced anything.

Then he announced that Grok would start open sourcing some stuff this week, and I think for him it wasn't just about open sourcing.

When discussing Sora, Altman talked about how humans and AI will interact in the future:

People always discuss how many jobs will be replaced by artificial intelligence in five years. Their starting point is usually what percentage of current jobs will be completely replaced by artificial intelligence?

But my personal view centers not on how many jobs AI will do, but on how many tasks it will be able to perform at some point in the future.

Think about it, how many of the tasks that take five seconds, five minutes, five hours, or even five days in economic activities can be completed by artificial intelligence?

I think this question is more meaningful, far-reaching and important than simply asking how many jobs artificial intelligence can replace.

Because AI is a tool that will perform more and more tasks at increasing levels of complexity over ever-increasing time spans, it enables humans to think abstractly at a higher level.

That is, people may become more effective at their jobs.

And this change, over time, is not just a quantitative change, it's a qualitative change in what kinds of questions we can think about. I think the same is true for videos on YouTube.

Many videos, perhaps most, will use artificial intelligence tools in their production, but at their core there will still be people who think, conceive, partially execute, and guide the entire project.

When asked about alleged secret projects like Q*, Altman pretty much denied them all:

OpenAI isn't very good at keeping secrets. It would be nice if we were. We've been plagued by a lot of leaks, and I wish we were able to keep something like that secret.

We're working on a lot of different areas. We've mentioned before that we think improving the reasoning capabilities of these systems is an important direction that we want to explore. We haven't cracked it yet, but we're very interested in it.

Netizens hotly discuss GPT-5: the wait feels endless

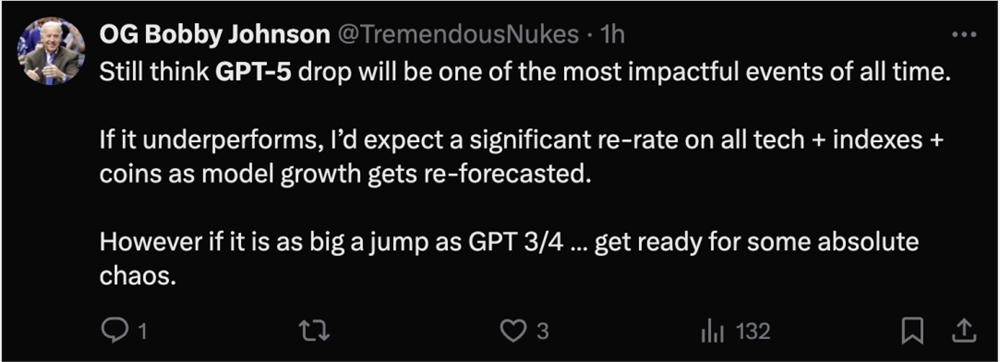

Netizens seem to have a very rational view of Altman’s remarks: they still believe that the release of GPT-5 will be one of the most influential events in history.

If it performs poorly, it will affect all forecasts of growth in large-model capabilities, and tech stocks, indices, and currencies will all be significantly revalued.

However, if the improvement is as large as the jump from GPT-3 to GPT-4, humanity will witness history.

Some curious netizens also tagged @Marcus under the report on Altman, asking him to weigh in quickly.

Countless netizens are also complaining that if GPT-5 doesn’t come out soon, people will die.

References:

https://m.sedaily.com/NewsView/2D6O83AF81#cb