Apple's research team recently published a paper on arXiv titled "MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training", which introduces "MM1", a family of multimodal large models. The models come in three parameter sizes: 3 billion, 7 billion, and 30 billion. They have image recognition and natural language reasoning capabilities.

In the paper, the Apple research team mainly uses the MM1 model for experiments, varying one factor at a time while holding the others fixed to identify the key factors that affect model performance.

The research shows that image resolution and the number of image tokens have a greater impact on model performance, while the vision-language connector has a smaller impact. Different types of pre-training data also affect model performance in different ways.

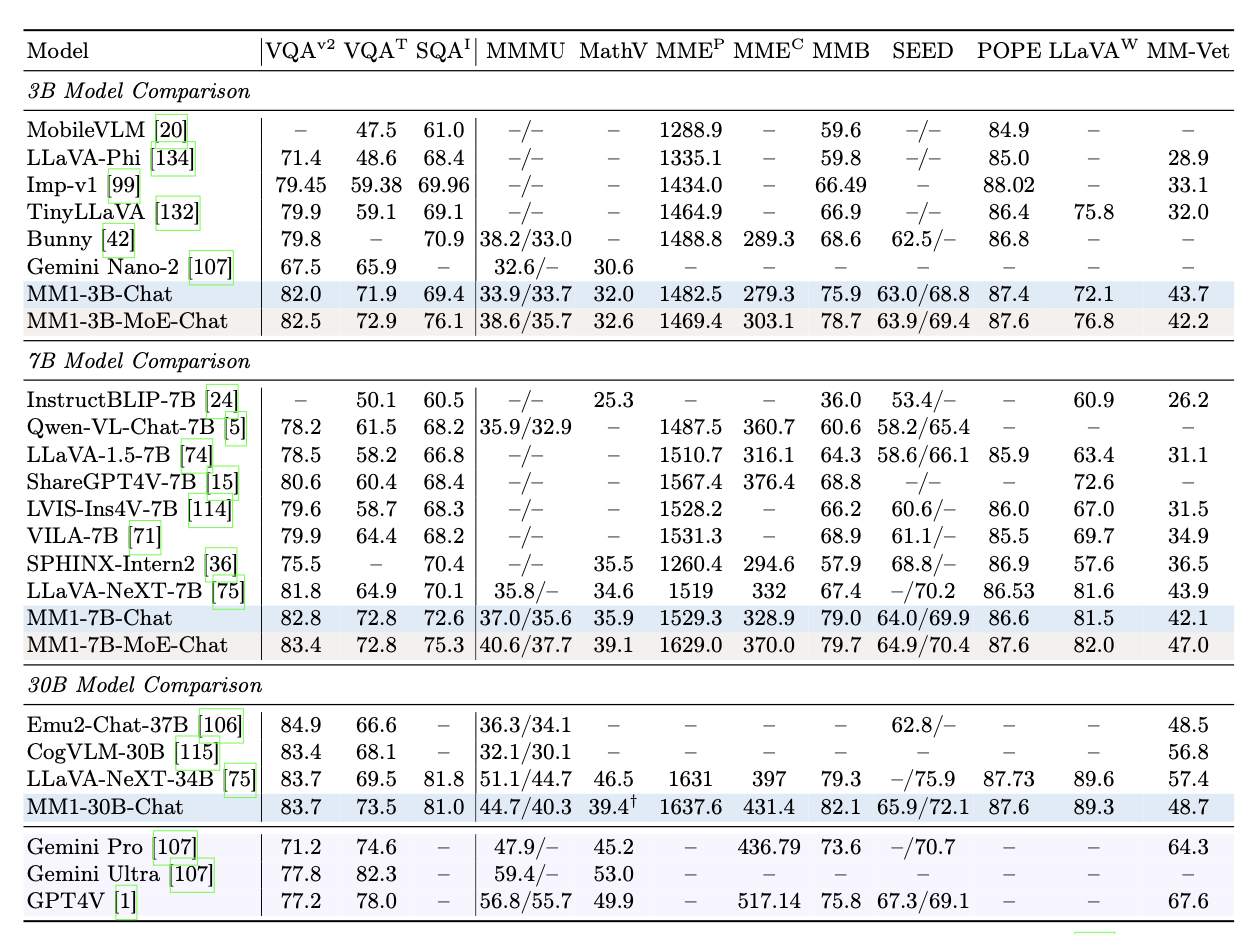

According to reports, the research team first conducted small-scale ablation experiments on model architecture decisions and pre-training data. They then built the MM1 model using a Mixture of Experts (MoE) architecture with a routing method called Top-2 Gating. The team says the resulting model achieved the best performance on pre-training metrics and remained competitive on a series of existing multimodal benchmarks after supervised fine-tuning.
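To make the routing idea concrete, here is a minimal sketch of Top-2 Gating in plain Python. It is not MM1's implementation: the router logits, the four toy experts, and the choice to renormalize the two selected gate weights so they sum to 1 are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_gate(router_logits):
    """Pick the two highest-scoring experts for one token.

    Returns [(expert_index, weight), (expert_index, weight)].
    Assumption: the two weights are renormalized to sum to 1.
    """
    probs = softmax(router_logits)
    top2 = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    norm = sum(probs[i] for i in top2)
    return [(i, probs[i] / norm) for i in top2]


def moe_layer(token, router_logits, experts):
    """Weighted sum of the outputs of the two selected experts."""
    out = [0.0] * len(token)
    for idx, weight in top2_gate(router_logits):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out


# Hypothetical toy experts: each one just scales the token vector.
experts = [
    lambda x: [v * 1.0 for v in x],
    lambda x: [v * 2.0 for v in x],
    lambda x: [v * 3.0 for v in x],
    lambda x: [v * 4.0 for v in x],
]

token = [1.0, -1.0]
router_logits = [0.1, 2.0, 0.3, 2.0]  # made-up router scores for this token

gates = top2_gate(router_logits)
output = moe_layer(token, router_logits, experts)
print(gates)
print(output)
```

Only two of the four experts run for this token, which is the point of the approach: the total parameter count can grow with the number of experts while the compute per token stays roughly constant.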

The researchers also tested the "MM1" models. MM1-3B-Chat and MM1-7B-Chat are said to outperform most models of the same size on the market. They performed particularly well on VQAv2, TextVQA, ScienceQA, MMBench, MMMU, and MathVista, but their overall performance was inferior to Google's Gemini and OpenAI's GPT-4V.