Tencent, Tsinghua University, and The Hong Kong University of Science and Technology have jointly launched a new image-to-video model, "Follow-Your-Click". It is now listed on GitHub (with the code to be made public in April), alongside a research paper (arXiv:2403.08268).

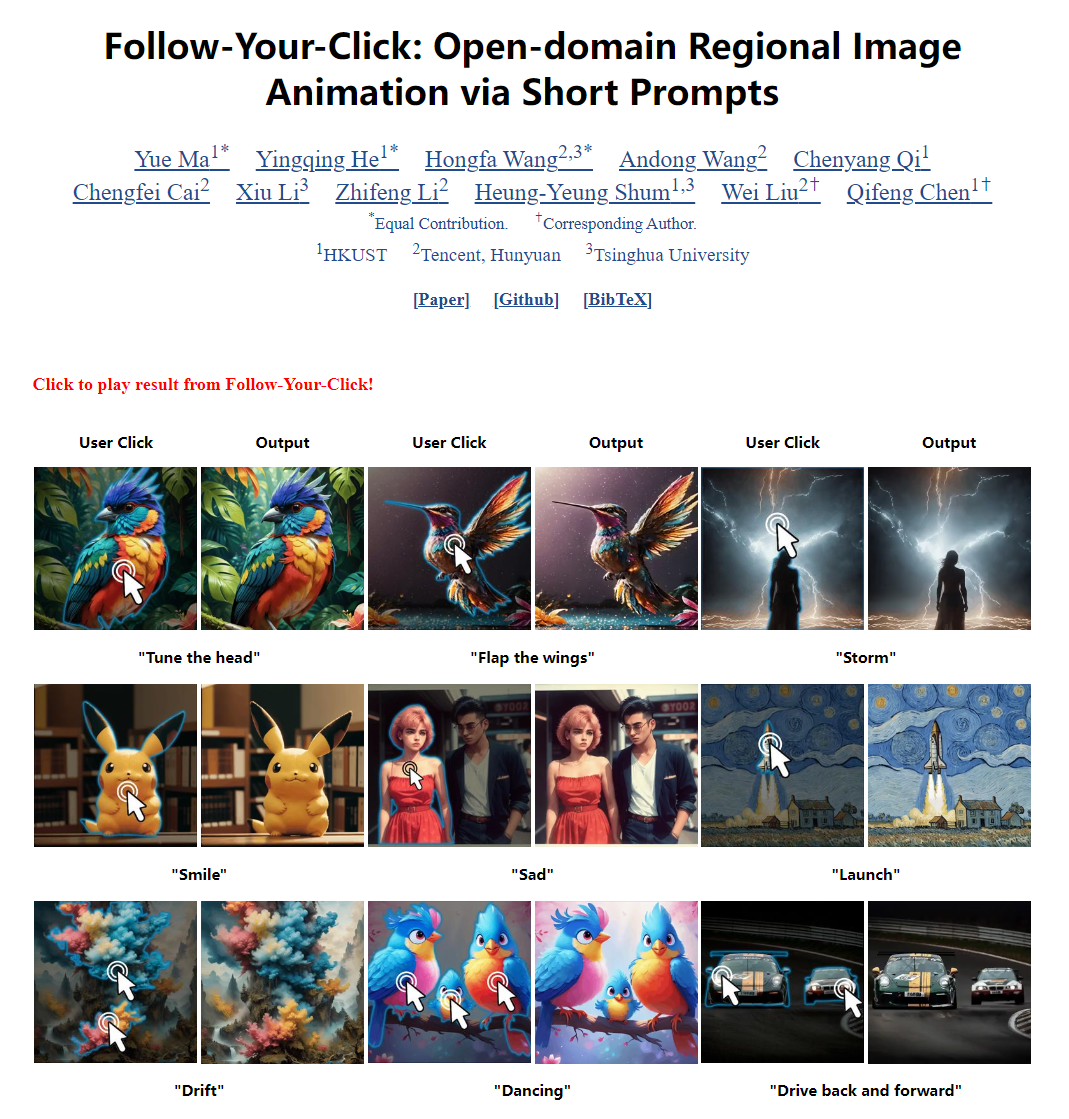

The main features of this image-to-video model are localized animation generation and multi-object animation. It supports a variety of motion types, such as head movements and wing flaps.

According to the introduction, Follow-Your-Click generates localized image animation from a user's clicks and short action prompts. Users only need to click the area they want to animate and add a brief prompt to make a previously static region of the picture move, converting the image to video in one step. For example, the prompt can make an object smile, dance, or float.

In addition to controlling the animation of a single object, the framework supports animating multiple objects at the same time, which adds complexity and richness to the result. Users specify the regions and the types of motion they want with simple clicks and short phrase prompts, without complex operations or detailed descriptions.