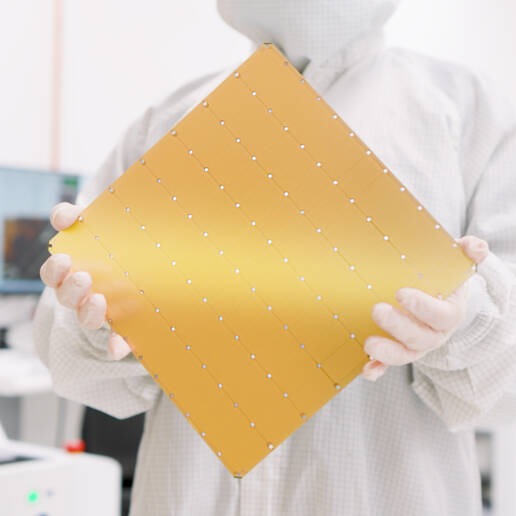

Cerebras, the wafer-scale chip innovator, has launched its third-generation chip, the WSE-3, claiming that it delivers twice the performance of the previous-generation WSE-2 at the same power consumption.

The WSE-3 parameters are as follows:

- TSMC 5nm process;
- 4 trillion transistors;
- 900,000 AI cores;
- 44GB on-chip SRAM cache;
- Three optional off-chip memory capacities: 1.5TB / 12TB / 1.2PB;
- 125 PFLOPS of peak AI computing power.

Cerebras claims that the CS-3 system based on the WSE-3 supports a memory capacity of up to 1.2PB and can train next-generation frontier models that are 10x larger than GPT-4 and Gemini. It can hold models with up to 24 trillion parameters in a single logical memory space, greatly simplifying the work of developers.

CS-3 is designed for ultra-large-scale AI workloads. A compact four-system cluster can fine-tune a 70B model in one day, while the largest configuration of 2048 CS-3 systems can train the Llama 70B model from scratch in one day.

Cerebras says the CS-3 system is easy to use. The code required for large-model training is reduced by 97% compared with GPUs, and a standard implementation of a GPT-3-sized model can be written in just 565 lines of code.

UAE-based G42 has said it will build the Condor Galaxy 3 supercomputer based on the Cerebras CS-3, which will include 64 systems and provide 8 exaFLOPS of AI computing power.
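
As a quick sanity check, the 8 exaFLOPS figure matches 64 systems multiplied by the 125 PFLOPS peak quoted for each WSE-3. The sketch below reproduces that arithmetic. The function name `cluster_exaflops` is made up for illustration, and it assumes ideal linear scaling of vendor-quoted peak numbers, not measured throughput.

```python
# Peak figures quoted in the article (vendor claims, not measurements).
PFLOPS_PER_CS3 = 125         # peak AI PFLOPS per CS-3 system
SYSTEMS_IN_CG3 = 64          # CS-3 systems planned for Condor Galaxy 3
PFLOPS_PER_EXAFLOPS = 1000   # 1 exaFLOPS = 1,000 petaFLOPS


def cluster_exaflops(systems: int, pflops_each: float = PFLOPS_PER_CS3) -> float:
    """Aggregate peak throughput in exaFLOPS, assuming ideal linear scaling."""
    return systems * pflops_each / PFLOPS_PER_EXAFLOPS


if __name__ == "__main__":
    # 64 systems x 125 PFLOPS = 8,000 PFLOPS = 8 exaFLOPS
    print(cluster_exaflops(SYSTEMS_IN_CG3))
```

Under the same idealized assumption, the largest 2048-system configuration mentioned above would come to 256 exaFLOPS of peak compute.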