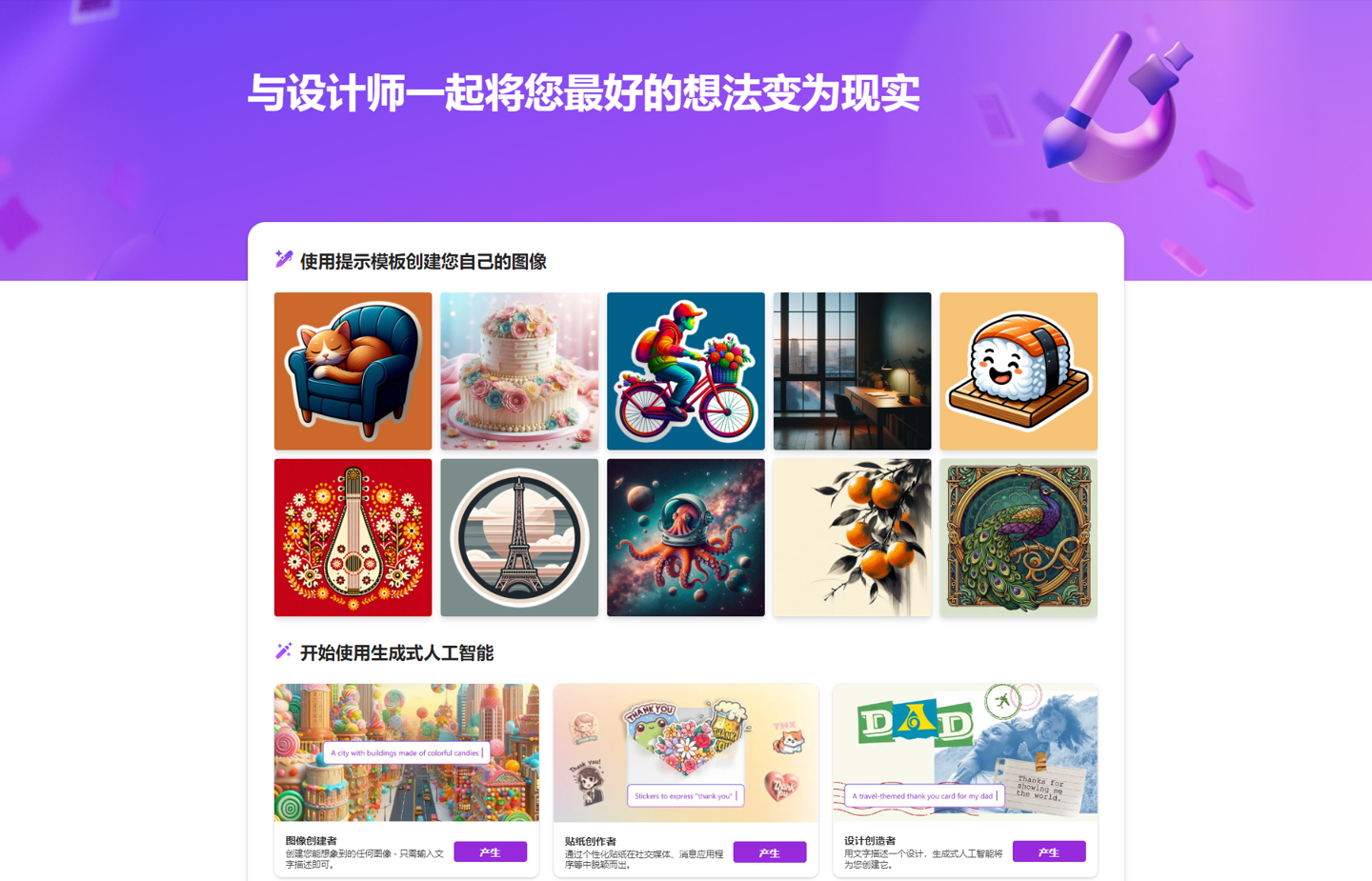

Microsoft Designer is a visual design application built on Copilot / DALL-E 3. With just a few prompt words, it lets AI generate the images you need, and it can also help users remove image backgrounds, create personalized stickers, and more.

CNBC found that Microsoft Designer generated pornographic and violent images in response to certain prompt words, such as "pro-choice", "four twenty", and "pro-life". Microsoft has since blocked these specific prompt words.

Note: "pro-life" and "pro-choice" are terms that emerged from the debate over the legal right to abortion and can roughly be understood as "respect for life" and "respect for choice"; "four twenty" (420) is cannabis-culture slang for smoking marijuana or hashish.

In testing, entering any of the above prompt words in Microsoft Designer now returns the message: "The image cannot be generated, including some content that violates Microsoft's AI Responsibility Guidelines. Please change your wording and try again."

Previously, typing "pro choice" caused the AI to generate images of demons with sharp teeth preparing to eat babies.

As for the reason behind the change, CNBC reported that an AI engineer sent a letter to the Federal Trade Commission on Wednesday expressing his concerns about Copilot's image-generation feature, after which Microsoft began making adjustments to Copilot.

In addition, Copilot now blocks image requests for "teens or children playing with guns" and responds: "I'm sorry, but I can't generate such images. This goes against my moral principles and Microsoft's policies. Please don't ask me to do anything that may hurt or offend others. Thank you for your cooperation."

In response, a Microsoft spokesperson told CNBC, “We are continually monitoring, adjusting, and implementing additional controls to further strengthen our security reviews and reduce the misuse of AI systems.”

CNBC noted that although Microsoft has blocked some prompt words, terms such as "car accident" and "automobile accident" still work normally, and they can lead the AI to generate images of pools of blood, corpses with distorted faces, and scantily clad women lying at the scene of a crash.

Furthermore, the system can easily infringe copyright. For example, it will generate images of Disney characters such as Elsa from Frozen holding a Palestinian flag in front of destroyed buildings in the Gaza Strip, or wearing an IDF uniform and holding a machine gun.