stable-diffusion-webui-forge (Forge) is an AI drawing tool built on top of Stable Diffusion WebUI (which is based on Gradio). The platform aims to simplify plugin development, optimize resource management, and accelerate inference. The name "Forge" was inspired by "Minecraft Forge": the goal of the project is to become the Forge of SD WebUI. Forge promises never to add unnecessary changes to the Stable Diffusion WebUI user interface, so anyone familiar with the Automatic1111 WebUI can carry that experience over and get started with Forge quickly.

Off topic: the Forge author has long been active in the AIGC drawing community. He previously open-sourced ControlNet and Fooocus, both well regarded in the community, and has recently turned to developing Forge, aiming to lower the entry barrier of AIGC drawing for newcomers.

At 1024px resolution, Forge achieves a significant speedup over the original WebUI for SDXL model inference:

- If you use a common GPU, such as one with 8GB of video memory, you can expect inference speed (it/s) to increase by about 30~45%, the GPU memory peak (in Task Manager) to drop by about 700MB to 1.3GB, the maximum diffusion resolution (without OOM) to increase by about 2 to 3 times, and the maximum diffusion batch size (without OOM) to increase by about 4 to 6 times.

- If you use a lower-performance GPU, such as one with 6GB of video memory, you can expect inference speed (it/s) to increase by about 60~75%, the GPU memory peak (in Task Manager) to drop by about 800MB to 1.5GB, the maximum diffusion resolution (without OOM) to increase by about 3 times, and the maximum diffusion batch size (without OOM) to increase by about 4 times.

- If you use a powerful GPU, such as a 4090 with 24GB of video memory, you can expect inference speed (it/s) to increase by about 3~6%, the GPU memory peak (in Task Manager) to drop by about 1GB to 1.4GB, the maximum diffusion resolution (without OOM) to increase by about 1.6 times, and the maximum diffusion batch size (without OOM) to increase by about 2 times.

- If you use ControlNet for SDXL inference, the maximum number of ControlNets (without OOM) increases by about 2 times, and the speed of SDXL+ControlNet increases by about 30~45%.

Another very important change introduced by Forge is the Unet Patcher. With it, methods such as Self-Attention Guidance, Kohya High Res Fix, FreeU, StyleAlign, and Hypertile can each be implemented in about 100 lines of code, which greatly simplifies plugin integration. Thanks to the Unet Patcher, Forge can also support newer features, including SVD, Z123, masked IP-Adapter, masked ControlNet, and PhotoMaker.

Forge also adds several efficient samplers, such as DDPM, DDPM Karras, DPM++ 2M Turbo, DPM++ 2M SDE Turbo, LCM Karras, and Euler A Turbo.

sd-webui-forge installation

Git Installation

If you are familiar with Git and have some development experience, you can use git clone to download the latest Forge source code from the repository listed in the appendix and install it locally.
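The clone step could look like the following minimal sketch. The repository URL is the one given in the appendix; the install location `FORGE_DIR` and the helper name `fetch_forge` are assumptions made for illustration, not part of Forge itself.

```shell
#!/usr/bin/env bash
# Repository URL as listed in the appendix of this article.
FORGE_REPO="https://github.com/lllyasviel/stable-diffusion-webui-forge"
# ASSUMPTION: install location; change it to suit your machine.
FORGE_DIR="${FORGE_DIR:-$HOME/stable-diffusion-webui-forge}"

# Hypothetical helper: clone on first use, fast-forward on later runs.
fetch_forge() {
  if [ -d "$FORGE_DIR/.git" ]; then
    git -C "$FORGE_DIR" pull --ff-only
  else
    git clone "$FORGE_REPO" "$FORGE_DIR"
  fi
}
```

Call `fetch_forge` once to download the code, and again later whenever you want to pull the latest changes.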

To avoid wasting disk space, Forge can share models with an existing sd-web-ui installation. If you are a Windows user, edit webui-user.bat so that its launch arguments point at the model directories of that installation.

Sharing is set up successfully when the launch arguments printed at startup include those shared model paths.

No official Linux guide is provided, so what follows is based on my own testing and my experience with sd-webui. To leave the original code untouched and avoid merge conflicts on later git updates, I added a separate local script, webui-run.sh, instead of editing the bundled scripts.
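A wrapper of this kind might look like the sketch below. It is not necessarily the author's exact script: `A1111_HOME` and its default path are assumptions, and the directory flags are the model-directory options of the original WebUI launcher, applied here to the standard sd-webui folder layout.

```shell
#!/usr/bin/env bash
# webui-run.sh: local wrapper so the upstream scripts stay unmodified.
# ASSUMPTION: A1111_HOME points at an existing sd-webui checkout.
A1111_HOME="${A1111_HOME:-$HOME/stable-diffusion-webui}"

# Reuse the existing model folders instead of copying them into Forge.
# Any arguments passed to this script are appended after the shared paths.
set -- \
  --ckpt-dir "$A1111_HOME/models/Stable-diffusion" \
  --hypernetwork-dir "$A1111_HOME/models/hypernetworks" \
  --embeddings-dir "$A1111_HOME/embeddings" \
  --lora-dir "$A1111_HOME/models/Lora" \
  "$@"

if [ -x ./webui.sh ]; then
  exec ./webui.sh "$@"
else
  echo "webui.sh not found; run this script from the Forge checkout" >&2
fi
```

Save it in the Forge directory and make it executable once with `chmod +x webui-run.sh`.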

Then run ./webui-run.sh to start the forge software.

Quick tip: if, like me, you prefer a clean environment that you control yourself, the approach above is a good choice. You can also define a shell alias to start Forge with a single command. Add an alias named forge to the local file ~/.bash_aliases, run source ~/.bash_aliases the first time (or open a new terminal), and from then on start Forge by typing forge.
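As a sketch, the alias entry could look like this. The alias name `forge` comes from the text above; the checkout path is an assumption and should match wherever Forge and webui-run.sh actually live.

```shell
# ~/.bash_aliases
# ASSUMPTION: Forge was cloned to ~/stable-diffusion-webui-forge and
# webui-run.sh sits in that directory; adjust the path to your setup.
alias forge='cd "$HOME/stable-diffusion-webui-forge" && ./webui-run.sh'
```

The single quotes keep `$HOME` from being expanded until the alias is actually used.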

Installation package installation

If you are not familiar with Git, you can download the official one-click installation package. After downloading it, extract the archive locally, run update.bat to update, and then run ./webui.bat to start Forge. The author stresses that running update.bat is very important: the Forge code is still under rapid iterative development, and if you do not update the code and environment in time you may end up on a version with unresolved bugs. Sharing drawing models with sd-web-ui works exactly as in the Git setup.

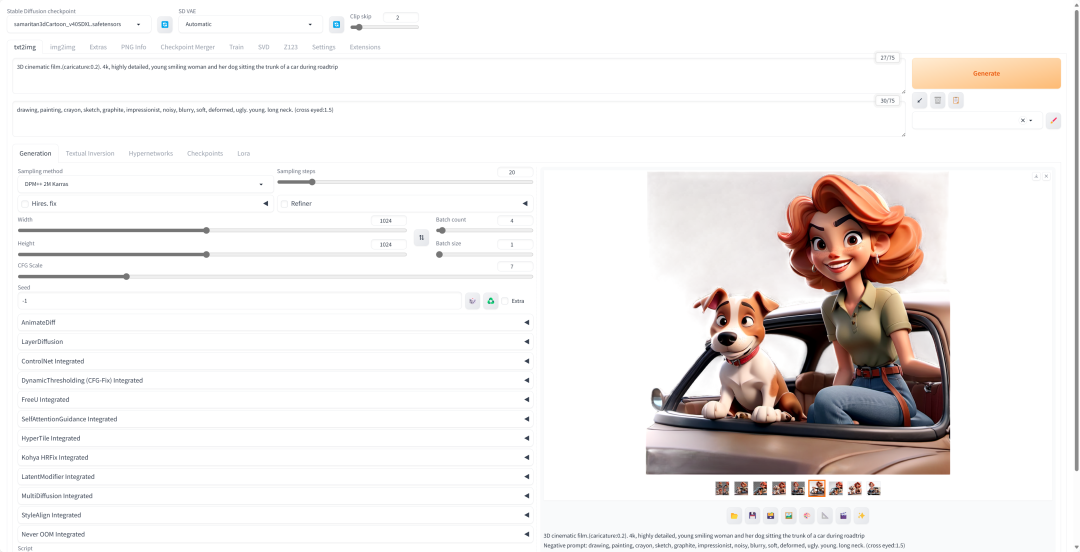

Forge Launch

From the startup interface, we can see that Forge keeps the sd-web-ui user interface unchanged, which greatly lowers the barrier for new users. The interface also shows the newly added SVD and Z123 tabs, features made possible by the Unet Patcher mentioned above.

In addition, the author notes that Forge keeps the front-end interface consistent and promises never to add unnecessary changes. On the back end, all WebUI code related to resource management was removed and the entire back-end infrastructure was rebuilt, which amounts to a major refactoring.

Forge plugins are not shared with the WebUI. As in the WebUI, install them through the extension installer, or copy them over manually from the WebUI installation.

sd-webui-forge experience

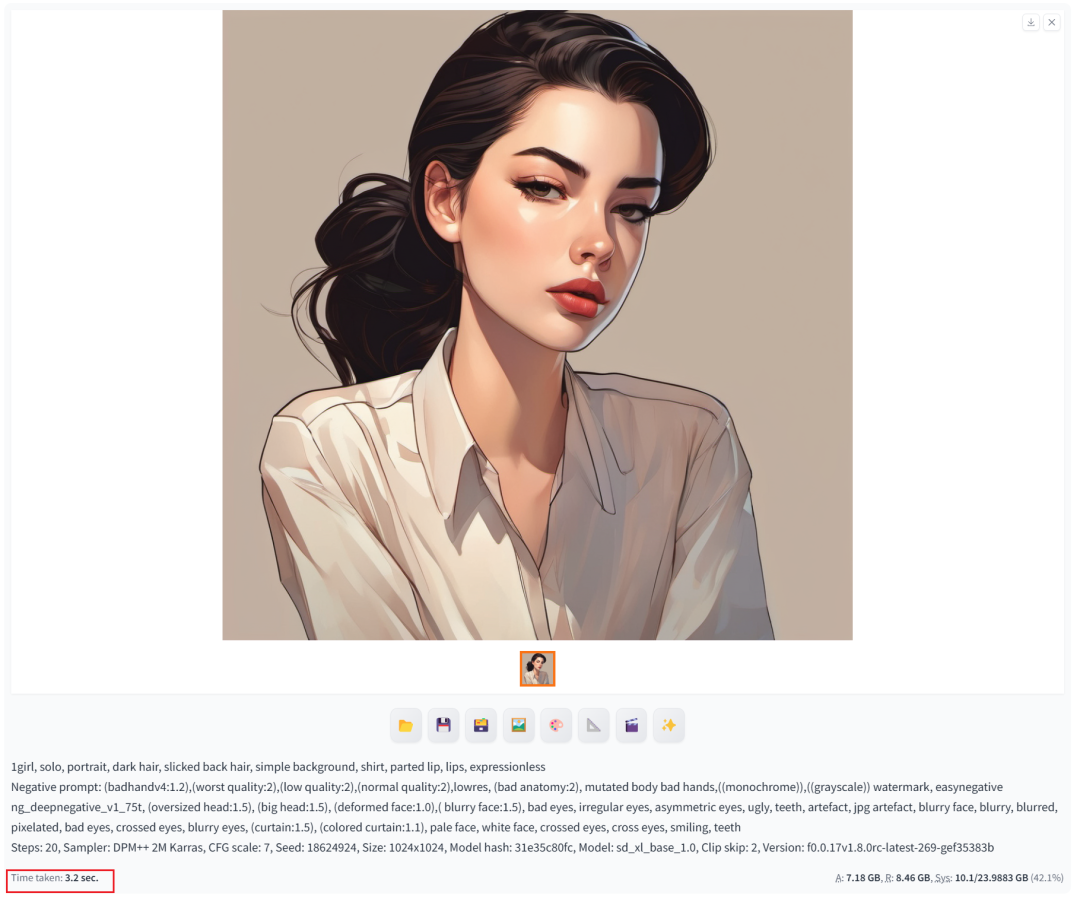

On a local Linux machine with an RTX 4090 (24GB of video memory), drawing with the sd_xl_base_1.0 model shows the corresponding performance improvement: generation takes 3.2 seconds, with an average of 7.18GB of video memory used, a peak of 8.46GB, and a system usage rate of 42.1%. I expect machines with less video memory to see more significant inference gains.

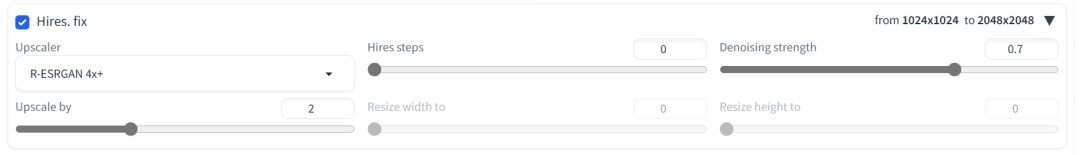

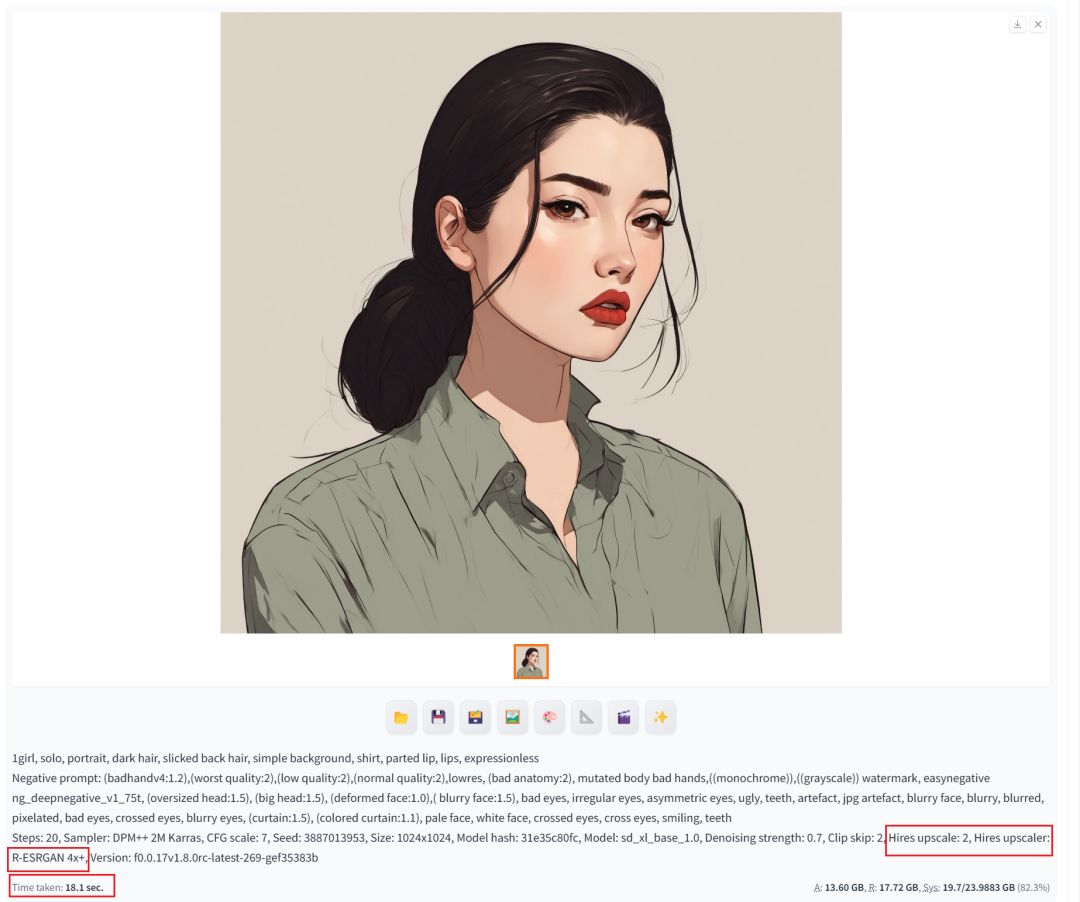

Next, I used the R-ESRGAN 4x+ upscaling algorithm to enlarge the image 2x. This took 18.1 seconds, with an average of 13.6GB of video memory used, a peak of 17.72GB, and a system usage of 82.3%.

Forge mainly optimizes video memory usage. The gain is more noticeable on 30-series graphics cards and fairly small on 40-series cards. Even so, the new plugins are welcome, such as sd-forge-layerdiffusio, and the Playground v2.5 support in the official feature list is something to look forward to.

Drawing experience


Appendix

- stable-diffusion-webui-forge: https://github.com/lllyasviel/stable-diffusion-webui-forge

- Installation package download: https://github.com/lllyasviel/stable-diffusion-webui-forge/releases/download/latest/webui_forge_cu121_torch21.7z