globalNetwork Security TeamNew AI worms have recently been developed that are capable of generatingArtificial Intelligence Servicesspread independently between them,Stealing data and sending spam via email.

With the rapid development of generative AI systems such as OpenAI ChatGPT and Google Gemini, AI is starting to be applied to solve a specific problem or task, such as creating a calendar item, ordering an item, etc.

Cybersecurity technology researchers have shown that there is a vulnerability in generative AI and named it "Morris II".

To give a brief background on the name, Morris is believed to be the first computer worm discovered by humans, having been discovered in 1988 and infecting over 6,200 computers, or 10% of all computers connected to the Internet at the time.

"Morris II" can steal data from emails and send spam based on generative AI that bypasses the protection of ChatGPT and Gemini.

The study authors say the new attack model was tested in a sandbox environment but has not yet been detected in practice, though individual developers, startups and tech companies should consider the threat.

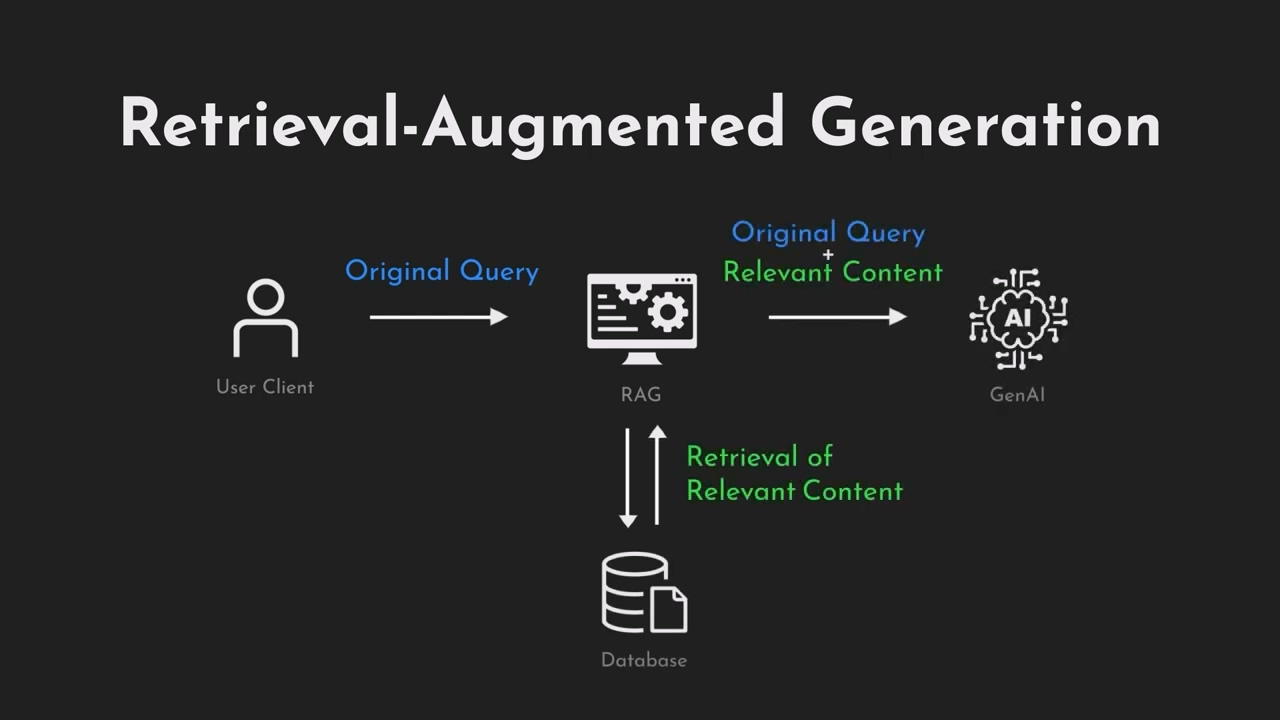

Most generative AI systems work by receiving textual instructions: asking to answer questions or create images.

These commands can be used against a system to make it ignore security measures and generate inappropriate content; they can also be used to send implied commands to a system, for example, by providing it with the address of a malicious web page that hides the text of such commands.

The principle of operation of a worm attacking a generative AI is based on an "adversarial self-replicating hint" mechanism. This command causes the generative AI model to issue another command in response, much like traditional attack patterns such as SQL injection and buffer overflows.

To demonstrate how the worm works, the researchers created an email service that can receive and send messages using generative AI by connecting ChatGPT, Gemini and the open-source LlaVA model.

They then used both self-replicating text commands and similar commands embedded in image files to successfully exploit the AI vulnerability and launch an attack.

The authors of the study noted that these attack methods were made possible by a faulty architectural design of the AI ecosystem. They shared their findings with Google and OpenAI - OpenAI confirmed the existence of the threat but added that the company is working to improve the stability of its systems, while Google declined to comment.