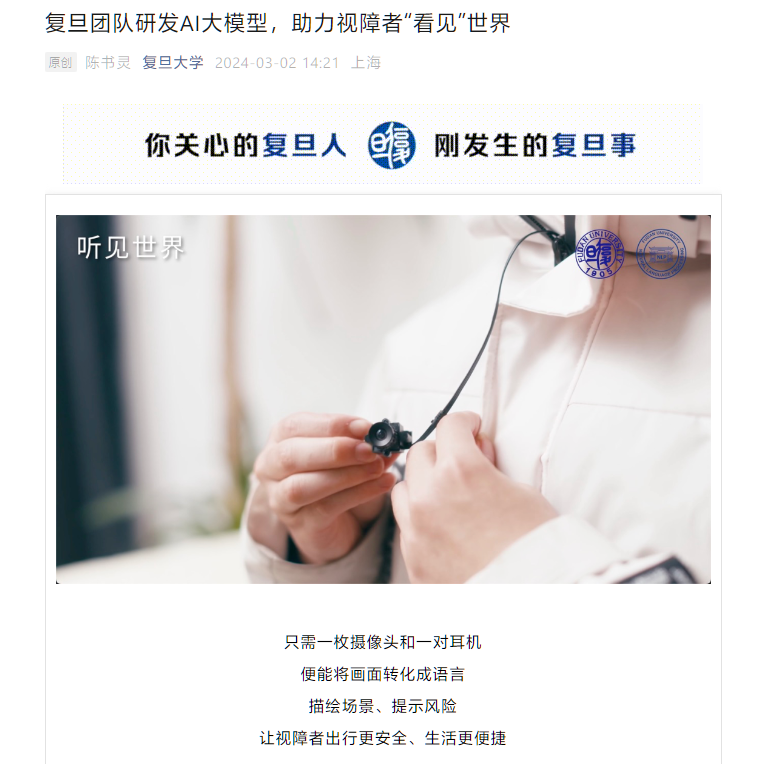

According to Fudan University's official public account, teachers and students of the Fudan University Natural Language Processing Laboratory (FudanNLP) have launched "Hear the World," an app tailored for visually impaired people and built on the multimodal large model "Fudan MouSi."

The system needs only a camera and a pair of headphones to convert images into language. It supports functions such as describing scenes and warning of risks. The "Hear the World" app offers three modes designed around the daily needs of visually impaired users:

- Street Walking: In this mode, MouSi scans road conditions in detail and points out potential risks.

- Free Q&A: This mode helps visually impaired users explore museums, art galleries, and parks. It captures the details of the surrounding scene and uses sound to convey a rich picture of everyday life. According to the official demonstration images, the app can also describe what is shown on a TV screen.

- Object Search: This mode helps visually impaired users find everyday objects. The developers call it a "reliable butler."

The "Hear the World" app is reportedly expected to complete its first round of testing in March this year. Pilot programs will launch at the same time in first- and second-tier cities and regions in China, and wider rollout will depend on how computing resources are deployed.

FudanNLP previously developed the MOSS large model and officially released it as open source in April 2023, making it the first plug-in-augmented open-source conversational language model in China. Half a year later, the lab launched the multimodal model MouSi.